In this two-part series, I want to outline some basic principles and practices for securing software supply chains.

It’s worth noting up front that, as with most software engineering challenges, there are no silver bullets here. No single activity or approach will render your software supply chain secure. But there are some good places to start, and I expect that with growing interest in supply chain security (exemplified by the recent White House Executive Order), we’ll see industry-accepted best practices evolve over the coming years.

So what might we expect to see in those best practices, and what can we get started on before a wider community consensus is formed?

First, cover your assets.

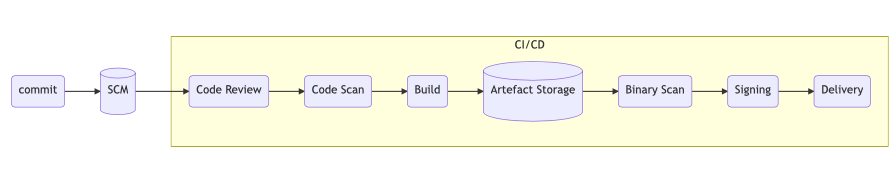

Let’s start with a classic visualization of the software development lifecycle.

This is a generalization, of course, but it makes typical software development workflows easier to reason about. One thing to notice right away is what’s missing: the zeroth step, in which an engineer writes code and commits it. The software supply chain starts with developers and development systems, and that starting point is itself open to compromise. So how do we protect our own contributions?

Two main practices go a long way here: protecting our identities and protecting our development environments. We can achieve both by practicing good password and software hygiene.

For good password hygiene, we should avoid password reuse, so that a single compromised password does not have a knock-on effect on our other accounts. Beyond avoiding reuse, enabling multi-factor authentication (MFA) wherever possible is a fantastic way to protect your identity, because MFA can stop a compromised password from being used to carry out malicious activity through the associated accounts.
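MFA comes in several forms, such as hardware security keys, push approvals, and one-time codes. To make one of them concrete, here is a minimal Python sketch of the time-based one-time password (TOTP) scheme from RFC 6238 that many authenticator apps implement. The function names are our own, and it is meant only to show the mechanism; in practice you would rely on your identity provider or a vetted library rather than your own implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    message = struct.pack(">Q", counter)  # 8-byte big-endian counter
    digest = hmac.new(secret, message, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits choose a 4-byte window
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, timestamp: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time step."""
    now = time.time() if timestamp is None else timestamp
    return hotp(secret, int(now) // step, digits)


def verify_totp(secret: bytes, submitted: str, timestamp: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or +/- `window` steps to tolerate clock drift."""
    now = time.time() if timestamp is None else timestamp
    current = int(now) // step
    for counter in range(max(0, current - window), current + window + 1):
        expected = hotp(secret, counter, digits)
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False
```

With the RFC 6238 test secret, `totp(b"12345678901234567890", timestamp=59, digits=8)` returns "94287082". The point for our purposes is that the code depends on a secret held by your device, so a phished or reused password alone is no longer enough to act as you.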

When it comes to software hygiene, my number one recommendation is to avoid the curl-pipe-bash paradigm. It has become common for projects to offer a quick-start installation in which the instructions tell you to fetch a script from a URL and pipe it straight into an interpreter. How many of us check the contents of that script? How confident are we in our assessment of what it does? How often do we verify that the script we retrieve is the one the author intended to publish, and the one we reviewed? It is easy to make mistakes here, as we saw recently with the Codecov compromise. Even though Codecov publishes checksums of its script, many users, including Codecov’s own container images, were not verifying the fetched data against the published checksum.
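A safer pattern is to download the script to a file, verify it against a checksum you obtained through a separate channel (for example, one you recorded in your own repository when you reviewed the script), and only then run it. The Python sketch below covers the verification step; the function names are illustrative assumptions, not part of any official tooling.

```python
import hashlib
import urllib.request


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of `data`."""
    return hashlib.sha256(data).hexdigest()


def checksum_matches(data: bytes, expected_hex: str) -> bool:
    """Compare the SHA-256 of `data` with a checksum obtained out of band."""
    return sha256_hex(data) == expected_hex.strip().lower()


def fetch_verified(url: str, expected_hex: str) -> bytes:
    """Download `url` and return its bytes only if they match `expected_hex`."""
    with urllib.request.urlopen(url) as response:
        data = response.read()
    if not checksum_matches(data, expected_hex):
        raise ValueError(f"SHA-256 mismatch for {url}; refusing to use it")
    return data
```

A caller would write the result of `fetch_verified(url, pinned_sha256)` to a file, review it, and execute it explicitly. The check is only as trustworthy as the source of the expected checksum: if it is fetched from the same place as the script, an attacker who can change one can usually change the other.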

To be honest, I could stop right there. Practicing good password and software hygiene alone goes a long way toward protecting against many common initial access vectors, including compromised developer credentials, social engineering, and compromises based on known vulnerabilities.

Now, apply it to the software pipeline.

Not surprisingly, these same recommendations apply to the whole pipeline. We should protect our secrets by following the principle of least privilege so that, at any time, only the entities that truly need a secret can access it.

It’s also helpful to use short-lived secrets in the pipeline. This has two primary benefits:

- An undetected compromise will only have a short, fixed timeframe in which it can be used maliciously.

- Regularly creating new secrets makes it much faster to replace any compromised credentials in the future, because routine rotation forces you to establish a well-tested process for replacing them.
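To illustrate the first benefit, here is a minimal Python sketch of a short-lived credential: an HMAC-signed token that carries its own expiry time. The token format, `SIGNING_KEY`, and the function names are hypothetical; real pipelines would typically use the temporary credentials issued by their CI system or cloud provider rather than a homemade format.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional

# Hypothetical signing key; in practice it would live in a secrets manager
# and would itself be rotated.
SIGNING_KEY = secrets.token_bytes(32)


def _sign(payload: str) -> str:
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(subject: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Issue a token for `subject` that expires `ttl_seconds` after `now`."""
    issued_at = int(time.time() if now is None else now)
    payload = f"{subject}|{issued_at + ttl_seconds}"
    return f"{payload}|{_sign(payload)}"


def validate_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the token's subject if its signature is valid and it has not expired."""
    try:
        subject, expires_at, signature = token.rsplit("|", 2)
    except ValueError:
        return None  # malformed token
    expected = _sign(f"{subject}|{expires_at}")
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None  # forged or tampered token
    current = time.time() if now is None else now
    if current >= int(expires_at):
        return None  # expired: a stolen token is useless from here on
    return subject
```

For example, a token issued with `issue_token("ci-deploy", ttl_seconds=900, now=1000)` validates at time 1500 but is rejected from time 1900 onward, which bounds how long an undetected leak can be exploited.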

Three further practices help maintain software pipeline hygiene:

- Minimizing the size and complexity of software images and installations in the infrastructure, to reduce the attack surface available to attackers.

- Keeping infrastructure software up to date, to prevent attackers from exploiting known vulnerabilities (as happened in the Equifax breach).

- Removing unmaintained software. This includes not only the software we ingest but also custom tooling we developed ourselves and no longer maintain.
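As a small illustration of keeping software up to date, the sketch below compares installed package versions against a minimum-version policy, such as one you might derive from security advisories. The package names, the policy, and the purely numeric dotted version format are all assumptions; real tooling should use a proper version parser and an advisory database.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse a purely numeric dotted version such as "2.31.0" (pre-releases unsupported)."""
    parts = [int(piece) for piece in version.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()  # treat "2.31" and "2.31.0" as the same version
    return tuple(parts)


def find_outdated(installed: Dict[str, str], minimum_safe: Dict[str, str]) -> List[str]:
    """List installed packages that are older than their minimum safe version."""
    outdated = []
    for name, minimum in sorted(minimum_safe.items()):
        current = installed.get(name)
        if current is not None and parse_version(current) < parse_version(minimum):
            outdated.append(f"{name} {current} < {minimum}")
    return outdated
```

For instance, `find_outdated({"example-lib": "1.4.2"}, {"example-lib": "1.5.0"})` returns `["example-lib 1.4.2 < 1.5.0"]`. Running a check like this on a schedule turns "keep things patched" into something a pipeline can enforce.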

The importance of deterministic processes

The last thing I want to cover in this first post is the value of following deterministic build processes. After all, it’s hard to secure something we can’t reason about, and it’s difficult to reason about something that does not behave in a predictable way. But more fundamentally, only a deterministic build system can provide the stable foundation on which to build a secure software supply chain.

Non-deterministic behavior can be as innocuous-seeming as files being ordered differently on each invocation of a build, or as floating dependencies, where each build can potentially ingest a different set of dependency versions.
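Floating dependencies are often easy to spot mechanically. The Python sketch below flags lines in a pip-style requirements file that are not pinned to an exact version with "==". It is a simplification under stated assumptions: it skips options such as -r or --hash, ignores environment markers, and a real project would more likely rely on a lock file generated by its package manager.

```python
import re
from typing import List

# NAME, optional [extras], then "==" and a concrete version (no wildcards).
EXACT_PIN = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*(\[[A-Za-z0-9,._-]*\])?==[A-Za-z0-9._+!-]+")


def floating_requirements(lines: List[str]) -> List[str]:
    """Return requirement lines that could resolve to different versions over time."""
    floating = []
    for raw in lines:
        # Drop comments and trailing line-continuation backslashes.
        line = re.sub(r"(^|\s)#.*$", "", raw).strip().rstrip("\\").strip()
        if not line or line.startswith("-"):
            continue  # blank lines and pip options such as -r or --hash
        requirement = line.split(";", 1)[0].replace(" ", "")  # ignore environment markers
        if not EXACT_PIN.fullmatch(requirement):
            floating.append(line)
    return floating
```

Given lines such as "requests==2.31.0", "flask>=2.0", and "urllib3==2.*", only the last two are reported, since either could pull in a different release on the next build.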

Arguably, the only way to really ensure that you have deterministic processes in place is by automating as much of the release pipeline as possible. You’ll hear people suggest that we need to automate everything except for code review and final release approval, but in reality we can do a lot of good just by moving in that direction whenever we can.

Defending builds against attack

Attacks on the toolchain were among the earliest supply chain attacks to be imagined, long before we adopted the term. Ken Thompson introduced the idea of a Trusting Trust attack in his 1984 paper, Reflections on Trusting Trust. In this attack, a compiler is modified to inject malicious behavior into a program when compiling it, and to reinsert that modification whenever it compiles a new copy of the compiler, so the backdoor survives even after the compiler’s source code is clean. The attack on SolarWinds bears some resemblance to this: it subverted the build process to introduce difficult-to-detect behavior at build time. Several other attacks against build tooling have been observed, such as the Octopus Scanner, which corrupted NetBeans caches to inject malicious behavior into Java jar files.

Two open source projects are trying to address these kinds of attacks against build processes:

- The Reproducible Builds project develops tools, documentation, standards and patches for upstream open source projects that enable the production of bit-for-bit identical builds given the same inputs. This is no small feat, as many things influence the output of a build. The project’s major initial innovation was recognizing that the time at which a build runs is embedded into multiple artifacts produced during that build. It defined a standard way of fixing the time for a build, called SOURCE_DATE_EPOCH, which more and more projects are adopting and which removes a major source of non-deterministic output.
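To show what honoring SOURCE_DATE_EPOCH can look like in practice, here is a minimal Python sketch of a packaging step that writes a tar archive using that timestamp instead of the current time, and that also sorts its entries so file ordering cannot vary between runs. The function names are hypothetical, and the archive is deliberately left uncompressed because gzip headers carry a timestamp of their own.

```python
import io
import os
import tarfile
from typing import Dict


def source_date_epoch(default: int = 0) -> int:
    """Read SOURCE_DATE_EPOCH (seconds since the Unix epoch), falling back to `default`."""
    value = os.environ.get("SOURCE_DATE_EPOCH")
    return int(value) if value else default


def deterministic_tar(files: Dict[str, bytes], mtime: int) -> bytes:
    """Build an uncompressed tar archive whose bytes depend only on `files` and `mtime`."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w", format=tarfile.PAX_FORMAT) as tar:
        for name in sorted(files):  # fixed member order, whatever the input order
            data = files[name]
            info = tarfile.TarInfo(name)
            info.size = len(data)
            info.mtime = mtime        # fixed timestamp instead of "now"
            info.mode = 0o644
            info.uid = info.gid = 0   # don't record the builder's account
            info.uname = info.gname = ""
            tar.addfile(info, io.BytesIO(data))
    return buffer.getvalue()
```

A build would call `deterministic_tar(files, source_date_epoch())`; two runs over the same files and the same SOURCE_DATE_EPOCH then produce byte-identical archives, regardless of when or where they ran.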

- The Bootstrappable Builds project takes things even further. Its goal is to produce small, auditable compilers that can be used to bootstrap the build of more complex systems, such as an entire Linux-based OS. These small compilers should be concise enough to review and audit manually, while also including enough features to bootstrap a more sophisticated compiler with which to build the rest of the toolchain. The auditable toolchain components produced by the Bootstrappable Builds project are used, for example, by the GNU Guix Linux distribution. Over time, the Guix project has reduced its binary seed (the unauditable set of toolchain binaries required to start building the distribution from source) from ~250MB to ~60MB, and it is not done yet.

Understandably, few people want to take the time to replace their entire build machinery, although if you are inspired to start doing that, the ability to produce binary reproducible builds is one of several good reasons why more projects are adopting the Bazel build system.

But improving build reproducibility in whatever ways we can brings consistency and repeatability as well as better security. So what incremental steps can we take toward a more stable foundation without bootstrapping our builds or expending the massive effort required to achieve fully reproducible builds?

Build systems can have a variety of properties. They can be:

- Deterministic – given the same inputs, equivalent actions occur.

- Verifiably/binary reproducible – given the same inputs, they produce bit-for-bit identical output.

- Bootstrappable – the entire toolchain is bootstrapped from a small, auditable, trusted source.

- Hermetic – the build is not affected by external factors (e.g. libraries and software on the host system, or access to the internet).
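Of these properties, binary reproducibility is the easiest to check from the outside: build the same inputs twice, ideally on different machines, and compare the outputs. A minimal Python sketch of that comparison follows. It records a SHA-256 manifest for each build output directory and reports any path whose contents differ or that exists in only one build. The function names and directory layout are illustrative assumptions; a tool such as the Reproducible Builds project’s diffoscope can then help explain why a given file differs.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def file_sha256(path: Path, chunk_size: int = 1 << 16) -> str:
    """SHA-256 of a file, read in chunks so large artifacts don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(output_dir: Path) -> Dict[str, str]:
    """Map each file's path (relative to output_dir) to its SHA-256 digest."""
    return {
        path.relative_to(output_dir).as_posix(): file_sha256(path)
        for path in sorted(output_dir.rglob("*"))
        if path.is_file()
    }


def diff_manifests(first: Dict[str, str], second: Dict[str, str]) -> List[str]:
    """Paths whose contents differ, or that exist in only one of the two builds."""
    return sorted(
        name for name in first.keys() | second.keys()
        if first.get(name) != second.get(name)
    )
```

An empty result from `diff_manifests(build_manifest(Path("out-a")), build_manifest(Path("out-b")))` means the two builds are bit-for-bit identical. Because a manifest is just a mapping, the same comparison also works against a manifest published alongside a release.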

Of these properties, I believe hermetic builds are an excellent end goal, and we can see significant benefit long before achieving full hermeticity, simply by removing or mitigating the external factors that affect the build.

Just running builds in a container, for example, may not provide a fully hermetic environment. But with appropriate container images that are themselves built deterministically from pinned versions, you can create a more repeatable environment in which to perform builds that are either insensitive to the host system or isolated from it.
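One concrete way to pin the build container is to reference base images by content digest (image@sha256:...) rather than by a mutable tag such as latest. The Python sketch below is a simplified, hypothetical linter that lists FROM lines whose image is not pinned by digest. It ignores build arguments and line continuations, so treat it as a starting point under those assumptions rather than a complete Dockerfile parser.

```python
import re
from typing import List

FROM_LINE = re.compile(
    r"^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)(?:\s+AS\s+(\S+))?\s*$", re.IGNORECASE
)
DIGEST_PINNED = re.compile(r"@sha256:[0-9a-f]{64}$")


def unpinned_base_images(dockerfile_text: str) -> List[str]:
    """Return base images referenced by tag rather than by immutable digest."""
    stages = set()  # names of earlier build stages ("FROM ... AS name")
    unpinned = []
    for line in dockerfile_text.splitlines():
        match = FROM_LINE.match(line)
        if not match:
            continue
        image, stage_name = match.group(1), match.group(2)
        is_stage_ref = image.lower() in stages  # e.g. "FROM build AS test"
        if not is_stage_ref and image != "scratch" and not DIGEST_PINNED.search(image):
            unpinned.append(image)
        if stage_name:
            stages.add(stage_name.lower())
    return unpinned
```

Run in CI, a check like this fails the build whenever someone introduces a base image that could silently change underneath you, which is one of the easier external factors to remove.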

One of the most frequently recommended practices for securing software supply chains is something that is much simpler and easier to do when you are using deterministic processes: understanding and maintaining the dependencies we ingest into our releases. That, along with a look at best practices for signing and verifying artifacts, will be my subject in part two.

For now, though, if you do nothing else, please enable multi-factor authentication in as many places as possible!

Stay tuned to the Open Source Blog and follow us on Twitter for more deep dives into the world of open source contributing.