So by now most of you are aware that Virtual SAN 5.5 was released last week, and it came in with a bang. During the launch event, we announced some impressive performance numbers: 2 million IOPS achieved in a 32-node Virtual SAN cluster. One of the most frequent questions since the launch has been what configuration we used to achieve this monumental task. Well, wait no longer: this post reveals the details in all their magnificent glory!

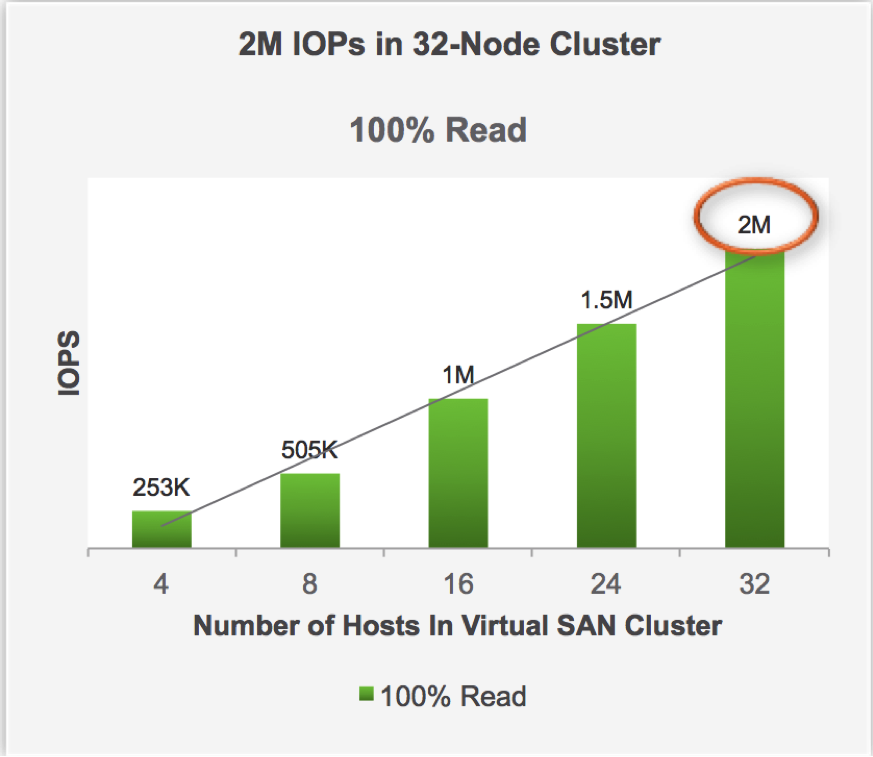

So for those who missed it, during the launch event we released internal benchmark results that show Virtual SAN linearly scaling to 2 million IOPS in a 32-node cluster.

Below, we detail the hardware configuration, software configuration, and settings used for two benchmark tests: one with a 100% read workload, and the other with a 70% read, 30% write workload. Let's start with the hardware and software configuration common to both tests.

Following Virtual SAN recommended practice, we built the 32-node cluster from uniform nodes. Each host was a Dell PowerEdge R720 with the following configuration:

Server Hardware Configuration:

- Processor: Dual-socket Intel Xeon CPU E5-2650 v2 @ 2.6GHz (Ivy Bridge). 8 cores per socket with Hyper-Threading enabled.

- Memory: 128 GB DDR3. 8 x 16GB DIMMs @ 1833MHz

- Network: Intel 82599EB 10GbE

- Storage Controller: LSI 9207-8i controller with phase 18 firmware.

- SSD: 1x 400GB Intel S3700 (INTEL SSDSC2BA40), firmware revision DL04.

- Magnetic HDDs: 4 x 1.1TB 10K RPM Hitachi SAS drives (HUC101212CSS600), firmware revision U5E0; 3 x 1.1TB 10K RPM Seagate SAS drives (ST1200MM0007), firmware revision IS04
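To put the per-host drive list in cluster terms, here is a small sketch that multiplies it out across 32 hosts. It uses the capacities exactly as quoted above (1.1TB per magnetic disk, 400GB SSD) and reports raw capacity before any storage policy is applied. Keep in mind that in Virtual SAN 5.5 the SSD acts as a read cache and write buffer, so it does not add to datastore capacity.

```python
# Per-host drive inventory as listed in the post (capacities in GB, as quoted).
HOSTS = 32
SSD_GB = 400           # 1 x 400GB Intel S3700 per host
HDD_GB = 1100          # each magnetic disk quoted as 1.1TB
HDD_COUNT = 4 + 3      # 4 Hitachi + 3 Seagate drives per host


def raw_capacity_gb(hosts: int = HOSTS) -> dict:
    """Return raw (pre-protection) flash and magnetic capacity per host and per cluster."""
    per_host_hdd = HDD_COUNT * HDD_GB
    return {
        "per_host_ssd_gb": SSD_GB,
        "per_host_hdd_gb": per_host_hdd,
        "cluster_ssd_gb": hosts * SSD_GB,        # cache tier only
        "cluster_hdd_gb": hosts * per_host_hdd,  # raw datastore capacity
    }


if __name__ == "__main__":
    for key, value in raw_capacity_gb().items():
        print(f"{key}: {value}")
```

For the 32-node cluster this works out to 7,700GB of magnetic disk per host, 246,400GB of raw magnetic capacity in total, and 12,800GB of flash cache.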

Network Infrastructure:

- Arista 7050 switch, 10GbE, standard MTU (1500 bytes)

Software Configuration:

vSphere 5.5 U1 with Virtual SAN 5.5 was used on the cluster. We made the following changes to the default vSphere configuration:

We configured the following ESXi advanced parameters. For more information on configuring ESXi advanced parameters, see VMware KB 1038578 (http://kb.vmware.com/kb/1038578).

- We increased the heap size for the vSphere network stack to 512MB: “esxcli system settings advanced set -o /Net/TcpipHeapMax -i 512”. You can validate this setting with “esxcli system settings advanced list -o /Net/TcpipHeapMax”.

- We allowed VSAN to form 32-host clusters: “esxcli system settings advanced set -o /CMMDS/goto11 -i 1”. (For background on why this option is called goto11, watch this clip ☺)

- We installed the Phase 18 LSI driver (mpt2sas version 18.00.00.00.1vmw) for the LSI storage controller.

- We set BIOS Power Management (System Profile Settings) to ‘Performance’, i.e., all power-saving features were disabled. (For more background on how power management settings can affect peak performance, see this whitepaper.)
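If you are rolling these settings out to many hosts, it helps to check the result programmatically rather than by eye. Here is a minimal sketch that parses the “Key: Value” lines printed by the `esxcli system settings advanced list` command and compares the “Int Value” field against the expected setting. The sample text is illustrative only: the field names are an assumption about the output layout, real output contains more fields, and how you collect the text from each host (SSH, etc.) is left out.

```python
import re

# Illustrative sample only: field names are assumed; real esxcli output
# contains additional fields (defaults, min/max, description, ...).
SAMPLE_OUTPUT = """\
   Path: /Net/TcpipHeapMax
   Type: integer
   Int Value: 512
"""


def parse_advanced_option(text: str) -> dict:
    """Parse 'Key: Value' lines into a dict, splitting on the first colon."""
    fields = {}
    for line in text.splitlines():
        match = re.match(r"\s*([^:]+):\s*(.*)$", line)
        if match:
            fields[match.group(1).strip()] = match.group(2).strip()
    return fields


def check_int_option(text: str, expected: int) -> bool:
    """Return True if the parsed 'Int Value' field equals the expected setting."""
    value = parse_advanced_option(text).get("Int Value")
    return value is not None and int(value) == expected


if __name__ == "__main__":
    print(check_int_option(SAMPLE_OUTPUT, 512))  # True
```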

Virtual SAN Configuration:

- We used a single disk group per host. Each disk group contained a single SSD and all seven of the magnetic disks.

- A single Virtual SAN vmkernel port was used per host, with a dedicated 10GbE uplink.
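The disk group layout used here is the largest single disk group Virtual SAN 5.5 allows. In 5.5 a disk group holds exactly one SSD and one to seven magnetic disks, and a host can have at most five disk groups. Here is a small validation sketch of those rules; the `DiskGroup` type and `validate_host` function are hypothetical helpers for illustration, not part of any VMware tool.

```python
from dataclasses import dataclass
from typing import List

# Virtual SAN 5.5 disk group rules: exactly one SSD and one to seven
# magnetic disks per disk group, at most five disk groups per host.
MAX_HDD_PER_GROUP = 7
MAX_GROUPS_PER_HOST = 5


@dataclass
class DiskGroup:  # hypothetical helper type
    ssd_count: int
    hdd_count: int


def validate_host(groups: List[DiskGroup]) -> List[str]:
    """Return a list of rule violations for one host (empty list means valid)."""
    errors = []
    if not 1 <= len(groups) <= MAX_GROUPS_PER_HOST:
        errors.append(f"host has {len(groups)} disk groups (allowed 1-{MAX_GROUPS_PER_HOST})")
    for i, group in enumerate(groups):
        if group.ssd_count != 1:
            errors.append(f"disk group {i}: {group.ssd_count} SSDs (exactly 1 required)")
        if not 1 <= group.hdd_count <= MAX_HDD_PER_GROUP:
            errors.append(f"disk group {i}: {group.hdd_count} HDDs (allowed 1-{MAX_HDD_PER_GROUP})")
    return errors


if __name__ == "__main__":
    # The benchmark layout: one disk group with 1 SSD and 7 magnetic disks.
    print(validate_host([DiskGroup(ssd_count=1, hdd_count=7)]))  # []
```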

100% Read Benchmark

The following configuration was used when testing the 100% read IO profile.

VM Configuration:

Each host ran a single 4-vCPU, 32-bit Ubuntu 12.04 VM with 8 virtual disks (vmdk files) on the VSAN datastore. The disks were distributed across two PVSCSI controllers. We used the default pvscsi driver, version 1.0.2.0-k.

We modified the boot time parameters for pvscsi to better support large-scale workloads with high outstanding IO: “vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32”. For more background on configuring this setting, see http://kb.vmware.com/kb/2053145.
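On Ubuntu 12.04, one common way to make kernel boot parameters like these persistent is to add them to `GRUB_CMDLINE_LINUX_DEFAULT` in `/etc/default/grub`, run `sudo update-grub`, and reboot. The sketch below only performs the text edit, assuming that GRUB2 file layout; the function name is hypothetical. It replaces any existing value for the same parameter so that re-running it is safe.

```python
import re
from typing import List

PVSCSI_PARAMS = ["vmw_pvscsi.cmd_per_lun=254", "vmw_pvscsi.ring_pages=32"]


def add_kernel_params(grub_default: str, params: List[str]) -> str:
    """Set params in GRUB_CMDLINE_LINUX_DEFAULT, replacing existing values for the same keys."""
    pattern = re.compile(r'^(GRUB_CMDLINE_LINUX_DEFAULT=")([^"]*)(")', re.MULTILINE)
    keys = {p.split("=", 1)[0] for p in params}

    def _extend(match: "re.Match") -> str:
        # Drop any existing token for a key we are setting, then append ours.
        kept = [t for t in match.group(2).split() if t.split("=", 1)[0] not in keys]
        return f'{match.group(1)}{" ".join(kept + params)}{match.group(3)}'

    updated, count = pattern.subn(_extend, grub_default, count=1)
    if count == 0:
        raise ValueError("GRUB_CMDLINE_LINUX_DEFAULT not found")
    return updated


if __name__ == "__main__":
    before = 'GRUB_DEFAULT=0\nGRUB_CMDLINE_LINUX_DEFAULT="quiet splash"\n'
    print(add_kernel_params(before, PVSCSI_PARAMS))
```

After the edit, the line reads `GRUB_CMDLINE_LINUX_DEFAULT="quiet splash vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32"`.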

For this benchmark test, we applied a Storage Policy Based Management (SPBM) setting of HostFailuresToTolerate=0 to the vmdks, so each vmdk was stored as a single copy with no replica.

Workload Configuration:

In each VM, we ran IOMeter with 8 worker threads. Each thread was configured to work on 8GB of a single vmdk and ran a 100% read, 80% random workload with 4096-byte IOs aligned on 4096-byte boundaries and 16 OIO per worker. In effect, each VM on each host issued:

- 4096 byte IO requests across a 64GB working set

- 100% read, 80% random

- Aggregate of 128 OIO/host
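The per-VM figures follow directly from the per-worker IOMeter settings. The arithmetic is sketched below; `per_vm_totals` is just an illustrative helper, and the cluster totals assume one such VM on each of the 32 hosts, as described above.

```python
HOSTS = 32


def per_vm_totals(workers: int, gb_per_worker: int, oio_per_worker: int) -> tuple:
    """Return (working set in GB, outstanding IOs) for one IOMeter VM."""
    return workers * gb_per_worker, workers * oio_per_worker


if __name__ == "__main__":
    working_set_gb, oio = per_vm_totals(workers=8, gb_per_worker=8, oio_per_worker=16)
    print(working_set_gb, oio)                  # 64 128
    print(HOSTS * working_set_gb, HOSTS * oio)  # 2048 4096 (cluster-wide)
```

The same helper applied to the 70/30 test settings (8 workers, 4GB each, 8 OIO each) gives 32GB and 64 OIO per host.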

Results:

We ran the experiment described above for one hour and measured aggregate guest IOPS at 60-second intervals. The measured median IOPS was a whopping 2,024,000!
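To relate the headline number back to a single node, here is a quick sketch that divides the median cluster IOPS across the 32 hosts and converts it to bandwidth at the 4096-byte IO size. It is plain arithmetic on the reported figure, not an additional measurement.

```python
HOSTS = 32
IO_SIZE_BYTES = 4096
MEDIAN_IOPS = 2_024_000  # reported median for the 100% read test


def summarize(iops: int, hosts: int, io_size: int) -> tuple:
    """Return (IOPS per host, aggregate MiB/s) for a given IOPS figure and IO size."""
    per_host = iops / hosts
    mib_per_s = iops * io_size / 2**20
    return per_host, mib_per_s


if __name__ == "__main__":
    print(summarize(MEDIAN_IOPS, HOSTS, IO_SIZE_BYTES))  # (63250.0, 7906.25)
```

That is about 63,250 read IOPS per host, or roughly 7.9 GiB/s of 4KB reads across the cluster.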

70% Read 30% Write Benchmark

The following configuration was used when testing the 70% read, 30% write IO profile.

Virtual SAN Configuration:

- A single disk group per host. Each disk group contained a single SSD and all seven of the magnetic disks.

- For this benchmark test, we used the default Virtual SAN storage policy based management settings of HostFailuresToTolerate=1 and stripeWidth=1 for all vmdks.
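The difference from the read test matters: with HostFailuresToTolerate=n, Virtual SAN keeps n+1 replicas of each object and needs 2n+1 hosts to satisfy the policy, so at FTT=1 every write is committed to two replicas on different hosts. A small sketch of that relationship follows; the function is an illustrative helper, and the raw-capacity figure ignores witness components, which hold metadata only.

```python
def ftt_requirements(ftt: int, object_gb: float) -> dict:
    """Replica count, minimum hosts, and raw footprint (witnesses ignored) for a given FTT."""
    if ftt < 0:
        raise ValueError("HostFailuresToTolerate must be >= 0")
    replicas = ftt + 1
    return {
        "replicas": replicas,         # full copies of the data
        "min_hosts": 2 * ftt + 1,     # replicas plus witnesses on distinct hosts
        "raw_gb": object_gb * replicas,
    }


if __name__ == "__main__":
    print(ftt_requirements(0, 32))  # read test policy: one copy
    print(ftt_requirements(1, 32))  # 70/30 test policy: two copies
```

For example, the 32GB working set each VM touches in this test is backed by 64GB of raw capacity at FTT=1.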

VM Configuration:

Each host ran a single 4-vCPU, 32-bit Ubuntu 12.04 VM with 8 virtual disks (vmdk files) on the VSAN datastore. The disks were distributed across two PVSCSI controllers. We used the default pvscsi driver, version 1.0.2.0-k, and modified the boot time parameters for pvscsi to better support high outstanding IO: “vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32”.

Workload Configuration:

In each VM, we ran IOMeter with 8 worker threads. Each thread was configured to work on 4GB of a single vmdk and ran a 70% read, 80% random workload with 4096-byte IOs aligned on 4096-byte boundaries and 8 OIO per worker. In effect, each VM on each host issued:

- 4096 byte IO requests across a 32GB working set

- 70% read, 80% random

- Aggregate of 64 OIO/host

Results:

We ran the experiment described above for three hours and measured aggregate guest IOPS at 60-second intervals. Median IOPS was 652,900. For the 60-second interval at the median, average guest read latency was 2.94 milliseconds and average guest write latency was 3.06 milliseconds, for an aggregate latency of 2.98 milliseconds per IO. Aggregate network throughput during that interval was 3,178 MB/s (25.4 Gbps).
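The aggregate figures are consistent with each other. The sketch below recomputes the blended latency as a 70/30 weighted average of the read and write latencies, converts the network throughput from decimal megabytes to gigabits, and spreads both across the 32 hosts. The per-host network figure assumes traffic was evenly distributed, which the measurement itself does not show.

```python
HOSTS = 32


def blended_latency_ms(read_ms: float, write_ms: float, read_fraction: float) -> float:
    """Weighted average latency for a given read/write mix."""
    return read_fraction * read_ms + (1 - read_fraction) * write_ms


def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert decimal MB/s to Gbit/s."""
    return mb_per_s * 8 / 1000


if __name__ == "__main__":
    print(round(blended_latency_ms(2.94, 3.06, 0.70), 2))  # 2.98
    print(round(mbytes_to_gbits(3178), 1))                  # 25.4
    print(652_900 / HOSTS)                                  # 20403.125 IOPS per host
    # Assumes even distribution: average Gbit/s per host, against one 10GbE uplink.
    print(round(mbytes_to_gbits(3178) / HOSTS, 2))          # 0.79
```

On average, each host's dedicated 10GbE Virtual SAN uplink carried well under 1 Gbps in this test.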

Conclusion:

Both the 100% read and the 70% read benchmarks showed Virtual SAN scaling linearly, adding performance per host in a predictable manner, with only a single disk group configured per host. While Virtual SAN 5.5 is an initial release, these numbers prove that Virtual SAN can provide the performance to meet almost any workload today.

Acknowledgements:

I would like to thank Lenin Singaravelu and Jinpyo Kim of the VMware Performance Engineering team for their contribution in performing the above benchmark tests. I would also like to thank Mark Achtemichuk of the Cloud Infrastructure Technical Marketing team for his contribution in helping to create this blog post. Go Virtual SAN!