By Jim Armstrong

It is hard for me to believe, but it was just six short months ago that we officially launched our Virtual SAN product. I spent the months leading up to the launch, and the months since, learning about Virtual SAN and the storage market at large, and since our launch I have watched as others shared their opinions of our product. By now, I have seen some common areas where people make key mistakes about how Virtual SAN works. What I’d like to do is discuss some of these common misconceptions and, hopefully, along the way help folks understand what sets Virtual SAN apart from other seemingly similar technologies.

Good Reference Architectures Are Just A Starting Point

The first mistake I have seen people make: drawing too many conclusions from reference architectures. For example, for Virtual SAN and VMware’s Horizon View we have published a reference architecture that walks customers through several calculations and the process we used to arrive at a particular configuration. You can also read our “VMware Virtual SAN Design and Sizing Guide for Horizon View Virtual Desktop Infrastructures” paper here. Both are great reads if you are considering your first Virtual SAN pilot – VDI or otherwise – because they lay out all the details of how we scoped out the amount and type of storage required as well as how the storage is best distributed across hosts for performance and reliability.

But here’s the thing: it is a reference architecture. We picked a VDI workload, assumed every VDI user would be exactly the same, and scoped out the architecture from there. As they say in the car industry, “your mileage may vary.” You should definitely read this paper and refer to it when designing your own architecture, but it would be fruitless to look at one particular reference architecture and make any sweeping statements about what our Virtual SAN product can or cannot do, how efficient it is, or how much Virtual SAN will cost in your very particular set of circumstances.

With VMware Virtual SAN you are not forced into a “one size fits all” scenario; in fact, we give you multiple options. You could:

- Do all the calculations yourself by following the reference architectures and Virtual SAN Sizing Guides to build your own custom Virtual SAN solution from components on our VMware Compatibility Guides. To simplify things we also have the online VMware Virtual SAN Sizing Tool available.

- Or you could select a Virtual SAN Ready Node that is designed for VDI, server workloads, or whatever your use case may be and either buy the Ready Node as-is or tweak that configuration to better suit your needs.

- Or you can forgo all of that and go with the simplest building block of all, the newly announced hyper-converged infrastructure appliance EVO:RAIL.

Better Hardware and Lower Cost: Virtual SAN Can Do That

With respect to the hardware selected in the Horizon View reference architecture, some comments have been made about the decision to use SAS 10K and 15K drives rather than less expensive SATA drives. The simple argument one might be led to make goes like this: SATA drives cost less per GB than SAS, and since Virtual SAN has an SSD tier the relative speed of the hard drives really doesn’t matter, so just pick the bigger, cheaper SATA drives and go! We certainly could have selected SATA drives to come up with the lowest priced hardware option and it would work just fine. We chose SAS drives for a couple of key reasons, though.

In general, SAS drives are often used for critical applications where there is high IO, especially when that IO is random. If you’ve ever set up a VDI environment you know that those descriptors fit VDI exactly. For instance, as I sit here typing in Word the guy in the cube behind me is working in Outlook, the guy across from me is watching a YouTube video, and if I pop my head up I can see at least 4 copies of Excel, 2 copies of PowerPoint, and then I see the vCloud Air architects and I have no idea what kind of coding tools they are running. The point is, in a VDI environment all of our application IO will be different (i.e. not very cacheable). The techniques we use in Virtual SAN allow us to still benefit from the SSD tier because the initial write operations happen there, but eventually that data has to flush to disk. We also have some very cool features in Virtual SAN that serialize the data and remove some of the randomness, but still, as a VDI administrator and end user I want the disks underneath to be built for speed. All else being equal, in a VDI environment I would choose SAS disks and this is what was done in the reference architecture. But this does NOT mean you must use SAS. That little caveat (“all else being equal”) is the kicker. There is a price difference for SAS and if your budget or disk needs only call for SATA drives then by all means, go with SATA!

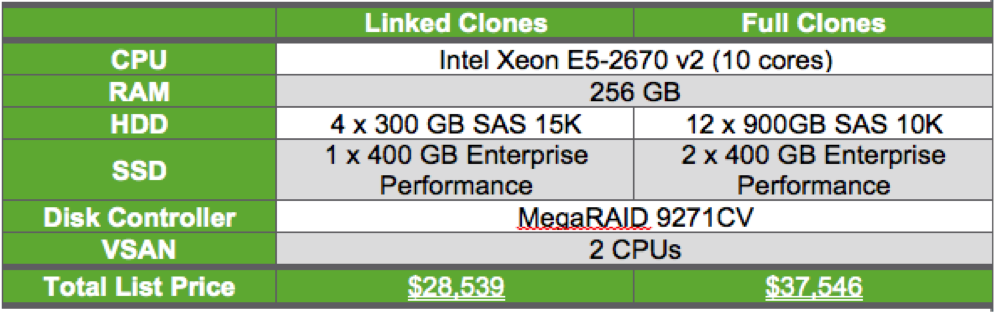

Source: Cisco Build & Price website

Before I move on from the topic of cost and SAS disks, I do want to highlight something. Our reference architecture has SAS drives, dual 10 core CPUs, 256 GB of RAM, and dual 10 Gb NICs plus the cost of Virtual SAN itself, so you might be thinking this is a much more expensive combination than many of the hyperconverged appliances you may have heard about. I have some good news there, too. One of the GREAT things about having an open hardware ecosystem for Virtual SAN is that you can go and find the prices for everything pretty easily. I decided to use Cisco’s UCS platform to build out a system for Virtual SAN that meets the requirements in the reference architecture and the results are in Figure 3. [Note: I chose Cisco only because of my own familiarity with their online Build & Price tool. Dell, Fujitsu, HP, Hitachi, Huawei, IBM, and SuperMicro all have Virtual SAN Ready Nodes that I could have used as a starting point for this exercise.] The figures in the table are all published list prices. While most hyperconverged vendors do not publicly disclose their prices, I can tell you from experience the starting list price for most of the hyperconverged hardware I have seen – even with SATA drives, less RAM, and fewer CPU cores – is much higher than $28,500.

Do Not Get Confused By Array Features: Virtual SAN Is Different

The next thing people seem to get confused about with Virtual SAN is why it does not support some of the vSphere storage features. One example is our VAAI APIs (VAAI is vSphere API for Array Integration). Some vendors running software-based storage solutions on top of vSphere have elected to use VAAI and that is a good thing for them, if they implement VAAI in a meaningful way. Originally, VAAI was designed to allow vSphere hosts to offload storage processing tasks to arrays so that the vSphere hosts can keep their CPU and RAM resources focused on VMs. In a hyperconverged solution where compute and storage are running together, you obviously would not offload the work from the host’s CPUs, but VAAI could still allow a third party virtual storage appliance (VSA) to handle the storage operations, which may be more efficient than vSphere’s native storage capabilities. The compromise is that those 3rd party VSAs are usually VMs with a large number of vCPUs and reserved vRAM, so they consume a significant portion of the host’s physical resources to do their storage work.

With VMware Virtual SAN there is no VSA: storage processing is built into the vSphere kernel. In addition, since we are not using traditional shared, non-exclusive storage, using VAAI would bring no benefit to Virtual SAN.

The same things could be said about features like Storage DRS. Again, if you have separate physical arrays that are not under vSphere’s direct control Storage DRS makes great sense. We can use VASA (vSphere API for Storage Awareness) to communicate bidirectionally with arrays, track I/O utilization, storage allocation, and availability, and allow vSphere to intelligently use Storage vMotion to relocate VMDKs when there is contention. Again, however, with Virtual SAN we have direct and exclusive control of all disks in the hosts and we consume and manage all of that as a single datastore (at least from a VM’s perspective). Therefore Storage DRS is not a feature that provides any benefit with Virtual SAN.

Compression & Deduplication: Understanding the Tradeoffs

There are a couple of familiar storage features that are noticeably not part of our first release of Virtual SAN and some of our competitors like to point them out as weaknesses: compression and deduplication. First let me say that I love compression and deduplication…all things being equal. (There’s that caveat again!) Unfortunately, compression and de-dupe are not magical processes that occur without cost. In fact, they force a rather significant tradeoff decision, especially in a hyperconverged architecture where CPU and RAM have to be shared between the storage system and the VMs. Now again, in a separate physical storage system where the storage media are expensive (think traditional arrays, all flash arrays, etc) compression and deduplication are pretty much table stakes. But then again those systems have their own compute hardware that can do the compression and deduplication work without affecting ESXi compute resources.

For hyperconverged systems, compression and deduplication would be very important if you had a closed architecture where you could not add more disks or higher capacity disks. But to enable compression and deduplication for storage in a hyperconverged system you must take some CPU and RAM resources away from the VMs or have dedicated hardware to do deduplication and compression. So here comes the math problem: how much CPU and RAM overhead are you willing to accept to reduce your disk footprint? As it turns out, this problem isn’t as straightforward as it might seem.

The simplest way to look at it would be to calculate how much a certain percent overhead on the CPU and RAM costs and then figure out what percent of disk space would need to be saved to overcome that cost. But doing so would ignore the fact that vSphere runs as a cluster, and it would not take into account the effect on the entire system. Imagine you have a full cluster of 32 vSphere hosts and they are each using 8 GB of RAM for de-dupe and compression. In effect, you have just removed 256 GB of RAM from the vSphere resource pool – an entire host’s worth of RAM resources (and at this point I am ignoring CPU overhead…a significant factor that should be added). I think maybe you can see the problem with our earlier math: when a product takes this 8 GB of RAM on each host and uses it for deduplication, it impacts the consolidation ratio of all the VMs running in the cluster.
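
If you want to run this cluster-level math against your own environment, here is a minimal Python sketch of it. The function names are made up for illustration and are not part of any VMware tool. The 32 hosts and 8 GB per host come from the example above, and the 256 GB of RAM per host is borrowed from the reference architecture described earlier. CPU overhead is still ignored.

```python
def cluster_ram_overhead_gb(hosts, overhead_gb_per_host):
    """Total RAM removed from the cluster's resource pool, in GB."""
    return hosts * overhead_gb_per_host


def hosts_worth_of_ram(hosts, overhead_gb_per_host, ram_gb_per_host):
    """Express the cluster-wide overhead as a number of hosts' worth of RAM."""
    return cluster_ram_overhead_gb(hosts, overhead_gb_per_host) / ram_gb_per_host


if __name__ == "__main__":
    # Example values from the text: 32 hosts, 8 GB per host for
    # de-dupe and compression, 256 GB of RAM installed per host.
    overhead = cluster_ram_overhead_gb(32, 8)
    equivalent = hosts_worth_of_ram(32, 8, 256)
    print(f"RAM used for de-dupe/compression: {overhead} GB")
    print(f"Hosts' worth of RAM given up: {equivalent:.2f}")
```

Plug in the per-host overhead a vendor quotes you and the RAM in your own hosts to see how much of the cluster you would be giving up before a single VM is powered on.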

For this release of Virtual SAN we faced math like that in our design decisions. In the end, since a Virtual SAN cluster is designed to enable users to easily add a large number of relatively inexpensive disks (up to 140 TB per node or 4.4 PB in a 32 node cluster) we decided the compression and deduplication overhead math could wait. Does this mean we will never do deduplication or compression? No. We know that some workloads can benefit and customers are asking us about it and we continue to explore those features. But, then again, 8 TB disks are on the way so we will have to keep exploring that tradeoff and decide where these features make sense. As you explore the world of software-based and software-defined storage and you talk to vendors that have deduplication and compression, make sure you ask some questions. How much additional overhead do these features require? Can they be used with all workloads or does the vendor only recommend them for a handful of use cases? Can you choose to just upgrade to more or larger hard drives instead of using deduplication and compression?
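
For reference, the raw capacity figure quoted above is simple multiplication, and a short Python sketch makes it easy to redo for a smaller cluster. The function name is hypothetical, and the only inputs are the 140 TB per node and 32 nodes mentioned in the text. The result is raw capacity only; it does not account for replicas or any other overhead. The quoted 4.4 PB is a rounded figure, and whether you divide by 1000 or 1024 changes the second decimal place.

```python
def raw_capacity_tb(nodes, tb_per_node):
    """Raw (unreplicated) disk capacity of the cluster, in TB."""
    return nodes * tb_per_node


if __name__ == "__main__":
    total_tb = raw_capacity_tb(32, 140)
    print(f"Raw capacity: {total_tb} TB")
    # The same total expressed in PB, using 1000 and 1024 TB per PB.
    print(f"At 1000 TB per PB: {total_tb / 1000:.2f} PB")
    print(f"At 1024 TB per PB: {total_tb / 1024:.3f} PB")
```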

VMware Virtual SAN: Radically Simple Hypervisor-Converged Storage

VMware Virtual SAN is unlike any other product on the market today. Virtual SAN is the only hypervisor-converged storage solution on the market, it is 100% policy-driven and fully integrated with your VM workflows, and it works great on EVO:RAIL, Virtual SAN Ready Nodes, and completely customized hardware of your choosing. Because Virtual SAN is so different there are some misleading ideas floating around about what the product can and cannot do. I hope this article and others that follow will set the record straight.