This blog walks through the complete set of steps to increase the size of clustered vmdk(s) that use the multi-writer flag for an Oracle RAC cluster, online, on VMware Virtual Volumes (vVols) with VMware ESXi 8.0 Update 2 and Pure Storage X50 AFF.

A long-standing restriction of shared vmdks that use the multi-writer attribute is that hot extending the virtual disk is disallowed, as per KB 1034165 (non-vSAN) and KB 2121181 (vSAN).

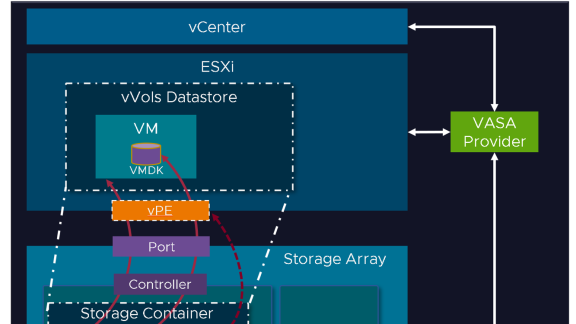

VMware ESXi 8.0 Update 2 adds the ability to hot-extend a shared vSphere Virtual Volumes disk:

- Hot-extend a shared vSphere Virtual Volumes Disk – vSphere 8.0 Update 2 supports hot extension of shared vSphere Virtual Volumes disks, which allows you to increase the size of a shared disk without deactivating the cluster, effectively with no downtime. This is helpful for VM clustering solutions. For more information, see You Can Hot Extend a Shared vVol Disk.

vmdk(s) – Non-Clustered and Clustered

VMFS is a clustered file system that, by default, prevents multiple virtual machines from opening and writing to the same virtual disk (.vmdk file). This protects a .vmdk file from being accessed inadvertently by more than one virtual machine. The multi-writer option allows VMFS-backed disks to be shared by multiple virtual machines.

Oracle RAC requires shared disks that can be accessed by all nodes of the RAC cluster. KB 1034165 provides more details on how to set the multi-writer option to allow VMs to share vmdks.

For a RAC environment, shared disks with the multi-writer flag set must meet these requirements:

- the shared disk must be Eager Zeroed Thick provisioned

- the shared disk does not need to be set to Independent Persistent mode

KB 2121181 provides more details on how to set the multi-writer option to allow VMs to share vmdks in a VMware vSAN environment.

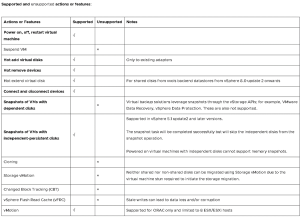

Supported and Unsupported Actions or Features with Multi-Writer Flag (KB 1034165 & KB 2121181)

As per KB 1034165 & KB 2121181, hot extending a virtual disk is not supported for shared vmdks that use the multi-writer attribute.

RHEL 7 and above Hot Extend

Refer to the Red Hat article “Does RHEL 7 support online resize of disk partitions?”:

“As to older style partitions, this feature has been added in RHEL 7 current release with a feature request (RFE has been filed to add support for online resizing of disk partitions to RHEL 7 in private RHBZ#853105). With this feature, it’s possible to resize the disk partitions online in RHEL 7.”

Hot Extend a Shared vVol Disk

Hot extension of a shared vVol disk is supported with ESXi 8.0 Update 2 or later. This allows you to increase the size of a shared disk without deactivating the cluster.

Extending a shared vVol disk is a two-step process: first increase the size of the shared vVol disk using vCenter, then extend the partition on the disk from the guest OS.

Best Practices for Hot Extending Shared vVol Disk:

- You can only extend one shared vVol disk at a time from a single VM. You cannot extend multiple shared vVol disks simultaneously.

- Do not reconfigure any other VM parameter while you are changing the size of a shared vVol disk. Any other change you make in VM configuration will not be performed during this operation.

- All VMs sharing the disk must be accessible and registered with vCenter. VMs cannot be in a suspended state or in maintenance mode. VMs cannot be in APD or PDL state.

- Do not perform shared disk extension when a cluster upgrade is in progress and there are hosts in the cluster that do not support this feature.

- Hot extend of a shared vVol disk is not supported for disks that have IOFilters attached.
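Because the feature requires ESXi 8.0 Update 2 on the hosts involved, and the practices above warn against extending while a cluster upgrade leaves some hosts without support, a quick version pre-check can help. This is a minimal sketch, assuming the `vmware -v` banner format (for example `VMware ESXi 8.0.2 build-22380479`, the build used in this lab) and that 8.0.2 corresponds to 8.0 Update 2; the function name is hypothetical.

```shell
# Hypothetical pre-check: succeed (exit 0) only if an ESXi version banner,
# such as the output of "vmware -v", reports 8.0.2 (8.0 Update 2) or later.
# Exit 1 = older release, exit 2 = no x.y.z version found in the banner.
esxi_supports_shared_vvol_extend() {
    printf '%s\n' "$1" | awk '
        !found {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+\.[0-9]+\.[0-9]+$/) {   # e.g. "8.0.2"
                    split($i, v, ".")
                    maj = v[1] + 0; min = v[2] + 0; pat = v[3] + 0
                    found = 1
                    break
                }
            }
        }
        END {
            if (!found) exit 2
            if (maj != 8) exit ((maj > 8) ? 0 : 1)        # 9.x passes, 7.x fails
            exit ((min > 0 || pat >= 2) ? 0 : 1)          # 8.0.2+ or 8.1+
        }'
}
```

Usage, with HOST as a placeholder: `esxi_supports_shared_vvol_extend "$(ssh root@HOST vmware -v)" || echo "HOST is below 8.0 U2"`, repeated for every host in the cluster.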

More details can be found at You Can Hot Extend a Shared vVol Disk.

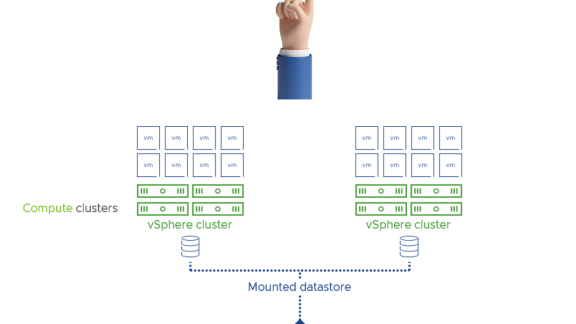

Test Setup for Hot Extend of Oracle RAC multi-writer vmdks using VMware Virtual Volumes (vVols)

The lab setup was as follows:

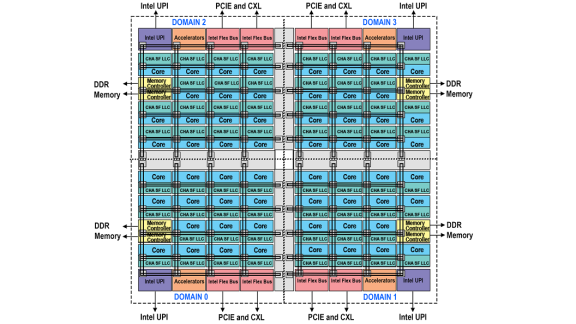

- 3-node vSphere cluster running ESXi 8.0.2 (build 22380479) on Inspur NF5280M6 servers with Intel Ice Lake processors (Intel(R) Xeon(R) Gold 6338 CPU)

- Pure X50 AFF storage was used to provide Virtual Volumes support for the Oracle RAC cluster storage

- All 3 servers had dual-port Emulex LPe35000/LPe36000 Fibre Channel adapters connected to the Pure Storage X50 AFF

A 2-node Oracle RAC cluster, ‘nrac19c’, was created with 2 RAC VMs, ‘nrac19c1_19.19_vvol’ and ‘nrac19c2_19.19_vvol’.

Both RAC VMs, ‘nrac19c1_19.19_vvol’ and ‘nrac19c2_19.19_vvol’, run OEL 8.8 with the UEK kernel and have Oracle 19.19 Grid Infrastructure & RDBMS installed.

Oracle ASM with Oracle ASMLIB was used as the storage platform; Oracle ASMFD can be used instead of Oracle ASMLIB.

Whether Oracle ASMLIB, Oracle ASMFD or Linux udev is used, the remaining steps to extend the Oracle ASM disk(s) of an Oracle ASM disk group are the same.

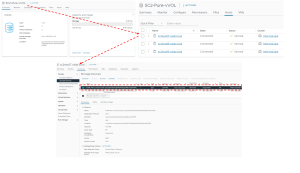

Details of the RAC VMs, ‘nrac19c1_19.19_vvol’ and ‘nrac19c2_19.19_vvol’, are shown below.

Both RAC VMs, ‘nrac19c1_19.19_vvol’ and ‘nrac19c2_19.19_vvol’, have 3 vmdks:

- Non-clustered Hard Disk 1 of 80G is for the OS

- Non-clustered Hard Disk 2 of 80G is for the Oracle 19c Grid Infrastructure & RDBMS binaries.

- Clustered Hard Disk 3, ‘nrac19c1_19.19_vvol.vmdk’, of 500G is for the Oracle cluster, which uses Oracle ASM storage with Oracle ASMLIB. It is attached to vNVMe controller 0:0, uses the multi-writer attribute and resides on VMware Virtual Volumes (vVols) on Pure X50 AFF.

For ESXi versions earlier than 8.0 Update 2, the virtual NVMe controller is NOT supported for virtual disks in multi-writer mode. The VMware ESXi 8.0 Update 2 Release Notes state: “Use vNVMe controller for disks in multi-writer mode: Starting with vSphere 8.0 Update 2, you can use virtual NVMe controllers for virtual disks with multi-writer mode, used by third party cluster applications such as Oracle RAC.” Read more at VMware ESXi 8.0 Update 2 Release Notes.

Note – we used a single vmdk for the entire RAC cluster for the sake of illustration, as the intention here was to demonstrate hot extend of clustered vmdks using the multi-writer attribute on VMware vVols for Oracle RAC workloads.

For best practices on the RAC layout, follow the RAC deployment guide on VMware: Oracle VMware Hybrid Cloud High Availability Guide – REFERENCE ARCHITECTURE.

OS details of the 500GB Oracle ASM disk:

[root@nrac19c1 ~]# fdisk -lu

Disk /dev/nvme0n1: 500 GiB, 536870912000 bytes, 1048576000 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd9465420

Device Boot Start End Sectors Size Id Type

/dev/nvme0n1p1 2048 1048575999 1048573952 500G 83 Linux

…..

[root@nrac19c1 ~]#

Oracle ASMLIB disk details of the 500GB clustered vmdk are shown below.

[root@nrac19c1 ~]# oracleasm listdisks

DATA_DISK01

[root@nrac19c1 ~]#

List of Oracle ASM disks:

grid@nrac19c1:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

511996 487480 511999 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c1:+ASM1:/home/grid>

The 2-node RAC cluster with RAC VMs ‘nrac19c1_19.19_vvol’ and ‘nrac19c2_19.19_vvol’ is up and running, with the cluster services as shown below.

Test Cases

The test case is to hot extend the 500 GB Oracle RAC multi-writer clustered vmdk on VMware Virtual Volumes (vVols) backed by Pure Storage X50 AFF, without any downtime or disruption to the Oracle RAC cluster services and databases.

Test Steps

The test steps are:

- ASM disk group DATA_DG has an existing ASM disk ‘DATA_DISK01’ of size 500GB at vNVMe position 0:0.

- Extend the existing disk from 500GB to 600GB.

- Observe the change in disk size at the OS and Oracle ASM levels and report any anomalies.

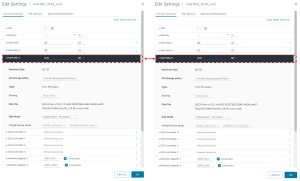

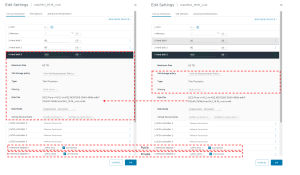

On RAC VM ‘nrac19c1_19.19_vvol’, right-click, select Edit Settings, and change the size of the clustered vmdk ‘nrac19c1_19.19_vvol.vmdk’ from 500GB to 600GB.

Click OK to start the hot extend of the clustered vmdk vVol.
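The Edit Settings change can also be scripted. This sketch assumes the open-source `govc` CLI (govmomi project) is installed and pointed at vCenter through `GOVC_URL` and related variables, and that the shared disk carries the label "Hard disk 3" as in this setup. The wrapper name and `DRY_RUN` switch are hypothetical, and it has not been verified here that `vm.disk.change` behaves exactly like the vSphere Client for a shared multi-writer vVol disk, so rehearse it in a lab first.

```shell
# Hypothetical wrapper around "govc vm.disk.change" to grow one VM disk.
# Assumes govc is installed and configured (GOVC_URL etc.).
# With DRY_RUN=1 it only prints the command it would run.
grow_vm_disk() {
    vm=$1 label=$2 size=$3
    if [ -z "$vm" ] || [ -z "$label" ] || [ -z "$size" ]; then
        echo "usage: grow_vm_disk VM DISK_LABEL NEW_SIZE" >&2
        return 2
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "govc vm.disk.change -vm '$vm' -disk.label '$label' -size $size"
    else
        govc vm.disk.change -vm "$vm" -disk.label "$label" -size "$size"
    fi
}
```

For example, `DRY_RUN=1 grow_vm_disk nrac19c1_19.19_vvol "Hard disk 3" 600G` prints the command for the first RAC VM only, in line with extending one shared disk at a time from a single VM.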

Once the operation completes, the Pure Storage GUI shows that the size of the vVol backing the clustered vmdk has changed from 500GB to 600GB.

Both RAC VMs, ‘nrac19c1_19.19_vvol’ and ‘nrac19c2_19.19_vvol’, show the new 600GB size (previously 500GB) of the clustered vmdk ‘nrac19c1_19.19_vvol.vmdk’.

Steps for the OS and Oracle ASM to see the increased size

The next step is to observe the change in disk size at the OS and Oracle ASM levels and report any anomalies.

Details of the OS rescan, OS repartitioning, ASM rebalance and ASM drop process can be found in On Demand hot extend non-clustered Oracle Disks online without downtime – Hot Extend Disks.

Rescan the OS on both RAC VMs so that it sees the increased disk size, then check the new size with fdisk. In our case we are using vNVMe controllers, so we use the ‘nvme ns-rescan /dev/nvme0’ command; with a SCSI controller, the ‘/usr/bin/rescan-scsi-bus.sh’ script can be used instead.

[root@nrac19c1 ~]# nvme ns-rescan /dev/nvme0

[root@nrac19c2 ~]# nvme ns-rescan /dev/nvme0
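The controller-specific rescan can be wrapped in a helper that picks the method from the device name. This is a minimal sketch, not the author's procedure: it assumes `nvme-cli` is installed for vNVMe disks and, for SCSI disks, swaps `rescan-scsi-bus.sh` for the kernel's per-device sysfs `rescan` attribute. The function name `rescan_disk` and the `DRY_RUN` switch are hypothetical; pass the whole-disk device (for example /dev/nvme0n1, not a partition) and run it on every RAC node.

```shell
# Hypothetical helper: ask the guest kernel to re-read the size of one disk.
# NVMe namespaces (/dev/nvmeXnY) are rescanned through their controller with
# nvme-cli; SCSI disks (/dev/sdX) through the sysfs "rescan" attribute.
# With DRY_RUN=1 it only prints the command it would run.
rescan_disk() {
    dev=$1
    case "$dev" in
        /dev/nvme*n*)
            ctrl=$(echo "$dev" | sed 's/n[0-9][0-9]*$//')   # /dev/nvme0n1 -> /dev/nvme0
            cmd="nvme ns-rescan $ctrl" ;;
        /dev/sd*)
            cmd="echo 1 > /sys/class/block/${dev#/dev/}/device/rescan" ;;
        *)
            echo "unsupported device: $dev" >&2
            return 2 ;;
    esac
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$cmd"
    else
        sh -c "$cmd"
    fi
}
```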

fdisk now reports the disk as 600GB on both nodes, but the partition is still 500GB.

[root@nrac19c1 ~]# fdisk -lu /dev/nvme0n1

Disk /dev/nvme0n1: 600 GiB, 644245094400 bytes, 1258291200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd9465420

Device Boot Start End Sectors Size Id Type

/dev/nvme0n1p1 2048 1048575999 1048573952 500G 83 Linux

[root@nrac19c1 ~]#

[root@nrac19c2 ~]# fdisk -lu /dev/nvme0n1

Disk /dev/nvme0n1: 600 GiB, 644245094400 bytes, 1258291200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd9465420

Device Boot Start End Sectors Size Id Type

/dev/nvme0n1p1 2048 1048575999 1048573952 500G 83 Linux

[root@nrac19c2 ~]#
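The unpartitioned space can be read straight off this listing: (1258291199 - 1048575999) sectors × 512 bytes = 107374182400 bytes = 100 GiB. The awk sketch below performs that calculation on `fdisk -l` output. It assumes the util-linux listing layout shown above and one disk per listing; on a GPT disk the backup table occupies the last few sectors, so the result would slightly overstate the usable space. The function name is hypothetical.

```shell
# Hypothetical helper: read "fdisk -l" output for ONE disk on stdin and print,
# in GiB, how much of the disk lies beyond the end of its last partition.
unallocated_gib() {
    awk '
        /^Units:/       { unit = $(NF - 1) }               # "... = 512 bytes"
        /^Disk \/dev\// { for (i = 2; i <= NF; i++)        # "..., N sectors"
                              if ($i == "sectors") total = $(i - 1) }
        /^\/dev\//      { end = ($2 == "*") ? $4 : $3 }    # skip the boot flag
        END {
            if (unit == "") unit = 512
            printf "%.2f\n", (total - 1 - end) * unit / 1073741824
        }'
}
```

Run as `fdisk -lu /dev/nvme0n1 | unallocated_gib`; it prints 100.00 for the listing above and 0.00 once the partition has been recreated to the end of the disk.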

The OS messages file on each node also records the capacity change from 500GB to 600GB.

[root@nrac19c1 ~]# tail -100 /var/log/messages

….

Oct 5 11:12:05 nrac19c1 systemd[1]: Started system activity accounting tool.

Oct 5 11:12:07 nrac19c1 kernel: nvme0n1: detected capacity change from 536870912000 to 644245094400

…

[root@nrac19c1 ~]#

[root@nrac19c2 ~]# tail -100 /var/log/messages

…

Oct 5 11:12:08 nrac19c2 kernel: nvme0n1: detected capacity change from 536870912000 to 644245094400

..

[root@nrac19c2 ~]#
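To pick the relevant event out of a long log and convert it to GiB, a small filter can help. This sketch assumes the message layout shown above, where this UEK kernel logs the old and new capacity in bytes; some other kernel versions log 512-byte sectors instead, so check one raw line before trusting the conversion. The function name is hypothetical.

```shell
# Hypothetical filter: read syslog, journal or dmesg lines on stdin and print
# each "detected capacity change" event as "<device>: <old> GiB -> <new> GiB",
# ASSUMING the capacities were logged in bytes (as in /var/log/messages above).
capacity_changes() {
    awk '/detected capacity change from/ {
        for (i = 1; i <= NF; i++) {
            if ($i == "capacity" && $(i + 1) == "change") {
                dev = $(i - 2); sub(/:$/, "", dev)   # "nvme0n1:" -> "nvme0n1"
                old = $(i + 3); new = $(i + 5)       # "... from OLD to NEW"
                printf "%s: %g GiB -> %g GiB\n", dev, old / 1073741824, new / 1073741824
            }
        }
    }'
}
```

For example, `grep -h 'capacity change' /var/log/messages | capacity_changes` on either node should print `nvme0n1: 500 GiB -> 600 GiB` for the entries above.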

At this point the OS sees the increased disk size, but Oracle ASM does not, because the partition is still 500GB and nothing has changed from ASM’s perspective.

grid@nrac19c1:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

511996 487480 511999 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c1:+ASM1:/home/grid>

grid@nrac19c2:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

511996 487480 511999 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c2:+ASM1:/home/grid>

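Delete and recreate partition 1 so that it spans the whole 600GB disk, keeping the same start sector (2048). Do this on ONLY one RAC node, in this case RAC VM ‘nrac19c1_19.19_vvol’. When fdisk reports the existing oracleasm signature, answer N so that the signature is kept.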

[root@nrac19c1 ~]# fdisk /dev/nvme0n1

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): d

Selected partition 1

Partition 1 has been deleted.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-1258291199, default 2048):

Last sector, +sectors or +size{K,M,G,T,P} (2048-1258291199, default 1258291199):

Created a new partition 1 of type ‘Linux’ and of size 600 GiB.

Partition #1 contains a oracleasm signature.

Do you want to remove the signature? [Y]es/[N]o: N

Command (m for help): w

The partition table has been altered.

Syncing disks.

[root@nrac19c1 ~]#

Run partx or partprobe to inform the OS of the partition table changes.

[root@nrac19c1 ~]# partx -u /dev/nvme0n1
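The interactive fdisk dialogue and the partx call can also be driven from a script. This is a hedged sketch that assumes exactly what this setup has: a DOS disk label with a single primary partition 1 starting at sector 2048. Instead of answering N at the signature prompt, it passes util-linux fdisk's `--wipe-partitions never` option so the oracleasm signature is left in place. `grow_partition1` and `DRY_RUN` are hypothetical names. As in the post, run it on one RAC node only, and rehearse with `DRY_RUN=1` and on a scratch disk first, because a mismatched keystroke sequence rewrites the partition table.

```shell
# Hypothetical helper: delete partition 1 and recreate it with the same start
# sector and the largest possible end, keeping existing signatures, then ask
# the kernel to re-read the partition table.
# ASSUMES a DOS label with exactly one primary partition.
grow_partition1() {
    disk=$1 start=${2:-2048}
    [ -n "$disk" ] || { echo "usage: grow_partition1 DISK [START]" >&2; return 2; }
    # fdisk keystrokes: delete, new, primary, number 1, start, default end, write
    keys=$(printf 'd\nn\np\n1\n%s\n\nw\n' "$start")
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$keys" | sed 's/^$/<Enter>/'   # show the keystrokes
        echo "partx -u $disk"
        return 0
    fi
    printf '%s\n' "$keys" | fdisk --wipe-partitions never "$disk"
    partx -u "$disk"
}
```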

[root@nrac19c1 ~]# fdisk -lu /dev/nvme0n1

Disk /dev/nvme0n1: 600 GiB, 644245094400 bytes, 1258291200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd9465420

Device Boot Start End Sectors Size Id Type

/dev/nvme0n1p1 2048 1258291199 1258289152 600G 83 Linux

[root@nrac19c1 ~]#

[root@nrac19c2 ~]# fdisk -lu /dev/nvme0n1

Disk /dev/nvme0n1: 600 GiB, 644245094400 bytes, 1258291200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd9465420

Device Boot Start End Sectors Size Id Type

/dev/nvme0n1p1 2048 1258291199 1258289152 600G 83 Linux

[root@nrac19c2 ~]#
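fdisk reads the partition table from the disk itself, so its listing does not necessarily show the partition size the running kernel on each node is using. The kernel's view is exposed in sysfs, where `size` is always counted in 512-byte units; dividing by 2048 gives MiB, so 1258289152 / 2048 = 614399, which is the OS_MB value ASM reports after the resize. A minimal sketch, with a hypothetical function name and a hypothetical `SYSFS_ROOT` override so it can be tested off-host:

```shell
# Hypothetical helper: print the size of a block device (disk or partition),
# as currently known to the running kernel, in MiB. sysfs reports "size" in
# 512-byte units regardless of the device's logical sector size.
kernel_size_mib() {
    name=${1#/dev/}                          # accept nvme0n1p1 or /dev/nvme0n1p1
    f="${SYSFS_ROOT:-/sys}/class/block/$name/size"
    [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
    echo $(( $(cat "$f") / 2048 ))           # 2048 * 512 bytes = 1 MiB
}
```

Running `kernel_size_mib /dev/nvme0n1p1` on each node should give 614399 once that node's kernel has picked up the new partition, and 511999 while it still uses the old size.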

At this stage, the cluster services are STILL running, with no disruption.

ASM still shows the old disk size. Rescan the ASMLIB disks on both nodes with ‘oracleasm scandisks’, then resize the disk group with ‘alter diskgroup DATA_DG resize all’; the new size is written to the Oracle ASM disk header. More information can be found here.

[root@nrac19c1 ~]# oracleasm scandisks ; oracleasm listdisks

Reloading disk partitions: done

Cleaning any stale ASM disks…

Scanning system for ASM disks…

DATA_DISK01

[root@nrac19c1 ~]#

[root@nrac19c2 ~]# oracleasm scandisks ; oracleasm listdisks

Reloading disk partitions: done

Cleaning any stale ASM disks…

Scanning system for ASM disks…

DATA_DISK01

[root@nrac19c2 ~]#

grid@nrac19c1:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

511996 487480 511999 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c1:+ASM1:/home/grid>

grid@nrac19c2:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

511996 487480 511999 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c2:+ASM1:/home/grid>

grid@nrac19c1:+ASM1:/home/grid> sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 – Production on Thu Oct 5 11:44:27 2023

Version 19.19.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 – Production

Version 19.19.0.0.0

SQL> alter diskgroup DATA_DG resize all;

Diskgroup altered.

SQL> Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 – Production

Version 19.19.0.0.0

grid@nrac19c1:+ASM1:/home/grid>
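The SQL*Plus session can also be run non-interactively from the grid user's shell. The sketch below only generates the SQL text; piping it into `sqlplus -S / as sysasm` is the assumed usage. It adds a query on the `V$ASM_DISK` and `V$ASM_DISKGROUP` views so the resulting TOTAL_MB and OS_MB values are printed in the same run. The function name is hypothetical, and the disk group name is checked against a deliberately simple identifier pattern (letters, digits, underscore) before being placed in the statement.

```shell
# Hypothetical generator: print SQL that resizes every disk in one ASM disk
# group to its OS size and then lists the resulting disk sizes.
asm_resize_sql() {
    dg=$1
    case "$dg" in
        ''|[!A-Za-z]*|*[!A-Za-z0-9_]*)
            echo "invalid disk group name: '$dg'" >&2
            return 2 ;;
    esac
    cat <<EOF
whenever sqlerror exit failure
alter diskgroup $dg resize all;
select d.name, d.total_mb, d.os_mb
  from v\$asm_disk d join v\$asm_diskgroup g on d.group_number = g.group_number
 where g.name = upper('$dg');
exit
EOF
}
```

Example: `asm_resize_sql DATA_DG | sqlplus -S / as sysasm`, run once from a single ASM instance as in the session above.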

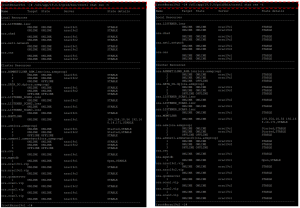

ASM now shows the new increased size of 600GB.

grid@nrac19c1:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

614396 589880 614399 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c1:+ASM1:/home/grid>

grid@nrac19c2:+ASM1:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

614396 589880 614399 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

grid@nrac19c2:+ASM1:/home/grid>
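To turn the visual comparison into a check, the Total_MB column of `asmcmd lsdsk -k` can be compared with the OS-level size (for example the OS_MB of 614399 shown above). The sketch treats a gap smaller than one allocation unit as fully resized. The 4 MB default is an assumption, inferred only from Total_MB sitting 3 MB below OS_MB in both listings above, so pass the disk group's real AU size. The function name is hypothetical.

```shell
# Hypothetical check: read "asmcmd lsdsk -k" output on stdin and report, for
# one ASM disk, whether Total_MB is within one allocation unit of the expected
# OS-level size in MB. AU_MB defaults to 4, an ASSUMPTION (not in the source).
# Exit 0 = OK, 1 = resize pending, 2 = disk not found.
asm_disk_resized() {
    name=$1 expected_mb=$2 au_mb=${3:-4}
    awk -v name="$name" -v want="$expected_mb" -v au="$au_mb" '
        $4 == name && !found {                 # columns: Total_MB Free_MB OS_MB Name
            found = 1
            gap = want - $1                    # MB the disk has that ASM does not use
            status = (gap < au + 0) ? "OK" : "PENDING"
            printf "%s Total_MB=%d expected=%d %s\n", name, $1, want, status
            rc = (status == "OK") ? 0 : 1
        }
        END { exit (found ? rc : 2) }'
}
```

For example, `asmcmd lsdsk -k | asm_disk_resized DATA_DISK01 614399` reports OK for the listing above and PENDING for the pre-resize listing with Total_MB 511996.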

The ASM alert logs on both nodes show that the operation was successful.

grid@nrac19c1:+ASM1:/u01/app/grid/diag/asm/+asm/+ASM1/trace> tail -100 alert_+ASM1.log

…..

2023-10-05T12:25:45.183996-07:00

SQL> alter diskgroup DATA_DG resize all

2023-10-05T12:25:45.254310-07:00

…

grid@nrac19c1:+ASM1:/u01/app/grid/diag/asm/+asm/+ASM1/trace>

grid@nrac19c2:+ASM2:/u01/app/grid/diag/asm/+asm/+ASM2/trace> tail -100 alert_+ASM2.log

……

2023-10-05T12:25:45.306678-07:00

NOTE: disk validation pending for 1 disk in group 1/0x5a2016c3 (DATA_DG)

NOTE: completed disk validation for 1/0x5a2016c3 (DATA_DG)

NOTE: membership refresh pending for group 1/0x5a2016c3 (DATA_DG)

2023-10-05T12:25:45.567139-07:00

GMON querying group 1 at 5 for pid 26, osid 5871

2023-10-05T12:25:45.591941-07:00

SUCCESS: refreshed membership for 1/0x5a2016c3 (DATA_DG)

….

grid@nrac19c2:+ASM2:/u01/app/grid/diag/asm/+asm/+ASM2/trace>
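Instead of reading through `tail -100` output, the relevant alert-log lines can be filtered. The sketch prints lines that mention the disk group, plus any ORA- or ERROR lines, and returns non-zero if an ORA- or ERROR line is present in its input. It assumes the alert-log layout shown above; the function name is hypothetical. Run it against the alert log of every ASM instance, since each node logs its own part of the operation.

```shell
# Hypothetical filter: read ASM alert-log lines on stdin, echo those that
# mention the given disk group, and exit non-zero if any ORA- or ERROR line
# appears anywhere in the input (those lines are echoed too).
check_asm_alert() {
    awk -v dg="$1" '
        /ORA-[0-9]+|ERROR/ { bad = 1; print; next }
        index($0, "(" dg ")") || index(tolower($0), "diskgroup " tolower(dg)) { print }
        END { exit (bad ? 1 : 0) }'
}
```

Example: `tail -n 200 alert_+ASM2.log | check_asm_alert DATA_DG`.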

Summary

- VMware ESXi 8.0 Update 2 adds the ability to hot-extend a shared vSphere Virtual Volumes disk.

- This blog walked through the complete set of steps to increase the size of clustered vmdk(s) that use the multi-writer flag for an Oracle RAC cluster online on VMware Virtual Volumes (vVols), starting with VMware ESXi 8.0 Update 2.

- For earlier versions of vSphere, hot extend of shared vmdks that use the multi-writer attribute is disallowed, as per KB 1034165 (non-vSAN) & KB 2121181 (vSAN).

Acknowledgements

This blog was authored by Sudhir Balasubramanian, Senior Staff Solution Architect & Global Oracle Lead – VMware.

Conclusion

This blog showed the complete set of steps to increase the size of clustered vmdk(s) that use the multi-writer flag for an Oracle RAC cluster online on VMware Virtual Volumes (vVols), starting with VMware ESXi 8.0 Update 2, with the cluster services running throughout.

All Oracle on vSphere white papers, including Oracle licensing on vSphere/vSAN, Oracle best practices, RAC deployment guides and the workload characterization guide, can be found at the URL below:

Oracle on VMware Collateral – One Stop Shop

https://blogs.vmware.com/apps/2017/01/oracle-vmware-collateral-one-stop-shop.html