In the previous post, I covered capacity management at the VM level. In this post, I will cover capacity management at the infrastructure level.

At the infrastructure level, you look at the big picture, so it is important that you know your architecture well. One way to easily remember what you have is to keep it simple. Yes, you can mix host specifications (CPU speed, amount of RAM, and so on) within a cluster, but that becomes hard to remember in a large farm with many clusters.

You also need to know what you actually have at the physical layer. If you don’t know how many CPUs or how much RAM each ESXi host has, it is impossible to figure out how much capacity is left. I will use storage as an example to illustrate why this matters: do you know how many IOPS your storage has?

Most shared storage is mounted by both ESXi and non-ESXi servers. Even if the entire array is dedicated to ESXi, there is usually still a physical backup server mounting it, and the array itself might be doing array-based replication or snapshots. All of these consume IOPS that ESXi does not see.
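To make the point concrete, here is a minimal sketch of the subtraction involved. The function name and every figure below are hypothetical, chosen only for illustration; in practice the array's sustainable IOPS and the load from each non-ESXi consumer have to come from your storage team or the array's own monitoring.

```python
def iops_available_to_esxi(array_iops: int, non_esxi_iops: dict[str, int]) -> int:
    """Return the IOPS left for ESXi after subtracting non-ESXi consumers.

    array_iops: the array's sustainable physical IOPS (an assumed, measured figure).
    non_esxi_iops: IOPS consumed by other users of the same array, such as a
        physical backup server, array-based replication, or snapshots.
    """
    consumed = sum(non_esxi_iops.values())
    # Never report negative headroom; zero already means the array is saturated.
    return max(array_iops - consumed, 0)


# Hypothetical figures, for illustration only.
other_consumers = {
    "physical_backup_server": 4_000,
    "array_based_replication": 2_500,
    "snapshots": 1_000,
}
print(iops_available_to_esxi(50_000, other_consumers))  # 42500
```

None of these 7,500 IOPS show up in ESXi's own counters, which is why the headroom seen from vSphere alone can look larger than it really is.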

Some storage arrays support dynamic tiering (high-IOPS, low-latency storage fronting low-IOPS, high-latency spindles). In this configuration, the underlying physical IOPS varies from minute to minute. This makes it hard for ESXi and vRealize Operations to determine the actual physical limit of the array, so you need to take extra care to account accurately for the resources available. A change in the array configuration can also affect your capacity planning: the tier preference of a given LUN can often be changed live, so it may be changed without you being informed.
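Why the ceiling moves can be sketched with a deliberately simple model. This sketch assumes that each tier saturates independently and that a fixed fraction of I/O (the "fast hit ratio") lands on the fast tier; real arrays use vendor-specific placement logic, so treat the function and numbers as hypothetical.

```python
def effective_iops_ceiling(fast_tier_iops: float, slow_tier_iops: float,
                           fast_hit_ratio: float) -> float:
    """Upper bound on total IOPS when a fraction of I/O lands on the fast tier.

    Simplified model (an assumption, not vendor behavior): each tier saturates
    independently, so the total IOPS X must satisfy
        fast_hit_ratio * X <= fast_tier_iops and
        (1 - fast_hit_ratio) * X <= slow_tier_iops.
    """
    if not 0.0 <= fast_hit_ratio <= 1.0:
        raise ValueError("fast_hit_ratio must be between 0 and 1")
    limits = []
    if fast_hit_ratio > 0:
        limits.append(fast_tier_iops / fast_hit_ratio)
    if fast_hit_ratio < 1:
        limits.append(slow_tier_iops / (1.0 - fast_hit_ratio))
    # Whichever tier saturates first caps the whole array.
    return min(limits)


# Hypothetical array: 100,000 IOPS on the fast tier, 10,000 on the spindles.
for ratio in (0.75, 0.5):
    print(ratio, effective_iops_ceiling(100_000, 10_000, ratio))
# 0.75 40000.0
# 0.5 20000.0
```

Under this model, the same hardware loses half its ceiling when the hit ratio drops from 75% to 50%, which is exactly the kind of swing that a silent change in LUN tier preference can cause.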

Capacity planning at the compute level

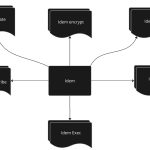

Once you know the actual capacity, you are in a position to figure out the usable portion. The next figure shows the relationship. The raw capacity is what you have physically. The Infrastructure as a Service (IaaS) workload is all the workload on vSphere that is not caused by a VM. For example, the hypervisor itself consumes CPU and RAM. When you put an ESXi host into maintenance mode, it triggers a mass vMotion of all the VMs running on it, and that vMotion consumes CPU and RAM on both the source and destination ESXi hosts, as well as the network between them. The non-vSphere workload is the load that non-ESXi consumers, such as the physical backup server, place on shared resources. So the capacity left for VMs, the usable capacity, is: Usable capacity = Raw capacity - IaaS workload - Non-vSphere workload.
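The usable capacity formula translates directly into code. This is a minimal sketch; the function name, the workload labels, and the GHz figures are hypothetical, and the only requirement is that every argument uses the same unit (GHz, GB, IOPS, and so on).

```python
def usable_capacity(raw: float,
                    iaas_workloads: dict[str, float],
                    non_vsphere_workloads: dict[str, float]) -> float:
    """Usable capacity = raw capacity - IaaS workload - non-vSphere workload.

    All arguments must use the same unit. The result is clamped at zero.
    """
    iaas = sum(iaas_workloads.values())
    non_vsphere = sum(non_vsphere_workloads.values())
    return max(raw - iaas - non_vsphere, 0.0)


# Hypothetical cluster CPU figures in GHz, for illustration only.
cpu_usable = usable_capacity(
    raw=160.0,
    iaas_workloads={"hypervisor": 8.0, "vmotion_for_maintenance_mode": 6.0},
    non_vsphere_workloads={},  # assumed empty in this compute example
)
print(cpu_usable)  # 146.0
```

Keeping each IaaS workload as a named entry, rather than one lump sum, makes it easy to revisit individual estimates as the list of software-delivered services grows.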

Even the usable capacity is not 100% available to VMs, because you still need to reserve headroom for HA.
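A rough way to quantify the HA headroom is sketched below. It assumes identical hosts, so tolerating F host failures means the remaining hosts must carry the whole load; this is a simplification of what vSphere HA admission control enforces, and the function names and figures are hypothetical.

```python
def capacity_after_ha(usable_per_host: float, hosts: int,
                      host_failures_to_tolerate: int = 1) -> float:
    """Capacity left for VMs after keeping enough spare to survive host failures.

    Assumes identical hosts: surviving F failures means the remaining
    (hosts - F) hosts must be able to run every VM.
    """
    if not 0 <= host_failures_to_tolerate < hosts:
        raise ValueError("need 0 <= host_failures_to_tolerate < hosts")
    return usable_per_host * (hosts - host_failures_to_tolerate)


def ha_reserved_fraction(hosts: int, host_failures_to_tolerate: int = 1) -> float:
    """Share of usable capacity held back for HA, under the same assumption."""
    return host_failures_to_tolerate / hosts


# Hypothetical 8-host cluster with 40 GHz of usable CPU per host.
print(capacity_after_ha(40.0, 8))  # 280.0
print(ha_reserved_fraction(8))     # 0.125
```

The same arithmetic shows the trade-off behind cluster sizing: tolerating one failure costs an 8-host cluster 12.5% of its usable capacity, but a 4-host cluster 25%.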

So what are these IaaS workloads? Think of the software-defined data center (SDDC). All the services it provides to its consumers (the VMs) run as software: storage services (for example, Virtual SAN and vSphere Replication), network services (for example, the L3 switch in NSX, or a firewall), security services (for example, Trend Micro Antivirus), and availability services (for example, Storage vMotion); the list goes on. Because these services are software, they consume physical resources. The next table lists all the IaaS workloads. The list will likely become outdated as more and more capabilities move into software. For each workload, I have given a rough estimate of its impact. It is only an estimate, because the impact depends on scale. Using vMotion on a small, idle VM has minimal impact, while using vMotion on five large, memory-intensive VMs has a high impact. The same goes for vSphere Replication: replicating an idle VM with an RPO of 24 hours generates minimal traffic, whereas replicating many write-intensive VMs with an RPO of 15 minutes generates high network traffic.
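The vSphere Replication example can be turned into a back-of-the-envelope estimate. This sketch assumes that the unique data changed during each RPO window must be shipped within that window, using decimal units; it ignores compression, protocol overhead, and how the product actually schedules transfers, and the function name and figures are hypothetical.

```python
def replication_bandwidth_mbps(changed_gb_per_rpo: float, rpo_minutes: float) -> float:
    """Average bandwidth (Mbps) to ship one RPO window's changes within that window.

    changed_gb_per_rpo: unique data (GB) written during one RPO window;
        this sketch assumes repeated writes to the same block count once.
    """
    rpo_seconds = rpo_minutes * 60
    megabits = changed_gb_per_rpo * 8_000  # 1 GB = 8,000 megabits (decimal units)
    return megabits / rpo_seconds


# Hypothetical idle VM: 0.5 GB of changes per 24-hour RPO window.
print(round(replication_bandwidth_mbps(0.5, 24 * 60), 3))  # 0.046
# Hypothetical 10 write-intensive VMs, each changing 2 GB per 15-minute window.
print(round(replication_bandwidth_mbps(10 * 2.0, 15), 1))  # 177.8
```

The two cases differ by more than three orders of magnitude, which is why the table can only give a rough impact per workload rather than a fixed number.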

In the list of IaaS workloads here, I’m not including VMware Horizon View. Virtual Desktop Infrastructure (VDI) workloads behave differently from server workloads, so they need their own breakdown of workload, which is not included in the table. VDI (desktop) and VSI (server) farms should be physically separated, both for performance reasons and so that you can upgrade their vCenter Servers independently. If that is not possible in your environment, you need to include Horizon View-specific IaaS workloads (recompose, refresh, rebalance, ThinApp streaming, user home drive, and so on) in your calculations. If you ignore these and include only the desktop workloads, you may not be able to perform a recompose during office hours.

Good capacity management begins with a good design. A good design creates standards that simplify capacity management. If the design is flawed and complex, capacity management may become impossible.

Personally, I’m in favor of dedicated clusters for each service tier in a large environment. We can compare the task of managing the needs of VMs to how an airline manages the needs of passengers on a plane: the plane has dedicated areas for first class, business class, and economy class. This makes capacity management easier, because each class has a dedicated amount of space to be divided among its passengers, and because passengers are grouped by service level, it is easier to tell whether the service level for each class is being met overall. Similarly, I prefer to have three smaller clusters rather than one very large, mixed cluster serving all three tiers. Of course, there are situations where small clusters are not possible; it all depends on requirements and budget. There are also benefits to having one large cluster, such as lower cost and more resources to handle peak periods. If you choose to mix VMs of different tiers in one cluster, you should consider using Shares instead of Reservations.
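Shares only matter under contention, when they divide the contested resource in proportion to each VM's share value. The sketch below shows that proportional split in its simplest form; it ignores reservations, limits, and the fact that a VM demanding less than its entitlement frees the remainder for others. The VM names and the 7 GHz figure are hypothetical, while the share values follow vSphere's default CPU share levels (Low 500, Normal 1000, High 2000 per vCPU).

```python
def allocate_by_shares(contended_capacity: float, shares: dict[str, int]) -> dict[str, float]:
    """Split a contended resource in proportion to each consumer's shares.

    Simplified: no reservations or limits, and every consumer is assumed
    to demand at least its proportional entitlement.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("total shares must be positive")
    return {name: contended_capacity * s / total for name, s in shares.items()}


# One single-vCPU VM per tier, using High / Normal / Low CPU share levels.
shares = {"tier1_vm": 2000, "tier2_vm": 1000, "tier3_vm": 500}
print(allocate_by_shares(7.0, shares))
# {'tier1_vm': 4.0, 'tier2_vm': 2.0, 'tier3_vm': 1.0}
```

Unlike a Reservation, nothing here is guaranteed up front: the tier 1 VM gets a larger slice only when there is contention, which keeps a mixed cluster flexible outside peak periods.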

For the compute node, the following table provides an example of cluster design for virtual machine tiers. Your design will likely differ from the following table, because your requirements may differ. The main thing is that you need a design that clearly defines the standard.

In the preceding example, capacity planning becomes simple in tier 1, because there is a very good chance that we will hit the availability limit (for example, a cap on the number of VMs per cluster) before we hit the capacity limit. Defining in advance how many VMs a cluster will hold lets you set a vRealize Operations alert that fires if that number is breached.
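The check behind such an alert is simple; the sketch below shows only its logic and is not vRealize Operations configuration. The per-tier limits, cluster names, and VM counts are hypothetical placeholders for whatever your own design table defines.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    tier: str
    vm_count: int


# Hypothetical standard: maximum VMs allowed per cluster, per tier.
MAX_VMS_PER_CLUSTER = {"tier1": 25, "tier2": 50, "tier3": 100}


def clusters_over_limit(clusters: list[Cluster], limits: dict[str, int]) -> list[str]:
    """Return the names of clusters whose VM count exceeds their tier's limit."""
    return [c.name for c in clusters if c.vm_count > limits[c.tier]]


clusters = [
    Cluster("tier1-cluster-a", "tier1", 26),
    Cluster("tier2-cluster-a", "tier2", 40),
    Cluster("tier3-cluster-a", "tier3", 101),
]
print(clusters_over_limit(clusters, MAX_VMS_PER_CLUSTER))
# ['tier1-cluster-a', 'tier3-cluster-a']
```

Because the limit is a number fixed in the design, the alert needs no forecasting model; it simply compares a count against the standard.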

I hope you have enjoyed reading it so far. I will cover storage and network in the next post.

This post is adapted from the vRealize Operations Performance and Capacity Management book by Iwan ‘e1’ Rahabok.