If you’ve been following Kubernetes and data center trends, you’ve probably heard the arguments raging about the best way to deploy containers. Passionate partisans (with some help from vendors fanning the flames) have lined up on either side of one big question: should you deploy workloads on a hypervisor or on bare metal, directly on physical servers or hosts?

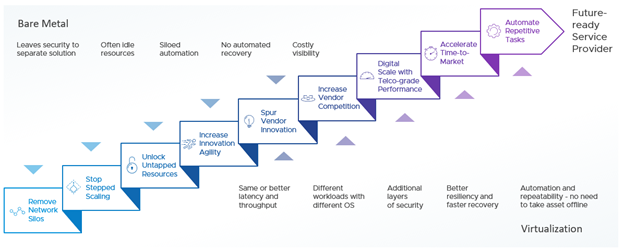

Judging from the heat surrounding this issue, you might think people are debating the best Star Wars movie. You’ll hear lots of strong opinions and lots of emotion. However, when you look at the facts, there’s very little controversy. Everything we know about running application infrastructures shows there’s little advantage to deploying directly on bare metal and significant potential downside.

We’ve had versions of this conversation many times over the last two decades, and the conclusion hasn’t changed: forgoing virtualization offers a short-term fix to a long-term problem. Yes, you might save a little money today. But when weighing the total cost of ownership over the life of your investment, you’ll find that running containerized workloads in a virtualized environment dramatically reduces operational complexity, extends the life of your investment, and saves you a lot of money in the long run.

Still not sure? Let’s review the top six reasons why virtualization makes sense.

1. Performance is as good or better.

One of the longstanding arguments on the bare metal side of the debate is that you’ll get better performance. Unlike some hypervisor myths, this one was true in certain cases. But it’s not anymore.

Telco radio access network (RAN) workloads have very strict latency requirements: less than 10 microseconds. In the past, these workloads ran faster on bare metal. But today VMware ESXi hypervisors deliver real-time performance. Industry-standard cyclictest and oslat tests show conclusively that the VMware ESXi hypervisor delivers the same performance, well within the 10-microsecond range, even for the most demanding RAN workloads.

2. Reduce capital and operational expenses (CapEx and OpEx).

When you deploy on a hypervisor, you have the flexibility to mix and match workloads, including workloads that need different operating systems (OSs), on the same physical platform. Put simply, you can run more software on less hardware. When you’re running workloads on bare metal, you can only run one OS per server—which means you’re often limited to running one workload per host. That has big implications for your budget.

First, basic capital outlay. Yes, you avoid paying for virtualization software, but you now need to buy more servers or hosts than you otherwise would. It’s in the OpEx, however, where the disadvantages of bare metal really crystallize. Running more servers means you will need more power, more physical rack space, and more time to maintain additional hardware on an ongoing basis.

That last point is especially important for communication service providers (CSPs) weighing their options for 5G radio access network (RAN) software. Out at cell sites, space is at a premium, but the number of things CSPs want to do there keeps growing. Today, you may only be focused on running virtualized distributed unit (DU) software. But what about tomorrow, when you want to deliver a new edge application or run new xApps to optimize your spectral efficiency? If each of those new apps needs to run on a separate physical host, you’re likely to run out of space long before you can take advantage of them.
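To make the consolidation argument concrete, here’s a minimal sketch that counts hosts under each model. The workload sizes and the 32-core host are hypothetical, the one-workload-per-host rule for bare metal is the simplifying assumption used above, and the sketch ignores hypervisor overhead and any memory or I/O constraints.

```python
def hosts_bare_metal(workloads):
    """One workload per host, per the simplifying assumption in the text."""
    return len(workloads)


def hosts_virtualized(workloads, host_cores):
    """Pack workloads (sized in CPU cores) onto shared hosts, first-fit decreasing."""
    free = []  # cores still available on each host already in use
    for cores in sorted(workloads, reverse=True):
        if cores > host_cores:
            raise ValueError(f"workload needs {cores} cores, host has {host_cores}")
        for i, remaining in enumerate(free):
            if cores <= remaining:
                free[i] -= cores  # fits on an existing host
                break
        else:
            free.append(host_cores - cores)  # no host had room: add a new one
    return len(free)


# Hypothetical cell-site workloads, sized in CPU cores.
workloads = [16, 8, 4, 4, 2, 2]
HOST_CORES = 32  # hypothetical host size

print(hosts_bare_metal(workloads))               # 6
print(hosts_virtualized(workloads, HOST_CORES))  # 2
```

With these made-up numbers, six bare-metal hosts collapse to two shared ones. Real sizing would also have to account for memory, NIC capacity, and real-time CPU pinning.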

3. Unlock trapped capacity.

When each workload requires its own server or host, you must overprovision to support peak utilization. And you end up deploying—and paying to maintain—a lot of capacity that rarely gets used.

If this argument sounds eerily familiar, it’s because we had it back when companies were first virtualizing their data centers. All the same benefits still apply; when you virtualize, you can utilize your infrastructure (or cloud spend) to the fullest. As they have for the last 20 years, hypervisors let you burst capacity from a shared pool of resources during peak loads, instead of having to pre-provision dedicated servers or hosts that mostly sit idle.
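The overprovisioning argument comes down to simple arithmetic: dedicated hosts must each be sized for their own workload’s peak, while a shared pool only has to cover the peak of the combined load. The sketch below uses hypothetical demand figures (in CPU cores per time slot) and hypothetical workload names purely for illustration.

```python
# Hypothetical CPU demand (cores) for three workloads across six time slots.
demand = {
    "du":       [4, 6, 12, 6, 4, 4],
    "edge_app": [2, 2, 3, 10, 3, 2],
    "xapp":     [8, 3, 2, 2, 3, 9],
}


def dedicated_capacity(demand):
    """Each workload gets its own host, sized for that workload's peak."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand):
    """Workloads share a pool, sized for the peak of the combined demand."""
    return max(sum(slot) for slot in zip(*demand.values()))


print(dedicated_capacity(demand))  # 31
print(pooled_capacity(demand))     # 18
```

Because the workloads in this example peak at different times, the pool needs 18 cores where dedicated hosts need 31. The pooled figure can never exceed the dedicated one; how much smaller it is depends on how far apart the peaks fall.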

In a cloud-native world, the benefits of virtualization become even more compelling. You can achieve vastly greater pod and container density than on bare metal: more than six times as many container pods per physical host, according to one independent study.

4. Strengthen security.

Containers do offer a basic level of security, but we all know there is no such thing as 100% secure. Hackers are too smart, increasingly well-funded, and constantly finding ways to circumvent defenses. If a malicious actor escapes the container, they could gain access to the host OS, to other containers on the host, and even other hosts on the network.

You may not be able to completely stamp out the threat, but you can lower your risk by using multiple layers of security. And when you virtualize, you gain one additional layer of segmentation by default. If a malicious actor breaks out of a container, they only gain access to the virtual machine (VM) it’s running in, not the underlying host or the containers running in other VMs. You can also now layer on additional VM-level defenses (micro-segmentation, firewalling, access control) in ways that are impractical when running containers on bare metal.

5. Simplify lifecycle management.

Software updates are a basic fact of life for any application environment. And with 5G introducing more vendors to your network, you can expect more frequent software updates than ever before. If you’re running containers on bare metal, you’ll have to take services offline every time you perform an upgrade or rollback. This makes upgrades much more time-consuming and potentially disruptive to your customers.

Using a hypervisor abstracts your applications and OS from the underlying hardware. You can run multiple software and OS versions, even multiple versions of Kubernetes, simultaneously. This allows you to roll out new code without taking assets offline and rerouting traffic while the upgrade is performed.

6. Improve resiliency.

Kubernetes has come a long way, but there are still areas where it can feel less than fully baked, especially for mission-critical applications. Resiliency is one of those areas. By default, Kubernetes waits about five minutes after a node becomes unresponsive before it starts evicting that node’s pods so they can be rescheduled elsewhere. Even then, it can’t recover the node itself; an administrator has to do that manually.

In a virtualized environment, things are much faster and more automated. If one or more nodes go down, VMware vSphere virtualization software can spot the issue and recover all affected nodes within two to three minutes, well before Kubernetes’s default five-minute window expires. In many cases, the virtualized environment will detect a potential problem with a workload and move that VM before the node ever goes down.
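The timing comparison can be sketched as a rough calculation. The five-minute figure lines up with Kubernetes’s default 300-second toleration for the not-ready and unreachable node taints; the 40-second node monitor grace period used here is an assumption that varies by Kubernetes version, and both values are configurable, so treat the result as illustrative rather than a measurement.

```python
# All values below are assumptions for illustration; check your own cluster.
NODE_MONITOR_GRACE_S = 40   # assumed kube-controller-manager --node-monitor-grace-period
DEFAULT_TOLERATION_S = 300  # default tolerationSeconds for not-ready/unreachable taints
HYPERVISOR_RECOVERY_S = (120, 180)  # the two-to-three-minute recovery figure quoted above


def eviction_starts_after(grace_s=NODE_MONITOR_GRACE_S,
                          toleration_s=DEFAULT_TOLERATION_S):
    """Seconds from node failure until Kubernetes begins evicting its pods."""
    return grace_s + toleration_s


def recovered_before_eviction(recovery_s,
                              grace_s=NODE_MONITOR_GRACE_S,
                              toleration_s=DEFAULT_TOLERATION_S):
    """True if the node is back before pod eviction would begin."""
    return recovery_s < eviction_starts_after(grace_s, toleration_s)


print(eviction_starts_after())  # 340
for recovery_s in HYPERVISOR_RECOVERY_S:
    print(recovery_s, recovered_before_eviction(recovery_s))
```

Under these assumptions, a node restored within three minutes is back well before pod eviction would start. Shortening the toleration or grace period in Kubernetes narrows that gap, at the cost of reacting to brief network blips.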

It Pays to Run on Hypervisors

You may think you’re having déjà vu. Didn’t we hash out most of these questions years ago when organizations were first virtualizing their data centers and adopting cloud computing models? We did. And like then, the advantages of hypervisors remain compelling.

When your application environment is based on virtualized logical infrastructure, you gain more flexibility, efficiency, and control. And those advantages hold just as true today, in the context of containerized workloads, as they did for traditional applications.

Businesses never stand still, and the applications they rely on don’t either. You can deploy your workloads on a virtualized platform that lets you evolve your environment to solve tomorrow’s problems as well as today’s, without having to overhaul your infrastructure. Or not. For a big tech controversy, it doesn’t sound that controversial after all.

Please read our white paper to understand how hypervisor performance supports the RAN transformation.
