My team just announced a new OpenStack distribution for our NFV platform: VMware Integrated OpenStack-Carrier Edition. It is a great solution for customers looking to benefit from a carrier-grade Network Functions Virtualisation (NFV) platform while leveraging OpenStack to run their Virtual Network Function (VNF) workloads. The new distribution is our response to customers asking for a robust and proven NFV environment on which they can monetize NFV services, while also supporting their developers with OpenStack APIs. We've packaged the distribution with our current NFV platform to bring VMware vCloud NFV OpenStack Edition to market. With vCloud NFV-OpenStack, developers benefit from the same Service Level Agreements (SLAs) that the operations team commits to in order to generate revenue. It is THAT solid. How is VMware able to offer such a robust solution? By the time you finish reading this blog post, the answer will be clear.

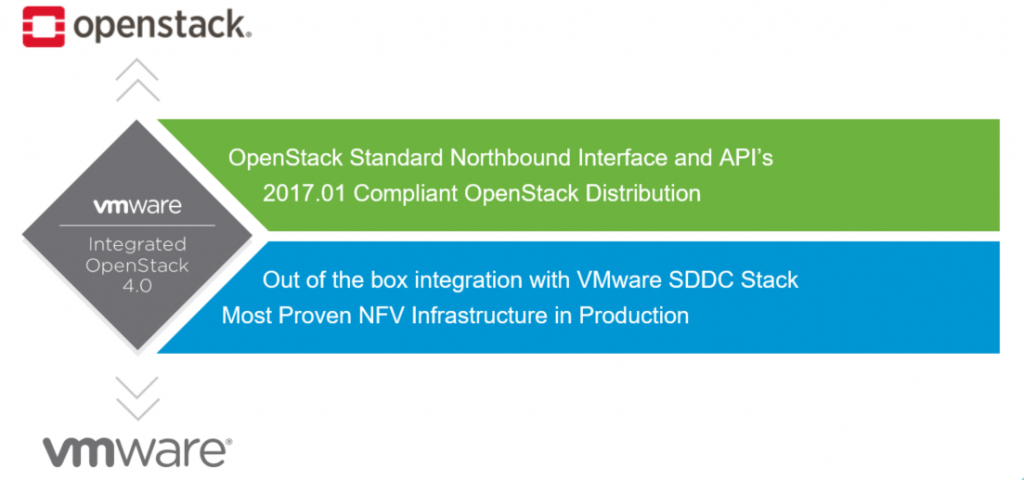

VMware is strongly committed to giving our customers the flexibility to choose the Virtualized Infrastructure Manager (VIM) within the vCloud NFV platform that best meets their business priorities. One of our VIMs is VMware vCloud Director, which many of our customers already love and run in production. The other VIM is VMware Integrated OpenStack (VIO)-Carrier Edition. VMware has offered a DefCore-compliant OpenStack distribution since 2015, and our latest release is an OpenStack 2017.01 Ocata-based distribution. Choosing VIO-CE as the VIM gives customers the best of both worlds: they select their VIM based on the APIs they want to use to deploy NFV services, and they can be assured that it has been fully integrated, tested, and certified with our NFV infrastructure. We have also incorporated new VIO-CE-focused test cases into our VNF interoperability program, VMware Ready for NFV, and are actively testing interoperability between leading VNFs and both VIMs. To date, 21 of our 43 Ready for NFV certified VNFs already support earlier versions of VMware Integrated OpenStack, and they will be re-certified on VIO-CE as soon as the code goes GA. Our VNF partners are always free to choose which VIM in vCloud NFV they test their VNFs with, so having 21 partners supporting VMware Integrated OpenStack is a testament to their own customers' interest in OpenStack.

With the introduction of vCloud NFV-OpenStack, we are updating the Ready for NFV program scope to give our VNF partners a way to make sure they benefit from the new carrier-specific capabilities we have introduced in VIO-CE. The new tests also help communication service providers use these capabilities as soon as they install the new distribution. In essence, a production-ready OpenStack environment for running revenue-generating network services is a reality. Obviously.

A few of the new VIO-CE features that we added to the VMware Ready for NFV program scope are highlighted below.

- Multi-tenancy and VNF resource reservation – customers who are used to the abstraction layers available in vCloud Director love splitting their physical data centre into purpose-built constructs. They tend to carve out virtual data centres for various VNFs using the Organization Virtual Data Center (OvDC) construct in vCloud Director. OpenStack has no equivalent construct: data centres are broken down into projects, and resources are not confined to specific virtual data centres. Typically, resources are allocated on a first-come, first-served basis. With VIO-CE, we introduce a new concept called the Tenant Virtual Data Center (Tenant vDC). In the updated Ready for NFV program, we test that a Tenant vDC can host a VNF while enforcing strong resource isolation, so that one workload does not infringe on another. The benefit is that the network provider can deliver SLA-based services on shared infrastructure without worrying whether the resources assigned to a VNF will be available at all times.

- Dynamic Resource Scaling – one of the benefits of moving workloads from dedicated hardware to software is the ability to quickly give a virtualized function more hardware resources when it needs them. There are two ways to scale resources: scale-out and scale-up. In our work with VNF partners, we see that scaling out in response to workload demand is well supported. This method is somewhat limited, because it creates another instance of the VNF component that requires the same amount of resources as the instance already running. Finer-grained control is possible by resizing a live network function: adding just the required resources to a running VNF component without rebooting it. This live resizing is supported by VIO-CE and is, obviously, tested in the Ready for NFV program scope.

- Advanced Networking – VIO-CE introduces several advanced networking capabilities that are important for the NFV use case and are covered by our program. For example, the ability to attach various types of network interfaces to a VNF component using Neutron is crucial, and we see this functionality as especially important for data plane intensive workloads. In some cases, the VNF component needs a pass-through interface directly to the physical Network Interface Card (NIC) alongside some virtualized interfaces. Data plane traffic typically uses the direct pass-through path, while management and control plane interfaces are happy to use our VMXNET3 para-virtualized network interface. This is a clear operational use case that we translated into our Ready for NFV test plan. We have also seen use cases where the VNF component uses VLAN tags to scale the number of virtual interfaces, which is especially useful for NFV use cases such as virtual routers or Packet Gateways. The ability of the VNF component to tag traffic with a VLAN is also tested in our Ready for NFV program.
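
The reservation and resizing behaviour described in the first two bullets can be illustrated with a small admission model. This is a minimal, hypothetical Python sketch assuming resources are measured only in vCPUs and memory: `ProviderPool`, `TenantVDC`, and `Resources` are illustrative names, not VIO-CE or OpenStack APIs, and the model does not reflect how VIO-CE actually implements Tenant vDCs. It shows reservations carved out of shared capacity, each tenant admitting workloads only within its own reservation, and why a scale-out (a full extra copy) can fail where a live scale-up (just the delta) still fits.

```python
"""Hypothetical sketch of Tenant vDC-style reservation and scaling.

Illustrative only: these classes are NOT the VIO-CE or OpenStack API.
"""
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class Resources:
    """Simplified resource amount: vCPUs and memory in GB (assumed units)."""
    vcpu: int
    mem_gb: int

    def __add__(self, other: "Resources") -> "Resources":
        return Resources(self.vcpu + other.vcpu, self.mem_gb + other.mem_gb)

    def fits_within(self, limit: "Resources") -> bool:
        return self.vcpu <= limit.vcpu and self.mem_gb <= limit.mem_gb


ZERO = Resources(0, 0)


@dataclass
class TenantVDC:
    """Hypothetical tenant virtual data center with a fixed reservation."""
    name: str
    reservation: Resources
    vnfcs: Dict[str, Resources] = field(default_factory=dict)

    def used(self) -> Resources:
        total = ZERO
        for size in self.vnfcs.values():
            total = total + size
        return total

    def _admit(self, extra: Resources) -> bool:
        # Admission is checked only against this tenant's own reservation,
        # so other tenants' usage can never eat into it.
        return (self.used() + extra).fits_within(self.reservation)

    def place(self, vnfc: str, size: Resources) -> bool:
        """Deploy a VNF component if it fits in the remaining reservation."""
        if vnfc in self.vnfcs or not self._admit(size):
            return False
        self.vnfcs[vnfc] = size
        return True

    def scale_out(self, vnfc: str, new_name: str) -> bool:
        """Scale-out: a new instance needs the full footprint of the original."""
        if vnfc not in self.vnfcs:
            return False
        return self.place(new_name, self.vnfcs[vnfc])

    def scale_up(self, vnfc: str, delta: Resources) -> bool:
        """Scale-up (live resize): only the additional resources are requested."""
        if vnfc not in self.vnfcs or not self._admit(delta):
            return False
        self.vnfcs[vnfc] = self.vnfcs[vnfc] + delta
        return True


@dataclass
class ProviderPool:
    """Hypothetical shared physical capacity that reservations are carved from."""
    capacity: Resources
    vdcs: Dict[str, TenantVDC] = field(default_factory=dict)

    def create_vdc(self, name: str, reservation: Resources) -> Optional[TenantVDC]:
        reserved = ZERO
        for vdc in self.vdcs.values():
            reserved = reserved + vdc.reservation
        # Refuse to over-commit: the sum of all reservations must fit the pool.
        if name in self.vdcs or not (reserved + reservation).fits_within(self.capacity):
            return None
        vdc = TenantVDC(name, reservation)
        self.vdcs[name] = vdc
        return vdc


if __name__ == "__main__":
    pool = ProviderPool(capacity=Resources(vcpu=32, mem_gb=64))
    core = pool.create_vdc("packet-core", Resources(vcpu=12, mem_gb=24))
    assert core is not None
    core.place("pgw-1", Resources(vcpu=8, mem_gb=16))
    print(core.scale_out("pgw-1", "pgw-2"))  # False: a full copy does not fit
    print(core.scale_up("pgw-1", Resources(vcpu=2, mem_gb=4)))  # True: delta fits
    print(core.used())  # Resources(vcpu=10, mem_gb=20)
```

Because each `TenantVDC` checks requests only against its own reservation, a busy tenant that exhausts its capacity cannot consume another tenant's reserved resources, which mirrors the isolation property described for Tenant vDCs. The same reservation also shows why live resizing is the finer-grained option: it asks for the delta rather than a whole new VNF component.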

One of the nice things about a modular architecture is the ability to swap modules. VMware customers with a vCloud NFV environment can experiment with OpenStack alongside their production setup. They do not need to put all their eggs in one basket: they can evaluate VIO-CE in the lab while continuing to run production workloads on vCloud Director. And with an ever-increasing number of VNF partners supporting VIO-CE, our customers also have a production-ready OpenStack cloud.

Jambi

Discover more from VMware Telco Cloud Blog