By James Wirth, Consulting Architect, VMware and Evan Anderson, Sr. Staff Engineer, VMware

In this post, we’re going to take a look at how and why traditional applications can benefit from the addition of serverless compute capability.

Serverless Compute Capabilities

Serverless compute systems consist of a number of components, as described in the post Anatomy of a Functions as a Service (FaaS) Solution. Such FaaS solutions form what is more broadly referred to as ‘serverless’. Together, these components form a cohesive application platform that presents the following features and capabilities to hosted applications:

Dynamic Scaling

Dynamic scaling refers to the capability of the infrastructure to automatically scale up, scale down and scale to zero. This is extremely desirable in a pay-per-use scenario such as a public cloud, but it can also be useful in an on-premises environment. Properly leveraged, it can assure application owners that their apps will scale to meet unexpected demand without human intervention. This in turn allows further oversubscription and sharing of resources across workloads with different peak usage times, which makes more efficient use of the infrastructure. This additional efficiency can also lower a datacenter’s carbon footprint.
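As a rough illustration of scaling up, down and to zero, the sketch below computes how many function instances to run for a given load. The function name, its parameters and the idea of a per-instance concurrency target are assumptions made for this example. It is not the algorithm of any particular serverless platform.

```python
import math


def desired_instances(in_flight_requests: int,
                      target_per_instance: int,
                      max_instances: int) -> int:
    """Return how many function instances to run for the current load.

    All names here are hypothetical and chosen for illustration only.
    """
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    if in_flight_requests <= 0:
        # No work to do: release every instance (scale to zero).
        return 0
    # Round up so that no instance exceeds its concurrency target.
    needed = math.ceil(in_flight_requests / target_per_instance)
    # Cap at the capacity the platform is allowed to consume.
    return min(needed, max_instances)
```

With a target of 10 requests per instance and a cap of 5 instances, 25 in-flight requests would call for 3 instances, and an idle period would call for none.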

Event-Based Programming

Serverless compute systems used for event- or stream-based computing rely heavily on message queues to map events to the execution of specific functions.
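The mapping of events to functions can be sketched with an in-process queue. This is a simplified stand-in for a real message queue, and the `EventRouter` class, its method names and the event shape (a dictionary with a `type` key) are all assumptions made for illustration.

```python
import queue
from collections import defaultdict


class EventRouter:
    """Toy event router: maps event types to handler functions."""

    def __init__(self):
        self._handlers = defaultdict(list)  # event type -> list of functions
        self._queue = queue.Queue()         # stand-in for a message queue

    def subscribe(self, event_type, handler):
        """Register a function to run for every event of this type."""
        self._handlers[event_type].append(handler)

    def publish(self, event):
        """Place an event on the queue; producers do not call functions directly."""
        self._queue.put(event)

    def drain(self):
        """Deliver every queued event to its subscribed functions."""
        results = []
        while True:
            try:
                event = self._queue.get_nowait()
            except queue.Empty:
                break
            # Events with no subscribers are simply dropped in this sketch.
            for handler in self._handlers.get(event["type"], []):
                results.append(handler(event))
        return results
```

A producer would call `publish({"type": "order.created", "order_id": 42})`, and any function subscribed to `order.created` would run the next time the queue is drained. The producer never needs to know which functions exist.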

Rapid Development

Since the only code that a developer needs to add to a serverless compute system is the function code itself, development can iterate extremely rapidly. In addition, the infrastructure dependency management requirements on the developer are light, allowing them to concentrate on what they do best — writing code that delivers business value.

Serverless Storage

Distributed storage systems such as object stores, NoSQL databases, big data platforms and so on are often paired with FaaS systems, since serverless functions are ephemeral and do not retain state between invocations. Functions are extremely useful for ingesting, moving and manipulating data, and so pairing them with a purpose-built data storage solution is often a necessity.

Serverless Compute Use Case: Extending the Traditional Application

Overview of the Traditional Application

Traditional applications come in many forms, but perhaps the most recognizable is the LAMP stack (Linux, Apache, MySQL and PHP).

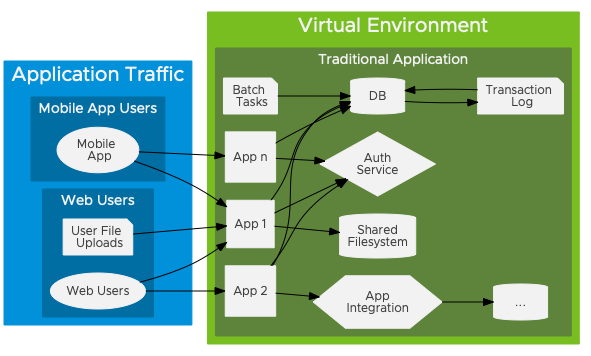

A traditional application based on a LAMP stack might look something like the following diagram:

Figure 1 – Traditional Monolithic Application

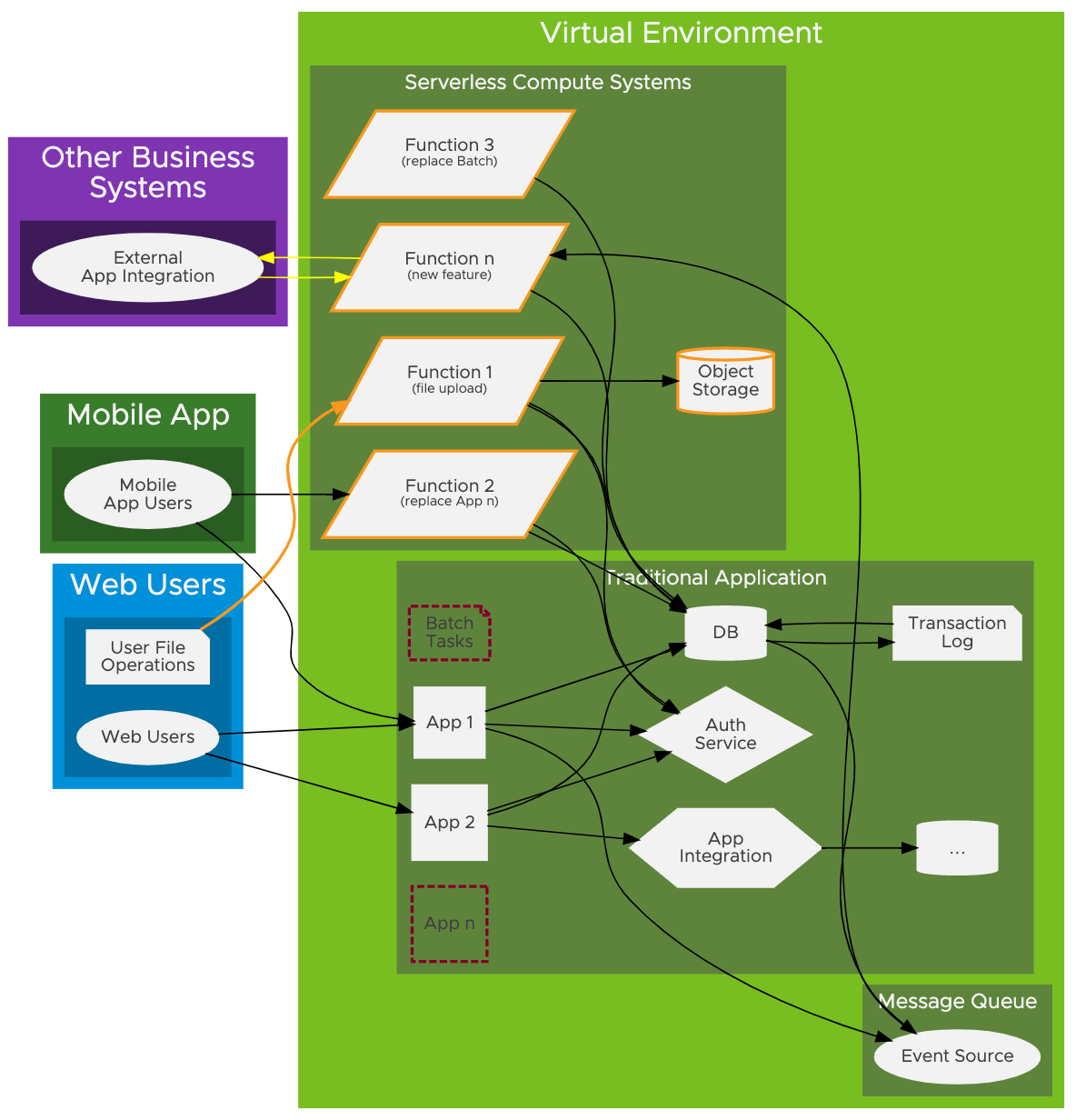

Adding a serverless compute platform to the above traditional application architecture, such as the platform described in the blog post Anatomy of a Functions as a Service (FaaS) Solution, would allow application developers to augment the existing application with new capabilities such as:

- File generation, upload and download

- Auto scalability for mobile app support

- Migration of scheduled batch jobs to event-based tasks

- Message bus for integration with additional systems

Figure 2 – Traditional Application with Serverless Compute

In the above diagram, we’ve augmented the traditional application with serverless compute components. The diagram is not intended to be definitive; rather, it provides a high-level view of the art of the possible. The key changes are as follows:

- Mobile app traffic is directed to functions rather than the app servers

- The app servers and database send messages to an event queue so that events trigger functions, rather than relying on scheduled batch jobs

- User file operations have been directed to functions to reduce load on the app servers

- External systems have been integrated via functions

- App n can be retired, reducing costs

Why would adopting this model be successful?

Many traditional applications suffer from several challenges including:

- Large monolithic codebases that are difficult to maintain and update

- Complex dependencies

- Difficulty in scaling to meet unexpected load

- Complexity and risk of updating the monolithic codebase to integrate with other business systems

The prevailing wisdom has often been that these applications need to be modernized. In a lot of cases that would mean moving to a modern app platform. However, adopting a modern app strategy is likely to involve a very large and time-consuming effort, and the value of that effort may come into question. If the original challenges that led to the modernization discussion could be met in a different way, without a full-scale refactoring but on a more piecemeal or as-needed basis, why not consider that?

Depending on the exact challenges, of course, one option could be the addition of a serverless compute platform, which could help address the following areas:

Faster, More Granular Deployments

Since a variety of the application’s components, including external integrations, the mobile app backend and file operations, are now handled by functions, updates or changes to these components can occur independently of the traditional application’s codebase and of one another. This has several advantages, including the ability to make smaller, more frequent changes, and the isolation of most bugs and failures to specific application features.

File Operations – Generation, Upload and Download Challenges

Adding file operations or improving existing file operations is an excellent use case for serverless compute systems. Object stores are a good choice for file storage, and using them can take load, from both a performance and a storage perspective, away from the application servers. Furthermore, when file operations are handled by functions, they can be triggered by events, scale up to meet unexpected load and scale back down when no longer needed.
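To make the file generation case more concrete, here is a minimal sketch of a function that builds a CSV report in response to an event and writes it to an object store. The event fields (`report_id`, `rows`), the key layout and the use of a plain dictionary as a stand-in for the object store are all assumptions for illustration. A real deployment would use the client library of whichever object store is in place.

```python
import csv
import io


def generate_report(event: dict, object_store: dict) -> str:
    """Handle a hypothetical 'report requested' event.

    Builds a CSV file in memory and stores it under a key derived from
    the event, so the app servers never touch the file contents.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["item", "quantity"])
    for row in event["rows"]:
        writer.writerow([row["item"], row["quantity"]])

    # The dictionary stands in for an object store bucket in this sketch.
    key = f"reports/{event['report_id']}.csv"
    object_store[key] = buffer.getvalue().encode("utf-8")
    return key
```

Because the function keeps nothing in memory between invocations, many copies can run in parallel during a burst of report requests and disappear again afterwards.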

New and Unpredictable Load from Mobile

A mobile application that is being added to an existing traditional application could be based entirely on a serverless architecture. Website usage patterns on mobile phones are very different from those on traditional laptops and desktops, and mobile devices are starting to overtake traditional web access. Given this trend, it makes sense to add new functionality for mobile users to existing apps as functions. Like file generation, mobile backends can also benefit from dynamic scaling.

Lack of Integration with other Business Systems

A serverless compute system can provide an excellent means of transferring data between existing systems. Together, a message bus, an API gateway and functions that scale to perform the data transfer operations effectively form the “glue” between two disparate systems.
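A "glue" function is often little more than a translation between two record formats. The sketch below assumes a hypothetical CRM record and a hypothetical billing record. Neither schema comes from a real product, and the field names are invented for the example.

```python
def crm_to_billing(crm_record: dict) -> dict:
    """Translate a record from a hypothetical CRM schema into the shape
    a hypothetical billing system expects."""
    full_name = f"{crm_record['first_name']} {crm_record['last_name']}".strip()
    return {
        "customer_id": crm_record["id"],
        "display_name": full_name,
        # Normalize the address so both systems compare emails consistently.
        "email": crm_record["email"].lower(),
    }
```

In practice, a function like this would receive the source record from the message bus or API gateway and forward the result to the target system, leaving both systems unaware of each other's formats.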

Migrate Scheduled Batch Tasks to Event Tasks

Applications that regularly run scheduled jobs, either to check for the presence of something or to complete some type of batch task, usually have an underlying event associated with those jobs. Changing these into event-driven tasks would make the application more responsive to changes and likely more efficient. Rather than waiting for a scheduled task or running frequent polling tasks, the event can simply trigger a function that completes the task.
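The difference between the two styles can be sketched side by side. Both functions below are hypothetical, as is the order record with its `id` and `status` fields. The first scans everything on a schedule, while the second handles one order at the moment its event arrives.

```python
def nightly_batch(orders: list) -> list:
    """Scheduled style: scan every order and process those still pending."""
    processed = []
    for order in orders:
        if order["status"] == "pending":
            order["status"] = "processed"
            processed.append(order["id"])
    return processed


def on_order_created(event: dict) -> str:
    """Event style: process a single order as soon as its event arrives."""
    order = event["order"]
    order["status"] = "processed"
    return order["id"]
```

The batch version does work proportional to the whole table on every run and leaves new orders waiting until the next schedule. The event version does work only when there is something to do.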

Conclusion

Serverless compute systems are being widely adopted and can provide definite benefits to traditional and microservice-architected applications alike. Infrastructure providers should, therefore, seek to understand the requirements of their application-developing customers and help provide them with the infrastructure capabilities they need.

About the Authors:

Evan Anderson is a member of the Modern Applications Business Unit with a focus on serverless and developer enabling technologies. He’s also a contributor to Knative and other OSS projects. He got his start in SRE before moving to cloud infrastructure development.

James Wirth is a member of the Research Labs team within VMware Professional Services and focuses on DevOps, Site Reliability Engineering, Multi-Cloud architectures and new and emerging technologies. He is a proven cloud computing and virtualization industry veteran with over 15 years’ experience leading customers through their cloud computing journey.