Part 2 / 3

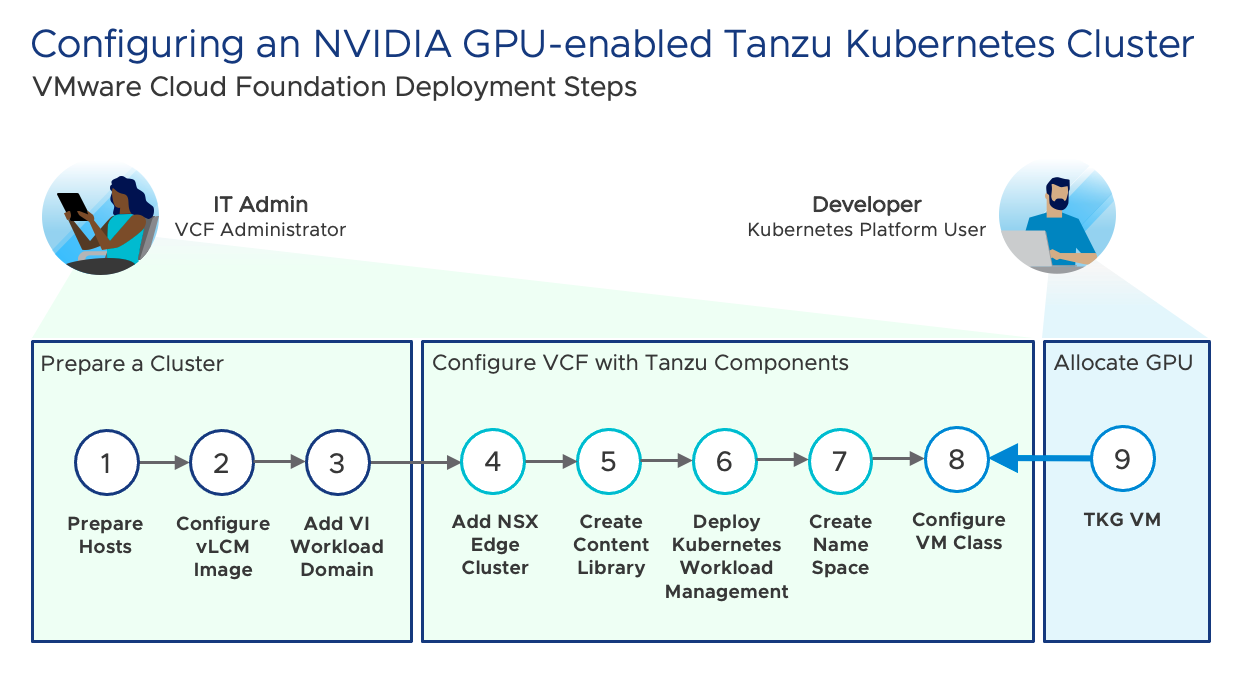

In Part 1 of this 3-part technical blog, we discussed how to prepare VMware Cloud Foundation as an AI / ML platform using NVIDIA A30 and A100 GPU devices.

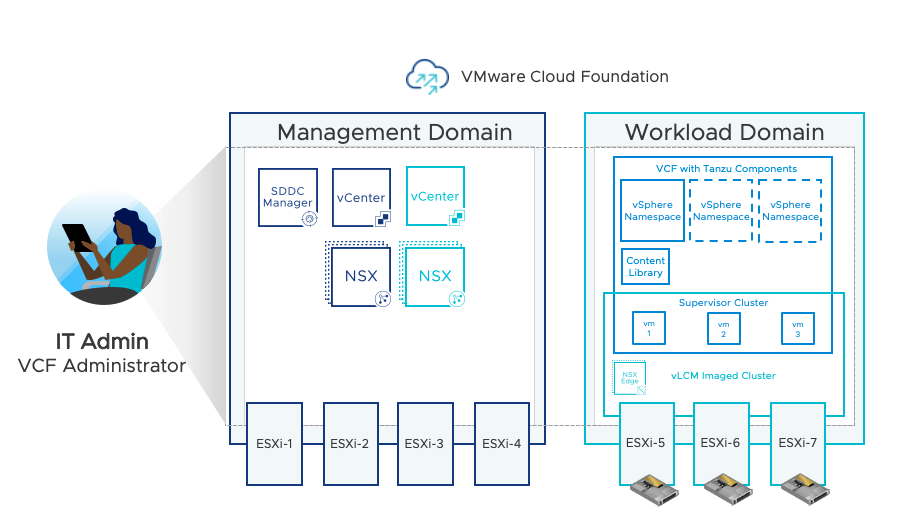

We discussed the first three (3) steps for a VCF Administrator (under the persona of an IT Admin) to prepare a GPU-enabled cluster in readiness to support containerized applications running on Tanzu Kubernetes Clusters.

These steps included:

- Preparing the Hosts

- Configuring a vSphere Lifecycle Manager (vLCM) Image

- Adding a VI Workload Domain.

In this article we will discuss the process of configuring the VCF workload domain with Tanzu. This includes the configuration of an NSX Edge cluster, creating a Content Library, deploying Kubernetes Workload Management, and creating a vSphere Namespace. Each of these steps is carried out by a VCF Administrator.

We will commence at Step 4 and take you through to Step 7.
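As a rough illustration of how Steps 4 to 7 depend on one another, the sketch below models them as a small dependency graph and sorts it. The step names are hypothetical labels, and the prerequisite edges reflect our reading of this article (the Edge cluster and the Content Library feed into Workload Management, which in turn precedes the vSphere Namespace). It is not an SDDC Manager API.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical step labels; each maps to the set of steps it depends on.
PREREQUISITES = {
    "step4_nsx_edge_cluster": set(),
    "step5_content_library": set(),
    "step6_workload_management": {
        "step4_nsx_edge_cluster",
        "step5_content_library",
    },
    "step7_vsphere_namespace": {"step6_workload_management"},
}


def ordered_steps(prerequisites):
    """Return the steps in an order that respects every prerequisite."""
    return list(TopologicalSorter(prerequisites).static_order())


order = ordered_steps(PREREQUISITES)
print(order)
```

Steps 4 and 5 have no dependency on each other in this model, so either may come first. Step 6 always follows both, and Step 7 is always last.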

Step 4: Configure an NSX Edge Cluster

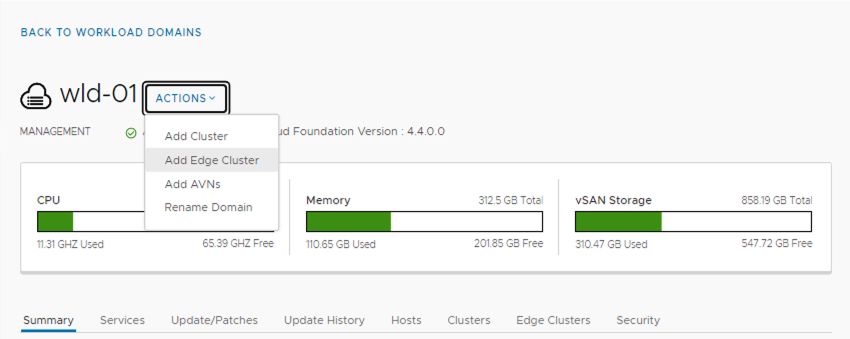

SDDC Manager contains automated workflows to deploy, scale and lifecycle manage a secure VMware Cloud. Prior to configuring Kubernetes Workload Management, an NSX Edge Cluster needs to be configured. An NSX Edge Cluster comprises two (2) or more NSX Edge node VMs. SDDC Manager automation simplifies the deployment and configuration of an NSX Edge Cluster.

NSX Edge Clusters provide logical routing to external networks and network services such as load balancing and NAT. The underlying network topologies needed to support Tanzu Kubernetes Clusters are built into NSX-T, which is deployed as part of the VCF full stack.

Within SDDC Manager, navigate to the VI Workload Domain that contains the GPU-enabled cluster and select Add Edge Cluster from the Actions menu.

Click here for further detail and a demo on configuring an NSX Edge Cluster within VMware Cloud Foundation.

Step 5: Create a Subscribed Content Library

vSphere 7.0 introduced the inherent capability to run containerized workloads as vSphere Pods, which run as virtual machines directly on the hypervisor. While there is no reason developers cannot use vSphere Pods, it’s worth mentioning that many will prefer to deploy Tanzu Kubernetes Clusters (TKCs). TKCs allow Kubernetes Platform Users to pick the version of Kubernetes they use and to lifecycle/patch Kubernetes on their own, and they provide a “consistent upstream compatible” Kubernetes implementation.

Note: GPU resources can be assigned to VMs when using the Tanzu Kubernetes Grid service. GPU resources are not supported for use with Pod VMs running natively on vSphere.

VCF with Tanzu enables developers to quickly deploy Tanzu Kubernetes clusters (TKCs). A TKC comprises a set of purpose-built virtual machines that are deployed into a vSphere Namespace. Kubernetes is enabled on the virtual machines to provide an isolated, upstream compatible Kubernetes instance.

The virtual machines running within a TKC comprise TKC control plane nodes and TKC worker nodes. The TKC worker node VMs are the hosts on which Kubernetes runs containers.

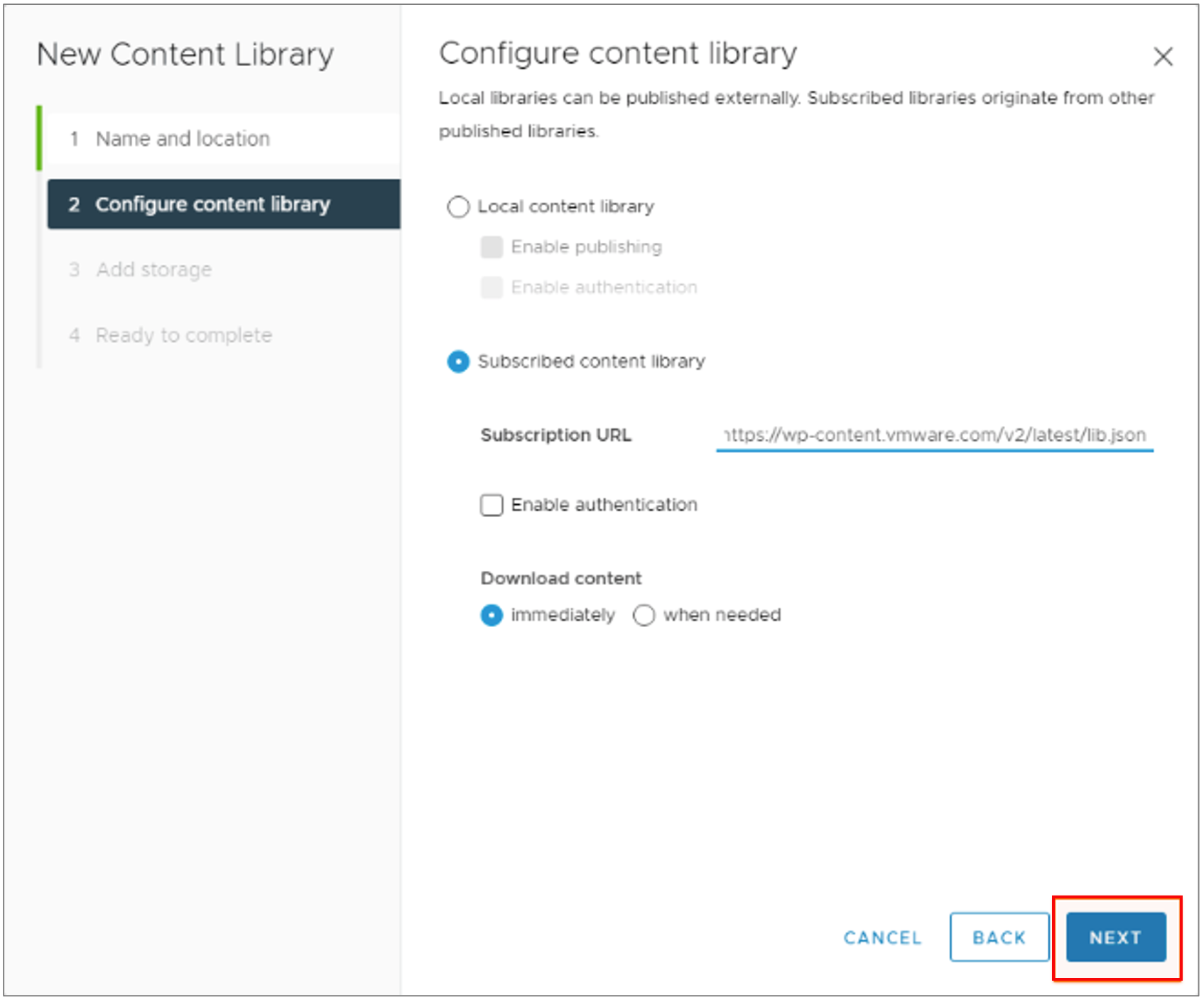

The VMs used for TKCs are deployed from pre-configured OVA images that are downloaded and stored in a vSphere Content Library.

Creating a Content Library in the vSphere client is a required step that must be completed before deploying TKCs. As new releases of Kubernetes become available, updated VM images used for the TKCs are created by VMware and hosted from a central repository. The VCF Administrator can subscribe to this repository to automatically download new/updated images as they become available.
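To make the subscription idea concrete, here is a minimal sketch of the comparison a subscribed library conceptually performs: find the images that are new or updated in the publisher's catalog relative to the local copy. The image names, version strings and the `images_to_sync` helper are all hypothetical. The real synchronization is handled by vSphere and is not reproduced here.

```python
def images_to_sync(published: dict[str, str], local: dict[str, str]) -> list[str]:
    """Return names of images that are missing locally or differ in version."""
    return sorted(
        name
        for name, version in published.items()
        if local.get(name) != version
    )


# Hypothetical catalogs: image name -> version string.
published_catalog = {"image-a": "v1", "image-b": "v2", "image-c": "v1"}
local_library = {"image-a": "v1", "image-b": "v1"}

pending = images_to_sync(published_catalog, local_library)
print(pending)  # ['image-b', 'image-c']
```

Here `image-b` is picked up because its version changed and `image-c` because it is new, while `image-a` is already current and is skipped.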

Step 6: Configure the VI Workload Domain for Kubernetes Workload Management

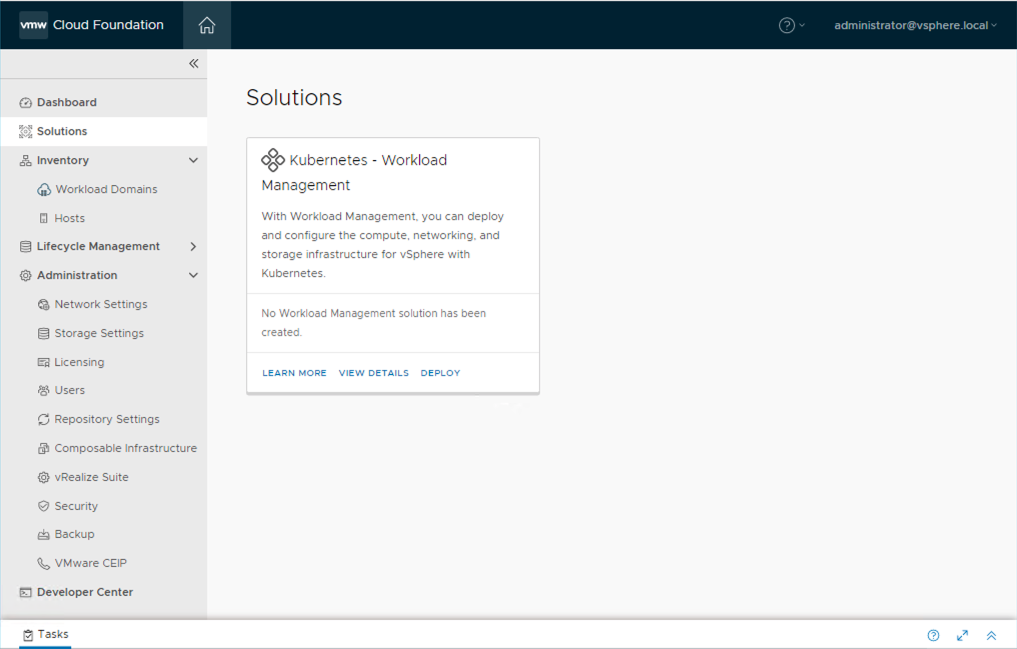

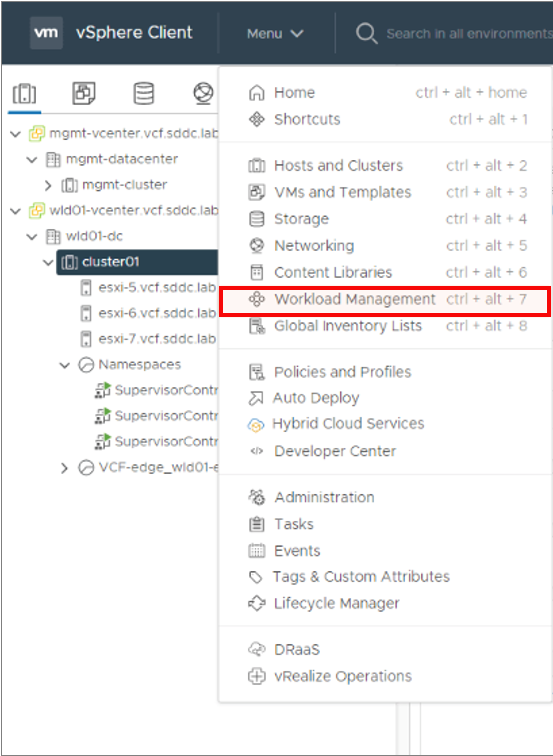

To deploy VMware Cloud Foundation with Tanzu, the VCF Administrator can now commence configuring the VI Workload Domain for Kubernetes Workload Management using SDDC Manager.

SDDC Manager performs a series of checks against the workload domain cluster to ensure it is healthy and ready for vSphere with Kubernetes to be enabled.

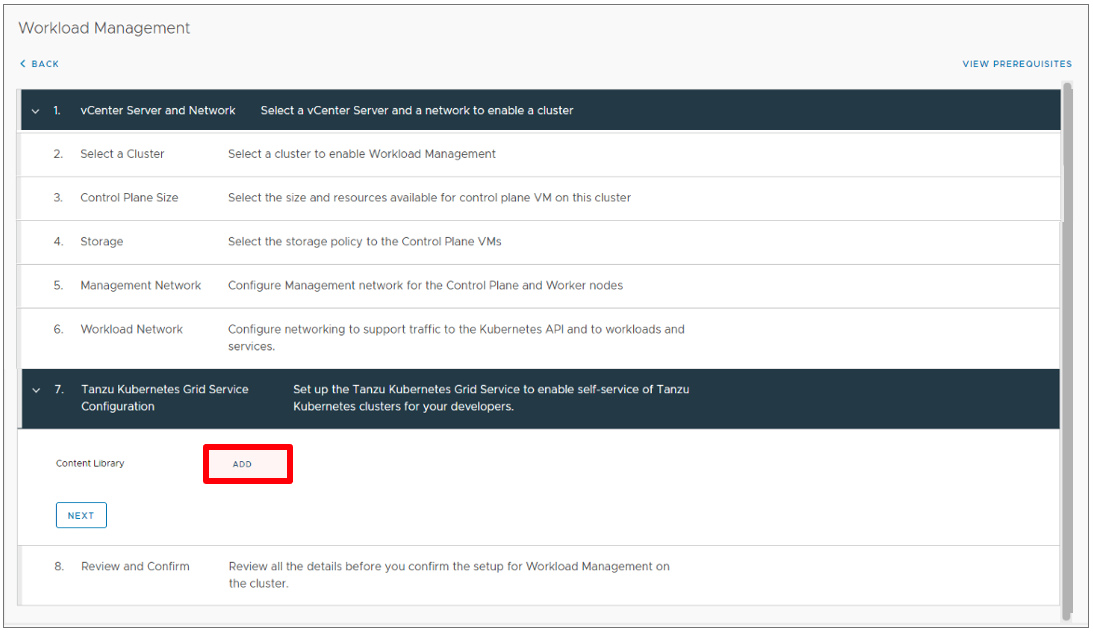

The configuration of the Kubernetes Workload Control Plane (WCP) is completed using the vSphere client and consists of:

- Deploying three (3) special purpose “supervisor control plane VMs” on a vSphere Cluster and instantiating the K8s instance.

- Pushing the container runtime and spherelet VIBs to the ESXi hosts.

- Creating and configuring the related network objects in NSX-T.

- Attaching the Subscribed Content Library for TKCs to use.

Note: A minimum of three (3) hosts is required for the vSphere cluster. Tanzu deploys three (3) supervisor nodes (VMs) as part of the Kubernetes Supervisor cluster, each of which needs to run on its own host. The Supervisor cluster has a direct 1:1 relationship with the vSphere cluster.
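The three-host requirement follows directly from the one-VM-per-host rule. The sketch below illustrates that rule with a hypothetical `place_supervisor_vms` helper and made-up host names. It is an illustration of the constraint only, not how vSphere actually schedules the supervisor VMs.

```python
SUPERVISOR_VM_COUNT = 3  # three supervisor control plane VMs, per the note above


def place_supervisor_vms(hosts):
    """Assign each supervisor VM to a distinct host, or fail if too few hosts."""
    unique_hosts = list(dict.fromkeys(hosts))  # de-duplicate, keep order
    if len(unique_hosts) < SUPERVISOR_VM_COUNT:
        raise ValueError(
            f"need at least {SUPERVISOR_VM_COUNT} hosts, got {len(unique_hosts)}"
        )
    return {
        f"supervisor-{index + 1}": host
        for index, host in enumerate(unique_hosts[:SUPERVISOR_VM_COUNT])
    }


# Hypothetical host names for a four-host GPU-enabled cluster.
placement = place_supervisor_vms(["esxi-01", "esxi-02", "esxi-03", "esxi-04"])
print(placement)
```

With only two hosts the helper raises a `ValueError`, mirroring the fact that the third supervisor VM would have nowhere to run on its own.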

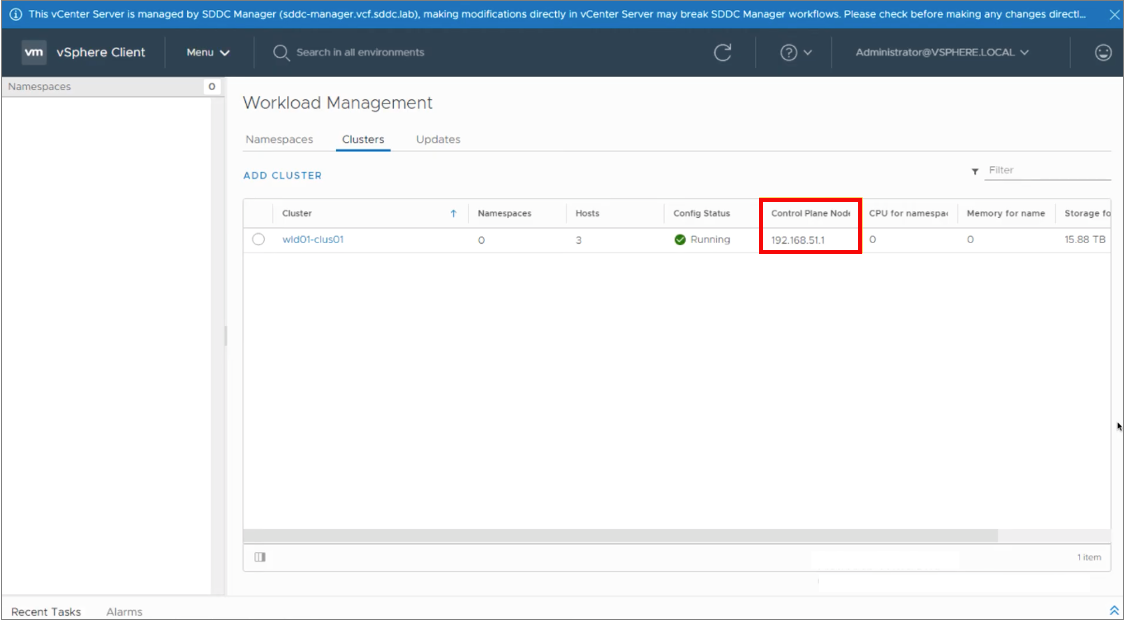

Once completed, the Kubernetes control plane can be accessed via the IP address which was configured as part of the workflow.

Click here for further detail and a demo on configuring Kubernetes Workload Management and instantiating the Kubernetes control plane within VMware Cloud Foundation with Tanzu.

Step 7: Create a vSphere Namespace for Tanzu Kubernetes Clusters

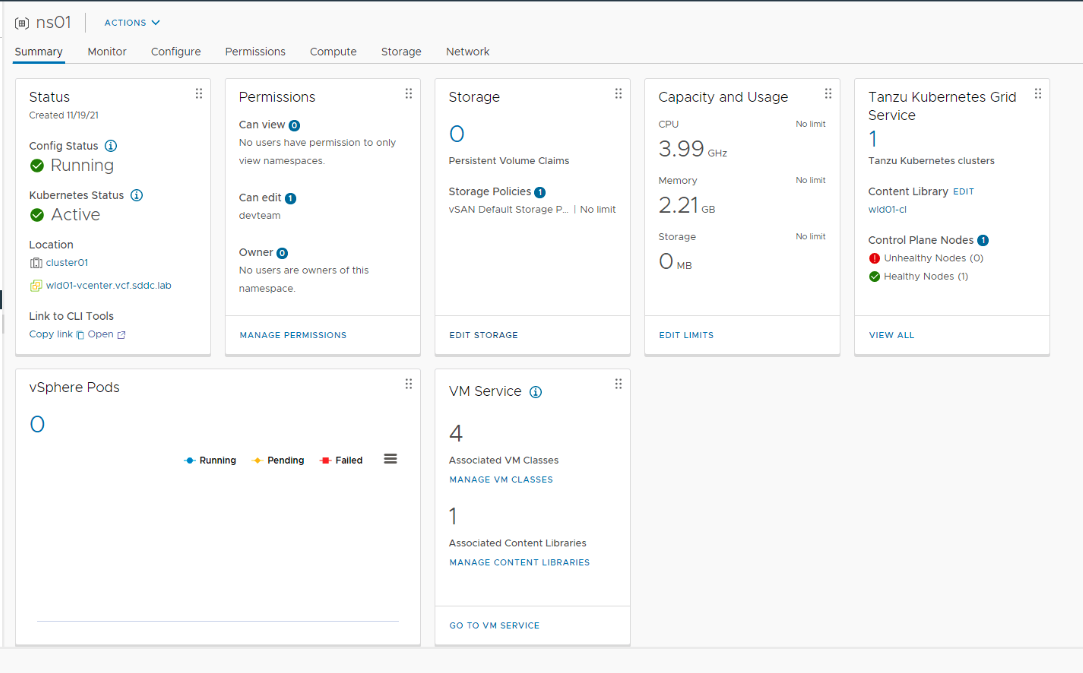

Once Kubernetes Workload Management has been configured, the vSphere client can be used by the VCF Administrator to configure a vSphere Namespace. vSphere Namespaces are sometimes referred to as Supervisor Cluster Namespaces and are used to:

- Control and manage access to the Kubernetes control plane.

- Set and enforce resource consumption limits of CPU, memory and storage resources.

- Assign the Content Library where Tanzu cluster images will be sourced.

Multiple vSphere Namespaces can be configured within a vSphere cluster which has been configured for Workload Management.

Prior to granting Kubernetes Platform Users access to the vSphere Namespace, it is important to first configure any resource limits for CPU, memory and storage and associate the vSphere Namespace with a Content Library. When using GPU devices, a VM Class will also need to be configured before Kubernetes Platform Users start deploying Tanzu Kubernetes clusters.
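The checklist above can be sketched as a small readiness check to run before handing the namespace over to Kubernetes Platform Users. The `NamespaceConfig` fields, units and the `outstanding_items` helper are hypothetical and do not correspond to a vSphere API. For simplicity, this sketch assumes all three resource limits are expected to be set.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class NamespaceConfig:
    """Hypothetical summary of a vSphere Namespace's configuration."""
    cpu_limit_mhz: Optional[int] = None
    memory_limit_mib: Optional[int] = None
    storage_limit_mib: Optional[int] = None
    content_library: Optional[str] = None
    vm_classes: list = field(default_factory=list)
    uses_gpu: bool = False


def outstanding_items(ns: NamespaceConfig) -> list:
    """List what still needs configuring before users are granted access."""
    items = []
    for name in ("cpu_limit_mhz", "memory_limit_mib", "storage_limit_mib"):
        if getattr(ns, name) is None:
            items.append(f"set {name}")
    if not ns.content_library:
        items.append("associate a Content Library")
    # A VM Class is only required in this sketch when GPU devices are in use.
    if ns.uses_gpu and not ns.vm_classes:
        items.append("configure a VM Class for GPU use")
    return items


# Hypothetical namespace: limits and library are set, VM Class is not.
ns = NamespaceConfig(
    cpu_limit_mhz=20000,
    memory_limit_mib=65536,
    storage_limit_mib=512000,
    content_library="tkc-images",
    uses_gpu=True,
)
todo = outstanding_items(ns)
print(todo)  # ['configure a VM Class for GPU use']
```

An empty list means nothing in this checklist is outstanding and access can be granted.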

The diagram below shows an overview of the key components deployed so far within a VMware Cloud Foundation environment configured with a GPU-enabled workload domain and VCF with Tanzu.

Click here for further detail on configuring VMware Cloud Foundation with Tanzu.

In Part 3 of this blog series we will discuss how a VCF Administrator can configure a set of NVIDIA vGPU profiles and assign them to a vSphere Namespace using a Custom VM Class. At this point, Kubernetes Platform Users can then self-assign a GPU profile to a Kubernetes worker node VM. A container within a TKC Worker Node VM can then be configured to consume the resources from the GPU profile.
