In my last post in this series, I discussed why, if you already have vCenter, you still need to consider adding another virtual infrastructure management tool. While vCenter is an awesome centralized management tool and offers performance insight, it wasn’t intended to be an enterprise-grade performance, resource optimization, and capacity analysis tool like vCenter Operations Manager.

In this post, let’s assume that, based on my last post, you have decided to look beyond vCenter to manage your growing virtual infrastructure. With so many tools available, how do you tell them apart, narrow the field down to just a few to test, and finally select a single tool to recommend that your company purchase? As a former IT manager who has been through this process, I can tell you from first-hand experience that doing a thorough job of it is not easy. Sure, you could select a tool for superficial reasons, such as the charm of the salesperson or a great ad in a magazine. Really comparing and testing tools, and choosing one for quantitative reasons, is tough: not only do you have to find the similarities and differences, but also where tools “are the same but different”.

Differentiating Virtualization Management Tools

On the surface, all virtualization management tools might look alike. You can walk around your local VMUG and probably find more than 5 different virtualization management tools. At VMworld you might find 20+. Over the years, I’ve come up with a few differentiators that allow me to quickly tell them apart. While you are free to come up with your own differentiators or categories, here is what I use:

- What’s the History?

One of the first questions I ask product managers and engineers (not always salespeople, because they too often don’t know) is about the history and architecture of the tool. Where did the code for this tool come from? Is it the same tool I used 10 years ago, since “retooled” and “face-lifted”? Was the tool once an “SNMP element manager”? Many times, this history will “connect the dots” for you, and you might remember the tool from the past. At the very least, it tells you who created the tool, what company it came from, and how old it is, which in turn hints at its architecture and whether it was designed for virtualization or for physical servers.

- What’s the Architecture and Design?

For the most part, virtualization admins aren’t developers, and in most cases they don’t think about a tool’s architecture until they have already bought it. However, you don’t have to be a software developer to know that a tool’s architecture defines its complexity, scalability, and limitations. For example, if a tool requires that you supply a database connection, you will have to pay for, manage, and monitor utilization of that database over time. Another example is how the tool obtains its data. Is it agent-less, agent-based, or does it allow both? Agent-less designs are great, but there are limits to what they can provide. Agent-based designs can provide a lot of insight, but they also require you to deploy agents on every monitored host, which in many cases is unnecessary. Some tools give you the option to use the agent-less approach until you need deeper visibility, at which point you can install agents.

- Entry Level Price Point / Pricing Model

Many companies (especially small and medium-size private companies like the one I came from) make price the primary deciding factor when selecting a tool. For example, at my company, I had an informal “line in the sand” at $100,000 for any hardware or software. If a single solution cost more than $100K, I would tell the vendor either “forget it” or “break it into smaller chunks”, because I knew that the company owner would laugh at anything that expensive, or that it would take a massive multi-year campaign to get approved. Thus, I always preferred tools that were full-featured but priced in smaller chunks, such as “per VM”. While in some cases the “per VM” model might cost more than the “per socket” model, with “per VM” I could select just the VMs I wanted to monitor. With “per socket”, I felt I had to license every host, because with DRS and vMotion I never knew which host my critical VMs would be running on. Your company, on the other hand, might look at the total five-year cost for the entire virtual infrastructure, in which case the “per socket” model might be more appealing.
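To make the per-VM versus per-socket trade-off concrete, here is a minimal Python sketch. All prices, host counts, and function names (`per_vm_cost`, `per_socket_cost`, `break_even_vms`, `fits_budget`) are hypothetical and chosen only for illustration; they are not any vendor’s actual pricing. The per-socket calculation assumes you license every socket in the cluster, since DRS and vMotion may move a critical VM to any host.

```python
import math


def per_vm_cost(vms: int, price_per_vm: float) -> float:
    """License only the VMs you choose to monitor."""
    return vms * price_per_vm


def per_socket_cost(hosts: int, sockets_per_host: int, price_per_socket: float) -> float:
    """License every socket in the cluster, because DRS/vMotion may
    place a critical VM on any host."""
    return hosts * sockets_per_host * price_per_socket


def break_even_vms(hosts: int, sockets_per_host: int,
                   price_per_socket: float, price_per_vm: float) -> int:
    """Smallest number of monitored VMs at which per-VM licensing
    costs at least as much as licensing every socket."""
    total = per_socket_cost(hosts, sockets_per_host, price_per_socket)
    return math.ceil(total / price_per_vm)


def fits_budget(cost: float, line_in_the_sand: float = 100_000) -> bool:
    """Check a quote against an informal per-purchase spending limit."""
    return cost <= line_in_the_sand


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    hosts, sockets, price_per_socket, price_per_vm = 8, 2, 1_500.0, 100.0
    critical_only = per_vm_cost(40, price_per_vm)
    whole_cluster = per_socket_cost(hosts, sockets, price_per_socket)
    print(f"Per VM, 40 critical VMs: ${critical_only:,.0f}")
    print(f"Per socket, all hosts:   ${whole_cluster:,.0f}")
    print(f"Break-even at {break_even_vms(hosts, sockets, price_per_socket, price_per_vm)} VMs")
    print(f"Under the $100K line? {fits_budget(whole_cluster)}")
```

With these made-up numbers, monitoring 40 critical VMs costs $4,000 under per-VM licensing versus $24,000 to license every socket, and per-VM licensing only reaches parity at 240 VMs. Plugging in your own quotes is what turns the pricing model into a quantitative comparison.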

However, the most important point I want to make about cost is: don’t get stuck on it. In other words, don’t rule out really excellent solutions just because they “cost more”. In many cases it is worth it to “pay a little more to get a lot more”, for example for a tool that is easier to use, more full-featured, or more extensible. It’s better to buy the “right tool” the first time than to outgrow it and “pay double” by buying another tool.

- What They Do

Tool vendors should be able to quickly give you 3-5 bullet points covering what the tool does and how it helps you. Recently I asked a CEO for his company’s “value proposition”. Ten minutes later, he was still talking, trying to answer that question. I would ask any tool vendor what their product does and how quickly it will show measurable value for your company. They should be able to answer that question in, perhaps, one sentence.

Tools in the virtualization management category typically provide:

– Monitoring and alerting – showing general virtual infrastructure events such as “host down” or “all paths down”

– Change tracking – because configuration changes often cause issues later on, it’s important to track virtual infrastructure changes, as they may turn out to be the root cause of performance problems

– Performance analysis – such as reporting which VM is utilizing the most resources

– Capacity analysis – such as reporting how many more VMs you could add

– Performance and capacity alerting – such as alerting when a host exceeds 80% CPU utilization

– Performance and capacity troubleshooting – such as identifying storage I/O contention on a host

Some examples of additional functionality that takes these capabilities further are:

– Remediation of performance problems when they occur – if a VM needs more vRAM, it could be added dynamically, or if a change caused a performance issue, the tool could reverse the change

– Chargeback and showback – estimating how much the resources used by virtual machines and applications are costing your company

– What-if scenario analysis – answering questions like “if I doubled the memory on all hosts in a cluster, how many more VMs would I be able to add and what would the next bottleneck be?”

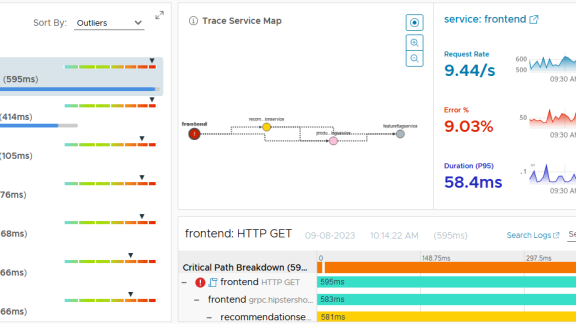

– Application analysis – integrating metrics from applications such as “database I/O latency” or “transactions per second” with traditional performance metrics.
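As an illustration of what-if analysis, the following Python sketch answers the doubled-memory question for a single cluster: how many more VMs fit, which resource runs out first, and what changes if memory capacity is doubled. The resource names, capacities, average per-VM demand, and the 80% utilization ceiling are all hypothetical assumptions; a commercial tool would likely account for much more, such as peak demand, reservations, and HA failover capacity.

```python
import math
from dataclasses import dataclass


@dataclass
class Cluster:
    capacity: dict[str, float]  # total capacity per resource (hypothetical units)
    used: dict[str, float]      # current consumption per resource


def headroom(cluster: Cluster, per_vm: dict[str, float],
             max_util: float = 0.8) -> tuple[int, str]:
    """Return (additional VMs that fit, resource that runs out first),
    keeping every resource at or below max_util of its capacity."""
    fits = {}
    for resource, demand in per_vm.items():
        free = cluster.capacity[resource] * max_util - cluster.used[resource]
        fits[resource] = max(0, math.floor(free / demand))
    bottleneck = min(fits, key=fits.get)
    return fits[bottleneck], bottleneck


def what_if(cluster: Cluster, resource: str, factor: float) -> Cluster:
    """Return a copy of the cluster with one resource's capacity scaled."""
    capacity = dict(cluster.capacity)
    capacity[resource] *= factor
    return Cluster(capacity, dict(cluster.used))


if __name__ == "__main__":
    # Hypothetical cluster and average VM profile.
    cluster = Cluster(
        capacity={"cpu_ghz": 400.0, "ram_gb": 2048.0, "storage_gb": 40000.0},
        used={"cpu_ghz": 150.0, "ram_gb": 1400.0, "storage_gb": 20000.0},
    )
    avg_vm = {"cpu_ghz": 2.0, "ram_gb": 8.0, "storage_gb": 100.0}

    print("Today:", headroom(cluster, avg_vm))
    print("Double RAM:", headroom(what_if(cluster, "ram_gb", 2.0), avg_vm))
```

With these invented numbers, memory is the constraint today (29 more VMs); doubling it raises the answer to 85 VMs and makes CPU the next bottleneck, which is exactly the kind of answer a what-if feature should give you.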

- How They Show Measurable Value

Once you know where a tool came from, how it collects its data, and where that data is stored, you want to know how the tool is really going to help you. After all, it’s one thing for a tool to do “performance monitoring”, but if that just means a few pretty speedometer dials for CPU and RAM, it isn’t very helpful in the grand scheme of virtualization management.

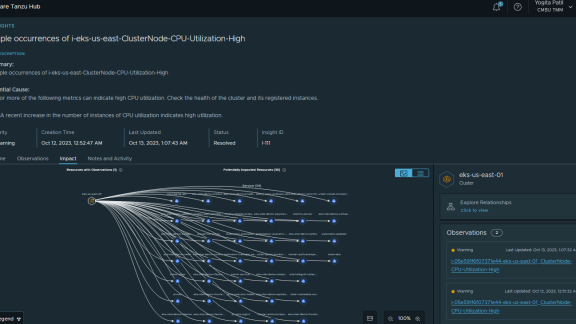

Instead, you want to find out how the tool intelligently identifies a problem when it “sees” one. vCenter offers simple configurable thresholds (e.g., alert when a host exceeds 80% CPU utilization). Smart management tools should go beyond thresholds: they should monitor your typical utilization over time, learn what is “normal” for your environment (think “baseline”), and then detect anomalies. In other words, the tool must know “what’s normal vs. abnormal”, just like you do, right?
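To contrast the two approaches, here is a minimal Python sketch of a static threshold versus a learned per-hour baseline. It assumes CPU utilization samples tagged with their hour of day, and flags a value as anomalous when it falls more than `k` standard deviations from that hour’s historical mean. This simple z-score technique is chosen for illustration only; it is not the analytics used by vCenter Operations Manager or any other product, and the sample data, `k=3.0`, and the `min_sigma` floor are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev

Sample = tuple[int, float]  # (hour of day 0-23, CPU utilization in %)


def static_alert(cpu_pct: float, threshold: float = 80.0) -> bool:
    """Fixed threshold: alert whenever CPU exceeds it."""
    return cpu_pct > threshold


def learn_baselines(history: list[Sample]) -> dict[int, tuple[float, float]]:
    """Learn what is 'normal' for each hour: mean and standard deviation."""
    by_hour: dict[int, list[float]] = defaultdict(list)
    for hour, cpu_pct in history:
        by_hour[hour].append(cpu_pct)
    # stdev() needs at least two samples, so hours with fewer are skipped
    return {h: (mean(v), stdev(v)) for h, v in by_hour.items() if len(v) >= 2}


def baseline_alert(hour: int, cpu_pct: float,
                   baselines: dict[int, tuple[float, float]],
                   k: float = 3.0, min_sigma: float = 1.0) -> bool:
    """Alert only when a value is abnormal for this hour of the day."""
    if hour not in baselines:
        return static_alert(cpu_pct)  # no baseline yet: fall back to the threshold
    mu, sigma = baselines[hour]
    # min_sigma keeps a nearly flat history from flagging tiny wiggles
    return abs(cpu_pct - mu) > k * max(sigma, min_sigma)


if __name__ == "__main__":
    # Hypothetical five days of history: a nightly batch job at 02:00, quiet afternoons.
    history = [(2, v) for v in (88.0, 90.0, 92.0, 89.0, 91.0)]
    history += [(14, v) for v in (20.0, 22.0, 18.0, 21.0, 19.0)]
    baselines = learn_baselines(history)

    for hour, cpu in [(2, 91.0), (14, 55.0)]:
        print(f"{hour:02d}:00 at {cpu}% -> threshold: {static_alert(cpu)}, "
              f"baseline: {baseline_alert(hour, cpu, baselines)}")
```

In this example, the nightly batch job trips the static 80% alarm every night even though it is normal, while the afternoon jump to 55% never crosses the threshold but stands out clearly against its baseline.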

Once the tool can “do what you do” by recognizing when there is a real problem, and once you trust its results, you can stop worrying about performance and capacity in the virtual infrastructure and focus on something else in the datacenter.

- Integration / Extensibility

Many tools work with the virtual infrastructure only, and pride themselves on that. While the virtual infrastructure is, of course, important, many companies have much more to manage. Tools should be able to monitor physical servers, applications, or just about anything else you want them to. This “extensibility” gives you great flexibility as a virtualization admin. Ask yourself: can the tool monitor my Amazon Web Services, Oracle database, Hyper-V servers, or Exchange infrastructure?

Just showing you data from those sources in a single window of the monitoring interface (a “single pane of glass”) isn’t enough. Why not bring the data collected from these other sources into your monitoring database and correlate it with virtual infrastructure statistics? You are investing in the smartest performance and capacity analysis tool you can find, right? Why not use that investment to analyze other datacenter resources too?

- Usability

All too often, ease of installation and usability are overlooked. I would tell any admin out there that if you can’t deploy the application and see how it will help you in under 10 minutes, the tool is too complex. The user interface is another factor in usability.

Virtualization admins deserve beautiful, easy to use, fast, intuitive, and even (dare I say it) FUN to use tools.

My advice – don’t settle for less.

- Future Direction

Once you know where the tool is today, you should ask “where is the tool going?” What are some of the most requested features? What is planned for the next release? What are the major features you foresee adding in the next 1-2 years? Virtualization management tool developers should be candid with their end users and be able to answer these types of questions. Hopefully you have a grand vision for your future career success, right? Similarly, if the tool vendor can’t offer you a “plan for the future”, look elsewhere. You want a tool that will be around and will continue to offer greater and greater value for you and your company.

- One Thing That Makes Them Unique

Out of every tool, try to find and remember one thing that makes a tool unique. In the case of vCenter Operations Manager, I remember that “it’s from VMware”. For other companies it might be “legacy element manager tool with a pretty face” or “strong on virtual network analysis in VDI”, or similar.

Selecting The Right Tool For You

I recommend narrowing the field of roughly 20 tools down to 2-3, then putting those tools to the test in your lab environment. This matters because you need first-hand experience with them. For example, you can judge a tool’s usability to some degree from a quick demo or video, but to really see how usable it is, you’ll need to install it and spend some time clicking around yourself. The same is true for showing measurable value. You should be able to run a short proof of concept, using the tool to monitor your production virtual infrastructure in read-only mode. If it doesn’t show you real value in a short period of time, move on to another tool.

Stay tuned to this Cloud Management blog for upcoming posts in this series where I’ll talk about how to test a virtualization management tool in your environment (methods and metrics) as well as what makes vCenter Operations unique.

All my posts on this blog can be found at http://blogs.vmware.com/management/tag/david-davis.