This blog series is not only a step-by-step guide to getting started with VMware Telco Cloud Platform, but also a demonstration of its key capabilities and its ease of lifecycle management.

This is a “quick jump” blog for Telco Cloud Platform (TCP) aspirants, intended as a reference point for understanding the role of VMware Telco Cloud Automation.

VMware Telco Cloud Platform is a cloud native solution with consistent infrastructure and multi-layer automation. Let me briefly describe what it is and what it can do.

The main goal of VMware Telco Cloud Platform is to simplify the deployment and lifecycle operations of Kubernetes in multi-cloud environments while providing centralized management and governance for clusters. The product releases currently in scope for this series of blog posts are:

- VMware Telco Cloud Automation

- VMware Tanzu Standard for Telco; Kubernetes versions include 1.19.1, 1.18.8, 1.17.11

- VMware NSX-T Data Center 3.1

- VMware vRealize Orchestrator Appliance 8.2

- VMware ESXi 7.0 U1

This diagram illustrates how these products come together to form the architecture of the platform:


vSphere and Tanzu deliver self-service access to this consistent underlying infrastructure while providing observability and troubleshooting for Kubernetes workloads.

VMware vSAN, an optional add-on component, is a hyper-converged virtualized storage solution with a unified management plane for both VM-based and container-based workloads.

With vSAN, storage provisioning for CNFs is automated through its dynamic volume provisioning capability. This enables Kubernetes clusters running on vSphere to provision persistent volumes.
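To make dynamic provisioning concrete, here is a minimal sketch that builds the two Kubernetes objects involved: a StorageClass that points at the vSphere CSI driver (provisioner `csi.vsphere.vmware.com`) and a PersistentVolumeClaim that references it. When the claim is created, the driver provisions a matching volume on demand. The storage policy name `vSAN Default Storage Policy`, the class and claim names, and the 10Gi size are assumptions for illustration. The manifests are printed as JSON, which `kubectl apply -f` accepts just like YAML.

```python
import json


def vsphere_storage_class(name: str, storage_policy: str) -> dict:
    """Build a StorageClass that provisions volumes through the vSphere CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # Provisioner name registered by the vSphere CSI driver.
        "provisioner": "csi.vsphere.vmware.com",
        # vSphere storage policy (assumed here to be a vSAN policy) backing new volumes.
        "parameters": {"storagepolicyname": storage_policy},
    }


def persistent_volume_claim(name: str, storage_class: str, size: str) -> dict:
    """Build a PVC; the CSI driver creates a volume for it dynamically."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical names and sizes, for illustration only.
    sc = vsphere_storage_class("vsan-default", "vSAN Default Storage Policy")
    pvc = persistent_volume_claim("cnf-data", "vsan-default", "10Gi")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, pvc]}, indent=2))
```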

NSX-T provides networking and security for network functions, while Antrea provides seamless container connectivity for Kubernetes clusters. Antrea creates an autonomous data plane for Kubernetes clusters, where only the host is connected to NSX. Antrea is a purpose-built CNI for Kubernetes that is built on Open vSwitch. It is designed to run anywhere Kubernetes runs, whether on-premises, in the public cloud, or at the edge.

For more information on the components bundled within Telco Cloud Platform, please visit the release notes at https://docs.vmware.com/en/VMware-Telco-Cloud-Platform/2.0/rn/Telco-Cloud-Platform-5G-Edition-20-Release-Notes.html

With VMware Telco Cloud Platform, we can manage all clusters from a central location through its automation tool. This automation becomes critical because 5G networks consist of hundreds of edge sites, potentially hosting thousands of clusters.

I will deploy the cluster using the platform’s CaaS automation capability. Various CNFs can then run on top of it to support a wide range of use cases such as Private 5G, Mobile Core, virtual CDN, and SD-WAN.

In summary, we will go through three different parts in this series:

- Cluster template design and creation.

- Deployment of a cluster instance onto the infrastructure.

- Late binding capability with CNF onboarding.

Part 1. Cluster template design and creation

This first part provides step-by-step instructions and screenshots for creating the cluster templates used to deploy Tanzu Kubernetes clusters.

Log into Telco Cloud Automation using your admin credentials. Once logged in, the dashboard provides visibility into current cluster state, available resources, performance, and alarms across the registered infrastructure.

Let us create a Kubernetes cluster template to deploy the cluster. The template is the blueprint of the cluster, which contains the required configuration. With Telco Cloud Platform, we can use the template to deploy multiple clusters for consistency and repeatability.

Now, let’s dive into the four simple steps to create the template.

- Select whether the cluster is for Management or for a Workload.

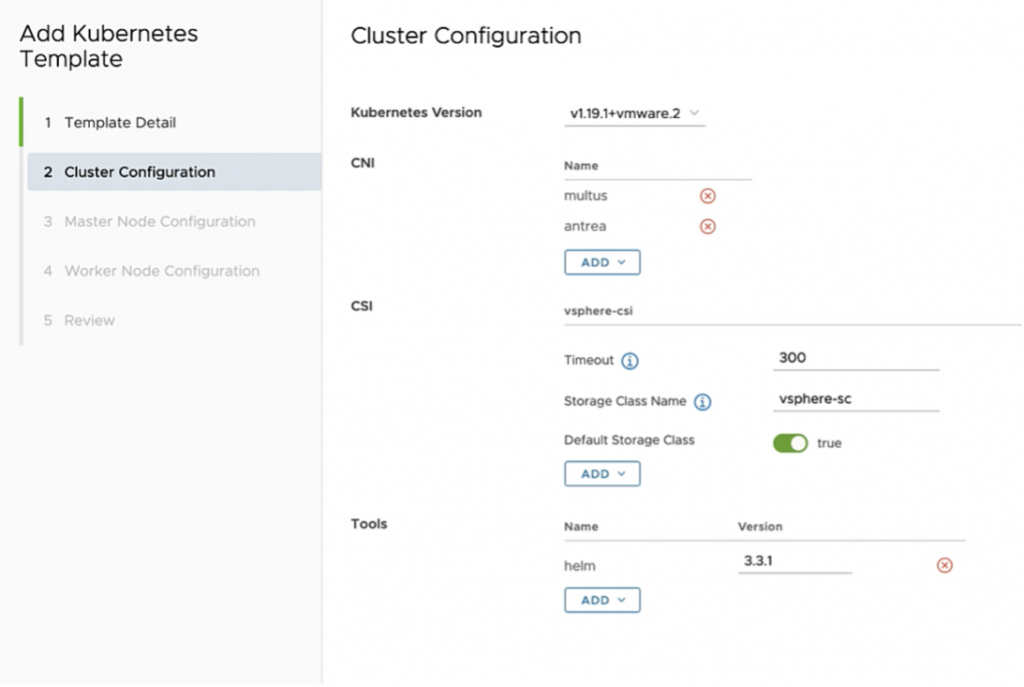

- Provide cluster configuration information such as the Kubernetes version, tools such as Helm, the CNI, and the Container Storage Interface (CSI) that lets vSAN dynamically provision persistent storage volumes.

- Configure the Master Node and Worker Node settings to specify the VM details for each.

- Review the details and Add the template.
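The four steps map naturally onto a data structure. The sketch below is a hypothetical model of what a template captures, not TCA's actual template schema: the class names, field names, and VM sizes are assumptions, and only the Kubernetes versions come from the release list earlier in this post. The `review` function plays the role of step 4 by flagging problems before the template is added.

```python
from dataclasses import dataclass, field
from enum import Enum

# Kubernetes versions listed for Tanzu Standard for Telco in this series.
SUPPORTED_VERSIONS = {"1.19.1", "1.18.8", "1.17.11"}


class ClusterType(Enum):
    MANAGEMENT = "Management"
    WORKLOAD = "Workload"


@dataclass
class NodeConfig:
    """VM sizing for a group of nodes (hypothetical fields)."""
    count: int
    cpu: int
    memory_gb: int
    disk_gb: int


@dataclass
class ClusterTemplate:
    """Hypothetical template model; not the real TCA schema."""
    name: str
    cluster_type: ClusterType      # Step 1: management or workload
    kubernetes_version: str        # Step 2: cluster configuration
    master: NodeConfig             # Step 3: master node VMs
    worker: NodeConfig             # Step 3: worker node VMs
    cni: list[str] = field(default_factory=lambda: ["antrea"])
    csi: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)


def review(template: ClusterTemplate) -> list[str]:
    """Step 4: return a list of problems; an empty list means the template can be added."""
    problems = []
    if template.kubernetes_version not in SUPPORTED_VERSIONS:
        problems.append(f"unsupported Kubernetes version {template.kubernetes_version}")
    for role, node in (("master", template.master), ("worker", template.worker)):
        if min(node.count, node.cpu, node.memory_gb, node.disk_gb) < 1:
            problems.append(f"{role} node configuration must use positive values")
    return problems


if __name__ == "__main__":
    demo = ClusterTemplate(
        name="demo-mgmt",
        cluster_type=ClusterType.MANAGEMENT,
        kubernetes_version="1.19.1",
        master=NodeConfig(count=1, cpu=2, memory_gb=8, disk_gb=50),
        worker=NodeConfig(count=1, cpu=2, memory_gb=8, disk_gb=50),
    )
    print(review(demo))  # []
```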

Now, let us create a sample demo Management Template. Go to CaaS Infrastructure > Cluster Templates and click Add. The Add Kubernetes Template wizard opens.

Let’s provide the details for our cluster and select Cluster Type as Management Cluster.

By default, the latest version of Kubernetes is selected.

Next, define the cluster configuration and extensions.

In the Master Node Configuration tab, enter the number of controller node VMs to be created.
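How many controller node VMs to enter is worth a quick calculation. Assuming each controller VM hosts one etcd member (a stacked etcd topology, common in kubeadm-based clusters; this post does not state how TCA lays it out), the cluster stays writable only while a majority of members, the quorum, is up. This small sketch computes the quorum and the number of failures it tolerates:

```python
def etcd_fault_tolerance(control_plane_nodes: int) -> tuple[int, int]:
    """Return (quorum, tolerated_failures) for a given etcd member count.

    Assumes one etcd member per controller VM (stacked etcd).
    """
    if control_plane_nodes < 1:
        raise ValueError("need at least one control plane node")
    quorum = control_plane_nodes // 2 + 1  # strict majority of members
    return quorum, control_plane_nodes - quorum


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        quorum, tolerated = etcd_fault_tolerance(n)
        print(f"{n} controller VMs: quorum {quorum}, tolerates {tolerated} failure(s)")
```

This is why odd counts such as 1, 3, or 5 are the usual choice: going from 3 to 4 VMs raises the quorum from 2 to 3 without tolerating any additional failure.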

For the Network, enter the labels to group the networks.

For the Management network, the master node can only support one label, so enter the appropriate label for this profile.

Once done, these labels are applied to the Kubernetes nodes.
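The single-label rule for the management network on master nodes is easy to enforce up front. A minimal sketch, assuming the label ends up as a Kubernetes node label as described above: it checks that exactly one label was entered and that the value is non-empty and satisfies the Kubernetes label-value syntax (at most 63 characters, alphanumeric at both ends, with `-`, `_`, or `.` allowed in between). The key prefix `example.com/network-` is hypothetical; this post does not show the key TCA actually uses.

```python
import re

# Kubernetes label-value syntax: alphanumeric at both ends, '-', '_' or '.' inside.
LABEL_VALUE_RE = re.compile(r"(([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9])?")

# Hypothetical key prefix; the real key used by TCA is not shown in this post.
LABEL_PREFIX = "example.com/network-"


def master_node_labels(management_labels: list[str]) -> dict[str, str]:
    """Validate the management-network label of a master node and return node labels."""
    if len(management_labels) != 1:
        raise ValueError(
            f"master node management network takes exactly one label, got {len(management_labels)}"
        )
    value = management_labels[0]
    # Empty values are legal in Kubernetes, but meaningless for grouping networks.
    if not value or len(value) > 63 or not LABEL_VALUE_RE.fullmatch(value):
        raise ValueError(f"invalid label value: {value!r}")
    return {LABEL_PREFIX + "management": value}


if __name__ == "__main__":
    print(master_node_labels(["mgmt-net"]))
```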

In the Worker Node Configuration tab, add a node pool, which is a group of nodes within a cluster that all have the same configuration.
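A node pool is essentially one configuration stamped out several times. A hypothetical sketch, where the field names are illustrative rather than TCA's schema: `expand` turns a pool into its individual nodes, each with an identical configuration and its own copy of the pool's labels, so later changes to one node's labels do not leak into the others.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NodePool:
    """Hypothetical node pool: one VM configuration shared by `replicas` nodes."""
    name: str
    replicas: int
    cpu: int
    memory_gb: int
    labels: tuple[tuple[str, str], ...] = ()


@dataclass
class Node:
    name: str
    cpu: int
    memory_gb: int
    labels: dict[str, str] = field(default_factory=dict)


def expand(pool: NodePool) -> list[Node]:
    """Create one Node per replica; every node copies the pool's configuration."""
    if pool.replicas < 1:
        raise ValueError("a node pool needs at least one node")
    return [
        Node(f"{pool.name}-{i}", pool.cpu, pool.memory_gb, dict(pool.labels))
        for i in range(pool.replicas)
    ]


if __name__ == "__main__":
    for node in expand(NodePool("np-workers", replicas=3, cpu=4, memory_gb=16)):
        print(node)
```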

Finally, review the details and click Add to save the template for later use.

Similarly, let’s create a demo Workload Template. For this, go to the Add Kubernetes Template wizard and select Cluster Type as Workload Cluster.

For the cluster configuration, select the Kubernetes version from the drop-down menu.

Select a Container Network Interface (CNI). In this demo we use Antrea and Multus.

Multus is mandatory when the network functions require multiple interfaces.
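Here is what "multiple interfaces" looks like on the Kubernetes side, as a minimal sketch. Multus reads `NetworkAttachmentDefinition` objects (API group `k8s.cni.cncf.io/v1`) and attaches the networks listed in a pod's `k8s.v1.cni.cncf.io/networks` annotation. Antrea keeps providing the default interface (`eth0`), and Multus adds extra ones, named `net1`, `net2`, and so on by default. The macvlan plugin, the host interface `eth1`, the subnet, and the pod and image names are assumptions for illustration.

```python
import json


def macvlan_attachment(name: str, master_iface: str, subnet: str) -> dict:
    """NetworkAttachmentDefinition delegating to the macvlan CNI plugin (assumed available)."""
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",
        "master": master_iface,  # host interface the macvlan sits on (assumption)
        "mode": "bridge",
        "ipam": {"type": "host-local", "subnet": subnet},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the delegate CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


def pod_with_extra_interfaces(pod_name: str, image: str, networks: list[str]) -> dict:
    """Pod that asks Multus for one extra interface per listed attachment."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": pod_name,
            "annotations": {"k8s.v1.cni.cncf.io/networks": ",".join(networks)},
        },
        "spec": {"containers": [{"name": pod_name, "image": image}]},
    }


if __name__ == "__main__":
    nad = macvlan_attachment("data-net", "eth1", "192.168.10.0/24")
    pod = pod_with_extra_interfaces("upf", "example/upf:1.0", ["data-net"])
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [nad, pod]}, indent=2))
```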

Select a Container Storage Interface (CSI) from the available options, either vSphere CSI or NFS Client. For this demo, let’s use the vSphere CSI.

Only one storage class can be present for each type, meaning we cannot add multiple storage classes for the same type.
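The one-storage-class-per-type rule is easy to check before submitting a template. A minimal sketch: the type identifiers `vsphere-csi` and `nfs-client` are hypothetical stand-ins for the two options in the wizard, not TCA's internal names.

```python
from collections import Counter

# Hypothetical identifiers for the two CSI options shown in the wizard.
ALLOWED_CSI_TYPES = {"vsphere-csi", "nfs-client"}


def check_storage_classes(classes: list[tuple[str, str]]) -> None:
    """Validate (storage_class_name, csi_type) pairs; raise ValueError on a violation."""
    for name, csi_type in classes:
        if csi_type not in ALLOWED_CSI_TYPES:
            raise ValueError(f"{name}: unknown CSI type {csi_type!r}")
    counts = Counter(csi_type for _, csi_type in classes)
    duplicated = sorted(t for t, n in counts.items() if n > 1)
    if duplicated:
        raise ValueError("only one storage class per type; duplicated: " + ", ".join(duplicated))


if __name__ == "__main__":
    check_storage_classes([("vsan-default", "vsphere-csi"), ("shared-nfs", "nfs-client")])
    try:
        check_storage_classes([("gold", "vsphere-csi"), ("silver", "vsphere-csi")])
    except ValueError as err:
        print(err)  # only one storage class per type; duplicated: vsphere-csi
```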

Under Tools, choose Helm, which is used to package Kubernetes deployments.
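To see what Helm actually packages, here is a minimal sketch that writes a chart by hand: a `Chart.yaml` (chart API `v2`, as used by Helm 3), a default `values.yaml`, and one templated Deployment. The chart name, image, and versions are hypothetical. Once the files are written, `helm package <dir>` bundles the chart into a versioned `.tgz`, and `helm install <release> <dir>` deploys it. `helm create <name>` generates a fuller scaffold along the same lines.

```python
import tempfile
from pathlib import Path

CHART_YAML = """apiVersion: v2
name: {name}
type: application
version: 0.1.0
appVersion: "{app_version}"
"""

VALUES_YAML = """replicaCount: 1
image:
  repository: {image}
  tag: "{app_version}"
"""

# Go-template placeholders below are filled in by Helm at install time, not by Python.
DEPLOYMENT_YAML = """apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
"""


def scaffold_chart(root: Path, name: str, image: str, app_version: str) -> Path:
    """Write a minimal Helm chart under root/name and return the chart directory."""
    chart_dir = root / name
    (chart_dir / "templates").mkdir(parents=True, exist_ok=True)
    (chart_dir / "Chart.yaml").write_text(CHART_YAML.format(name=name, app_version=app_version))
    (chart_dir / "values.yaml").write_text(VALUES_YAML.format(image=image, app_version=app_version))
    # Written verbatim (no str.format), so the {{ }} placeholders stay intact.
    (chart_dir / "templates" / "deployment.yaml").write_text(DEPLOYMENT_YAML)
    return chart_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        chart = scaffold_chart(Path(tmp), "demo-cnf", "example/demo-cnf", "1.0.0")
        print(sorted(p.relative_to(chart).as_posix() for p in chart.rglob("*") if p.is_file()))
```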

Now, let’s configure both the master and worker nodes.

In the Master Node Configuration tab, enter the number of controller node VMs to be created.

In the Worker Node Configuration tab, add a node pool; you can create multiple node pools based on your requirements.
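With more than one node pool, a CNF is usually steered to the right pool through node labels. The sketch below assumes each pool's nodes carry a label such as `example.com/pool` (a hypothetical key and values) and builds a Deployment whose `nodeSelector` targets one pool. `matches` mirrors `nodeSelector` semantics: a node qualifies only if it has every listed key with exactly the listed value. The Deployment name and image are also hypothetical.

```python
# Hypothetical labels carried by the nodes of two node pools.
POOL_LABELS = {
    "np-general": {"example.com/pool": "general"},
    "np-dataplane": {"example.com/pool": "dataplane"},
}


def matches(node_labels: dict[str, str], node_selector: dict[str, str]) -> bool:
    """nodeSelector semantics: every key/value pair must appear on the node."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def deployment_on_pool(name: str, image: str, replicas: int, node_selector: dict[str, str]) -> dict:
    """Build a Deployment whose pods may only be scheduled on nodes matching node_selector."""
    pod_labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": pod_labels},
            "template": {
                "metadata": {"labels": pod_labels},
                "spec": {
                    "nodeSelector": node_selector,
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    selector = {"example.com/pool": "dataplane"}
    deployment = deployment_on_pool("upf", "example/upf:1.0", 2, selector)
    print(deployment["spec"]["template"]["spec"]["nodeSelector"])  # {'example.com/pool': 'dataplane'}
    print([pool for pool, labels in POOL_LABELS.items() if matches(labels, selector)])  # ['np-dataplane']
```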

Under CPU Manager Policy, let’s keep the default Kubernetes policy for the cluster we are building now. The default policy lets the scheduler balance CPU utilization across the cluster’s pods; the alternative is to dedicate CPUs to a specific pod, enabling CPU pinning or exclusive CPUs. CPU pinning or exclusive CPUs are useful when we don’t want workload performance to suffer from CPU throttling or scheduling latency.
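For workloads that do need exclusive CPUs, Kubernetes itself sets two conditions, independent of TCA. First, the kubelet must run the `static` CPU manager policy (`--cpu-manager-policy=static`) instead of the default `none`. Second, the pod must be in the Guaranteed QoS class (requests equal to limits for CPU and memory) with a whole-number CPU request. The minimal sketch below builds such a pod and checks a container against those conditions. The pod name, image, and sizes are hypothetical.

```python
def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "2", "0.5" or "1500m" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def pinned_pod(name: str, image: str, cpus: int, memory: str) -> dict:
    """Pod spec meeting the static-policy conditions: requests == limits, integer CPUs."""
    if cpus < 1:
        raise ValueError("exclusive CPUs require a whole number of at least 1")
    resources = {"cpu": str(cpus), "memory": memory}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "resources": {"requests": dict(resources), "limits": dict(resources)},
            }]
        },
    }


def eligible_for_exclusive_cpus(container: dict) -> bool:
    """Check one container for Guaranteed-style resources with an integer CPU count.

    A pod is Guaranteed only if every container passes. Quantities are compared
    as strings here, so "1Gi" and "1024Mi" would be treated as different.
    """
    resources = container.get("resources", {})
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    for key in ("cpu", "memory"):
        # Kubernetes defaults a missing request to the limit, so mirror that here.
        if key not in limits or requests.get(key, limits[key]) != limits[key]:
            return False
    millicores = cpu_millicores(limits["cpu"])
    return millicores > 0 and millicores % 1000 == 0


if __name__ == "__main__":
    pod = pinned_pod("dpdk-worker", "example/dpdk-app:1.0", cpus=4, memory="8Gi")
    print(eligible_for_exclusive_cpus(pod["spec"]["containers"][0]))  # True
```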

Once all these are configured, we can review the template and add it to the template catalog for future deployment.

This template catalog comes in very handy when dealing with a massive number of clusters across dispersed network domains. We can return to a template’s configuration at any time to make the changes we need.
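The catalog idea can be sketched as a lookup from template name to an immutable template, from which one cluster spec per edge site is derived. Everything here is hypothetical and is not how TCA stores templates: the `Template` fields, the `<site>-<template>` cluster naming scheme, and the choice to model an edit as a new copy via `dataclasses.replace`. Under that choice, cluster specs derived earlier keep the values they were created with.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Template:
    """Hypothetical, simplified stand-in for a cluster template in the catalog."""
    name: str
    kubernetes_version: str
    worker_replicas: int


def deploy_from_catalog(catalog: dict[str, Template], template_name: str, sites: list[str]) -> list[dict]:
    """Return one cluster spec per site, all derived from the same template."""
    template = catalog[template_name]
    return [
        {
            "cluster": f"{site}-{template.name}",  # hypothetical naming scheme
            "site": site,
            "kubernetes_version": template.kubernetes_version,
            "worker_replicas": template.worker_replicas,
        }
        for site in sites
    ]


if __name__ == "__main__":
    catalog = {"workload-5g": Template("workload-5g", "1.19.1", 3)}
    for spec in deploy_from_catalog(catalog, "workload-5g", ["edge-01", "edge-02", "edge-03"]):
        print(spec)
    # Editing the template later: store an updated copy (frozen dataclasses are immutable).
    catalog["workload-5g"] = replace(catalog["workload-5g"], worker_replicas=4)
```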

This concludes Part 1: creating cluster templates using the CaaS automation capability.

Continue with the next blog in this series, Part 2: Deployment of a cluster instance onto the infrastructure.

Discover more from VMware Telco Cloud Blog
