Agility is everything—especially when your data platform needs to grow. That’s why we’ve reimagined gpexpand, our online cluster expansion tool, to deliver a dramatically faster, more seamless scale-out experience. Whether you’re adding a few nodes or moving terabytes of data across your cluster, VMware Tanzu Greenplum now makes it easier than ever to expand without disrupting your business. Discover the powerful new enhancements designed to keep you on track, on time, and ahead of demand.

Enhanced Performance and Resource Usage

Several performance bottlenecks were identified and addressed for each phase of expansion:

Initialization Phase:

- Template compression: gpexpand bootstraps expansion segments from a template, created from the coordinator’s catalog. We now compress this template before distributing it to the expansion hosts, improving network throughput. Savings can be considerable given the usual size of Greenplum catalogs (think millions of partitioned tables!)

- Parallel ledger initialization: In this phase, gpexpand has to create a ledger of all tables, across all databases in the cluster, to track their expansion status. The ledger is maintained in a SQL-queryable heap table: status_detail. This table is now populated in parallel, with one connection per database.

- Bootstrap connection reuse: Connections are now reused extensively during the initialization phase, which has led to a much-welcome reduction in the number of short-lived connections.
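To make the "one connection per database" idea concrete, here is a minimal Python sketch of populating a ledger in parallel. It is an illustration only, not gpexpand's actual code: the `CATALOG` dictionary, the `collect_ledger_rows` helper, and the `"NOT STARTED"` placeholder status are all hypothetical stand-ins for real catalog queries.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical catalog: database name -> tables found in that database.
CATALOG = {
    "sales": ["public.orders", "public.customers"],
    "hr": ["public.employees"],
    "analytics": ["public.events", "public.sessions", "public.users"],
}


def collect_ledger_rows(dbname):
    """Stand-in for opening ONE connection to dbname and listing its tables."""
    return [(dbname, table, "NOT STARTED") for table in CATALOG[dbname]]


def build_ledger(databases):
    """Gather ledger rows with one worker (hence one connection) per database."""
    with ThreadPoolExecutor(max_workers=len(databases)) as pool:
        # map() keeps results in the same order as the input databases.
        per_db_rows = list(pool.map(collect_ledger_rows, databases))
    return [row for rows in per_db_rows for row in rows]


ledger = build_ledger(list(CATALOG))
print(len(ledger), "tables recorded in the ledger")
```

With this shape, the wall-clock time of ledger population is bounded by the slowest database rather than by the sum over all databases.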

Redistribution Phase:

- Smarter default for progress tracking: Cluster-wide per-table size tracking is expensive, and the overhead grows with the number of tables and segments.

Since expanding a single table is pretty fast and done in parallel (even entire partition hierarchies), monitoring expansion progress in bytes at the table level is like bringing a gun to a knife-fight!

This is why we have changed the default to --simple-progress, which omits size calculations.

For clusters with fewer monolithic (possibly un-partitioned) tables, size tracking can still be useful! This is where the new --detailed-progress flag comes in.

- Faster ledger updates: As tables go through expansion, their statuses in the status_detail table have to be continuously updated. UPDATEs made to the status_detail table are numerous and can be a significant bottleneck. When the Global Deadlock Detector (GDD) is off, UPDATEs to distributed heap tables are serialized (an ExclusiveLock must be grabbed).

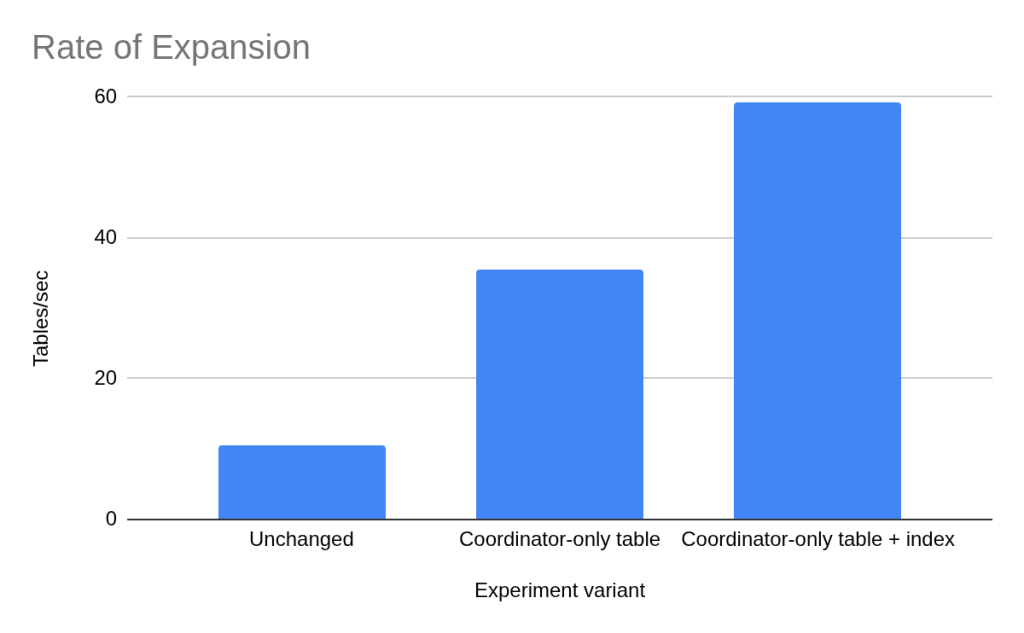

- Coordinator-only distribution – The ledger is now maintained only on the coordinator, which completely eliminates global deadlocks! Thus, expansion jobs can now UPDATE the ledger in parallel (grabbing a chill RowExclusiveLock)

- Indexing: Adding a simple B-tree index on status_detail has supercharged UPDATE performance at the price of a modest 20% increase in ledger size.

The figure shows the gains from expanding 2.25 million partitioned tables (emptied to expose the bottleneck). The rate of expansion improves by ~6X between the version without the changes (Unchanged) and the branch with the changes (Coordinator-only table + index). The ledger is 250MB in size, with the new index costing a mere 50MB on top.
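The effect of the index on ledger UPDATEs can be illustrated with a small, self-contained sketch. This uses SQLite from the Python standard library purely as a stand-in, not Greenplum, and the column names and the choice of indexed column (`table_oid`) are assumptions made for the example. It shows how a keyed UPDATE goes from scanning the whole table to a direct index lookup.

```python
import sqlite3

# In-memory stand-in for the ledger; the schema here is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE status_detail (table_oid INTEGER, fq_name TEXT, status TEXT)"
)
conn.executemany(
    "INSERT INTO status_detail VALUES (?, ?, ?)",
    [(oid, f"public.t{oid}", "NOT STARTED") for oid in range(10_000)],
)

UPDATE = "UPDATE status_detail SET status = 'COMPLETED' WHERE table_oid = 4242"


def plan(sql):
    """Return the planner's description of how it would run the statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


plan_before = plan(UPDATE)  # no index yet: every row must be examined
conn.execute("CREATE INDEX status_detail_oid_idx ON status_detail (table_oid)")
plan_after = plan(UPDATE)   # index available: direct lookup of the one row
conn.execute(UPDATE)

print("before:", plan_before)
print("after: ", plan_after)
```

The trade-off is the same one described above: each status change becomes a cheap lookup, paid for with the extra storage the index occupies.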

- Redistribution connection pooling: Client connections to ANY database are NOT cheap! They come with high overhead and consume kernel resources (sockets, processes, etc.). In Greenplum, being an MPP (massively parallel processing) system, this cost is even higher! Each connection has high fan-out: for every connection from a client (gpexpand) to the coordinator, a dispatch connection is created between the coordinator and every segment!

Expansion jobs used to open a separate client connection to expand each table. So, if there were T tables distributed across N segments, the cluster-wide footprint was T(N + 1) connections! For 1M tables on a 1000 segment cluster, the number of connections would have been ~1B!

This has now been replaced with a connection pool whose size equals the number of jobs (the -n flag, which ranges from 1 to 96). This means that there are now only n(N + 1) connections, where n(N + 1) << T(N + 1). For 1M tables across a 1000-segment cluster, the number of connections is now at most ~100K.
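The arithmetic and the pooling pattern above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions rather than gpexpand's implementation: `FakeConnection`, `footprint`, and `expand_with_pool` are hypothetical names, and the fake connection only counts how many times it is opened.

```python
import queue
import threading


def footprint(client_connections, segments):
    """Cluster-wide connections: each client connection fans out to every segment."""
    return client_connections * (segments + 1)


class FakeConnection:
    """Hypothetical stand-in for a database connection; counts opens."""

    opened = 0
    _lock = threading.Lock()

    def __init__(self):
        with FakeConnection._lock:
            FakeConnection.opened += 1

    def expand(self, table):
        return f"expanded {table}"


def expand_with_pool(tables, jobs):
    """Expand all tables using `jobs` workers, each reusing one connection."""
    work = queue.Queue()
    for table in tables:
        work.put(table)
    results = []
    results_lock = threading.Lock()

    def worker():
        conn = FakeConnection()  # opened once per job, reused for many tables
        while True:
            try:
                table = work.get_nowait()
            except queue.Empty:
                return
            outcome = conn.expand(table)
            with results_lock:
                results.append(outcome)

    threads = [threading.Thread(target=worker) for _ in range(jobs)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


print(footprint(1_000_000, 1000))  # one connection per table: 1001000000
print(footprint(96, 1000))         # pool of 96 jobs: 96096

results = expand_with_pool([f"t{i}" for i in range(1000)], jobs=8)
print(len(results), "tables expanded over", FakeConnection.opened, "connections")
```

The number of client connections now depends only on the job count, so it stays flat no matter how many tables the cluster holds.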

QoL Improvements

In this release, we have focused our efforts on smoothing the expansion experience for DBAs with the following:

- New expansion states: We have added new states to help DBAs identify the severity of errors encountered during expansion.

- “FAILED” state to track tables that have met with a failure during expansion (e.g. table maps to a bad disk sector).

- “DROPPED” state to track tables that have been dropped post initialization and prior to expansion.

- Improved logging: Logging at all levels and all phases has been improved.

- NOHUP by default: gpexpand now keeps running in the background, even if the surrounding terminal is lost. This is key, given that expansion is a long-running activity.

- Improvements to documentation: Key updates have been made, including guidance on configuring gpexpand to run smoothly in a resource group environment.

Summary

The following table summarizes version information for the improvements discussed.

| Improvement | Release first available |
|---|---|
| Template compression | 7.4.0, 6.29.0 |
| Parallel ledger initialization | 7.4.0, 6.29.0 |
| Bootstrap connection reuse | 7.4.0, 6.29.0 |
| Smarter default for progress tracking | 7.2.0, 6.28.0 |
| Faster ledger updates | 7.3.0, 6.29.0 |
| Redistribution connection pooling | 7.3.0 |
| New expansion states | 7.3.0, 6.28.0 |
| NOHUP by default | 7.4.0, 6.28.2 |

This is just the beginning. We are dedicated to continuously enhancing expansion capabilities, with further improvements planned for each upcoming release. Stay tuned for more updates!

In the meantime, if you have a feature request or thoughts on how expansion can be improved, please feel free to reach out to us! Thanks for reading.