Application programming interfaces (APIs) are critical to modern applications. APIs are used to communicate information between users and applications, between the different components of a composite application, and to communicate with a rapidly increasing variety of devices.

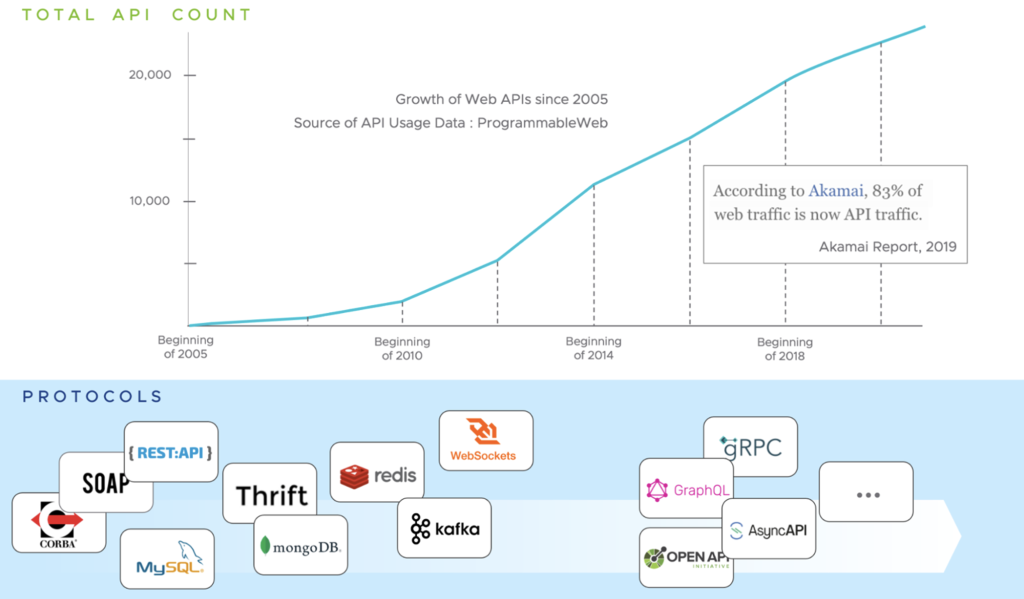

Initially, APIs mainly existed in the background, hidden from end users and bad actors. However, as microservices, containers, and cloud-based services have become commonplace, the number of exposed APIs, and of attacks against them, has exploded. Modern applications can have thousands of components that communicate via APIs, and API calls now represent 83% of all web traffic.

Data sourced from Programmable Web and Akamai

There are different use cases for APIs. Here are a few, also depicted in the next figure:

- API aggregation. Aggregate a set of APIs and expose them through a single entry point (another API).

- Internal application reusability. A service is offered to multiple consumers (that is, a horizontal service like analytics or payment) through a single well-defined API.

- Polyglot applications. These are applications composed of microservices that are implemented in different programming languages and that typically use incompatible communication interfaces. APIs normalize this by serving as the common communication mechanism between the different application components.

- Phased application modernization. When modernizing applications, it is common to replace fragments of the application with a set of microservices, and the remaining original application communicates to these new microservices through APIs.

- Low-code applications using third-party APIs. In low-code applications, it is very common for enterprises to draw on components of widely available products offered through APIs (like Yelp or Stripe).

- Applications using shared public cloud APIs. A variation of the low-code application is when enterprises use APIs from public cloud vendors, instead of deploying custom products.

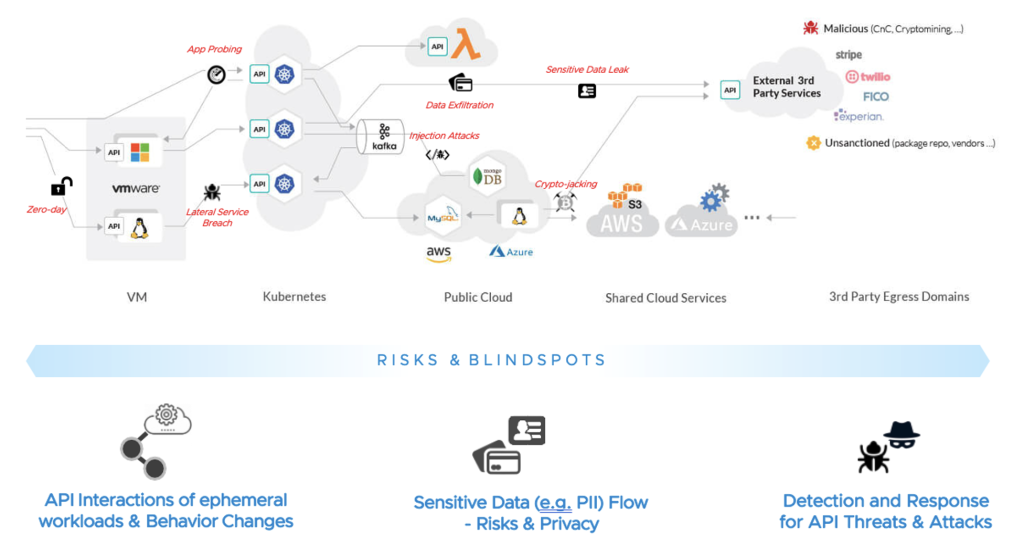

APIs potentially expose a lot of data, including proprietary or sensitive data such as personally identifiable information (PII). As a result, API security has become a critical component of application security and should be part of any comprehensive security plan. Yet security teams often overlook securing APIs. SecOps teams focus their security enforcement on L4 or application-aware L7 segmentation functions (a traditional firewall, an SDN firewall, a SASE firewall, etc.). These segmentation functions are unaware of the risks created by API communication. Most modern API communication occurs through a blanket TLS/SSL connection over particular ports. Because of this encryption, even traditional L7 segmentation and ‘deep’ inspection services will miss not only the APIs in use but also the data manipulation. For API-level granularity, security must move up the stack to the application layer.
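To make the granularity gap concrete, here is a minimal sketch contrasting what an L4 rule can match with what an API-level policy can match. The `Request` shape, the port rule, and the allowlist are all hypothetical and chosen only for illustration; they do not describe any particular firewall or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    # Hypothetical, simplified view of one API call.
    src_ip: str
    dst_port: int
    method: str
    path: str


def l4_allows(req: Request) -> bool:
    # An L4 rule only sees addresses and ports; the encrypted payload is opaque.
    return req.dst_port == 443


def api_allows(req: Request) -> bool:
    # An API-level policy, enforced where TLS is terminated, can match on the
    # operation itself. This allowlist is illustrative only.
    allowed = {("GET", "/orders"), ("POST", "/orders")}
    return (req.method, req.path) in allowed


read_orders = Request("10.0.0.5", 443, "GET", "/orders")
export_users = Request("10.0.0.5", 443, "GET", "/admin/export-users")
```

Both requests pass `l4_allows` because they use the same port, while only `read_orders` passes `api_allows`. The difference between the two calls is only visible at the API layer.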

Zero Trust Architecture and API Security

NIST Special Publication 800-207 defines Zero Trust as a “set of cybersecurity principles used when planning and implementing an enterprise architecture.” NIST Zero Trust Architecture (ZTA) takes a holistic view that considers potential risks to a given business process and how to mitigate those risks. NIST ZTA treats all data sources and computing services as resources. The list of resources includes mobile devices, data stores, sensors/actuators, IoT devices, etc., as well as the services themselves. Many of the NIST ZTA principles can be summarized as follows:

- Resource authentication and authorization are dynamic and strictly enforced before allowing access.

- Access to resources is granular, least privileged, and per session. Every unique transaction/operation requires authentication and authorization before granting access.

- Secure all communication to the maximum level regardless of network location and resource ownership.

- Monitor and measure the integrity, security posture, and behavior changes over time of all resources (for example, vulnerabilities and patching).
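As a rough illustration of the first two principles, the sketch below evaluates every request against a short-lived session, a least-privilege scope set, and a current device-posture signal. The `Session` type, the scope strings, and the `device_healthy` flag are assumptions made for this example; they are not taken from NIST SP 800-207 or from any product API.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Session:
    subject: str
    scopes: frozenset    # least-privilege grants, e.g. {"orders:read"}
    expires_at: float    # epoch seconds; sessions are short-lived


def authorize(session: Optional[Session], required_scope: str,
              device_healthy: bool, now: Optional[float] = None) -> bool:
    """Decide a single request. Intended to run on every operation."""
    now = time.time() if now is None else now
    if session is None or now >= session.expires_at:
        return False     # no valid session: the caller must authenticate again
    if not device_healthy:
        return False     # posture is re-evaluated on each request
    return required_scope in session.scopes
```

Because the decision is recomputed per request, a session that expires or a device that falls out of compliance loses access on the next call rather than at the next login.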

In the case of APIs, there are additional risks to consider. There is the risk of not being able to see all the API interactions of an application and how they change over time in the ephemeral world of modern applications. There is the risk to the sensitive data that the APIs handle, along with the risk of compliance exposure, data exfiltration, or data sovereignty breaches. And, of course, there is a new set of risks from the plausible exploitation of a plethora of API vulnerabilities, such as those in the OWASP API Security Top 10, API DDoS fan-out, zero-day API attacks, stolen credentials, perimeter breaches, lateral movement of breaches, data leaks at the egress, or resource hijacking, to name a few.

Mitigating these risks is an essential, yet daunting, task, especially in the face of the underlying complexity that these modern applications bring. What is needed is a solution that uses a modern architecture, works in heterogeneous environments, mitigates these risks, and does all this in a performant and scalable manner across multi-cloud environments without compromising speed and agility.

Furthermore, with the White House executive order on improving the nation's cybersecurity (Executive Order 14028), you need to ensure that security and zero trust architecture are implemented in all environments, including APIs.

Securing Users, Applications, and APIs

Application architectures have changed constantly over the past several years, evolving from monolithic to service-oriented architecture (SOA) to distributed microservices. Enterprises that are accelerating features to market using agile development methodologies and microservices are experiencing new challenges. One of them is disjointed operational models across incompatible microservices platforms. Consequently, new difficulties arise in observing, troubleshooting, securing, and remediating microservices applications. Service mesh technologies can solve many challenges introduced by distributed cloud-native microservice architectures.

A service mesh helps by abstracting necessary functions (mainly service discovery, connectivity, routing, observability, and security) to an off-application entity, a proxy. The proxy sits in front of each microservice, and all inbound and outbound communications flow through it. Previous service mesh architectures were mainly based on middleware client libraries. VMware provides an enhanced approach to service mesh.

VMware Tanzu Service Mesh (TSM) offers API security, observability, and compliance for all application workload components. TSM utilizes a modern architecture whereby a logically centralized control plane and a distributed data plane work across a large and diverse set of application platforms and environments. TSM focuses on simplifying these operations to manage and secure traffic flowing in and out of the service mesh and across microservices within the service mesh. This way, we can move connectivity and security functions up the stack to the API layer.

TSM uses the Envoy proxy as the data plane. APIs now become the new endpoint, and the promise of Envoy as a ubiquitous data plane is high because it enables Zero Trust to move up the stack. Intel has worked with the Envoy and VMware teams to enable a performant Envoy proxy with WebAssembly extensions using the Intel AVX-512 crypto instruction set, which is available on 3rd Generation Intel Xeon Scalable processors.

“As enterprises become more agile with modern apps, providing a consistent posture around visibility, security, and compliance across clouds becomes the pressing challenge. Together, VMware and Intel are working with Envoy and WebAssembly communities to achieve a consistent security posture from users to corporate resources distributed across on-premise data center, edge, and cloud,” said Pere Monclus, CTO, VMware Network and Advanced Security Business Group.

“Intel has been working closely with VMware to enable a performant Tanzu Service Mesh,” said Bob Ghaffari, Vice President and General Manager, Enterprise and Cloud Networking Division at Intel. “With distributed security models becoming increasingly important, Intel and VMware are engaged with the Envoy and WebAssembly communities to enable a scalable and performant cloud-native, zero-trust security enforcement solution.”

For those interested in the details of why and how Envoy and WebAssembly fit in, please keep reading… it will get interesting!

Envoy as the Platform and WebAssembly as the Runtime

Envoy is an open source, high-performance edge/middle/service proxy designed for cloud-native applications. It has an API-driven configuration model with powerful observability output and an increasingly extensive feature set. The initial release of Envoy as OSS was in the fall of 2016. Its acceptance into the CNCF occurred the following year, in September 2017, as Envoy rapidly gained traction throughout the industry. The primary usage of Envoy is as a sidecar proxy in service mesh architectures (like in TSM) for East-West traffic, where a service mesh product uses either an upstream Envoy or a custom Envoy. There is no productization of Envoy for this pattern.

However, with the advent of multi-cloud architectures and edge computing, Envoy could also be used as an API Gateway to proxy North-South traffic or between clouds to proxy inter-cloud traffic or user-to-application traffic (like in SASE). There are now many productizations of Envoy as an API Gateway (like Contour). To avoid more fragmentation in the ecosystem and decrease the barrier to entry for this use case, the Envoy community recently decided to consolidate all those projects into the Envoy Gateway project.

Envoy has an extensible architecture, and both the core Envoy project and the Envoy Gateway project promote extensibility. At the core of the Envoy proxy lies a variety of filters that provide features such as observability, routing, and security, and that execute sequentially when traffic arrives at the proxy. These filters can be loaded dynamically at runtime. In addition to the pre-built filters, users can extend this mechanism with custom filters implemented in C++, Lua, or WebAssembly.
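The sequential filter model can be sketched in a few lines. This is a conceptual toy and not Envoy's filter API: the dictionary request shape, the filter names, and the convention that a returned string short-circuits the chain are all assumptions made for this example.

```python
from typing import Callable, List, Optional

# A filter inspects (and may mutate) the request. Returning a string stops the
# chain with that response; returning None passes control to the next filter.
Filter = Callable[[dict], Optional[str]]


def make_logging_filter(log: List[str]) -> Filter:
    def logging_filter(request: dict) -> Optional[str]:
        # Observability: record every request that reaches the proxy.
        log.append(f'{request["method"]} {request["path"]}')
        return None
    return logging_filter


def auth_filter(request: dict) -> Optional[str]:
    # Security: reject requests that carry no credentials.
    if "authorization" not in request["headers"]:
        return "401 Unauthorized"
    return None


def routing_filter(request: dict) -> Optional[str]:
    # Routing: pick an upstream by path prefix (illustrative rule).
    is_orders = request["path"].startswith("/orders")
    request["upstream"] = "orders" if is_orders else "default"
    return None


def run_chain(filters: List[Filter], request: dict) -> str:
    for f in filters:
        response = f(request)
        if response is not None:
            return response      # later filters never run
    return f'200 OK via {request.get("upstream", "default")}'
```

Ordering matters in this model: placing the logging filter first means rejected requests are still observed, while placing the auth filter before routing means unauthenticated traffic is never assigned an upstream.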

WebAssembly (or Wasm) is a binary instruction format for a stack-based virtual machine. Wasm is designed as a portable compilation target for programming languages, enabling deployment on the web for client and server applications. This portability may sound familiar to readers who know Java bytecode. Wasm is:

- Efficient and fast: Wasm aims to execute at native speed by leveraging the intrinsic hardware capabilities in the platforms it executes on.

- Safe: It describes a memory-safe, sandboxed execution environment.

- Open and debuggable: It is designed to be pretty-printed in a textual format for debugging, testing, experimenting, optimizing, learning, and writing programs by hand.

- Part of the open web platform: It is purposely versionless, feature-tested, and backwards-compatible. Although initially designed to run embedded into web applications in a browser, it allows non-web embeddings like running as a filter in Envoy.

For all these reasons, Wasm is becoming increasingly popular for implementing extension filters in Envoy, as it provides a portable, lightweight, and secure solution for extensions. Such extension filters may include connecting applications to specific hardware capabilities for higher security and performance.
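For readers unfamiliar with the term, the following toy interpreter shows what "stack-based virtual machine" means in practice: instructions push operands onto a stack and pop them to compute results. The opcode names are borrowed from Wasm's text format, but the tuple encoding and the interpreter itself are simplifications invented for this example; this is not a Wasm runtime.

```python
def run(program, params=()):
    """Evaluate a list of (opcode, operand) pairs on an operand stack."""
    stack = []
    for op, arg in program:
        if op == "i32.const":
            stack.append(arg)                   # push a literal
        elif op == "local.get":
            stack.append(params[arg])           # push a function parameter
        elif op == "i32.add":
            b, a = stack.pop(), stack.pop()
            stack.append((a + b) & 0xFFFFFFFF)  # wrap to 32 bits
        elif op == "i32.mul":
            b, a = stack.pop(), stack.pop()
            stack.append((a * b) & 0xFFFFFFFF)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()


# (x + 2) * 3, written in the postfix order a stack machine consumes.
program = [
    ("local.get", 0),
    ("i32.const", 2),
    ("i32.add", None),
    ("i32.const", 3),
    ("i32.mul", None),
]
```

Calling `run(program, (5,))` pushes 5 and 2, adds them, then pushes 3 and multiplies. A real runtime validates and typically compiles such instructions to native code instead of interpreting them one by one.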

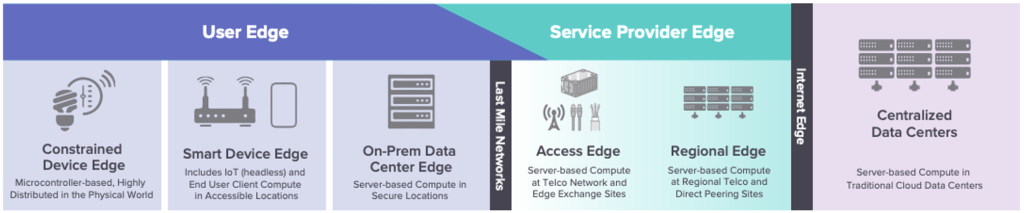

The Linux Foundation describes the Edge Continuum as “the distribution of resources and software stacks between centralized data centers and deployed nodes in the field as a path, on both the service provider and user sides of the last mile network.” It is represented in the next graphic. The technology industry is moving from the cloud, a centralized and homogeneous world, to the edge, a distributed and heterogeneous one. Applications will be built, distributed, and managed differently.

Source: Linux Foundation

The User Edge includes IoT devices with limited capacity and connectivity, smart devices (set-top boxes and phones), and on-prem data centers. The Service Provider Edge includes server-based compute at both access networks and regional locations. What all these scenarios share is that they involve distributed computing, in contrast with traditional cloud offerings, which centralize compute in a handful of regions and data centers.

Now suppose the same Envoy proxy is deployed in the User Edge, the Service Provider Edge, and the data centers. We invite you to imagine how the same set of API security functions, implemented in Wasm and running on Envoy, can help you consistently implement a Zero Trust Architecture across the entire Edge Continuum.

We will explore details of zero trust architecture in future posts. Stay tuned!