Recall what was happening a decade ago? While 2011 doesn’t seem that long ago (you remember, the Royal Wedding, Kim Kardashian’s divorce, and of course Charlie Sheen’s infamous meltdown), a lot has changed in 10 years. Back then, most data centers were just starting to experiment with virtualization. Remember when it was considered safe for only a handful of non-essential workloads to go virtual? Well, today about half of the servers globally have become virtualized, and we’ve moved well beyond just virtualization. Nearly every enterprise data center has become a hybrid environment, with a mix of physical and virtual storage and compute resources. Containerization and the technologies supporting it are starting to take hold. And of course, cloud computing has become pervasive in all aspects of enterprise computing.

Now, the business benefits of today’s software-defined data center are many, especially in terms of resource efficiency and cost savings. But there’s no denying that complexity has also increased, because all the same resources are still needed—compute, storage, switching, routing—but now any number of these resources may be on-prem or in the cloud. Between maintaining connectivity, ensuring business performance demands are met, and keeping pace with business and technological change, managing today’s data center is quite a challenge. And that’s before we discuss the most top-of-mind challenge for board rooms, business leaders, and IT practitioners: security.

Let’s jump into some of the security challenges of today’s data centers:

1. You can’t secure what you don’t understand

Let’s start with visibility. In a recent webinar with Omdia, the analyst discussed what they call the cyber security operations lifecycle, which outlines the steps for effective SecOps. In that lifecycle, just as in the data center, everything starts with visibility. After all, you cannot secure an environment if you do not understand it. Data center visibility requires reliable, real-time information about workloads, users, devices, and networks, and awareness of when conditions change, risk conditions escalate, or new threats appear. Especially now in the COVID (and soon, hopefully, post-COVID) era, it’s likely that you’re going to face a highly distributed user base accessing data center resources from remote locations, essentially indefinitely.

So, with that in mind, how many organizations can say with 100% certainty that they have adequate data center visibility? If you can’t, you should question whether you’re executing your security plan successfully. In the end, the lower your visibility, the more security gaps you’ll have.

2. There’s no app for that

Then there’s application security. This could be a whole separate blog, but really you need security controls within and around your applications. This requires different approaches depending on whether we’re talking about traditional on-prem apps or SaaS apps. In addition, keeping up with application policy requirements is an ongoing challenge. With many applications becoming increasingly componentized, you’re going to need to rely on DevSecOps processes to ensure security is baked into the instances supporting containerized, or even serverless, application functions.

3. The only constant is change—fast change

Next, there’s the pace of change. It sounds somewhat cliché, but almost every organization is undergoing some sort of digital transformation, which in varying degrees involves a significant IT infrastructure transition. But the dirty little secret of digital transformation is that it uncorks a permanently accelerated pace of change: more cloud infrastructure, more SaaS, more containerization. And in the decade to come, you’ll contend with things like IoT and 5G as well. That’s going to mean far more devices connecting to, and feeding data into, your data center. So data center security technologies in turn need to be centered on a policy-based, automation-driven approach that can accommodate an ever-increasing pace of change.

4. Exploding East-West traffic volume is a hacker’s dream

Finally, one specific challenge we need to double click on is East-West traffic. As the data center has evolved, that East-West, intra-data center traffic volume has exploded. Tighter, more efficient data centers have more workloads that produce more network traffic. In most organizations, East-West traffic now accounts for far more volume than the North-South traffic that enters and leaves the data center. And just because East-West traffic is not physically leaving your data center doesn’t mean you can trust it.
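To make the East-West versus North-South distinction concrete, here is a minimal sketch that labels a flow by whether both endpoints sit inside the data center. The address ranges and the function names `is_internal` and `classify_flow` are assumptions made up for illustration, not part of any product.

```python
import ipaddress

# Hypothetical address ranges standing in for the data center's internal networks.
DATA_CENTER_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_internal(address: str) -> bool:
    """Return True if the address falls inside any data center network."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in DATA_CENTER_NETWORKS)


def classify_flow(src: str, dst: str) -> str:
    """Label a flow by where its two endpoints live."""
    src_internal = is_internal(src)
    dst_internal = is_internal(dst)
    if src_internal and dst_internal:
        return "east-west"    # stays inside the data center
    if src_internal or dst_internal:
        return "north-south"  # enters or leaves the data center
    return "external"         # neither endpoint is ours


if __name__ == "__main__":
    print(classify_flow("10.1.2.3", "10.4.5.6"))
    print(classify_flow("203.0.113.7", "10.4.5.6"))
```

Note that the classification says nothing about trust: an East-West flow is simply one a perimeter firewall never sees.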

East-West traffic is a dream scenario for attackers looking to disguise malicious lateral movement, arguably the biggest concern in a modern data center environment. Think about the most common scenario that leads to lateral movement attempts: the theft of valid credentials. In a situation where an attacker is authenticated to a system or application using legitimate credentials, you need controls at the network layer to be able to identify and stop malicious traffic from traversing your network and potentially worsening an intrusion. Seen this way, there’s really no question that East-West traffic has essentially become the new network perimeter.

And that requires a new kind of firewall

Now, it’s pretty well established that wherever you have a network perimeter, you need a firewall. By extension, enterprises now need firewalling inside the data center. But traditional perimeter firewall approaches don’t necessarily work for East-West traffic. Let’s dig into that.

First, data center topology gets in the way

Particularly in a predominantly software-defined data center, it may not be feasible to insert virtual firewalling in between every workload, nor is it going to be efficient to route East-West traffic to the location of dedicated firewalls. Routing traffic that way unnecessarily introduces physical-style bottlenecks into a virtual environment, which defeats the whole point of the efficient, flexible software-defined model. Plus, consider the way firewalls control traffic and the types of traffic involved. At the perimeter, you’re primarily blocking specific ports, protocols, and IP address ranges, while with next-gen firewalls you’re controlling traffic based on application types and user groups. That’s all fine, but the controls need to be much more granular in an East-West context. You may have a single application, but the different functions of that application, like a web tier, an app function tier, and a database tier, all require different controls. Your firewall needs the ability to understand not only the traffic and the application, but also the application components and the business logic that dictate what is and is not acceptable communication.
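As a rough illustration of what tier-level granularity could look like, the sketch below models a default-deny allow-list keyed on application tiers rather than IP ranges. The tier names, port numbers, and the `Rule` and `is_allowed` names are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One permitted conversation between two tiers of the same application."""
    src_tier: str
    dst_tier: str
    port: int


# Hypothetical business logic: web talks to app, app talks to the database.
# Anything not listed here is denied by default.
ALLOWED = {
    Rule("web", "app", 8443),
    Rule("app", "db", 5432),
}


def is_allowed(src_tier: str, dst_tier: str, port: int) -> bool:
    """Default-deny check: traffic passes only if a rule explicitly permits it."""
    return Rule(src_tier, dst_tier, port) in ALLOWED


if __name__ == "__main__":
    print(is_allowed("web", "app", 8443))  # permitted by the first rule
    print(is_allowed("web", "db", 5432))   # web tier skipping the app tier
```

The point of the sketch is that the web tier cannot reach the database directly, even though both belong to the same application and the port itself is open to the app tier.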

Then there’s encrypted traffic

When you’re talking about encrypted intra-workload traffic, trying to decrypt, inspect, and re-encrypt those packets requires massive overhead, and it’s often not feasible. However, ignoring that traffic is not a great option either, because really you never know what’s in it.

So, you need East-West firewalling functions, but that doesn’t mean you need to accept the traditional limitations of physical and virtual firewalls. There’s a different way. And that brings us to the concept of virtual perimeters, or more specifically, micro-segmentation.

The virtual perimeter: old concept, new approach

Micro-segmentation is the use of software and policy to implement granular network segmentation right down to the application and workload level. It isn’t all that new a concept, but many organizations have yet to embrace it. While micro-segmentation enables many of the same capabilities as a traditional firewall, it abstracts firewalling into a function within a virtual instance. In other words, the security controls are baked in at the workload level, and that makes it a fundamental step forward for data center firewalls. Looking ahead, firewalling will increasingly move from purpose-specific physical and virtual appliances to become a function within an application, a workload, or a container.

Data center security: Improving simplicity and efficiency

In most enterprises, cybersecurity is a top business priority, but security has to be a business enabler. Security leaders who have lived in this world for a long time know that executives don’t want a worst-case security scenario, but they also don’t want their businesses hindered by security architectures that slow down people, processes, or technology and innovation.

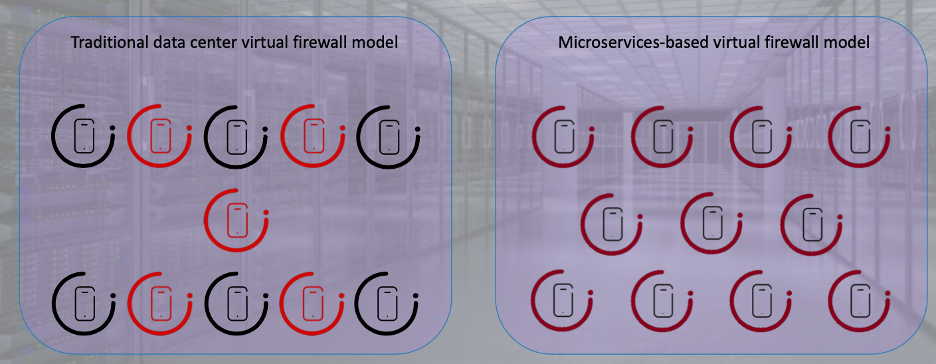

Now, what’s great about microservices and function-based firewalling is that it becomes much easier to fit security flexibly into business processes, even as those processes quickly adapt and change. Consider the traditional virtual firewall model on the left side of the image above, in which the only viable approach is to insert virtual firewall instances in between various workloads and workload groups. Firewall vendors will tell you that for proper network segmentation, you need a lot of firewalls, which is no big surprise. But this model is complicated to deploy and manage. It’s not efficient, it’s rarely cost-effective, and it certainly does not facilitate business agility.

Now, on the right side of the image above, consider a model with micro-segmentation, where you still have the same firewall functions, but they’re baked into each workload, and each has a corresponding policy that dictates the firewall and other network security settings so that as soon as a workload is created, the security policy is already implemented.
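One way to picture "the policy is already implemented as soon as the workload is created" is a label-matching sketch like the one below. Everything in it (the `Policy` and `Workload` classes, the label keys, the ports) is an assumption for illustration and does not reflect how any particular product stores or applies policy.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """A firewall policy that applies to any workload carrying these labels."""
    name: str
    selector: tuple               # (label, value) pairs the workload must carry
    allowed_inbound_ports: frozenset


@dataclass
class Workload:
    name: str
    labels: dict
    policies: list = field(default_factory=list)


# Hypothetical policy catalog, defined before any workload exists.
POLICIES = [
    Policy("orders-web-tier", (("app", "orders"), ("tier", "web")), frozenset({443})),
    Policy("orders-db-tier", (("app", "orders"), ("tier", "db")), frozenset({5432})),
]


def create_workload(name: str, labels: dict) -> Workload:
    """Create a workload and bind every matching policy at creation time."""
    workload = Workload(name, labels)
    workload.policies = [
        policy for policy in POLICIES
        if all(labels.get(key) == value for key, value in policy.selector)
    ]
    return workload


def inbound_allowed(workload: Workload, port: int) -> bool:
    """Default deny: a port is open only if an attached policy allows it."""
    return any(port in policy.allowed_inbound_ports for policy in workload.policies)


if __name__ == "__main__":
    db = create_workload("orders-db-01", {"app": "orders", "tier": "db"})
    print([policy.name for policy in db.policies], inbound_allowed(db, 5432))
```

Because the policy follows the labels rather than a network location, a new database instance picks up the same controls wherever it is deployed, and a workload with no matching policy accepts nothing inbound.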

From a business standpoint, there’s a lot of value in baking security directly into your vital data center assets. With this kind of policy-based micro-segmentation, as soon as you deploy a workload, you can have the exact security controls that the workload demands. You can prevent lateral movement threats, restrict application traffic, and realize all the benefits of Zero Trust access, in addition to being able to layer on the additional security capabilities that firewalling facilitates, such as IDS/IPS, network traffic analysis, and virtual patching.

Let’s recap: firewalling isn’t going away

Don’t let anyone tell you that firewalling is going away, particularly in the data center. You still need the controls that a firewall offers. However, traditional standalone firewall instances are increasingly going to give way to firewalling as a function. Now is the time to start researching how these technologies work and the benefits they can provide to your organization.

I invite you to dive deeper into this topic, including how to make the technology work in your environment, in this on-demand webinar, What’s Next for Data Center Firewalls? In it, you’ll hear real-world experiences around VMware’s own journey to this new data center security approach from their Director of Solution Engineering and Design for Core IT Infrastructure, who is living these exact data center transformation scenarios. So, if you don’t take my word for it, take his.

Learn more about VMware NSX Service-defined Firewall.