After many years and releases, VMware vSAN™ design principles have slowly changed, mostly to account for new features or functionality. vSAN ESA (Express Storage Architecture), however, has a significant number of improvements that require us to revisit how we design and size clusters.

Sizing vSAN ESA

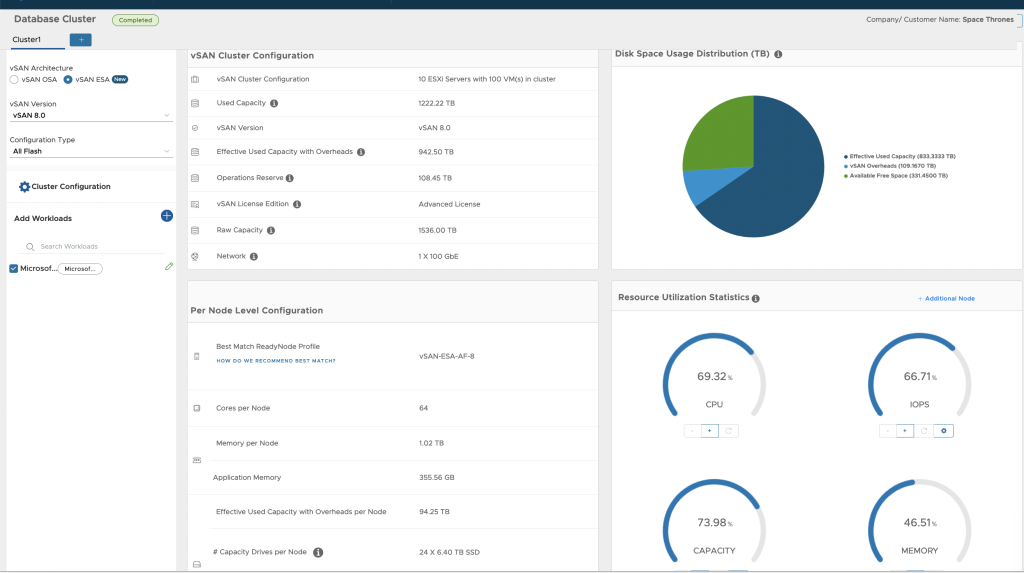

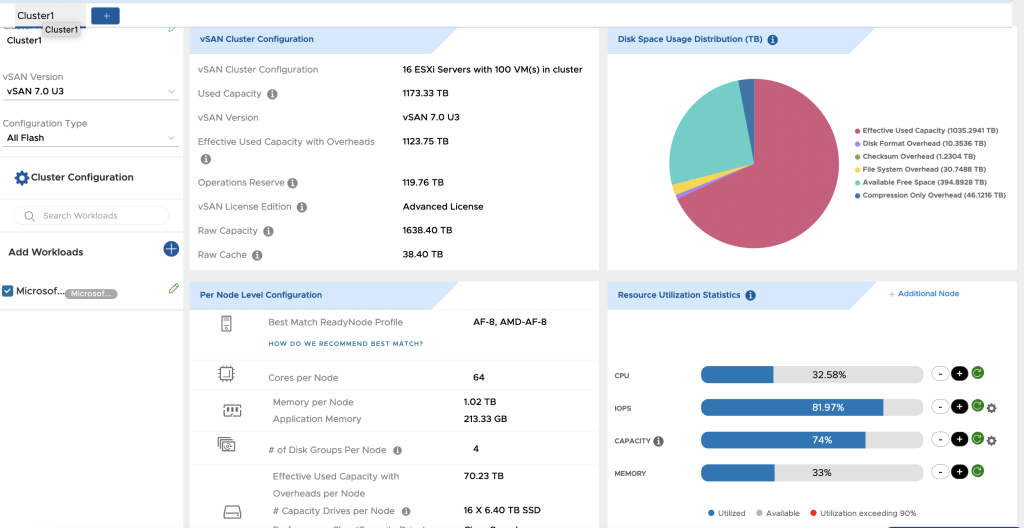

The vSAN Sizer now supports ESA clusters! To try sizing a cluster, make sure to select “ESA”, and when selecting your cluster configuration specify the ReadyNodes and the quantity and capacity of the drives you wish to use. In the example shown, the same workload was put into both ESA and OSA sizing, and the difference in host count was significant (16 hosts for OSA vs. 10 hosts for ESA). There can be significant cost savings with vSAN ESA, not just from more capacity and lower CPU overhead, but also from reducing host counts and driving up consolidation ratios.

A Video Demonstration of the vSAN ReadyNode QuickSizer

The following demo walks through how to use the vSAN Quick Sizer for vSAN ESA, and what a specific configuration would look like in both vSAN ESA vs. vSAN OSA.

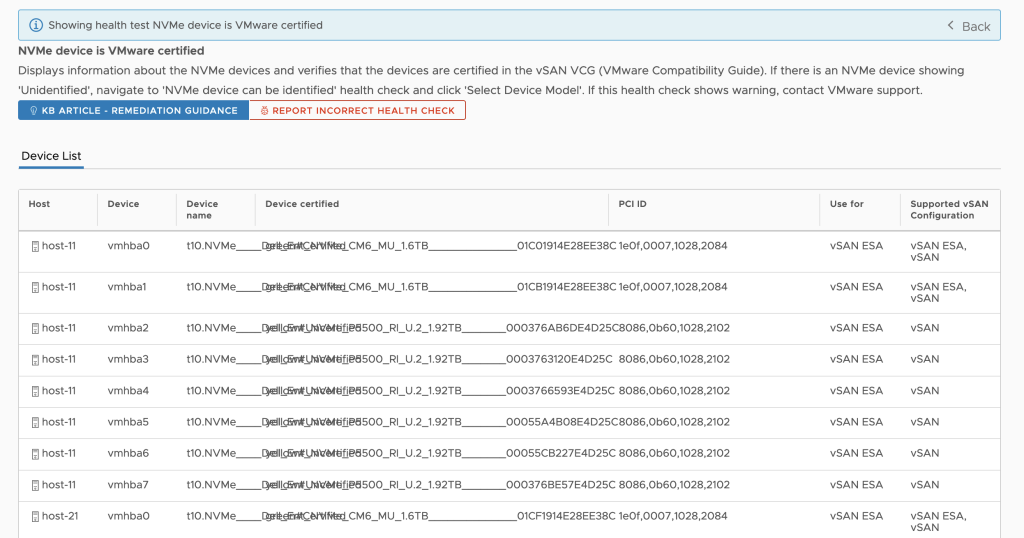

NVMe Drives

vSAN ESA requires drives explicitly certified for vSAN ESA Storage Tier usage. You can find the HCL/VCG for these drives here. These drives are commonly classified as “mixed use”, as they have higher minimum endurance and performance requirements than previous vSAN OSA capacity tier flash devices. As of the writing of this blog they range in size from 1.6TB to 6.4TB, with a maximum of 24 drives supported in a number of 2RU servers. Once you receive your order, make sure to check the vSAN Health Checks in vCenter (under Monitor -> vSAN -> Health) to confirm your drives were delivered as requested.

SPBM Policies Design Implications

RAID Choice – Unless the cluster is 2 node, never use RAID 1. RAID 5 will work on a 3 node cluster now, and there is actually a performance advantage to RAID 5 over RAID 1. RAID 5 now automatically adapts to the cluster size. For larger clusters RAID 6 is also a valid choice.
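To make the capacity side of the RAID choice concrete, here is a minimal sketch of the arithmetic. The stripe widths (2+1 and 4+1 for RAID 5, 4+2 for RAID 6) and the six-host threshold for the wider RAID 5 stripe are my assumptions for illustration and are not stated in this post, so check them against current vSAN documentation. The function names are hypothetical, and the math ignores slack space, metadata overhead, and compression.

```python
# Illustrative capacity math for storage policies.
# ASSUMPTION: stripe widths and the 6-host threshold are not from this post.
RAID_SCHEMES = {
    "RAID-1 (FTT=1)": (1, 1),  # one data copy plus one full mirror
    "RAID-5 (2+1)": (2, 1),    # two data fragments plus one parity
    "RAID-5 (4+1)": (4, 1),    # four data fragments plus one parity
    "RAID-6 (4+2)": (4, 2),    # four data fragments plus two parity
}


def capacity_multiplier(data: int, redundancy: int) -> float:
    """Raw capacity consumed per unit of usable data."""
    return (data + redundancy) / data


def usable_tb(raw_tb: float, data: int, redundancy: int) -> float:
    """Usable capacity for a given amount of raw capacity."""
    return raw_tb / capacity_multiplier(data, redundancy)


def adaptive_raid5(host_count: int) -> str:
    """Pick the RAID 5 layout a cluster of this size would use (assumed rule)."""
    if host_count < 3:
        raise ValueError("RAID 5 needs at least 3 hosts")
    return "RAID-5 (2+1)" if host_count < 6 else "RAID-5 (4+1)"


if __name__ == "__main__":
    raw = 100.0  # TB of raw capacity, hypothetical
    for name, (data, redundancy) in RAID_SCHEMES.items():
        mult = capacity_multiplier(data, redundancy)
        print(f"{name}: {mult:.2f}x raw per usable TB, "
              f"{usable_tb(raw, data, redundancy):.1f} TB usable of {raw:.0f} TB")
    print("10-host cluster would use:", adaptive_raid5(10))
```

Under these assumptions, RAID 1 consumes 2x raw capacity per usable TB, while the narrower and wider RAID 5 layouts consume 1.5x and 1.25x, which is part of why RAID 1 rarely makes sense outside 2 node clusters.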

Security – Encryption now uses a bit less compute because data can resync and move between component legs without needing to be decrypted.

Compression – Data compression can actually improve performance now. How? Data is compressed on the host where the VM is running, prior to the first write. As a result, data transmitted over the network uses less bandwidth, as it is already compressed. Disabling compression no longer requires a large-scale reshuffling and rewriting of data; instead, compression will simply stop for new writes. Compression is now enabled by default, but adjustable on a per-VM basis.
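The bandwidth effect of compressing at the source can be illustrated with a small, generic sketch. This is not how vSAN is implemented: `zlib` stands in for whatever algorithm ESA actually uses, and `bytes_on_wire` and `remote_copies` are hypothetical names used only to show that compressing once before sending shrinks every copy that crosses the network.

```python
import zlib


def bytes_on_wire(payload: bytes, remote_copies: int, compress_at_source: bool) -> int:
    """Total bytes sent to remote hosts for a single write.

    ASSUMPTION: a simplified model where the same buffer is sent to each
    remote host; zlib is only a stand-in compressor.
    """
    data = zlib.compress(payload) if compress_at_source else payload
    return len(data) * remote_copies


if __name__ == "__main__":
    # Highly compressible sample data, hypothetical workload.
    payload = b"vSAN ESA " * 4096
    raw = bytes_on_wire(payload, remote_copies=2, compress_at_source=False)
    packed = bytes_on_wire(payload, remote_copies=2, compress_at_source=True)
    print(f"uncompressed: {raw} bytes on the wire")
    print(f"compressed at source: {packed} bytes on the wire")
```

The saving depends entirely on how compressible the data is; already compressed or encrypted guest data would see little to no benefit.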

CPU

vSAN OSA was capable of using more than a single CPU thread, and had some multi-threaded elements (Compression Only destage as an example), but there were some processes that were fundamentally bound 1:1 to threads or thread pools. This meant that for single VM or single VMDK performance, improving CPU clock speed could sometimes matter for topline performance. vSAN Express Storage Architecture is highly parallel, and has made significant improvements in leveraging multiple CPU cores. In addition, a number of IO path improvements have been made to reduce CPU usage (as much as 1/3rd per IOP) compared to OSA. While there will still be impacts from different CPU SKUs (some CPUs have more offload engines for encryption, and a 48 core Platinum CPU is going to outperform a 4 core Atom), the impact should not be as significant as before.

Network

While not a requirement, I recommend RDMA (RoCEv2) capable NICs, purely because NICs that support this function are likely to have all of the other offloads and performance capabilities you are looking for. The vSAN VCG for NICs can be found here.

vSAN ESA launched with a requirement for 25Gbps networking. To be clear, this requirement was driven less by concerns about traffic amplification (in fact ESA can use significantly less network traffic for compressible data) and more by a desire to make sure hardware was procured that could demonstrate the advantages. For core datacenter refreshes, I would encourage looking at 100Gbps, even for the top-of-rack leaf switches, for a few reasons.

- vSAN ESA has removed plenty of bottlenecks inside the host, which has resulted in traffic between hosts becoming the most likely bottleneck. This is not necessarily a bad thing; it simply reflects that you always have a bottleneck in a distributed system, and improvements in one area shift that bottleneck elsewhere.

- Testing has shown as few as 4 highly performant NVMe drives being able to saturate a 25Gbps networking port when workloads demanded throughput.

- Talking to partners and OEMs, 2 x 100Gbps to a host is, all in, ~20% more than 4 x 25Gbps. For greenfield switches, 2x the bandwidth at 20% more cost is a great trade-off.

- vMotion and other high-bandwidth flows can benefit from the faster links, and there is less concern about the complexity of configuring mLAG when 100Gbps is available for vSAN.
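The numbers in the points above can be checked with some quick arithmetic. This is a rough sketch using raw line rate only (no protocol overhead), with the ~20% cost premium taken from the partner and OEM conversations mentioned above; the function names are hypothetical.

```python
def gbps_to_gbytes_per_sec(gbps: float) -> float:
    """Convert a line rate in gigabits/s to gigabytes/s (8 bits per byte)."""
    return gbps / 8


def per_drive_throughput_to_saturate(link_gbps: float, drives: int) -> float:
    """GB/s each drive must sustain for `drives` drives to fill one link."""
    return gbps_to_gbytes_per_sec(link_gbps) / drives


def relative_cost_per_gbps(base_ports: int, base_gbps: float,
                           new_ports: int, new_gbps: float,
                           cost_increase: float) -> float:
    """Cost per Gbps of the new option relative to the baseline (1.0 = same)."""
    bandwidth_ratio = (new_ports * new_gbps) / (base_ports * base_gbps)
    return (1 + cost_increase) / bandwidth_ratio


if __name__ == "__main__":
    # Four drives sharing one 25Gbps port.
    print(f"25Gbps is about {gbps_to_gbytes_per_sec(25):.3f} GB/s")
    print(f"each of 4 drives needs only "
          f"{per_drive_throughput_to_saturate(25, 4):.3f} GB/s to fill it")

    # 2 x 100Gbps at ~20% more cost vs. 4 x 25Gbps.
    ratio = relative_cost_per_gbps(4, 25, 2, 100, cost_increase=0.20)
    print(f"2 x 100Gbps costs about {ratio:.0%} as much per Gbps as 4 x 25Gbps")
```

A 25Gbps port works out to roughly 3.1 GB/s, so each of four drives only needs to sustain under 0.8 GB/s to saturate it, and the 100Gbps option lands at about 60% of the cost per Gbps.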

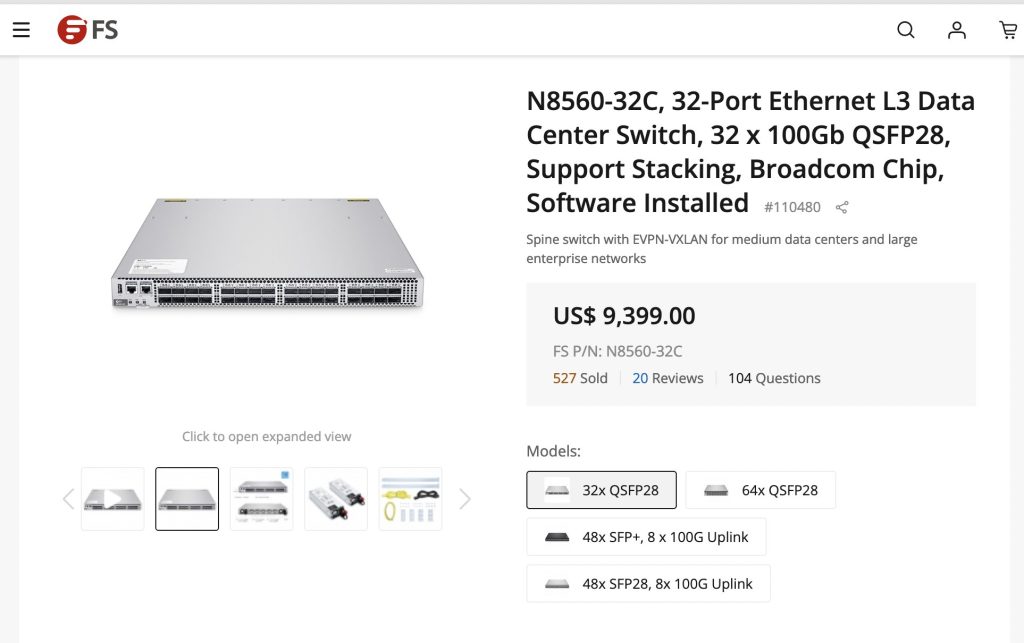

Network Pricing Concerns?

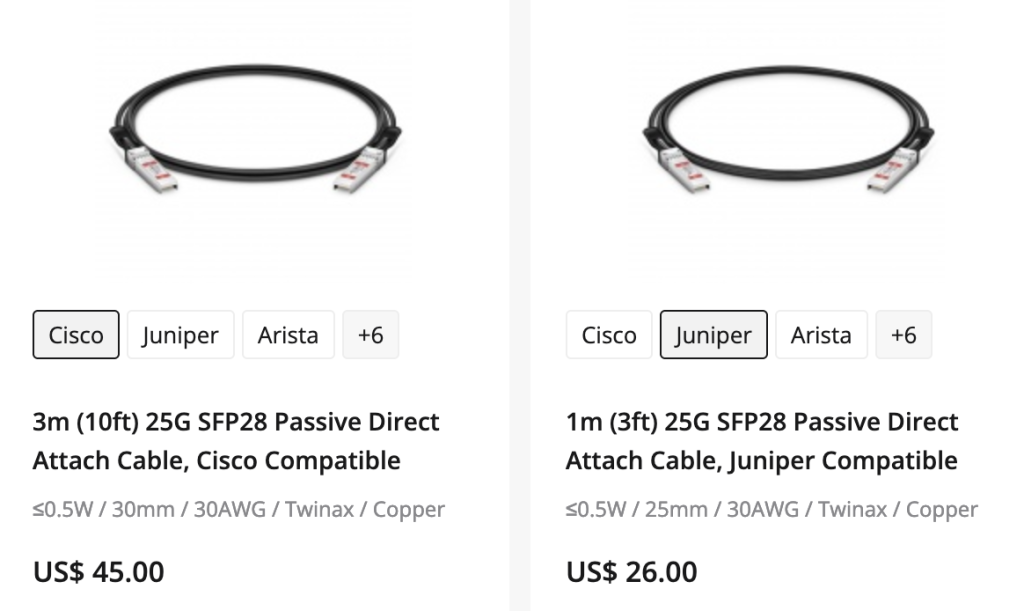

Talking to customers, there are some VERY aggressively priced 100Gbps switches on the market that can even support RDMA. Mellanox has a number of reduced port count switches, and Trident3+ based ASICs are helping drive down the cost of denser 100Gbps deployments. I have heard concerns about optics costs being the reason customers want to stay with older 10Gbps, but I would encourage customers to look at either Active Optical Cables (AOC), which include the transceiver with the cable as a single piece, or passive copper Direct Attach Cables (DAC). A number of suppliers offer these at incredibly cost-effective price points.

Memory

Currently the minimum ReadyNode profiles for vSAN ESA feature 512GB of memory. I would like to be clear: ESA is not using all of that, and is actually quite efficient in its memory usage. We are looking to introduce ROBO/Edge profiles that will support a smaller memory footprint (if you have specific needs in this space, talk to your account team and have them engage with product management!). A key design principle for ESA was to support large amounts of data without metadata scaling becoming a challenge for memory/CPU/IO overhead. For this reason we use a high-performance key-value store designed for fast NVMe devices that can handle inserts at 10x the speed of traditional options with reduced write amplification.

Previously in vSAN OSA, to use more than 1GB of memory for read cache, you had to use vRealize AI. In the first release of ESA, the host local object manager read cache has been increased by a fixed amount. For heavily cache-friendly workloads or benchmarks (small block reads), you may notice some extreme outliers in improved performance.

Other Hardware

TPMs – Remember, while not an explicit vSAN requirement, you should have TPM 2.x devices on all hosts. This is critical for a number of host security features (host attestation, configuration encryption), and it enables the secure caching of keys on the local host for vSAN encryption.

Boot Devices – Mirrored M.2 boot devices are the “default standard” for ReadyNodes, and popular as they do not waste drive bays, allow local logging, are highly durable and cost effective.

Conclusion

In many ways, vSAN ESA feels operationally the same as before, but a number of different capabilities and improvements should be considered in the design and sizing of new clusters. Ultimately, proper design should in the majority of cases deliver a vSAN ESA cluster that offers more capacity and more performance at a lower price than vSAN OSA is able to deliver.
