When we announced the initial availability of VMware Private AI Foundation with NVIDIA at the NVIDIA GTC Conference in March 2024, we published a technical blog describing the main features of the product, which is an add-on to VMware Cloud Foundation. That technical overview remains the place to read about those main features.

With the announcement in May 2024 that VMware Private AI Foundation with NVIDIA is now generally available, we review below some additional updates that our engineers achieved while the GA version of the VCF Add-On product was being prepared for delivery.

1. More GPU Metrics and Heatmaps in VCF Operations

At initial availability, we showed you some of the VCF Operations capabilities for viewing GPU core and GPU memory consumption levels. Now, with the general availability of VMware Private AI Foundation with NVIDIA, two new aspects of GPU performance can be seen.

Firstly, VCF Operations now shows you a dashboard summary of the GPU-equipped clusters in your environment. As seen here, we can quickly determine that GPU memory is at a premium in this cluster.

VCF Operations – showing GPU-equipped clusters

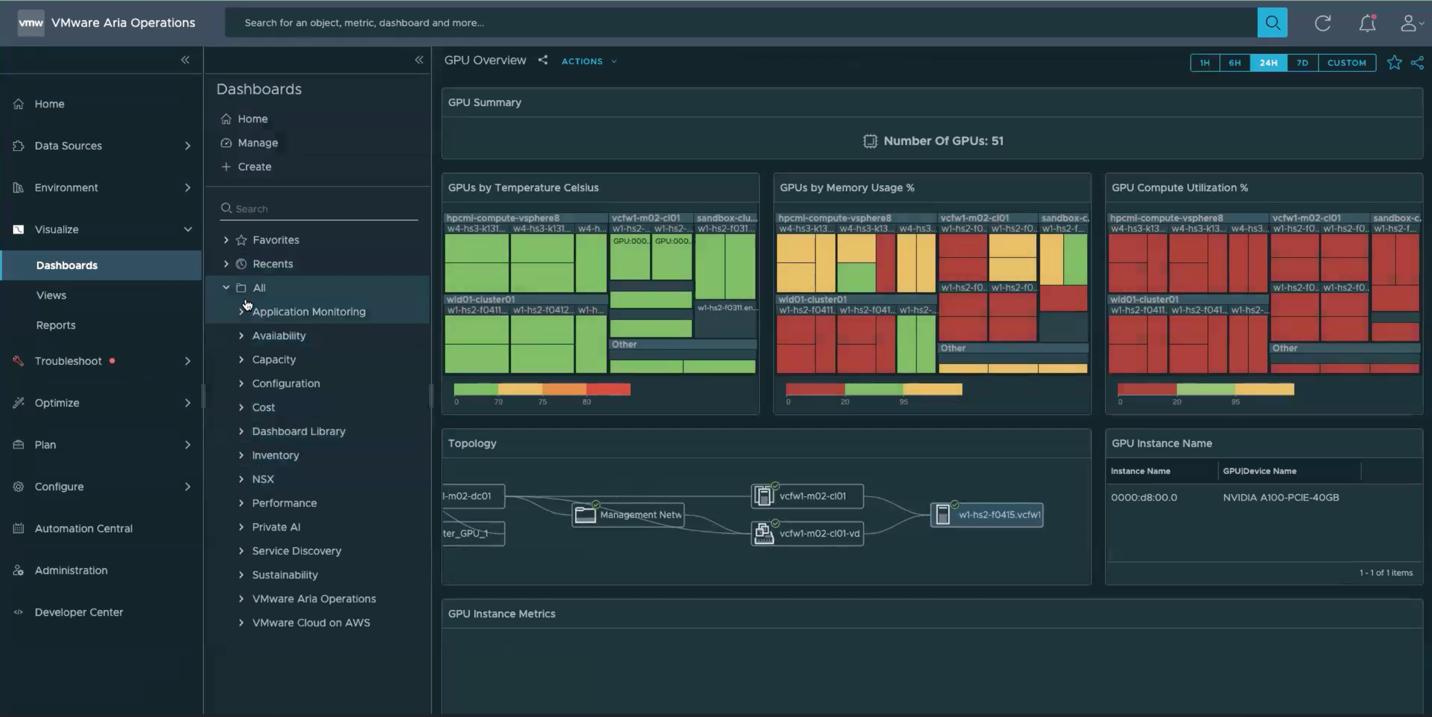

Secondly, we added visibility into the temperature at which the GPUs are operating. This is important so that we can get early warnings of GPU overheating conditions. Here, the view under Dashboards – Application Monitoring shows three panels measuring different quantities for the GPUs in multiple clusters and hosts. These are temperature (where red indicates a high temperature), GPU memory usage (where red indicates low usage) and GPU compute utilization (where red indicates low utilization). We chose red for low values in the latter two panels because it draws your attention to GPUs that are not being used to their full capacity.
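The color logic described above runs in opposite directions for temperature and for usage, which is easy to misread. The following Python sketch illustrates that rule only; it is not how VCF Operations is implemented. The threshold values, the `GpuSample` type and the `tile_colors` function are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; VCF Operations
# defines its own color boundaries.
TEMP_HIGH_C = 85.0      # at or above this, the temperature tile is red
LOW_USAGE_PCT = 20.0    # at or below this, a usage tile is red


@dataclass
class GpuSample:
    name: str
    temperature_c: float
    memory_used_pct: float
    compute_util_pct: float


def tile_colors(sample):
    """Return the color of each of the three heatmap tiles for one GPU."""
    return {
        # Temperature: red flags a HIGH reading (overheating risk).
        "temperature": "red" if sample.temperature_c >= TEMP_HIGH_C else "green",
        # Memory and compute: red flags a LOW reading (under-used GPU).
        "memory": "red" if sample.memory_used_pct <= LOW_USAGE_PCT else "green",
        "compute": "red" if sample.compute_util_pct <= LOW_USAGE_PCT else "green",
    }


idle_gpu = GpuSample("gpu-0", temperature_c=45.0, memory_used_pct=5.0, compute_util_pct=2.0)
busy_hot_gpu = GpuSample("gpu-1", temperature_c=90.0, memory_used_pct=80.0, compute_util_pct=95.0)
print(tile_colors(idle_gpu))
print(tile_colors(busy_hot_gpu))
```

Under these assumed thresholds, a cool but idle GPU shows red in the memory and compute panels, while a busy, overheating GPU shows red only in the temperature panel.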

VCF Operations – with GPU Heatmaps

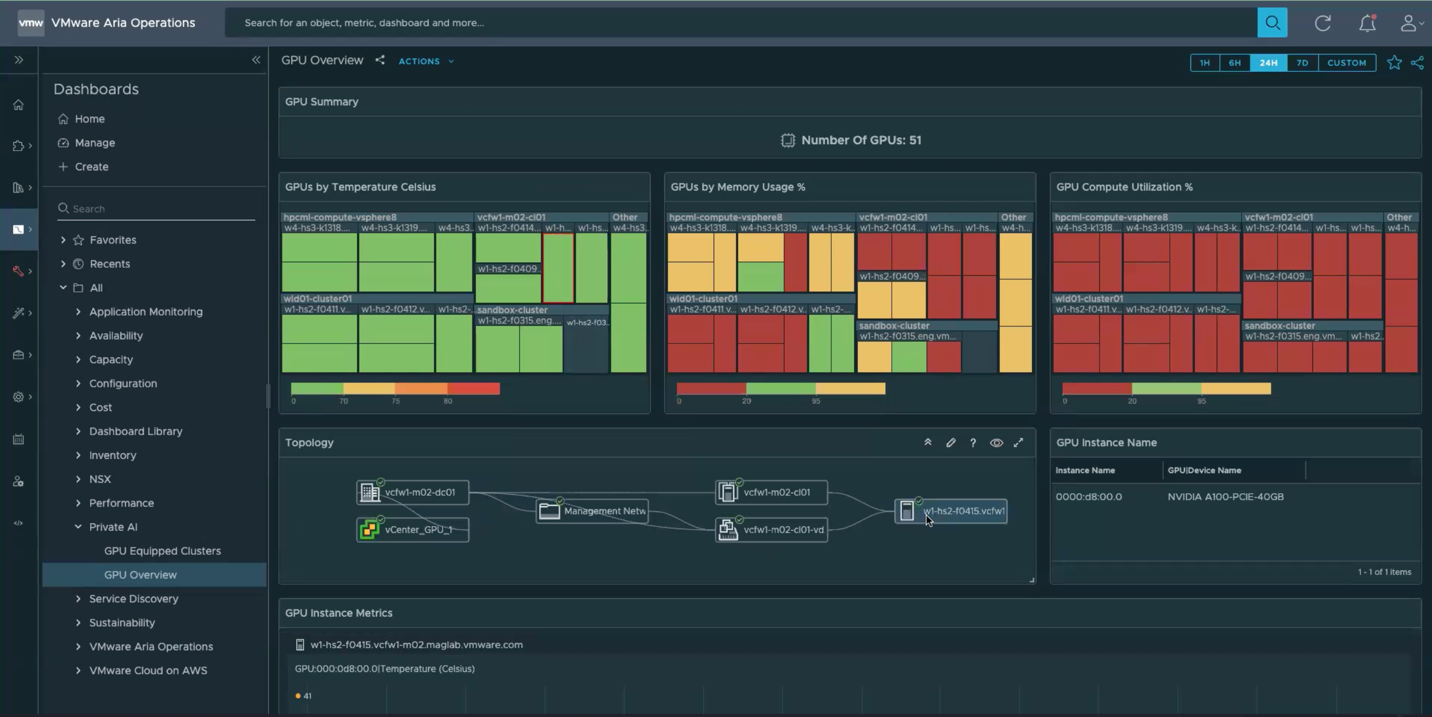

By selecting a GPU tile in this dashboard, we can drill down to see the GPU location, such as the data center, cluster, and host where the GPU is present, along with GPU properties (instance name and device type, seen on the bottom right panel). This is seen below where we have highlighted one panel for one host under the vcfw1-m02-cl01 cluster. We can drill down from Cluster to Host to GPU to get more details if we need to. There are many more features for comparing host GPU usage across your clusters and troubleshooting issues that you will find in our VCF Operations documents.

VCF Operations with Drill-down to the Topology for a particular GPU

2. Automation of PAIF-N Infrastructure Setup

To help our users deploy the new GA release of VMware Private AI Foundation with NVIDIA, VMware's VCF engineering team produced a set of four example PowerCLI scripts. These example scripts are available for use and can be downloaded from the GitHub page:

https://github.com/vmware/PowerCLI-Example-Scripts/tree/master/Scripts/PAIF-N

The four initial example PowerCLI scripts help you to automate the setup of the infrastructure prerequisites for running the VMware Private AI Foundation with NVIDIA suite. A fully deployed VMware Private AI Foundation with NVIDIA infrastructure hosts a set of NVIDIA AI Enterprise containers/microservices that support Large Language Models and Retrieval Augmented Generation (RAG) designs. These are the NVIDIA NIM and NVIDIA NeMo Retriever Microservices seen in the architecture.

The PowerCLI scripts here focus on the infrastructure setup. The scripts take us all the way to the point of being ready to deploy deep learning VMs from the content library. The PowerCLI scripts do not install the containers/microservices, but they prepare the infrastructure for doing so.

The PowerCLI example scripts help you with:

- Creation of a VCF workload domain involving a set of VCF host servers

- Setting up the NVIDIA vGPU Manager (host driver) using vLCM, on your hosts

- Creation of an NSX Edge Cluster within a workload domain, that will serve as the external access mechanism for connecting from your AI workload to the external world

- Enabling the Workload Control Plane (i.e. the Kubernetes Supervisor Cluster) for your vCenter within the workload domain. The Supervisor Cluster is used for the creation of deep learning VMs or Kubernetes nodes from templates in the VMware Private AI Foundation with NVIDIA content library. That content library is also created by the script, along with a set of VM class objects derived from the example vGPU profiles mentioned in the script. When creating a deep learning VM or a node in a Kubernetes cluster, the end user chooses a VM class to associate with the VMs in their deployment. The script also associates the content library with the appropriate VM classes.

The PowerCLI scripts must be customized before you use them in your own environment. Examples of the items within the PowerCLI scripts that you customize for your own installation are:

- local IP addresses

- storage policy names

- workload domain names

- NSX Edge Cluster-specific names

- content library names

- vGPU profiles required

- vCPU count for VMs

- RAM sizes for VMs

- and, of course, passwords

among other customizable items.
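Before running customized scripts against real hardware, it can help to sanity-check the values you have filled in. The Python sketch below is one hypothetical way to do that outside the PowerCLI scripts themselves. It is not part of the published scripts: the setting keys, the example values and the `validate_settings` function are assumptions made for illustration, and the actual variable names in the scripts will differ.

```python
import ipaddress

# Hypothetical setting keys mirroring the customizable items listed above.
REQUIRED_NAMES = ("storage_policy", "workload_domain", "edge_cluster", "content_library")


def validate_settings(settings):
    """Return a list of problems; an empty list means the values look usable."""
    problems = []
    for ip in settings.get("ip_addresses", []):
        try:
            ipaddress.ip_address(ip)
        except ValueError:
            problems.append(f"invalid IP address: {ip}")
    for key in REQUIRED_NAMES:
        if not str(settings.get(key, "")).strip():
            problems.append(f"missing name: {key}")
    if not settings.get("vgpu_profiles"):
        problems.append("no vGPU profiles listed")
    for key in ("vm_vcpu_count", "vm_ram_gb"):
        value = settings.get(key)
        if not isinstance(value, int) or value <= 0:
            problems.append(f"{key} must be a positive integer")
    if not settings.get("password"):
        problems.append("password not set")
    return problems


# Example values are placeholders, not recommendations.
example = {
    "ip_addresses": ["192.0.2.10", "192.0.2.11"],
    "storage_policy": "example-storage-policy",
    "workload_domain": "example-wld",
    "edge_cluster": "example-edge-cluster",
    "content_library": "example-content-library",
    "vgpu_profiles": ["example-vgpu-profile"],
    "vm_vcpu_count": 8,
    "vm_ram_gb": 64,
    "password": "placeholder-read-from-a-secret-store",
}
print(validate_settings(example))
```

A check like this only catches obviously malformed values; it does not confirm that a storage policy or vGPU profile with that name actually exists in your environment.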

Once you have edited the scripts for your own on-premises VCF environment, they let you repeat the setup on other sets of hardware (or re-create it) in an automated way, making VMware Private AI Foundation with NVIDIA even easier to implement for your data science teams.

Summary

In the newly released generally available version of the VMware Private AI Foundation with NVIDIA suite, we see additional functionality in the form of (1) new GPU monitoring capabilities in VCF Operations and (2) new example PowerCLI scripts to help you with the setup of the infrastructure underlying your data science containers. These features, together with the powerful automation and deep learning VMs described in our earlier blog, will speed your way towards deploying LLMs and GenAI on VMware Cloud Foundation.
