Generative AI (Gen AI) is one of the top emerging trends that will transform enterprises in the next 5 to 10 years. At the heart of this wave in AI innovation are large language models (LLMs) that process large and varied data sets. LLMs let people interact with AI models through natural language text or speech.

Investment and activity in LLM research and development have increased significantly, resulting in updates to existing models and the release of new ones such as Gemini (formerly Bard), Llama 2, PaLM 2, and DALL-E. Some are open-source, while others are proprietary to companies such as Google, Meta, and OpenAI. Over the next several years, Gen AI’s value will be driven by fine-tuned, customized, domain-specific models unique to each business and industry. Another big development in using LLMs is Retrieval Augmented Generation (RAG), where LLMs are attached to large and varied data sets so businesses can interact with the LLM about the data.

VMware by Broadcom delivers software that modernizes, optimizes, and protects the workloads of the world’s most complex organizations in the data center, across all clouds, in any app, and out to the enterprise edge. VMware Cloud Foundation software helps enterprises innovate and transform their business and embrace a broad choice of AI apps and services. VMware Cloud Foundation provides a unified platform for managing all workloads, including VMs, containers, and AI technologies, through a self-service, automated IT environment.

In August 2023, at VMware Explore in Las Vegas, we announced VMware Private AI and VMware Private AI Foundation with NVIDIA.

Today at NVIDIA GTC, we are excited to announce the Initial Availability of VMware Private AI Foundation with NVIDIA.

VMware Private AI Foundation with NVIDIA

Broadcom and NVIDIA aim to unlock the power of Gen AI and unleash productivity with a joint Gen AI platform: VMware Private AI Foundation with NVIDIA.

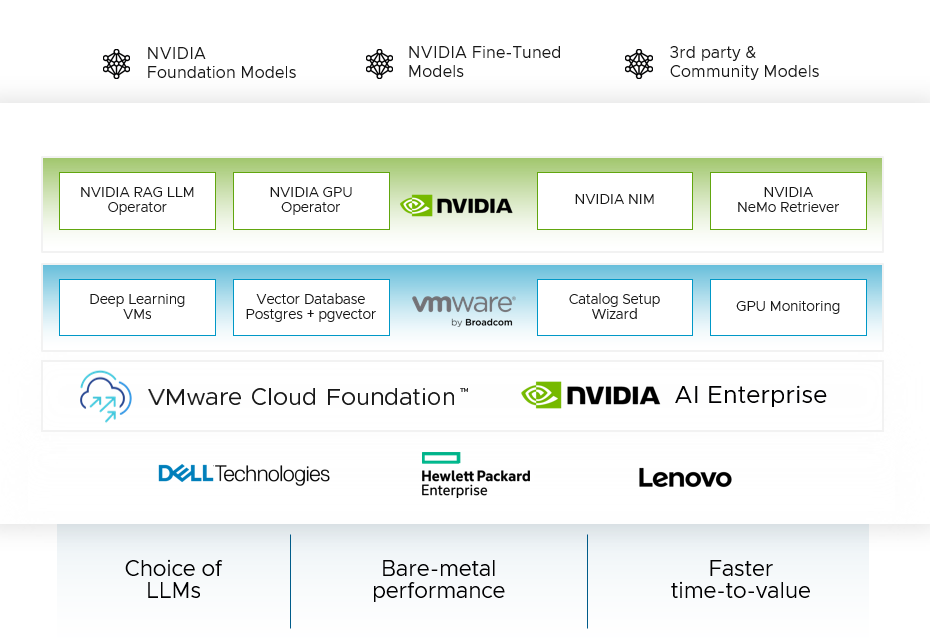

Built and run on the industry-leading private cloud platform, VMware Cloud Foundation, VMware Private AI Foundation with NVIDIA comprises the new NVIDIA NIM inference microservices, AI models from NVIDIA and others in the community (such as Hugging Face), and NVIDIA AI tools and frameworks, which are available with NVIDIA AI Enterprise licenses.

This joint Gen AI platform enables enterprises to run RAG workflows, fine-tune and customize LLMs, and run inference workloads in their data centers, addressing privacy, choice, cost, performance, and compliance concerns. It simplifies Gen AI deployments for enterprises by offering an intuitive automation tool, deep learning VM images, a vector database, and GPU monitoring capabilities. This platform is an add-on SKU on top of VMware Cloud Foundation. Note that NVIDIA AI Enterprise licenses will need to be purchased separately from NVIDIA.

Key Benefits

Let’s understand the key benefits of VMware Private AI Foundation with NVIDIA:

- Enable Privacy, Security, and Compliance of AI Models: VMware Private AI Foundation with NVIDIA brings an architectural approach to AI services that enables privacy, security, and control of corporate data, along with more integrated security and management. VMware Cloud Foundation provides advanced security features such as Secure Boot, Virtual TPM, VM encryption, and more. NVIDIA AI Enterprise services include management software for workload and infrastructure utilization to scale AI model development and deployment. The software stack for AI comprises over 4,500 open-source software packages, including third-party and NVIDIA software. NVIDIA AI Enterprise services also include patches for critical and high CVEs (common vulnerabilities and exposures) with production branches and long-term support branches, as well as maintenance of API compatibility across the entire stack. VMware Private AI Foundation with NVIDIA enables on-premises deployments that give enterprises the controls to address many regulatory compliance challenges without a major re-architecture of their existing environment.

- Get Accelerated Performance for Gen AI Models regardless of the chosen LLMs: Broadcom and NVIDIA have enabled the software and hardware capabilities to extract maximum performance for your Gen AI models. These integrated capabilities, built into the VMware Cloud Foundation platform, include GPU monitoring, live migration, and load balancing; Instant Cloning (the ability to deploy multi-node clusters with models pre-loaded within a few seconds); virtualization and pooling of GPUs; and scaling of GPU input/output with NVIDIA NVLink and NVIDIA NVSwitch. The latest benchmark study compared AI workloads on the VMware + NVIDIA AI-Ready Enterprise Platform against bare metal. The results show performance that is similar to and, sometimes, better than bare metal. Hence, putting AI workloads on virtualized solutions preserves performance while adding the benefits of virtualization, such as ease of management and enhanced security. NVIDIA NIM allows enterprises to run inference on a range of optimized LLMs, from NVIDIA models to community models like Llama 2 to open-source LLMs available through Hugging Face, with exceptional performance.

- Simplify Gen AI Deployment and Optimize Costs: VMware Private AI Foundation with NVIDIA empowers enterprises to simplify deployment and achieve a cost-effective solution for their Gen AI models. It offers capabilities such as a vector database for enabling RAG workflows, deep learning VMs, and a quick-start automation wizard, giving enterprises a simplified deployment experience. The platform provides unified management tools and processes, and it enables the virtualization and sharing of infrastructure resources such as GPUs, CPUs, memory, and networks. Together, these lead to significant cost reductions, especially for inference use cases where full GPUs may not be necessary.

Architecture

VMware Cloud Foundation, a full-stack private cloud infrastructure solution, and NVIDIA AI Enterprise, an end-to-end, cloud-native software platform, form the bedrock of the VMware Private AI Foundation with NVIDIA platform. Together, they provide enterprises with the capability to launch private and secure Gen AI models.

Key capabilities to highlight:

- VMware-engineered special capabilities: Let’s examine each of these in detail.

- Deep Learning VM templates: Configuring a deep learning VM can be a complex and time-consuming process. Manual creation can lead to inconsistency, and hence missed optimization opportunities, across the different development environments. VMware Private AI Foundation with NVIDIA provides deep learning VMs that come pre-configured with the required software, such as frameworks from NVIDIA NGC, libraries, and drivers, saving users from the burden of setting up each component.

- Vector Databases for enabling RAG workflows: Vector databases have become very important for RAG workflows. They enable fast querying of data and real-time updates to enhance the outputs of the LLMs without requiring retraining of those LLMs, which can be very costly and time-consuming. They have become the de facto standard for Gen AI and RAG workloads. VMware has enabled vector databases by leveraging pgvector on PostgreSQL. This capability is managed through the native infrastructure automation and management experience for data services in VMware Cloud Foundation. Data Services Manager simplifies the deployment and management of open-source and commercial databases from a single pane of glass.

- Catalog Setup Wizard: Infrastructure provisioning for AI projects involves several complex steps. These steps are carried out by LOB admins who specialize in selecting and deploying the appropriate VM classes, Kubernetes clusters, vGPUs, and AI/ML software, such as the containers in the NGC catalog. In most enterprises, data scientists and DevOps spend a significant amount of time assembling the infrastructure they require for AI/ML model development and production. The resulting infrastructure may not be compliant and scalable across teams and projects. Even with streamlined AI/ML infrastructure deployments, data scientists and DevOps can spend a significant amount of time waiting for LOB admins to design, curate, and provide the necessary AI/ML infrastructure catalog objects. To address these challenges, VMware Cloud Foundation introduces the Catalog Setup Wizard, a new Private AI Automation Services capability. On Day 0, LOB admins can efficiently design, curate, and provide optimized AI infrastructure catalog items through VMware Cloud Foundation’s self-service portal. After publication, DevOps and data scientists can access the Machine Learning (ML) catalog items and deploy them with minimal effort. The Catalog Setup Wizard reduces the manual workload for admins and shortens the waiting time for DevOps and data scientists by simplifying the process of creating a scalable and conformant infrastructure.

- GPU Monitoring: By gaining visibility into GPU usage and performance metrics, organizations can make informed decisions to optimize performance, ensure reliability, and manage costs in GPU-accelerated environments. With the launch of VMware Private AI Foundation with NVIDIA, we are excited to introduce GPU monitoring capabilities in VMware Cloud Foundation. These provide views of GPU resource utilization across clusters and hosts alongside the existing host memory and capacity consoles, giving admins the ability to optimize GPU usage and, hence, performance and cost.

- Exciting Capabilities in NVIDIA AI Enterprise

- NVIDIA NIM: NVIDIA NIM is a set of easy-to-use microservices designed to speed up the deployment of Gen AI across enterprises. These versatile microservices support NVIDIA AI Foundation Models: a broad spectrum of models, from leading community models to NVIDIA-built models to bespoke custom AI models optimized for the NVIDIA accelerated stack. Built on the foundations of NVIDIA Triton Inference Server, NVIDIA TensorRT, TensorRT-LLM, and PyTorch, NVIDIA NIM is engineered to facilitate seamless AI inferencing at scale, helping developers deploy AI in production with agility and assurance.

- NVIDIA NeMo Retriever: NVIDIA NeMo Retriever, part of the NVIDIA NeMo platform, is a collection of NVIDIA CUDA-X Gen AI microservices enabling organizations to seamlessly connect custom models to diverse business data and deliver highly accurate responses. NeMo Retriever provides world-class information retrieval with the lowest latency, highest throughput, and maximum data privacy, enabling organizations to make better use of their data and generate business insights in real time. NeMo Retriever strengthens Gen AI applications with enhanced RAG capabilities, which can be connected to business data wherever it resides.

- NVIDIA RAG LLM Operator – The NVIDIA RAG LLM Operator makes it easy to deploy RAG applications into production. The operator streamlines the deployment of RAG pipelines developed using NVIDIA AI workflow examples into production, without rewriting code.

- NVIDIA GPU Operator – The NVIDIA GPU Operator automates the lifecycle management of the software required to use GPUs with Kubernetes. It enables advanced functionality, including better GPU performance, utilization, and telemetry. GPU Operator allows organizations to focus on building applications, rather than managing Kubernetes infrastructure.

- Major Server OEM Support – This platform is supported by the major server OEMs such as Dell, HPE and Lenovo.
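To make the vector database's role in a RAG workflow more concrete, here is a minimal, illustrative sketch of the retrieval step in plain Python. It is not how the platform is implemented: the passages, the three-dimensional embeddings, and the function names (`cosine_similarity`, `retrieve`, `build_prompt`) are all hypothetical. In a real deployment, an embedding model would produce the vectors and the database would do the ranking (pgvector, for instance, provides a cosine-distance operator, `<=>`).

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vec, store, k=2):
    """Return the k passages whose embeddings are most similar to the query."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

def build_prompt(question, passages):
    """Place the retrieved passages in front of the question for the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical toy data: (passage, embedding) pairs. Real embeddings come
# from an embedding model and have hundreds or thousands of dimensions.
STORE = [
    ("GPU hosts are patched on the first Sunday of each month.", [0.9, 0.1, 0.0]),
    ("Expense reports are due by the 5th.", [0.0, 0.2, 0.9]),
    ("vGPU profiles are assigned per VM class.", [0.8, 0.3, 0.1]),
]

# Hypothetical embedding of the user's question.
QUESTION = "When are GPU hosts patched?"
QUERY_VEC = [1.0, 0.2, 0.0]

passages = retrieve(QUERY_VEC, STORE, k=2)
prompt = build_prompt(QUESTION, passages)
print(prompt)
```

Because the knowledge lives in the store rather than in the model's weights, updating a passage changes the next answer without retraining the LLM, which is the property the vector database bullet above describes.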

Ready to get started on your AI and ML journey? Check out these helpful resources:

- Complete this form* to request access!

- Read the VMware Private AI Foundation with NVIDIA solution brief

- Learn more about VMware Private AI

- Visit the VMware virtual booth at NVIDIA GTC 2024

Connect with us on Twitter at @VMwareVCF and on LinkedIn at VMware VCF.

*Submitting this form does not guarantee access to software. Availability is subject to approval and may be limited.
