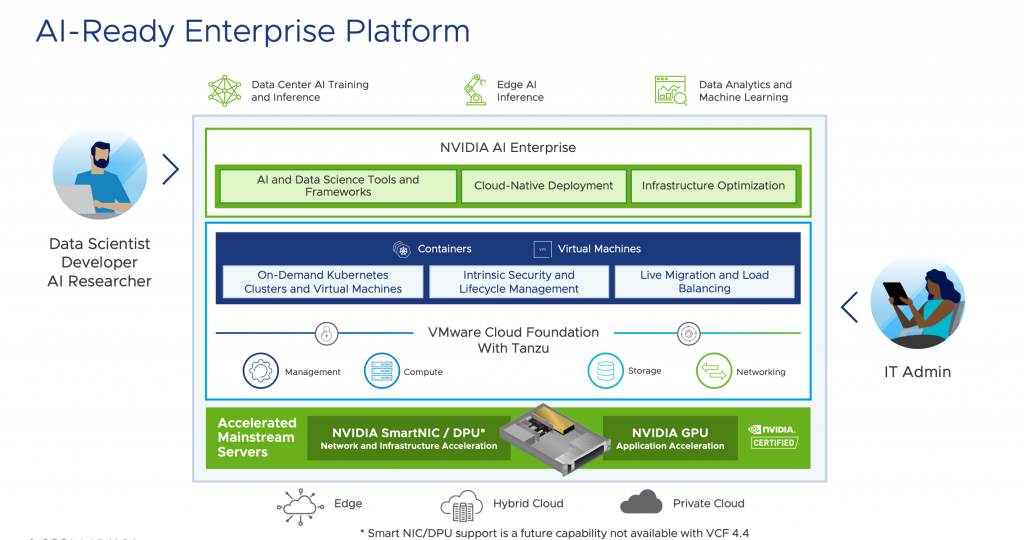

As anticipation builds for this week’s NVIDIA GTC event, it’s a great opportunity to highlight the latest integration between NVIDIA and VMware. We have expanded support for NVIDIA AI Enterprise 1.1 for private and hybrid-cloud deployments with VMware Cloud Foundation 4.4. This latest integration streamlines deployment of AI-ready infrastructure and democratizes AI for every enterprise. The result is an AI-ready enterprise platform that combines the benefits of the full-stack VMware Cloud Foundation with Tanzu environment and the NVIDIA software suite, running on NVIDIA-certified, GPU-accelerated mainstream servers using VM and container infrastructure. This solution provides IT administrators with the tools and frameworks needed to successfully develop and deploy accelerated AI-ready infrastructure while retaining full control of infrastructure resources. Learn about this and much more in this pre-GTC technical blog by NVIDIA.

These critical advantages were also detailed in a white paper written by Enterprise Strategy Group Senior Analysts Paul Nashawaty and Mike Leone, titled Enabling an AI-ready Infrastructure with VMware. In the paper, Paul and Mike explore the challenges of deploying high-performance, GPU-accelerated infrastructure for AI applications. ESG also highlights the opportunity created by using full-stack Hyper-Converged Infrastructure (HCI) as a strategic element to integrate GPU technology into mainstream AI deployments.

Challenges for Successful AI Deployments

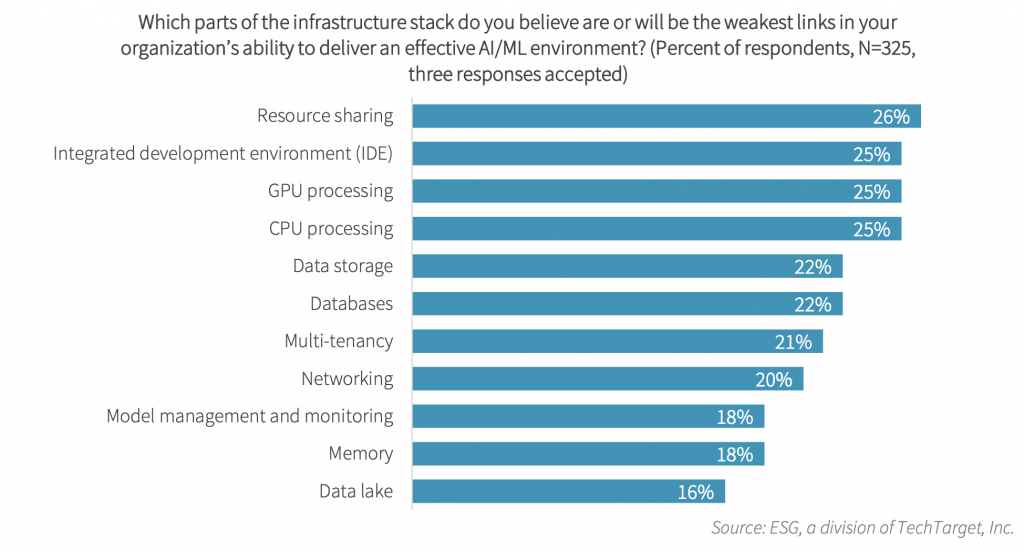

To successfully implement GPU-accelerated infrastructure in support of AI deployments, IT leadership needs to consider several key challenges when planning to integrate AI workloads into mainstream IT environments.

According to ESG analysts, two specific areas require particular focus:

1. Skill Gaps Caused by Infrastructure Shortcomings

As organizations ramp up AI initiatives, the first challenge comes in the form of an existing skills shortage in AI. This is particularly troublesome because IT often outright owns the final decision for AI infrastructure purchases and deployments. The problem is exacerbated by AI infrastructure that typically requires manual optimization and tight integration of several hardware and software components and resources to reach the desired outcome. Many organizations have learned the hard way that simply adding a GPU or two to an existing infrastructure deployment does not yield the results the business desires. Legacy infrastructure technology simply cannot keep up with the performance and concurrency demands of AI workloads, resulting in inadequate processing power, storage capacity, and networking capabilities.

2. AI Lifecycle Bottlenecks

At the systems level, it is often difficult to integrate all of the tools, technologies, and underlying data infrastructure. This difficulty can create several bottlenecks, all of which impact the time to value of these critical AI initiatives. When organizations were surveyed on the areas of the AI lifecycle that cause the most headaches, ESG Research found that the biggest challenges were at the beginning and end of the cyclical AI lifecycle.

Of course, IT infrastructure teams are tasked with pulling these systems together regardless of these challenges, and they have found that only full-stack solutions can provide an architecture with the flexibility needed for successful deployments. Solutions like VMware Cloud Foundation with Tanzu, integrated with NVIDIA AI Enterprise, enable IT and SRE teams to standardize on HCI combined with a full suite of NVIDIA AI tools to simplify resource allocation and management. This flexibility delivers infrastructure that is agile enough to flex based on AI workload demand, from experimentation and testing, to training and tuning, and eventually to deploying models for inferencing.

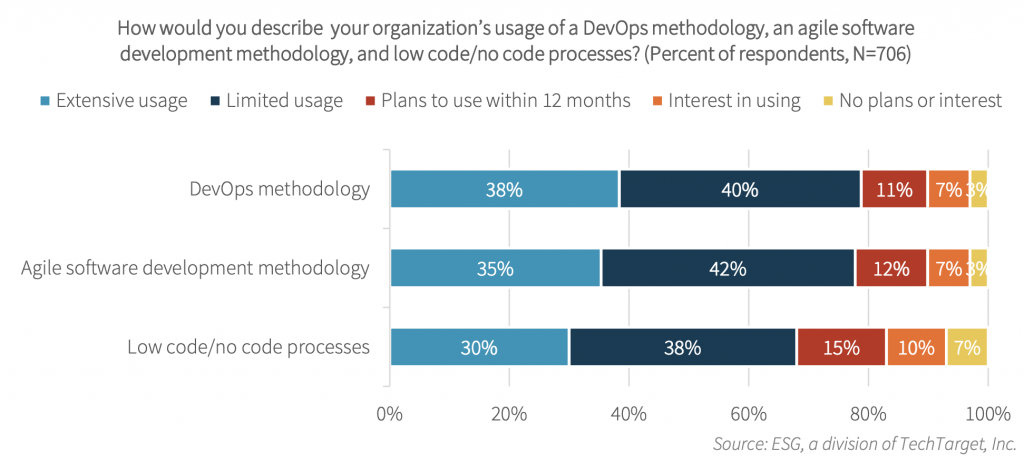

Bringing DevOps and AI Together for Data Science Agility

Lines of business and IT infrastructure teams are both seeking methodologies to simplify the development of AI processes through the increased agility that DevOps provides. For AI workloads, reconfiguring infrastructure and critical components such as GPUs needs to be optimized for tasks like workload placement and mobility. This requires an agile methodology to rapidly deliver against business needs. DevOps methodologies address consistency and deliver rapid results from development to deployment. In its latest white paper, ESG also points out that there is a clear relationship between DevOps methodologies and the delivery of modern applications.

Storage Class Memory to Support AI/ML on HCI

ESG also details specific considerations for using Storage Class Memory (SCM) to increase storage performance in modern storage systems. The point the analysts make is that architecting systems with SCM to support AI/ML workloads delivers significant benefits in response time and performance when accessing data. When compared to DRAM, SCM offers increased density, lower power consumption, and improved affordability. SCM can be used in the storage array back end to provide a caching tier for hot data with ultra-low latency access times, a highly desirable capability to support demanding AI/ML workloads. SCM in the cloud also increases value-added performance. Distributed and multi-cloud applications leverage the performance benefits of SCM. Cloud-based data centers offering data residing on SCM tiers reduce latency and gain the same performance characteristics found across the full storage hierarchy.

Full Stack HCI Provides the Flexibility and Scale to Accelerate AI Initiatives

ESG concludes that with VMware Cloud Foundation and NVIDIA AI Enterprise, organizations gain access to AI-ready infrastructure building blocks that improve operational efficiency, scale easily, and improve AI time to value. Through access to virtualized GPU instances and container management constructs, IT is positioned to deliver an effective infrastructure that supports the dynamic concurrency demands of AI. Regardless of where your organization is on its AI journey, an AI-ready infrastructure that lets you effectively scale the use of AI with your business should be on your short list.

Resources and GTC Sessions

If you’re planning to attend the NVIDIA GTC conference, there are a few sessions and resources that will be of interest to you. You can also visit the sponsor page and listen to our experts.

Here is a quick summary of our sponsored sessions you’ll want to attend:

- **Unleash and Democratize AI for All Enterprises with NVIDIA DPUs and VMware (S42522)**: Join Marc Fleischman, VMware cloud CTO, and Michael Kagan, CTO of NVIDIA, to learn how NVIDIA and VMware are unleashing and democratizing AI for all enterprises.

- **Machine Learning in the Enterprise with Kubernetes on VMware vSphere (S42512)**: Join Justin Murray, Technical Marketing Architect at VMware, and Shobhit Bhutani, Principal Product Marketing Manager at VMware, and learn how machine learning applications in the enterprise are easily enabled on VMware vSphere with Tanzu. Justin Murray will also provide a demo of how to deploy a TKG cluster, GPU Operator, and an ML app.

- **Making Virtual Real: State-of-the-art Graphics with Horizon and NVIDIA (S42533)**: Join Arindam Nag, Sr. Director of Product Management at VMware, Anirban Chakraborty, Senior Product Line Manager at VMware, and Jimmy Rotella, Senior Solutions Architect at NVIDIA, and learn how the Horizon and NVIDIA teams are delivering an immersive graphics experience to users and content creators on any device of choice.

There are also 12 additional sessions provided jointly by our experts and our partners. Visit VMware’s sponsor page to learn more about VMware and NVIDIA and to register for the sessions.

Resources:

- ESG White Paper: Enabling an AI-ready Infrastructure with VMware

- Blog: VMware at NVIDIA GTC Blog

- Solution Brief: AI/ML with NVIDIA and VMware Cloud Foundation

- Blog: Delivering AI Ready Infrastructure with NVIDIA and VMware Cloud Foundation
