Biotechnology, or Biotech for short, drives incredible levels of innovation across all facets of our lives. One of the more obvious examples of this innovation is the development of vaccines and antibiotics. But its presence is spread across a diverse set of use cases, including agricultural, industrial, and environmental problem-solving.

The common denominator across these examples is that powerful applications and technology are used to solve these very difficult challenges. Biotech companies typically use specialized applications that help with the processing, post-processing, and analysis of massive data sets and complex computational workflows. For example, molecular docking software like rDock is used for virtual screening, a technique used in drug effectiveness and discovery efforts. This type of data may also be subject to important data security requirements, which is a non-trivial task for many organizations. Solving the problems of Biotech requires sophisticated software and powerful hardware. VMware has been the platform provider for many in Biotech, including Bharat Biotech and Almac Group, but advancements in Artificial Intelligence and Machine Learning (AI/ML) introduce all-new levels of problem-solving.

While these types of applications may be unique in their focus, they share characteristics with other solutions that demand a high amount of resources. These traits may include:

- Application architectures using multiple instances of the application (traditional or cloud-native) to scale as needed.

- High levels of resource utilization (CPU, memory, disk, and network) through multithreaded processes.

- AI/ML processing driven by CPU or GPU offloading.

- The potential of large amounts of storage for structured and unstructured data.

- Residence on a platform that can scale the backing hardware resources accordingly.

With a high demand for resources, these applications may not always be the best fit for the cloud. One may occasionally see these resource-intensive applications deployed on-premises, directly on servers. The rationale is that it allows these specialized applications to take advantage of dedicated hardware while minimizing the impact on other applications in a shared infrastructure.

Harnessing a Modern Platform

Dedicated hardware placed in the corner of the data center can address short-term needs, but this ad hoc approach introduces an asterisk to the operations, management, and security compliance of an environment.

- How can application and data availability be ensured?

- How can the environment be scaled effectively?

- How are the applications and the data they produce protected?

- How are the solutions and data secured?

- How can hardware contention be identified and accommodated?

For traditional applications of all types, virtualization has answered these questions for many years. But vSphere and the family of products it belongs to as a part of VMware Cloud Foundation (VCF) is more than just a hypervisor. It is part of a platform that can run the most demanding of workloads using the very latest hardware. It can power next-generation, cloud-native applications using the software you already know.

Recent enhancements demonstrate VMware’s focus on platform flexibility and performance, such as running containerized cloud-native applications using Kubernetes through VMware Tanzu or the scalability improvements found in vSphere 7 U2. Support of vSAN over RDMA found in vSAN 7 U2 gives all-new levels of network performance and efficiency, and the introduction of HCI Mesh compute clusters delivers increased flexibility for vSAN and traditional vSphere clusters. And let’s not forget VMware’s partnership with NVIDIA for offloading AI/ML processing to the GPU through the NVIDIA AI Enterprise Suite. It all adds up to a platform that is ready for big data.

Topology Options and Examples

The following are potential examples of how a vSAN powered environment could be used as a platform for a Biotech company of any discipline running computationally intensive software. They assume a proper design exercise has occurred to ensure it meets performance and availability criteria. Some elements or features of one example could be used in combinations with features in another example. The hardware specifications and cluster size could easily be tailored to the specific needs of an organization.

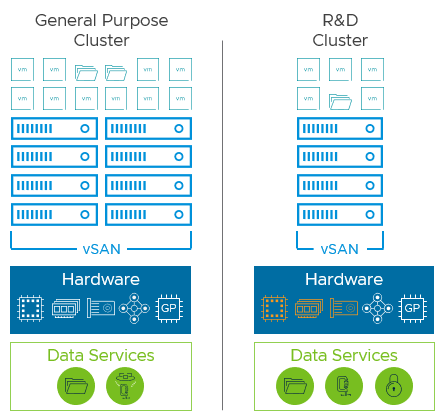

Example 1: Biotech workloads on a standard vSAN cluster

In the simplest of topologies, a standard vSAN cluster could be deployed in an environment. This could sit next to other vSAN clusters, or standard vSphere clusters. It would be managed centrally through vCenter Server, but hardware resources remain completely independent, ensuring complete isolation from other workloads outside of the cluster. This can be ideal for addressing noisy neighbor scenarios, and data services. In this example, vSAN data at rest encryption is enabled to meet potential regulatory requirements.

Figure 1. Dedicated vSAN cluster with fast hardware for Biotech workloads

Additionally, one could enable vSAN file services if there was a need to serve up large repositories of unstructured data. Data could be aggregated onto NFS mounts or SMB shares for easy access by other services or users.

This type of topology would be ideal for environments new to vSAN, as they could become familiar with how the resources could be scaled up or out incrementally to meet the needs of these resource-intensive applications.

Example 2: Biotech workloads on a vSAN cluster using high-performance hardware and offloading

New levels of performance can be introduced into a vSAN cluster through low-latency, highly efficient networking using RDMA. vSAN 7 U2 supports RDMA over Converged Ethernet (RoCE v2). Offloading of application processes to GPUs would occur courtesy of qualified GPU devices, and the NVIDIA AI Enterprise Suite of software. AI and ML are driving all types of innovations, and the use of GPUs has played a big part in the role of AI and ML in the data center.

Figure 2. Dedicated vSAN cluster with RDMA networking and GPUs for fast processing

vSAN over RDMA would reduce the “cost” of CPU cycles per I/O, as RDMA is more efficient than TCP over ethernet. This would leave more CPU cycles available for processing workload data, reducing contention. It also has the potential to deliver lower latency in cases where the network had previously been the primary bottleneck. Offloading application processes to GPUs using the NVIDIA AI Enterprise Suite can dramatically improve application performance, and can free up CPU resources for other activities. These two technologies could be used together, or independently to provide a faster platform for applications.

Example 3: Biotech workloads using Kubernetes

Some applications used in Biotech may be built in the form of a traditional application, while others are running in containers managed by Kubernetes. Available as a part of vSAN Enterprise, and packaged through VCF, one could run a combination of traditional applications, and Kubernetes powered applications in the same cluster. The stateful applications could use persistent volumes, or S3 compatible object storage on the vSAN cluster through the VMware Data Persistence Platform and one of our certified partners.

Figure 3. Dedicated vSAN cluster running vSphere with Tanzu to run Biotech cloud native applications

This type of topology would be ideal in terms of providing a platform that could easily run applications using containers and cloud-native storage, but running the platform on-premises to control costs and deliver the highest levels of performance.

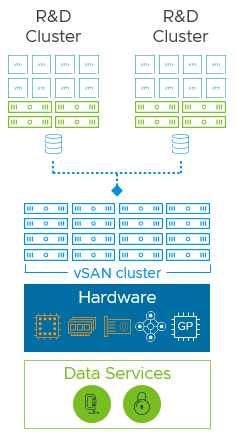

Example 4: Biotech workloads using vSAN courtesy of HCI Mesh

VMware HCI Mesh introduces interesting new possibilities with these types of resource-intensive applications. Consider a scenario where an environment already has a high-performing, moderately utilized vSAN cluster. One could have small vSphere clusters dedicated to the processing of these applications while using the vSAN cluster for storage. This can be particularly beneficial for applications that are licensed based on cluster size, while taking full advantage of the existing capacity and performance of the vSAN cluster.

Figure 4. Dedicated vSphere clusters for Biotech workloads using remote vSAN storage through HCI Mesh

Offloading the storage responsibilities could improve the effective performance of the applications on the HCI Mesh compute cluster (aka vSphere cluster) because fewer resources would be responsible for processing I/O when compared to a traditional vSAN cluster.

Practical guidance for vSAN powered clusters in Biotech environments

A proper sizing and design exercise should always be applied to the deployment planning of any environment, especially with resource-intensive applications. The recommendations below offer some general guidance to meet the needs of resource-intensive applications and big data found in Biotech.

- Create a dedicated vSAN cluster for these extremely resource-intensive applications. vSAN treats storage as a resource of the cluster. This can be a significant advantage over traditional architectures: a dedicated vSAN cluster ensures that resource-intensive applications will not impact other applications living in other vSAN clusters.

- Focus on VM agility and operation instead of per-host VM density. Resource-intensive VMs can be very good at utilizing hardware. This should be viewed as a good thing, as it allows processes to use all available hardware resources and complete more quickly. Virtualizing these resource-intensive VMs does not reduce the amount of resources they will use, but rather improves the manageability of the systems, as they are not bound to dedicated hardware. This is the same approach used with SAP HANA powered by VMware vSAN.

- Minimize the use of space efficiency features when performance is the top priority. Techniques such as deduplication and compression, and RAID-5/6 erasure coding, can be a great way to reduce capacity usage, but they may impact the performance of transactional, resource-intensive workloads. Using vSAN’s “compression only” option with a RAID-1/mirror data placement scheme may be a good compromise between performance and space efficiency.

- Large amounts of cold data may be a good candidate for space efficiency options. Large data sets may be kept for a variety of use cases. These could reside in large VMDKs attached to VMs, or in file shares courtesy of vSAN file services. In either case, these may be good candidates for using RAID-5/6 erasure coding for improved space efficiency. Since a storage policy can be applied to a VM, a VMDK, or a file share, you can easily tailor this to suit the capacity and performance needs.

- Use high-performing storage devices for buffering and capacity tiers. Using NVMe based 3D XPoint devices at the buffering tier, and NVMe based flash devices at the capacity tier will help reduce the chances that the storage devices will be a common point of contention. All NVMe based designs also eliminate the need for dedicated storage controllers on each host, as each NVMe device uses its own embedded storage controller.

- Use at least three disk groups per host to improve parallelization. Three disk groups is a good starting point for clusters that may be storage I/O intensive. vSAN supports up to 5 disk groups per host, which can be viewed as a way to increase parallelism and buffer capacity.

- Use buffer devices that are at or greater than vSAN’s logical buffer limit. Ensuring the buffer devices are at least 600GB in size means that you will be able to use as much buffering per disk group as vSAN allows.

- Don’t forget processing power. Not all computationally intensive workloads are I/O intensive. Some applications do much more processing than I/O generation. Assess workloads prior to sizing your vSAN cluster and the amount of processing power required in each host.

- Look to see if your apps support offloading to GPUs. If they do, then the NVIDIA AI Enterprise Suite paired with vSphere and vSAN 7 U2 and later will allow them to take advantage of GPU processing power.

- Use high-performing, 25/100Gb switchgear. vSAN relies on reliable, high-performing switchgear that has the processing, backplane, and buffering capabilities necessary for transacting high levels of packets per second. Unfortunately, many value-based switches lack those traits. For resource-intensive workloads, use 25/100Gb switchgear for your environment. vSAN using RDMA requires extra levels of attention to ensure there is compliance with hardware certification.

- Use recommended practices when assigning virtual hardware to resource-intensive VMs. Whether the resource-intensive VMs are running in traditional three-tier architecture, or on vSAN, there are optimizations to the assignment and configuration of virtual hardware that may offer improved levels of performance for your VMs.

- Install vSphere/vSAN on persistent flash devices such as an SSD, M.2, U.2, or BOSS module. Using SD cards or USB sticks for the hypervisor was popular at one time, but that trend is fading away fast due to the questionable quality of those devices, and the lack of ability to assign persistent host logging to devices. For the best levels of reliability, stay away from SD cards and USB devices as the hypervisor installation target.

- Base your successes on batch processing times and management agility, not synthetic benchmarks. While HCIBench can be a great way to make sure your cluster is running properly prior to deployment and to establish a baseline for future reference, the success of the platform will be judged on how well it can meet all of the requirements, including batch processing times, high availability scenarios, etc.

- Share the success. Introducing innovative approaches to solving difficult problems for your internal consumers can help position the effort as a collaborative benefit to your organization instead of a cost burden. Showcasing the collection of benefits to the organization can help win buy-in from key stakeholders if the environment needs to grow.

- Understand your disaster recovery (DR) requirements. Protecting important workflows and the big data they generate can be challenging. Planning for DR is about maintaining uptime and continuity in the event of a larger scale outage. Depending on the needs and size of an environment, one could use VMware Site Recovery Manager as a DR solution across company-owned sites or, in single-site environments, VMware Cloud Disaster Recovery to meet business continuity requirements courtesy of the cloud, all using an easy SaaS-based, pay-as-you-go model.
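To make the capacity and buffering guidance above a little more concrete, here is a minimal Python sketch of the arithmetic involved. It is only a back-of-the-envelope aid, not a replacement for a proper sizing exercise. The function names are hypothetical, and the multipliers assume a failures-to-tolerate level of 1 for RAID-1 and RAID-5 (a 3+1 stripe) and 2 for RAID-6 (a 4+2 stripe). The sketch ignores deduplication, compression, and any free space held in reserve. The 600GB buffer limit and the maximum of 5 disk groups per host come from the recommendations above.

```python
# Raw-capacity multipliers per data placement scheme (assumed values):
# RAID-1 keeps two full copies, RAID-5 stripes 3 data + 1 parity,
# RAID-6 stripes 4 data + 2 parity.
RAID_OVERHEAD = {
    "RAID-1": 2.0,
    "RAID-5": 4 / 3,
    "RAID-6": 1.5,
}

LOGICAL_BUFFER_LIMIT_GB = 600   # per disk group, as stated in the guidance
MAX_DISK_GROUPS_PER_HOST = 5    # as stated in the guidance


def raw_capacity_tb(usable_tb: float, policy: str) -> float:
    """Raw capacity consumed to store `usable_tb` of data under a policy."""
    return usable_tb * RAID_OVERHEAD[policy]


def usable_buffer_gb(disk_groups: int, buffer_device_gb: float) -> float:
    """Write buffer a host can actually use across all of its disk groups."""
    if not 1 <= disk_groups <= MAX_DISK_GROUPS_PER_HOST:
        raise ValueError("disk_groups must be between 1 and 5")
    # Anything beyond the logical limit is not used for buffering.
    return disk_groups * min(buffer_device_gb, LOGICAL_BUFFER_LIMIT_GB)


if __name__ == "__main__":
    for policy in RAID_OVERHEAD:
        print(policy, round(raw_capacity_tb(30, policy), 1), "TB raw for 30 TB of data")
    print(usable_buffer_gb(3, 800), "GB of usable buffer with three 800GB devices")
```

Under these assumptions, moving a cold data set from RAID-1 to RAID-5 or RAID-6 reduces its raw footprint, while adding disk groups with buffer devices at or above the limit raises the usable buffer per host.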

Summary

A variety of technologies across Biotech are helping to address challenges that were considered unfathomable not long ago. VMware continues to adapt a platform to deliver flexibility and performance to the most demanding of applications. Biotech companies are producers and consumers of big data, and VMware is ready for it.
