vSAN and VCF environments powered by vSAN offer extraordinary flexibility in scaling. Capacity can be scaled up by adding devices to a host, or scaled out by adding more hosts. How does the use of extremely large flash devices affect a design? Let’s look at the considerations in more detail.

More Storage Capacity Equals More Potential for I/O

Storing a large amount of data at rest is not all that different than storing a small amount of data. The difference is that in a shared storage environment, more data – typically in the form of VMs – can lead to more potential access in the form of reads and writes.

In both HCI and three-tier architectures, increasing capacity can shift performance bottlenecks in unexpected ways. For example, three-tier architectures often see more stress on the storage controllers as raw storage increases. HCI architectures may see more stress on the network, because a higher number of VMs per physical host generates more write operations that must be sent to other hosts.

While high-density devices with relatively low performance capabilities can give the illusion of good price-per-TB value, they may not be able to support the performance demands of the workloads consuming the data. The capacity is technically available but may not be usable at the expected level of performance. For vSAN and VCF environments powered by vSAN, there are ways to accommodate this.
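
One way to reason about this trade-off is I/O density: the IOPS a device can deliver divided by the capacity it holds. The Python sketch below is illustrative only. The device figures and the workload's demanded IOPS per TB are hypothetical placeholders, not vendor specifications or vSAN sizing guidance.

```python
# Illustrative sketch of I/O density (IOPS per TB). All numbers used below
# are hypothetical placeholders, not vendor specifications.


def iops_per_tb(device_iops: float, device_capacity_tb: float) -> float:
    """Return the IOPS a device can deliver per TB of capacity it holds."""
    if device_capacity_tb <= 0:
        raise ValueError("device_capacity_tb must be positive")
    return device_iops / device_capacity_tb


def meets_demand(device_iops: float, device_capacity_tb: float,
                 demanded_iops_per_tb: float) -> bool:
    """True if a fully populated device can still serve the I/O density
    that the workloads stored on it are expected to demand."""
    return iops_per_tb(device_iops, device_capacity_tb) >= demanded_iops_per_tb


if __name__ == "__main__":
    demand = 10_000  # hypothetical workload demand, IOPS per TB stored
    # Same hypothetical device performance, four times the capacity.
    for capacity_tb in (3.84, 15.36):
        density = iops_per_tb(80_000, capacity_tb)
        ok = meets_demand(80_000, capacity_tb, demand)
        print(f"{capacity_tb:>6} TB: {density:>8,.0f} IOPS/TB, meets demand: {ok}")
```

With identical device performance, quadrupling capacity cuts I/O density to a quarter, so the larger device can fall below a workload's demand once it is filled with proportionally more VMs.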

Device Bus and Protocol Considerations

Many high-density storage devices are “value” based, meaning that they do not offer the levels of performance and consistency often demanded in enterprise environments. Flash storage devices using a SATA interface fit into this category. They struggle with performance and consistency because of the limitations of the protocol, such as half-duplex signaling, a single command queue, and higher CPU processing per I/O. SATA is better suited to the consumer space, and there are no planned improvements on its roadmap. Its characteristics are poorly matched to the demands of simultaneous, bidirectional access to shared storage.
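
The cost of a single, shallow command queue can be reasoned about with Little's Law, a general queueing result (outstanding I/Os = IOPS × latency) rather than anything vSAN-specific. The sketch assumes SATA Native Command Queuing's limit of 32 outstanding commands in one queue; the 256-command comparison and the latency values are hypothetical.

```python
# Little's Law sketch: IOPS <= outstanding commands / average latency.
# The SATA NCQ queue depth of 32 is a protocol limit; the other queue depth
# and all latency figures are hypothetical placeholders.

SATA_NCQ_DEPTH = 32  # one queue, up to 32 outstanding commands


def iops_ceiling(outstanding_commands: int, latency_ms: float) -> float:
    """Upper bound on IOPS with a fixed number of commands in flight,
    each taking latency_ms on average to complete."""
    if outstanding_commands < 1 or latency_ms <= 0:
        raise ValueError("need at least one command and a positive latency")
    return outstanding_commands * 1000.0 / latency_ms


if __name__ == "__main__":
    # As contention from many VMs raises per-I/O latency, a shallow
    # queue caps throughput sooner than a deeper one.
    for latency_ms in (0.2, 1.0, 2.0):
        shallow = iops_ceiling(SATA_NCQ_DEPTH, latency_ms)
        deep = iops_ceiling(256, latency_ms)  # hypothetical deeper queue
        print(f"{latency_ms} ms: depth 32 -> {shallow:,.0f}, depth 256 -> {deep:,.0f}")
```

This bound captures only queue depth; half-duplex signaling and per-I/O CPU cost limit SATA further and are not modeled here.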

When evaluating large capacity devices, consider SAS-based flash devices at minimum. Note that NVMe is the superior standard, and it is only a matter of time before this far-superior protocol takes over the enterprise. NVMe devices using NAND flash or 3D XPoint already dominate in more performance-focused roles, such as caching and buffering devices.

Higher-density devices can also place more stress on the off-device, shared HBAs that control them. SAS handles this better than SATA, but bottlenecks at a shared controller become more likely as traffic increases. NVMe devices do not have this issue, since each NVMe device contains its own controller.
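
The shared-controller concern amounts to comparing the combined demand of the devices behind one HBA with what that HBA can process. The sketch below is hypothetical: the per-device demand and the controller ceiling are placeholders, not figures for any real controller.

```python
# Hypothetical sketch: does the aggregate demand of devices behind one shared
# HBA exceed what the controller can process? Limits are placeholders, not
# figures for any particular controller.


def hba_utilization(device_demand_iops: list[float], hba_max_iops: float) -> float:
    """Fraction of the controller's assumed IOPS ceiling consumed by its devices."""
    if hba_max_iops <= 0:
        raise ValueError("hba_max_iops must be positive")
    return sum(device_demand_iops) / hba_max_iops


def is_bottleneck(device_demand_iops: list[float], hba_max_iops: float) -> bool:
    """True when the devices together would ask for more than the HBA can deliver."""
    return hba_utilization(device_demand_iops, hba_max_iops) > 1.0


if __name__ == "__main__":
    # Eight hypothetical devices, each driven harder as density (and VM count) grows.
    for per_device in (30_000.0, 60_000.0):
        demand = [per_device] * 8
        print(per_device, round(hba_utilization(demand, 400_000.0), 2),
              is_bottleneck(demand, 400_000.0))
```

Because each NVMe device has its own controller, this shared-controller check does not apply to NVMe in the same way.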

Recommendation. For large capacity devices, use SAS-based devices at minimum, with NVMe as the ideal preference.

Disk Group Design Considerations

With much larger capacity devices, the additional workloads they can host may put extra strain on the buffer tier. How does this occur? More stored data from additional VMs will typically increase the aggregate working set size across the hosts in the cluster: more workloads typically mean more hot data. While the vSAN Design and Sizing Guide describes sizing the buffer tier in an all-flash environment based on performance inputs, adding buffer capacity to accommodate more workloads is a prudent step toward consistent performance.

Figure 1. Aggregate working sets increase the amount of hot data

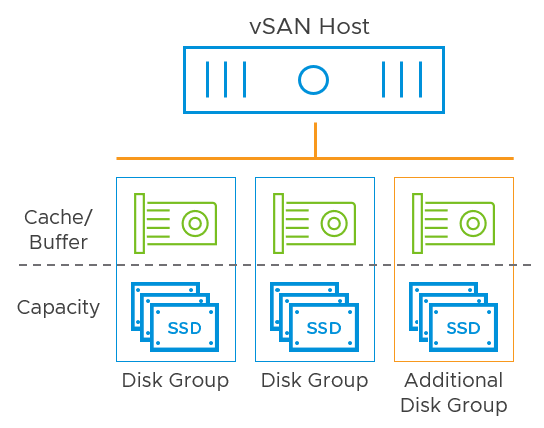
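
The growth in hot data described above can be approximated with simple arithmetic. The Python below assumes a uniform hot-data fraction per VM, which real workloads rarely have; the VM sizes, fraction, and buffer capacity are all hypothetical.

```python
# Hypothetical sketch: estimate a host's aggregate working set (hot data)
# and compare it with its buffer capacity. The hot-data fraction, VM sizes,
# and buffer size are illustrative assumptions, not sizing guidance.


def aggregate_working_set_gb(vm_count: int, avg_vm_size_gb: float,
                             hot_fraction: float) -> float:
    """Hot data across all VMs on a host, assuming a uniform hot fraction."""
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be between 0 and 1")
    return vm_count * avg_vm_size_gb * hot_fraction


def buffer_headroom_gb(working_set_gb: float, buffer_capacity_gb: float) -> float:
    """Positive: buffer exceeds the working set. Negative: it falls short."""
    return buffer_capacity_gb - working_set_gb


if __name__ == "__main__":
    buffer_gb = 1_200.0  # hypothetical buffer capacity per host
    # Larger devices let the same host store three times as many VMs.
    for vms in (40, 120):
        ws = aggregate_working_set_gb(vms, avg_vm_size_gb=200.0, hot_fraction=0.10)
        headroom = buffer_headroom_gb(ws, buffer_gb)
        print(f"{vms} VMs: working set {ws:,.0f} GB, headroom {headroom:,.0f} GB")
```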

Adding more disk groups to each host introduces more buffer devices available for use, thereby increasing the available buffer capacity to accommodate the additional workloads. Equally important, more disk groups increase the number of parallel I/O operations that can occur.

Figure 2. Additional disk groups provide more buffer capacity

Recommendation. Use more disk groups per host to improve parallelization and increase the overall capacity of the buffer cache to support the likelihood of more active/hot data.
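
The effect of adding disk groups can be sketched for a vSAN Original Storage Architecture (OSA) host, where each disk group has exactly one buffer device and a host supports up to five disk groups. The 600 GB per-group buffer figure and the 2,400 GB working set below are hypothetical placeholders.

```python
import math

# Sketch for a vSAN Original Storage Architecture (OSA) host, where each disk
# group contains exactly one buffer (cache) device. The per-group buffer size
# used in the examples is a hypothetical placeholder.

MAX_DISK_GROUPS_PER_HOST = 5  # vSAN OSA per-host limit


def host_buffer_gb(disk_groups: int, buffer_gb_per_group: float) -> float:
    """Total buffer capacity a host provides across its disk groups."""
    if not 1 <= disk_groups <= MAX_DISK_GROUPS_PER_HOST:
        raise ValueError(f"disk_groups must be 1..{MAX_DISK_GROUPS_PER_HOST}")
    return disk_groups * buffer_gb_per_group


def disk_groups_for(working_set_gb: float, buffer_gb_per_group: float) -> int:
    """Smallest disk group count whose combined buffer covers the working set."""
    groups = max(1, math.ceil(working_set_gb / buffer_gb_per_group))
    if groups > MAX_DISK_GROUPS_PER_HOST:
        raise ValueError("working set exceeds buffer of a fully populated host")
    return groups


if __name__ == "__main__":
    # Each added disk group also adds an independent buffer and destage path,
    # which is where the extra parallelism comes from.
    for groups in range(1, MAX_DISK_GROUPS_PER_HOST + 1):
        print(groups, "disk groups ->", host_buffer_gb(groups, 600.0), "GB of buffer")
    print("groups needed for a 2,400 GB working set:", disk_groups_for(2_400.0, 600.0))
```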

Accommodating the performance demands of the capacity tier is often overlooked, especially when increasing capacity per host. The buffer tier provides the maximum burst rate of I/O, but the capacity tier provides the maximum steady-state rate of I/O. When the capacity tier lacks sufficient performance, high levels of sustained writes may overwhelm the buffer. The destage rate of the buffer is most often (but not always) bound by the performance capabilities of the capacity tier. When this is the case, capacity tier performance can be improved by using faster flash devices, and more of them. This reduces the strain on the buffer tier by destaging data more quickly, ensuring that additional latency is not pushed back to the guest VMs.

Figure 3. Improving the performance of the capacity tier
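
The interplay between sustained writes and destage rate can be illustrated with a deliberately simplified model: the buffer fills at the difference between the incoming write rate and the destage rate. This ignores how vSAN actually schedules destaging and handles congestion; the 600 GB, 1,500 MB/s, and destage figures are hypothetical.

```python
import math

# Simplified sketch: how long a buffer can absorb sustained writes that arrive
# faster than the capacity tier can destage them. Real vSAN destaging and
# congestion handling are more nuanced; this model ignores them.


def seconds_until_buffer_full(buffer_free_gb: float, write_mb_s: float,
                              destage_mb_s: float) -> float:
    """Return math.inf when destage keeps pace with incoming writes."""
    net_fill_mb_s = write_mb_s - destage_mb_s
    if net_fill_mb_s <= 0:
        return math.inf
    return buffer_free_gb * 1000.0 / net_fill_mb_s  # decimal GB -> MB


if __name__ == "__main__":
    # Hypothetical: 600 GB free buffer, 1,500 MB/s of sustained writes.
    for destage in (1_000.0, 2_000.0):  # slower vs faster capacity tier
        t = seconds_until_buffer_full(600.0, 1_500.0, destage)
        print(f"destage {destage:,.0f} MB/s ->",
              "never fills" if math.isinf(t) else f"{t / 60:.0f} min")
```

A faster capacity tier changes the outcome qualitatively: once destage keeps pace with incoming writes, the buffer no longer trends toward full.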

Disabling Deduplication and Compression is also an option for improving the performance of the capacity tier. Deduplication and Compression performs its work at the time of destage, effectively reducing the destage rate. The larger devices introduced to the cluster may provide the necessary capacity without the need to enable the feature.
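
Whether larger devices remove the need for Deduplication and Compression is a capacity calculation. The sketch uses the raw-to-usable multipliers of common vSAN storage policies (RAID-1 mirroring at 2x, RAID-5 at about 1.33x, RAID-6 at 1.5x) and ignores slack space and metadata overhead; the 100 TB target and raw capacities are hypothetical.

```python
# Hypothetical sketch: does a cluster of larger devices meet a capacity target
# without Deduplication and Compression? The overhead factors are the
# raw-to-usable multipliers of common vSAN storage policies; slack space and
# metadata overhead are ignored for simplicity.

POLICY_OVERHEAD = {
    "RAID-1, FTT=1": 2.0,
    "RAID-5, FTT=1": 4 / 3,
    "RAID-6, FTT=2": 1.5,
}


def raw_tb_needed(usable_tb: float, overhead: float,
                  data_reduction_ratio: float = 1.0) -> float:
    """Raw capacity required; a ratio of 1.0 means no data reduction."""
    if data_reduction_ratio < 1.0:
        raise ValueError("data_reduction_ratio must be >= 1.0")
    return usable_tb * overhead / data_reduction_ratio


def needs_data_reduction(usable_tb: float, overhead: float,
                         raw_tb_available: float) -> bool:
    """True if the target cannot be met without Deduplication and Compression."""
    return raw_tb_needed(usable_tb, overhead) > raw_tb_available


if __name__ == "__main__":
    overhead = POLICY_OVERHEAD["RAID-5, FTT=1"]
    # Hypothetical: 100 TB usable needed; 120 TB raw before vs 240 TB raw after
    # moving to larger devices.
    for raw_available in (120.0, 240.0):
        print(raw_available, "TB raw -> needs DD&C:",
              needs_data_reduction(100.0, overhead, raw_available))
```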

Recommendation. If you are concerned with your capacity tier performance, use higher-performing SAS or NVMe devices at the capacity tier.

Evacuation and Repair Times Considerations

More potential capacity means longer evacuation times, assuming network and device performance have remained the same.

Figure 4. Host evacuation and repair times bound by the performance of the host devices and the network

The same 10GbE network used by hosts that now have devices with 4, 8, or 12 times the capacity of previous configurations may eventually be insufficient for the VM densities on each host. See the post vSAN Design Considerations – Fast Storage Devices vs Fast Networking for more details. A sufficiently sized vSAN cluster will always have hosts available for any resynchronization of object data needed to maintain its prescribed level of compliance. The process is automated and transparent to the administrator, but its speed depends on the performance of the network and the storage devices used. Ultimately, the consideration of host evacuation and repair times comes down to whether you are comfortable with the time it takes to rebuild or move a host’s worth of data to other hosts should a sustained host failure occur.
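
A back-of-the-envelope estimate follows from whichever resource saturates first: the host's network link or its devices' aggregate read throughput. The sketch below is a rough model, not how vSAN schedules resynchronization; the 5 TB baseline, 4 GB/s device throughput, and 0.7 efficiency factor are hypothetical.

```python
# Back-of-the-envelope sketch: evacuation time is bounded by the slower of the
# host's network link and the devices' aggregate read throughput. The
# efficiency factor and all inputs are hypothetical placeholders.


def evacuation_hours(data_tb: float, link_gbit_s: float,
                     device_read_gbyte_s: float, efficiency: float = 1.0) -> float:
    """Estimated hours to move data_tb (decimal TB) off a host."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    link_gbyte_s = link_gbit_s / 8.0  # 10 Gb/s is about 1.25 GB/s at line rate
    bottleneck_gbyte_s = min(link_gbyte_s, device_read_gbyte_s) * efficiency
    return data_tb * 1000.0 / bottleneck_gbyte_s / 3600.0


if __name__ == "__main__":
    # Same 10GbE link, hosts holding 4x, 8x, and 12x a hypothetical 5 TB baseline.
    for multiple in (1, 4, 8, 12):
        hours = evacuation_hours(5.0 * multiple, link_gbit_s=10.0,
                                 device_read_gbyte_s=4.0, efficiency=0.7)
        print(f"{multiple:>2}x capacity: ~{hours:.1f} h")
```

Because the link is the bottleneck in this example, evacuation time grows in direct proportion to the data stored on the host.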

Recommendation. Perform a test of a full host evacuation to determine the total time for evacuation, and extrapolate estimates should you dramatically increase the capacity of your hosts. If those numbers do not meet your requirements, then evaluating the capabilities of the storage devices and associated switch fabric is in order.
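
The extrapolation step can be sketched as a linear scaling of a measured result, under the assumption that evacuation time grows in proportion to the data moved and that network and device throughput stay unchanged. The 3-hour, 10 TB test result and 12-hour requirement are hypothetical.

```python
# Sketch of the extrapolation step: scale a measured full-host evacuation time
# by the planned increase in stored data. Assumes time grows linearly with data
# moved and that network and device throughput stay the same.


def extrapolated_hours(measured_hours: float, measured_tb: float,
                       planned_tb: float) -> float:
    if measured_tb <= 0:
        raise ValueError("measured_tb must be positive")
    return measured_hours * planned_tb / measured_tb


def within_requirement(measured_hours: float, measured_tb: float,
                       planned_tb: float, max_hours: float) -> bool:
    """True if the extrapolated evacuation time fits the stated requirement."""
    return extrapolated_hours(measured_hours, measured_tb, planned_tb) <= max_hours


if __name__ == "__main__":
    # Hypothetical test result: 3 hours to evacuate 10 TB; requirement: 12 hours.
    for planned in (20.0, 40.0, 80.0):
        verdict = ("OK" if within_requirement(3.0, 10.0, planned, 12.0)
                   else "re-evaluate devices and switch fabric")
        print(planned, "TB ->", extrapolated_hours(3.0, 10.0, planned), "h,", verdict)
```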

Host Count Considerations

Higher-density devices are enticing because they imply the possibility of achieving the same or more capacity with fewer devices and/or hosts. However, the minimum number of hosts required to meet your defined levels of resilience remains the same. Also, while each host contributes its own capacity, remember that it also contributes its own RAM and CPU for I/O processing. Removing those resources from the cluster may affect performance if CPU or memory is already a bottleneck.
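
The host minimums that stay fixed regardless of device size can be captured in a few lines. The sketch encodes commonly documented minimums for vSAN OSA storage policies (2n+1 hosts for RAID-1 mirroring at FTT=n, four hosts for RAID-5, six for RAID-6) plus one spare host so data can be rebuilt after a sustained host failure. Newer releases or architectures may differ, so verify against current documentation.

```python
# Sketch of commonly documented minimum host counts for vSAN OSA storage
# policies, plus one spare host for rebuilds. Verify against current VMware
# documentation for your release; other architectures may differ.


def min_hosts(ftt: int, erasure_coding: bool = False) -> int:
    """Minimum hosts needed to place all components of an object."""
    if erasure_coding:
        minimums = {1: 4, 2: 6}  # RAID-5 (3+1) for FTT=1, RAID-6 (4+2) for FTT=2
        if ftt not in minimums:
            raise ValueError("erasure coding supports FTT=1 (RAID-5) or FTT=2 (RAID-6)")
        return minimums[ftt]
    if not 0 <= ftt <= 3:
        raise ValueError("mirroring supports FTT=0..3")
    return 2 * ftt + 1  # RAID-1: ftt+1 replicas plus ftt witness components


def recommended_hosts(ftt: int, erasure_coding: bool = False) -> int:
    """Minimum plus one host of headroom so rebuilds have somewhere to go."""
    return min_hosts(ftt, erasure_coding) + 1


if __name__ == "__main__":
    for ftt, ec in ((1, False), (2, False), (1, True), (2, True)):
        print(f"FTT={ftt}, erasure coding={ec}: min {min_hosts(ftt, ec)}, "
              f"recommended {recommended_hosts(ftt, ec)}")
```

Denser devices do not change these numbers: a RAID-5 policy still needs at least four hosts, and a fifth leaves room to rebuild.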

Recommendation. Ensure that the host count of your vSAN cluster remains sufficient to support the necessary resilience levels.

Summary

High-density storage devices open up new capabilities for vSAN hosts. The flexibility of the architecture allows for quick adoption in almost any vSAN-powered environment. These devices also introduce design considerations to ensure that the cluster can deliver the full performance expected. Taking a little time to understand how high-density storage devices may affect your design will result in smarter purchasing decisions and more predictable performance outcomes.

Discover more from VMware Cloud Foundation (VCF) Blog
