In the brief yet fascinating history of Kubernetes, ingress and load balancing have been essential for ensuring application reliability and performance. Much like a kingdom’s throne, these components have already seen three generations of rulers. So why do enterprise applications today demand advanced load balancing? Here are the key requirements driving it:

- Load balancers must be next-gen, providing intelligent automation and self-service capabilities.

- They should be developer-friendly, minimizing configuration headaches.

- Lastly, they should be versatile, bundling services such as ingress security, analytics, and global server load balancing (GSLB), so there is no need to juggle multiple service providers.

As Kubernetes has grown, so too have the methods for managing traffic across its services. Let’s embark on a journey through time, tracing the evolution of load balancing in Kubernetes—from its humble beginnings to the cutting-edge solutions of today.

The Dawn of Load Balancing: Service Type LoadBalancer

The early days of Kubernetes were like the first steps of a fledgling empire. The primary method for load balancing was the Service type LoadBalancer, a simple mechanism for exposing a Service to external traffic. It relies on a cloud-provider-specific load balancer to distribute incoming requests across the available pods.

How Service type LoadBalancer Works:

- External Access: It provides an external IP address that clients can use to access the application.

- Automatic Scaling: The load balancer’s backend pool follows the Service’s endpoints, so traffic is spread across however many pods are ready as the workload scales up or down.

- Provider-Specific Implementation: The actual implementation of the LoadBalancer Service type depends on the cloud provider (e.g., AWS, GCP, Azure) or on an in-cluster integration such as AKO.
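The post shows its manifests only as screenshots, so the mechanics above can be sketched in Python instead. This minimal sketch builds a Service of type LoadBalancer as a plain dictionary; kubectl accepts JSON manifests as well as YAML. It then reads the external address that the load balancer integration publishes in `status.loadBalancer.ingress`. The Service name `web`, the label `app: web`, and ports 80/8080 are hypothetical.

```python
import json


def loadbalancer_service(name, app_label, port, target_port):
    """Build a Service of type LoadBalancer as a plain dict (kubectl accepts JSON)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Asks the cluster's load balancer integration (cloud provider or AKO)
            # to allocate an external address for this Service.
            "type": "LoadBalancer",
            # Pods carrying this label become the load balancer's backends.
            "selector": {"app": app_label},
            "ports": [
                {"name": "http", "protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


def external_address(service):
    """Return the IP or hostname published in status.loadBalancer.ingress, if any."""
    entries = service.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not entries:
        return None  # still pending: no external address assigned yet
    first = entries[0]
    return first.get("ip") or first.get("hostname")


if __name__ == "__main__":
    svc = loadbalancer_service("web", "web", 80, 8080)
    # Save the output as web-svc.json and run `kubectl apply -f web-svc.json`.
    print(json.dumps(svc, indent=2))
```

Every such Service gets its own external address. That is exactly the cost and scalability concern listed in the limitations that follow.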

Limitations:

- Costly Conquests: Creating a load balancer for each service can be expensive, especially in cloud environments where each one incurs additional charges.

- Limited Flexibility: Operates at Layer 4 and offers minimal control over traffic routing and customization; there is no host- or path-based routing.

- Scalability Issues: Managing a large number of load balancers becomes cumbersome and inefficient.

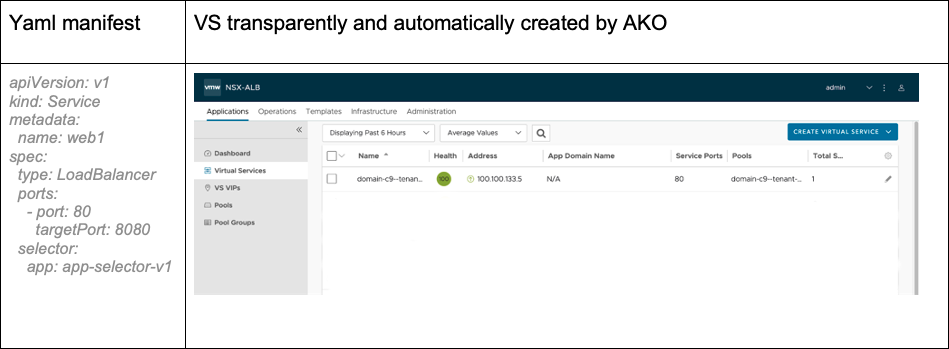

But that’s enough theory; let’s see this in action:

Avi and AKO (Avi Kubernetes Operator) fully support the Service type LoadBalancer. As soon as a developer creates a Service of type LoadBalancer, AKO automatically and transparently creates an equivalent Virtual Service.

To illustrate, see the figure below:

However, basic components that modern developers expect, such as an FQDN or SSL/TLS certificates, are absent from this native Service type. Nearly all modern applications need them.

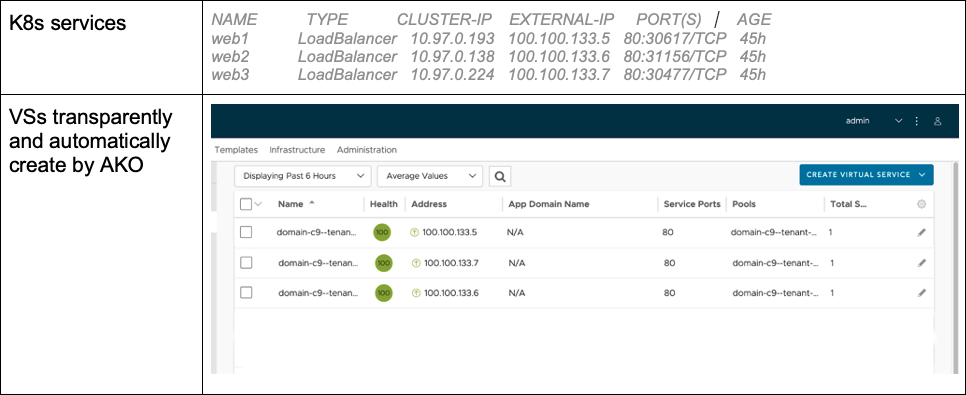

In addition, if you have multiple Services of type LoadBalancer, you end up with one Virtual Service (VS) per Service, as shown in the figure below:

In Fig. 2, notice how the three services of type LoadBalancer each consume their own IP address. As cloud-native applications, microservices architecture, and service mesh continue to expand, this approach will not scale and will eventually become unmanageable!

The Rise of Ingress Controllers: A New Era Begins

As the limitations of the Service type LoadBalancer became more apparent, the Kubernetes community responded with a new leader: the Ingress Controller. Ingress provided a more flexible and cost-effective way to manage external access to Services, ushering in a new era of Kubernetes load balancing.

How Ingress Controller Works:

- Single Entry Point: Acts as a single entry point for multiple services, reducing the need for numerous load balancers.

- Advanced Routing: Ingress rules allow for sophisticated routing capabilities based on hostnames and paths.

- SSL Termination: Supports SSL termination, enhancing application security.
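The host- and path-based routing described above can be sketched as follows. This sketch builds a `networking.k8s.io/v1` Ingress with a TLS Secret. It then resolves a request the way the `Prefix` path type is defined: matching is done element by element on `/`-separated path segments, and the longest match wins. It is deliberately simplified: `Exact` and `ImplementationSpecific` path types, wildcard hosts, and `spec.ingressClassName` are left out. The host `shop.example.com`, the Secret `shop-tls`, and the Services `web` and `cart` are hypothetical.

```python
def ingress(name, host, tls_secret, paths):
    """Build a networking.k8s.io/v1 Ingress; `paths` maps URL prefixes to (service, port)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # TLS is terminated at the ingress using the certificate in this Secret.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [
                    {"path": p, "pathType": "Prefix",
                     "backend": {"service": {"name": svc, "port": {"number": port}}}}
                    for p, (svc, port) in paths.items()
                ]},
            }],
        },
    }


def _segments(path):
    """Split a URL path into its non-empty '/'-separated elements."""
    return [s for s in path.split("/") if s]


def resolve(ing, host, path):
    """Pick a backend for (host, path) using element-wise Prefix matching; longest wins."""
    best, best_len = None, -1
    request = _segments(path)
    for rule in ing["spec"]["rules"]:
        if rule.get("host") != host:
            continue
        for p in rule["http"]["paths"]:
            prefix = _segments(p["path"])
            # "/cart" matches "/cart" and "/cart/items" but not "/cartography".
            if request[:len(prefix)] == prefix and len(prefix) > best_len:
                svc = p["backend"]["service"]
                best, best_len = (svc["name"], svc["port"]["number"]), len(prefix)
    return best
```

For example, with `shop = ingress("shop", "shop.example.com", "shop-tls", {"/": ("web", 80), "/cart": ("cart", 8080)})`, the call `resolve(shop, "shop.example.com", "/cart/items")` returns `("cart", 8080)`. A request for `/cartography` falls through to `("web", 80)`. One entry point now fronts several Services.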

Improvements Over Service type LoadBalancer:

- Cost-Efficient Empire: Consolidates multiple services under one entry point, slashing the cost associated with creating multiple LoadBalancer services.

- Flexible Fiefdom: Offers greater control over traffic routing, enabling custom routing rules and configurations.

- Ecosystem Support: A variety of Ingress Controllers are available, such as Avi/AKO, each offering different features and capabilities.

Limitations:

- Extensibility: The Ingress API is limited in scope and only allows simple host- and/or path-based routing. Modern microservice-based applications can be much more complex, requiring the use of annotations and Custom Resource Definitions (CRDs).

- Scalability Struggles: As services and traffic scale, Ingress Controllers can become bottlenecks, leading to potential performance issues.

- Limited Protocol Support: Ingress only works at Layer 7, specifically optimizing for HTTP and HTTPS traffic.

Let’s see this evolution in action:

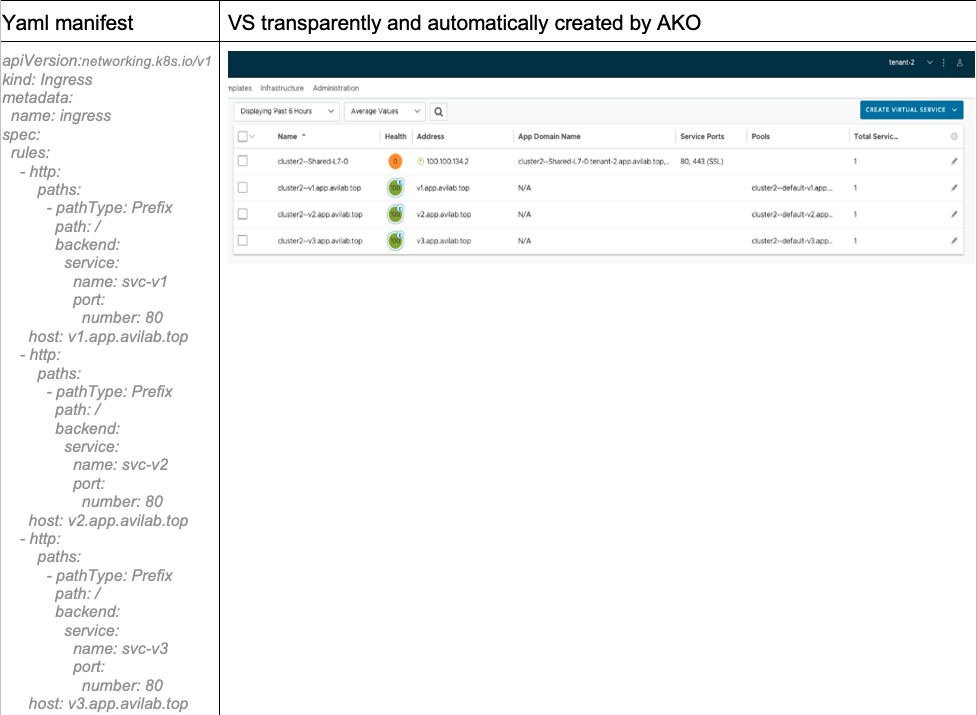

Avi and AKO once again rise to the occasion, with AKO acting as a fully supported Ingress Controller. When a developer creates an Ingress, AKO automatically creates an equivalent Virtual Service. But how does this differ from the Service type LoadBalancer?

To illustrate, see the figure below:

In Fig. 3, you can see that the Ingress has created four Virtual Services. The VS at the top is the only one consuming an IP. It is configured as the parent VS, and all traffic arrives there first. The other three are child VSs associated with the parent VS.
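The difference between the two models can be sketched as a small data structure. This is a simplified model built on one assumption: a single parent VS owns the only VIP, and each hostname in the Ingress gets its own child VS. AKO’s real mapping depends on its configuration. The parent name `shared-parent` and the hostnames are hypothetical.

```python
def vs_layout_for_services(service_names):
    """Service type LoadBalancer model: one VS, and therefore one VIP, per Service."""
    return [{"vs": name, "vip": True} for name in service_names]


def vs_layout_for_ingress(hostnames, parent="shared-parent"):
    """Assumed Ingress model: one parent VS owns the VIP; each hostname gets a child VS."""
    children = [{"vs": host, "vip": False, "parent": parent} for host in hostnames]
    return [{"vs": parent, "vip": True}] + children


def vip_count(layout):
    """Count how many Virtual Services in the layout consume an IP address."""
    return sum(1 for vs in layout if vs["vip"])


if __name__ == "__main__":
    apps = ["a.example.com", "b.example.com", "c.example.com"]
    print(vip_count(vs_layout_for_services(apps)))  # three applications, three VIPs
    layout = vs_layout_for_ingress(apps)
    print(len(layout), vip_count(layout))  # four VSs, but only one VIP
```

Three applications need three VIPs under the first model, as in Fig. 2. Under the second they produce four VSs but only one VIP, matching the parent/child layout in Fig. 3.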

Why do we have four VSs instead of just one? Each application may require different advanced configurations, such as:

- SSL/TLS certificates

- HTTP header rewriting

- Web Application Firewall

How can these advanced features be configured? AKO provides Custom Resource Definitions (CRDs) to achieve this, while other products may use CRDs or annotations.

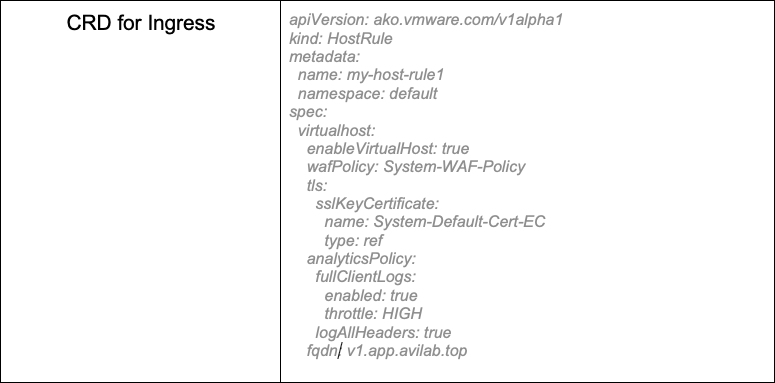

This is an example of an AKO CRD that enables advanced features:

Though Ingress introduces new features, it isn’t without its challenges:

- How do developers learn about CRDs or annotations?

- Ingress primarily targets HTTP/HTTPS applications, but what about other protocols (e.g., TCP, gRPC)?

- And how do teams juggle resources managed by different departments?

The Future Beckons: Enter the Gateway API

As Kubernetes continues to evolve, the demand for a more robust and scalable solution has led to the rise of the Gateway API—a modern marvel that builds on the lessons of its predecessors, offering a flexible and powerful way to manage traffic in Kubernetes.

How Gateway API Works:

- Standardized API: Provides a unified language for defining networking and traffic management, simplifying configuration and management.

- Decoupled Architecture: Separates infrastructure configuration (GatewayClass and Gateway) from application routing (HTTPRoute and other Route objects), enabling more modular and scalable architectures.

- Advanced Features: Supports features such as traffic splitting, retries, and custom policies out of the box.

- RBAC Personas: Enables the creation of distinct Role-Based Access Control (RBAC) personas, such as infrastructure admin, developer, and platform admin, ensuring granular control and security across different roles within the organization.
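The persona split above maps naturally onto standard Kubernetes RBAC. This minimal sketch builds a namespaced Role that lets application developers manage HTTPRoutes while leaving Gateways to the infrastructure team. The role name `httproute-editor` and the namespace `team-a` are hypothetical. A RoleBinding, not shown, would still be needed to grant the Role to users. The `allows` helper is a simplified evaluator that only handles exact matches and the `*` wildcard.

```python
GATEWAY_GROUP = "gateway.networking.k8s.io"


def developer_role(namespace):
    """Role letting application developers manage HTTPRoutes in their own namespace only."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "httproute-editor", "namespace": namespace},
        "rules": [{
            "apiGroups": [GATEWAY_GROUP],
            "resources": ["httproutes"],
            "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"],
        }],
    }


def allows(role, group, resource, verb):
    """Simplified RBAC check: exact matches plus the "*" wildcard; no resourceNames."""
    def hit(values, wanted):
        return "*" in values or wanted in values

    return any(
        hit(r["apiGroups"], group) and hit(r["resources"], resource) and hit(r["verbs"], verb)
        for r in role["rules"]
    )
```

With this Role, a developer in `team-a` can create an HTTPRoute, but `allows(developer_role("team-a"), GATEWAY_GROUP, "gateways", "create")` is `False`. Gateways stay with the infrastructure persona.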

Improvements Over Ingress:

- Scalability: Designed to handle large-scale applications with ease, enhancing scalability and performance.

- Flexibility: Offers granular control over traffic management, including custom policies and advanced routing capabilities. Gateway API supports both L4 protocols, such as TCP and UDP, and L7 protocols, such as HTTP, natively within the specification (TCPRoute and UDPRoute are currently part of the experimental channel).

- Extensibility: Built with extensibility in mind, allowing seamless integration with existing tools and services.

- Resource object definitions: Gateway API introduces GatewayClass to define a class of gateways and the controller that implements it, Gateway to instantiate a load balancer with its listeners, HTTPRoute for HTTP routing rules, and additional Route objects for other protocols.
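The relationship between these objects can be sketched by following their references. An HTTPRoute names its Gateway in `spec.parentRefs`, and a Gateway names its GatewayClass in `spec.gatewayClassName`. The GatewayClass then identifies the implementing controller through `spec.controllerName`. This sketch ignores namespaces and assumes the objects are already loaded into dicts keyed by name. The names in the example (`shop`, `shared-gw`, `example-class`, `example.com/gateway-controller`) are hypothetical.

```python
def resolve_chain(route, gateways, gateway_classes):
    """Follow HTTPRoute -> Gateway -> GatewayClass for each parentRef of the route.

    Returns (route, gateway, gatewayClass, controllerName) tuples; missing links are None.
    Namespaces are ignored in this simplified sketch.
    """
    route_name = route["metadata"]["name"]
    chain = []
    for ref in route["spec"]["parentRefs"]:
        gw = gateways.get(ref["name"])
        if gw is None:
            chain.append((route_name, ref["name"], None, None))  # dangling parentRef
            continue
        class_name = gw["spec"]["gatewayClassName"]
        gc = gateway_classes.get(class_name)
        controller = gc["spec"]["controllerName"] if gc else None
        chain.append((route_name, gw["metadata"]["name"], class_name, controller))
    return chain


if __name__ == "__main__":
    classes = {"example-class": {"metadata": {"name": "example-class"},
                                 "spec": {"controllerName": "example.com/gateway-controller"}}}
    gws = {"shared-gw": {"metadata": {"name": "shared-gw"},
                         "spec": {"gatewayClassName": "example-class"}}}
    route = {"metadata": {"name": "shop"}, "spec": {"parentRefs": [{"name": "shared-gw"}]}}
    print(resolve_chain(route, gws, classes))
```

Each layer is owned by a different team, but the chain of references ties a developer’s route to the controller that will program the load balancer.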

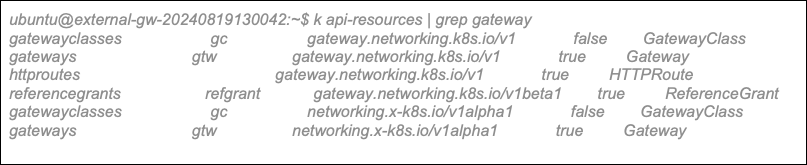

Gateway API is currently partially supported, and support will expand with each new release of Avi/AKO. Please refer to the documentation for further details. As a quick reminder, to use Gateway API you need to verify that the Gateway API CRDs are installed in your Kubernetes cluster:

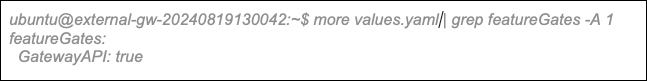
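The CRD check above can also be scripted. This sketch parses the output of `kubectl get crd -o name`, where each line looks like `customresourcedefinition.apiextensions.k8s.io/<crd-name>`. It reports which of the core Gateway API CRDs are missing. The required set below is an assumption limited to GatewayClass, Gateway, and HTTPRoute. Check the AKO documentation for the exact CRDs and versions your release needs.

```python
# Assumed minimum set; the AKO documentation is authoritative for each release.
REQUIRED_CRDS = {
    "gatewayclasses.gateway.networking.k8s.io",
    "gateways.gateway.networking.k8s.io",
    "httproutes.gateway.networking.k8s.io",
}


def missing_gateway_crds(kubectl_output):
    """Return the required Gateway API CRDs absent from `kubectl get crd -o name` output."""
    installed = {
        # Keep only the part after "customresourcedefinition.apiextensions.k8s.io/".
        line.strip().split("/", 1)[-1]
        for line in kubectl_output.splitlines()
        if line.strip()
    }
    return sorted(REQUIRED_CRDS - installed)
```

Capture the command’s output (for example with `subprocess.run(["kubectl", "get", "crd", "-o", "name"], capture_output=True, text=True).stdout`) and pass it in. An empty result means the core CRDs are present.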

Also double-check that this feature is enabled if you installed AKO manually:

Finally, let’s see how it works.

In this new era of the Gateway API, everyone has found their place in the kingdom!

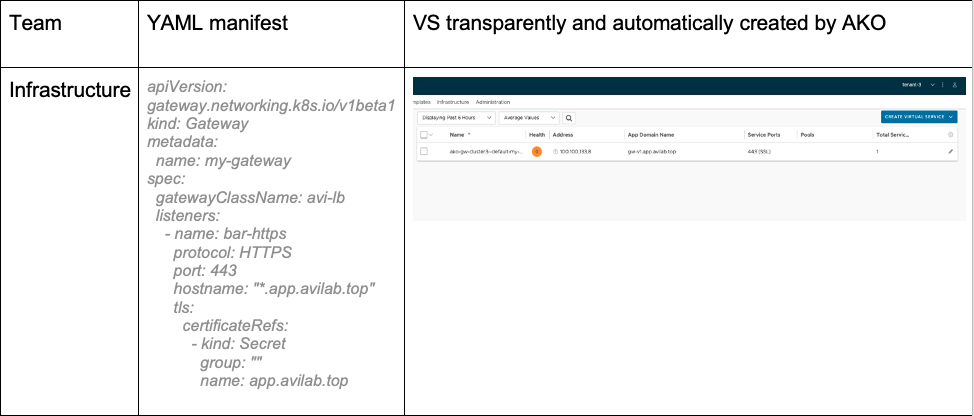

The infrastructure team now commands its own powerful objects to create Virtual Services and the associated Virtual IPs:

In the figure above, the infrastructure team configures a (parent) VS that supports a wildcard domain name along with an SSL/TLS certificate (likely a wildcard certificate).
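The infrastructure team’s side can be sketched as a Gateway with a single HTTPS listener for a wildcard hostname that terminates TLS with a certificate stored in a Secret. In Gateway API, a listener hostname such as `*.example.com` matches any host ending in `.example.com` (including deeper names like `a.b.example.com`) but not `example.com` itself. The helper below encodes only that rule. The Gateway name `shared-gw`, the namespace `infra`, the GatewayClass `example-class`, and the Secret `wildcard-example-com` are hypothetical.

```python
def wildcard_gateway(name, namespace, gateway_class, domain, cert_secret):
    """Gateway with one HTTPS listener for *.<domain>, terminating TLS with the given Secret."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "gatewayClassName": gateway_class,
            "listeners": [{
                "name": "https",
                "protocol": "HTTPS",
                "port": 443,
                "hostname": f"*.{domain}",
                "tls": {
                    "mode": "Terminate",
                    "certificateRefs": [{"kind": "Secret", "name": cert_secret}],
                },
                # Let HTTPRoutes from any namespace attach; "Same" or "Selector" are stricter.
                "allowedRoutes": {"namespaces": {"from": "All"}},
            }],
        },
    }


def listener_accepts(listener_hostname, host):
    """Wildcard rule: "*.example.com" matches hosts ending in ".example.com", not "example.com"."""
    if listener_hostname.startswith("*."):
        suffix = listener_hostname[1:]  # e.g. ".example.com"
        return host.endswith(suffix) and len(host) > len(suffix)
    return host == listener_hostname
```

Because the listener accepts every matching hostname, developers can attach new applications under the domain without asking the infrastructure team for a new VIP or certificate.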

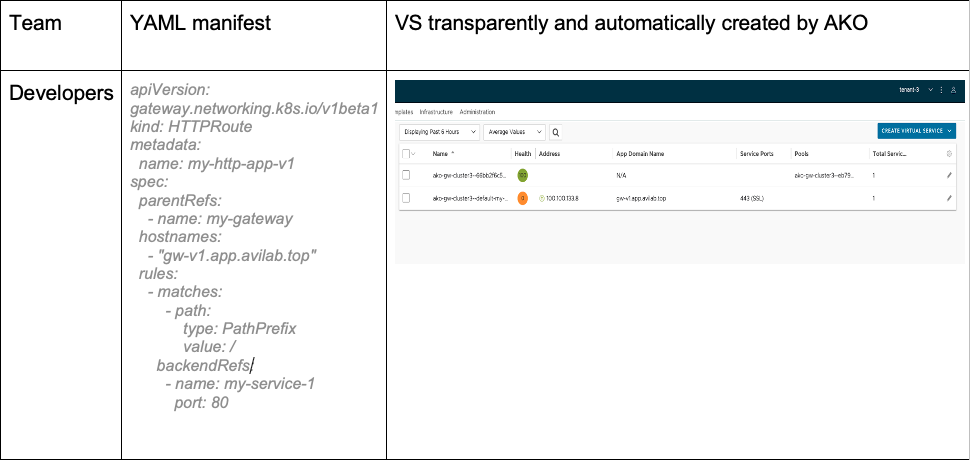

Developers, in turn, configure their applications with the HTTPRoute object and similar objects, such as GRPCRoute (which is not yet supported in AKO). These objects use settings developers already know well:

In Fig. 5, the developer team can configure (child) VS(s) that correspond to the rule(s) defined in the HTTPRoute object.
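The developer’s side can be sketched as an HTTPRoute that attaches to the infrastructure team’s Gateway through `parentRefs` and declares one rule per path prefix. Per the figure, each rule corresponds to a child VS. The route name `shop`, the namespace `team-a`, the Gateway `shared-gw` in namespace `infra`, and the Services `cart` and `web` are hypothetical.

```python
def http_route(name, namespace, gateway, gateway_ns, hostname, rules):
    """HTTPRoute attached to a Gateway; `rules` maps path prefixes to (service, port)."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Attach to the infrastructure team's Gateway, possibly in another namespace.
            "parentRefs": [{"name": gateway, "namespace": gateway_ns}],
            "hostnames": [hostname],
            "rules": [
                {
                    # One rule per path prefix, each forwarding to a single Service.
                    "matches": [{"path": {"type": "PathPrefix", "value": prefix}}],
                    "backendRefs": [{"name": svc, "port": port}],
                }
                for prefix, (svc, port) in rules.items()
            ],
        },
    }
```

Nothing here is vendor-specific. The same object works with any conformant Gateway API implementation, which is what lets developers use it without learning AKO CRDs or annotations.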

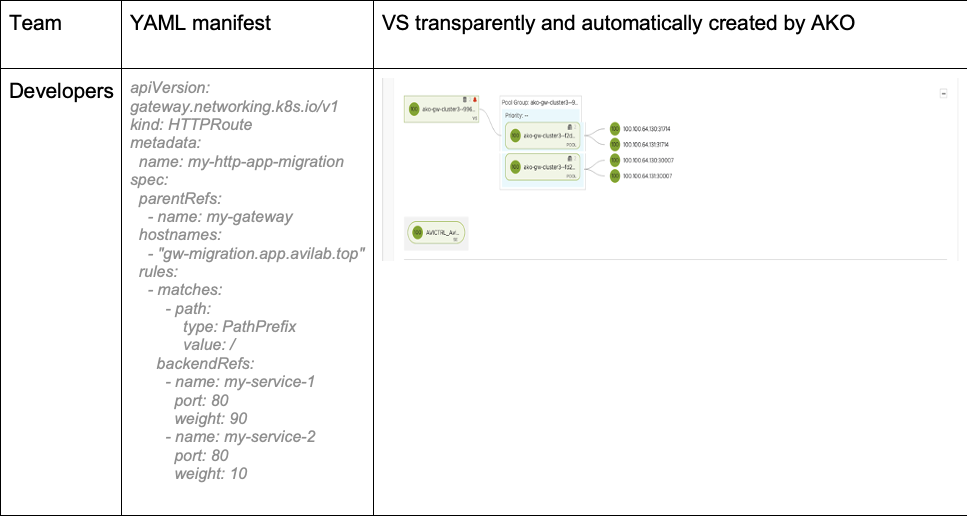

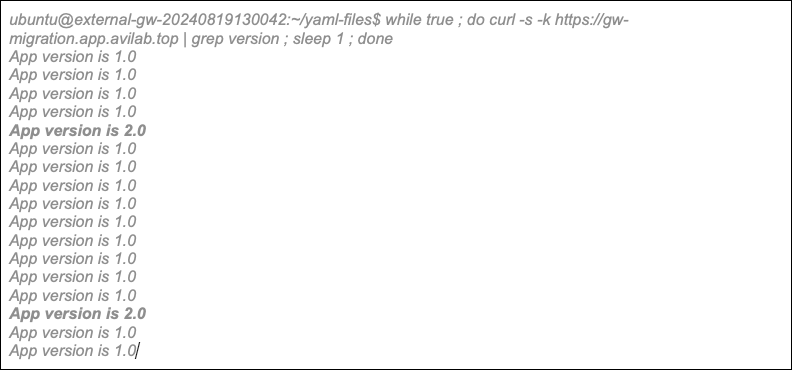

The Gateway API provides a good abstraction for advanced features without relying on vendor-specific CRDs or annotations. For example, developer teams can easily create a configuration to migrate their application from one service to another. In the following example, 80% of the traffic is forwarded to application version 1, while 20% is forwarded to application version 2.
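The 80/20 split described above can be sketched as an HTTPRoute with weighted `backendRefs`. In Gateway API, each backend receives a share of requests proportional to its weight divided by the sum of all weights. This sketch assumes hypothetical Services `app-v1` and `app-v2` on port 80 behind a hypothetical Gateway `shared-gw`. The `pick_backend` helper only illustrates the weight arithmetic; how Avi actually distributes requests is up to the implementation.

```python
import random
from collections import Counter


def canary_route(name, hostname, stable, canary, stable_weight=80, canary_weight=20):
    """HTTPRoute rule splitting traffic between two Services by weight."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name},
        "spec": {
            "parentRefs": [{"name": "shared-gw"}],
            "hostnames": [hostname],
            "rules": [{
                "backendRefs": [
                    {"name": stable, "port": 80, "weight": stable_weight},
                    {"name": canary, "port": 80, "weight": canary_weight},
                ],
            }],
        },
    }


def pick_backend(backend_refs, x):
    """Map x in [0, 1) onto the backends in proportion to weight / sum(weights)."""
    total = sum(ref["weight"] for ref in backend_refs)
    threshold = x * total
    for ref in backend_refs:
        threshold -= ref["weight"]
        if threshold < 0:
            return ref["name"]
    return backend_refs[-1]["name"]  # guard against floating-point edge cases


if __name__ == "__main__":
    refs = canary_route("shop", "shop.example.com", "app-v1", "app-v2")["spec"]["rules"][0]["backendRefs"]
    rng = random.Random(42)
    # Roughly 8,000 requests should land on app-v1 and 2,000 on app-v2.
    print(Counter(pick_backend(refs, rng.random()) for _ in range(10_000)))
```

Completing the migration is then a matter of shifting the weights, for example to 50/50 and finally 0/100, without touching the Gateway.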

Behold the results! With the Gateway API in place, harmony reigns across the realm. Developers can now wield their power to programmatically configure advanced policies with ease—no need to summon the Infrastructure team. It’s a new era of autonomy and efficiency where each role flourishes within its own domain—and everyone is truly happy.

Conclusion

- Comprehensive Protocol Support: Gateway API supports the most widely used protocols.

- Clear Team Boundaries: The Gateway object defines resources managed by the infrastructure team, while HTTPRoute and similar objects are managed by the development team.

- Bottleneck Buster: You can create multiple gateways, preventing bottlenecks.

- Developer Independence: Developers gain autonomy, with advanced use cases natively supported and no more vendor-specific CRDs or annotations needed.

Ready to modernize your load balancing with Avi? Contact us today to learn more!

The post From Ancient Gates to Modern Gateways: 3 Eras of Load Balancing in Kubernetes appeared first on VMware Load Balancing & WAF Blog.