Heads I win , Tail you gonna lose – famous song of a famous rock band “Bon Jovi” !!!

Business Critical Oracle Workloads have stringent IO requirements and enabling, sustaining, and ensuring the highest possible performance along with continued application availability is a major goal for all mission critical Oracle applications to meet the demanding business SLA’s, all the way from on-premises to VMware Hybrid Clouds

When it comes to Business-Critical Oracle Workloads, the critical requirement is consistent performance and that does not involve tossing a coin every time someone runs a workload to determine whether that would meet the business SLA’s or not!!!

Key points to take away from this blog

This blog is a preview of the changes VMware Engineering will be working on to report more appropriate values for ‘Optimal transfer length’ and ‘Maximum transfer length’ fields in Vital Product Data (VPD) page B0 response, for the underlying physical device, hosting the vmdk’s, to Guest OS for optimal IO performance for Business-Critical Workloads on VMware platform.

This will ensure VMware vSphere platform is more performant for customers to run their Business-Critical workloads and to meet customers stringent SLA’s.

This blog is not meant to be a performance benchmarking-oriented blog in any way.

Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing, so the performance improvement I got with my workload in my lab is in no way representative of any real production workload which means the performance improvements for real world workloads will be better.

VMware Paravirtual SCSI adapter

VMware Paravirtual SCSI adapter is a high-performance storage controller which provides greater throughput and lower CPU use, which boosts performance as compared to the other SCSI controller options. VMware Paravirtual SCSI controllers are best suited for environments running I/O-intensive applications.

More information on VMware Paravirtual SCSI adapter can be found here.

Much has been written and spoken about Queue depths, both on the PVSCSI side and the VMDK side. One of my earliest blogs ‘Queues, Queues and more Queues’ in 2015 attempts to explain the importance of queue depth especially in a virtualized stack.

Oracle Best Practices on VMware vSphere recommends using PVSCSI Controllers for Oracle workloads. In addition, increasing the PVSCSI and vmdk queue depth should also considered as simply using PVSCSI controllers will not help. Use all 4 PVSCSI controllers whenever and wherever possible.

More information on how to set the queue depths to maximum for workloads with intensive I/O patterns can be found in the article KB 2053145.

Linux SCSI INQUIRY, rdm’ s and vmdk’s

On Linux, the sg_vpd command fetches the Vital Product Data (VPD) pages from a disk device using the SCSI INQUIRY command. The vital product data (VPD) Block Limits is used to inform the guest of the transfer limits of the underlying physical device.

Issue is.

- RDM’s – ‘Maximum transfer length’ and ‘Optimal transfer length’ of the RDM lun is reported correctly to the Guest

- Currently with VMDK’s

- VMware does support the vital product data (VPD) page BO, mainly for writeSame and unmap related information but currently reports no limits for ‘Maximum transfer length’ & ‘Optimal transfer length’ of the underlying physical device, its currently reported as ‘0 blocks [not reported]’

- When the vmdk device doesn’t report any transfer length, maximum transfer size will then default to the ‘max_sectors_kb’ setting for that vmdk device and in some cases reported, Linux also defaults to max transfer of 512KB as well.

‘max_sectors_kb’ – defined as the maximum size of an I/O request in kilobytes. This restricts the largest IO size that the OS will issue to a block device.

The default value on RHEL 8.7 / OEL UEK 8.7 is 1280 KB. The maximum value for this parameter is determined by the value of ‘max_hw_sectors_kb’.

More information on ‘max_sectors_kb’ and ‘max_hw_sectors_kb’ can be found here.

Example values of default ‘max_sectors_kb’ ´and ‘max_hw_sectors_kb’ on OEL 8.7 is shown below.

[root@oracle21c-ol8 ~]# uname -a

Linux oracle21c-ol8.vslab.local 5.4.17-2136.313.6.el8uek.x86_64 #2 SMP Thu Oct 20 14:30:48 PDT 2022 x86_64 x86_64 x86_64 GNU/Linux

[root@oracle21c-ol8 ~]#

root@oracle21c-ol8 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux release 8.7 (Ootpa)

[root@oracle21c-ol8 ~]#

[root@oracle21c-ol8 ~]# cat /sys/block/sdh/queue/max_sectors_kb

1280

[root@oracle21c-ol8 ~]#

[root@oracle21c-ol8 ~]# cat /sys/block/sdh/queue/max_hw_sectors_kb

32767

[root@oracle21c-ol8 ~]#

We ran a sanity test check where we ran FIO against a vmdk on a VMFS datastore and a RDM, connected to the same VM and compare the results.

- 500GB EZT vmdk, PVSCSI controller, VMFS6 datastore from AFA array

- 500GB RDM, PVSCSI controller, same AFA array

The SCSI inquiry stats for both devices are almost the same except that the ‘Maximum transfer length’ and ‘Optimal transfer length’ stats are not currently reported for the vmdk to the GOS.

Example of SCSI INQUIRY page 0xB0 for a vmdk on VMFS datastore and RDM, both from the same AFA array, on OEL 8.7 are shown as below –

Test Bed

The Test bed is a 3 Node vSphere Cluster with 3 ESXi servers, VMware ESXi, 8.0.0 Build 63221659 (Engineering Specific Build) with the setup shown as below –

- VMware ESXi, 8.0.0 Build 63221659 (Engineering Specific Build) , VM version 20

- Oracle 21.5 with Grid Infrastructure, ASM Storage and ASMLIB

- OEL UEK 8.7

The 3 ESXi servers are Super Micro SYS-2049U-TR4 , each server has 4 sockets, 24 cores per socket, Intel(R) Xeon(R) Platinum 8260L CPU @ 2.40GHz with 1.5 TB RAM . The vCenter version was 8.0.0 build 20519528

Oracle VM ‘Oracle21C-OL8-Customer’ details are shown as below.

The VM has 18 vCPU’s, 256GB RAM, the single instance database ‘ORA21C’ was created with created with multi-tenant option & provisioned with Oracle Grid Infrastructure (ASM) and Database version 21.5 on O/S RHEL 8.7.

Oracle ASM was the storage platform with Oracle ASMLIB for device persistence. Oracle SGA & PGA set to 32G and 10G respectively.

All Oracle on VMware platform best practices were followed

The vmdk’s for the Oracle VM ‘Oracle21C-OL8-Customer’ are shown as below –

- Hard Disk 1 (SCSI 0:0) – 80G for OS (/)

- Hard Disk 2 (SCSI 0:1) – 80G for Oracle Grid Infrastructure & RDBMS binaries

- Hard Disk 3 (SCSI 1:0) – 100G – ASM Disk Group GRID_DG

- Hard Disk 4 (SCSI 1:1) – 200G – ASM Disk Group DATA_DG

- Hard Disk 5 (SCSI 2:0) – 1 TB – ASM Disk Group SLOB_DG

- Hard Disk 6 (SCSI 3:0) – 100G – ASM Disk Group REDO_DG

- Hard Disk 7 (SCSI 1:2) – 512G – ASM Disk Group FRA_DG

- Hard Disk 8 (SCSI 1:3) – 256G – ASM Disk 2 for ASM Disk Group DATA_DG

- Hard Disk 9 (SCSI 2:1) – 500G – FIO test vmdk

- Hard Disk 10 (SCSI 2:2) – 500G – FIO test RDM

Oracle ASM disk details are as below:

grid@oracle21c-ol8:+ASM:/home/grid> oracleasm listdisks

DATA_DISK01

DATA_DISK02

FRA_DISK01

GRID_DISK01

REDO_DISK01

SLOB_DISK01

grid@oracle21c-ol8:+ASM:/home/grid>

Oracle ASM Disk Group details are as below:

grid@oracle21c-ol8:+ASM:/home/grid> asmcmd lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 1048576 466942 182185 0 182185 0 N DATA_DG/

MOUNTED EXTERN N 512 512 4096 4194304 524284 524188 0 524188 0 N FRA_DG/

MOUNTED EXTERN N 512 512 4096 4194304 102396 102292 0 102292 0 N GRID_DG/

MOUNTED EXTERN N 512 512 4096 1048576 102399 49580 0 49580 0 N REDO_DG/

MOUNTED EXTERN N 512 512 4096 4194304 1048572 126640 0 126640 0 N SLOB_DG/

grid@oracle21c-ol8:+ASM:/home/grid>

Oracle ASM Disks details are as below:

grid@oracle21c-ol8:+ASM:/home/grid> asmcmd lsdsk -k

Total_MB Free_MB OS_MB Name Failgroup Site_Name Site_GUID Site_Status Failgroup_Type Library Label Failgroup_Label Site_Label UDID Product Redund Path

204799 80026 204799 DATA_DISK01 DATA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK01 UNKNOWN ORCL:DATA_DISK01

262143 102159 262143 DATA_DISK02 DATA_DISK02 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) DATA_DISK02 UNKNOWN ORCL:DATA_DISK02

524284 524188 524287 FRA_DISK01 FRA_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) FRA_DISK01 UNKNOWN ORCL:FRA_DISK01

102396 102292 102399 GRID_DISK01 GRID_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) GRID_DISK01 UNKNOWN ORCL:GRID_DISK01

102399 49580 102399 REDO_DISK01 REDO_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) REDO_DISK01 UNKNOWN ORCL:REDO_DISK01

1048572 126640 1048575 SLOB_DISK01 SLOB_DISK01 00000000000000000000000000000000 REGULAR ASM Library – Generic Linux, version 2.0.17 (KABI_V2) SLOB_DISK01 UNKNOWN ORCL:SLOB_DISK01

grid@oracle21c-ol8:+ASM:/home/grid>

Test Use case

2 Test cases using different load generators were used, without and with , the VMware Engineering provided build specific for testing the performance improvement.

- FIO test

- Run 1 – VMDK without the Engineering fix v/s RDM

- Run 2 – VMDK with the Engineering fix

- Oracle workload testing using SLOB

- without Engineering fix

- with Engineering fix

FIO Run 1 – VMDK without the Engineering fix v/s RDM

We ran FIO testing on the test bed, without the fix , against a VMDK & RDM (independent of Engineering fix as disk specifications are vendor specific).

FIO parameter file for both test runs are as below –

[root@oracle21c-ol8 ~]# cat fio.txt

[global]

ioengine=libaio

direct=1

group_reporting

time_based

unified_rw_reporting=1

#

randrepeat=1

readwrite=write

[job1]

loops=1

runtime=5m

name=fiotest

[root@oracle21c-ol8 ~]#

In the case where the INQUIRY page 0xB0 is not supported for the vmdk

- one could potentially set the ‘max_sectors_kb’=32767, maximum value as the ‘max_hw_sector_kb’ and assume that the IO size would then be 32M and the BW would be much higher, not true!!!

- the IO size & bandwidth will be capped at the physical limits of the underlying physical device

With ‘Maximum transfer length’ and ‘Optimal transfer length’ being set to 0 (default)

- this may lead to inconsistent performance across different storage platform as every storage vendor may have certain IO size maximums and so this will result in inconsistent performance.

- this is may also cause inconsistent workload performance with different versions of the Guest OS as each OS may behave and report different, in absence of any real data

3 separate runs of FIO were done as part of Run 1

- VMDK (/dev/sdi)

- FIO run with max_sectors_kb=1280 (default)

- FIO run with max_sectors_kb=32767 (set to maximum value of ‘max_hw_sector_kb)

- RDM (/dev/sdj)

- FIO with max_sectors_kb=4096 (this is derived from the disk specifications of the Array vendor and cannot be changed, independent of VMware Engineering fix)

In case, one if wondering if they could increase the ‘max_sectors_kb’ for the RDM to the maximum value i.e. ‘max_hw_sectors_kb’ – we were not able to as the RDM disk specifications is vendor specific and in this case , the vendor AFA allows only 8192 blocks / 4M maximum, see error message below. This is vendor specific limitation.

root@oracle21c-ol8 ~]# echo 32767 > /sys/block/sdj/queue/max_sectors_kb

-bash: echo: write error: Invalid argument

[root@oracle21c-ol8 ~]#

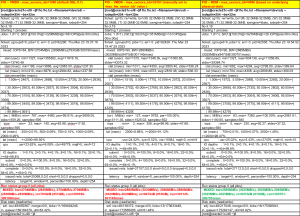

Checking the ‘Maximum transfer length’ and ‘Optimal transfer length’ values across 3 use cases –

FIO test results 3 use cases –

IOSTAT results for FIO test 3 use cases –

Summarizing our FIO results from “FIO Run 1 – VMDK without the Engineering fix v/s RDM”

- FIO Bandwidth

- VMDK with max_sector_size=32767 and RDM test run – Similar results

- VMDK with max_sectors_kb=1280 (default) – Slightly less

- IOSTAT wKB/s – All runs – Similar around 3MB/s

- IOSTAT wareq-sz – reflected the max_sector_kb settings for that device

We captured the esxtop output to see if we had any different results for the Write bandwidth for both the VMDK datastore LUN (naa.624a9370a841b405a3a348ca007eebe1) and RDM (naa.624a9370a841b405a3a348ca00012ac0)

We see similar results for Write MBWRTN/s in all 3 cases around 3MB/s

esxtop Output – Datastore LUN naa.624a9370a841b405a3a348ca007eebe1 – VMDK max_sectors_kb=1280

esxtop Output – Datastore LUN naa.624a9370a841b405a3a348ca007eebe1 – VMDK max_sectors_kb=32767

The IOSTAT wareq-sz , in case of VMDK with max_sectors_kb=32767 (maximum) , did reflect that setting but it did not change anything from a Write bandwidth perspective !!!!!

esxtop Output – RDM with LUN naa.624a9370a841b405a3a348ca00012ac0 – RDM max_sectors_kb=4096

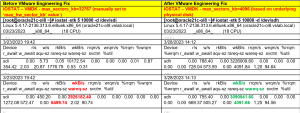

FIO Run 2 – VMDK with the Engineering fix

With VMware Engineering fix reporting more appropriate values for Optimal and Maximum transfer length fields in Vital Product Data (VPD) page B0 response, based on the underlying physical disk from the AFA, we can see that the ‘Maximum transfer length’ and ‘Optimal transfer length’ for the vmdk is now reported correctly by the GOS.,

Let’s compare

- VMDK without Engineering fix, max_sectors_kb=32767

- VMDK with Engineering fix, max_sectors_kb=4096

Checking the ‘Maximum transfer length’ and ‘Optimal transfer length’ values across 2 use cases –

FIO test results 2 use cases –

IOSTAT test results for FIO 2 use cases –

Summarizing our FIO results from “FIO Run 2 – VMDK with the Engineering fix”

- FIO Bandwidth – VMDK with Engineering fix v/s RDM – Higher

- IOSTAT wKB/s – VMDK with Engineering fix v/s RDM – Higher

- IOSTAT wareq-sz – reflected the max_sector_kb settings for that device

We captured the esxtop output to see if we had any different results for the Write bandwidth for the VMDK datastore LUN (naa.624a9370a841b405a3a348ca007eebe1) . We see similar results as earlier for Write MBWRTN/s around 3MB/s

esxtop Output – Datastore LUN naa.624a9370a841b405a3a348ca007eebe1 – VMDK max_sectors_kb=4096

Graphical representation of the results is shown as below.

As indicated earlier, the outlier in the above graph with the IOSTAT ‘wareq-sz’ comes from the fact that one could potentially set the ‘max_sectors_kb’=32767, maximum value as the ‘max_hw_sector_kb’ and assume that the IO size would then be 32M but as wee see, the IO size & bandwidth will be capped at the physical limits of the underlying physical device.

In summary, with the VMware Engineering provided fix, we see the below improvements –

- correctly reported values for ‘Maximum transfer length’ and ‘Optimal transfer length’ for the vmdk with no reduction of bandwidth

- better predictable results with correct ‘Write Average Request Size’ reported by iostat on the GOS with every run

Remember, this blog is not meant to be a performance benchmarking-oriented blog in any way. Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing, so the performance improvement I got with my workload in my lab is in no way representative of any real production workload which means the performance improvements for real world workloads will be better.

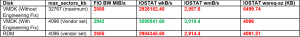

Oracle workload test results using SLOB – without and with the Engineering provided fix

Our second test was to run SLOB workload generator against a 1 TB ASM disk backed vmdk, without and with the Engineering provided fix and study the workload test results.

SLOB 2.5.4.0 was chosen as the load generator for this exercise with following SLOB parameters set as below:

- UPDATE_PCT=100

- RUN_TIME=300

- SCALE=32G

- WORK_UNIT=3

- REDO_STRESS=LITE

We deliberately chose the minimum Work Unit size to drive the most amount of IO without stressing REDO to study the performance metrics differences between the 2 scenarios, without and with the Engineering provided fix.

We ran multiple SLOB runs for all the 3 individual use cases below to compare and study the Oracle metrics, without and with the Engineering fix.

3 Oracle workload SLOB Use Cases

- No Engineering Fix – default values for ‘max_sectors_kb’

- No Engineering Fix – set disk ‘max_sectors_kb’=32767 (‘max_sectors_kb’ = ‘max_hw_sector_kb’)

- With Engineering Fix – ‘Maximum transfer length’ and ‘Optimal transfer length’ set to 8192 blocks / 4M as reported by GOS

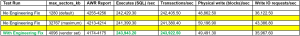

With the Oracle workload SLOB testing, comparing the results without and with the Engineering fix – with lower Physical writes(blocks)/sec and Write Io requests/sec, we achieved

- Executes (SQL)/sec – Increased with the Engineering fix and was predictable and consistent

- Transactions/sec – Increased with the Engineering fix and was predictable and consistent

In conclusion, we were able to Execute more SQL/sec and hence more Transactions/sec with lower Physical writes(blocks)/sec and Write Io requests/sec with the Engineering fix, which means more work done.

Graphical representation of the results is shown as below.

Again, remember, this blog is not meant to be a performance benchmarking-oriented blog in any way. Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing, so the performance improvement I got with my workload in my lab is in no way representative of any real production workload which means the performance improvements for real world workloads will be better.

Summary

This blog is a preview of the changes VMware Engineering will be working on to report more appropriate values for ‘Optimal transfer length’ and ‘Maximum transfer length’ fields in Vital Product Data (VPD) page B0 response, for the underlying physical device, hosting the vmdk’s, to Guest OS for optimal IO performance for Business-Critical Workloads on VMware platform.

This will ensure VMware vSphere platform is more performant for customers to run their Business-Critical workloads and to meet customers stringent SLA’s.

This blog is not meant to be a performance benchmarking-oriented blog in any way.

Remember, any performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing, so the performance improvement I got with my workload in my lab is in no way representative of any real production workload which means the performance improvements for real world workloads will be better.

Summarizing our FIO results from “FIO Run 1 – VMDK without the Engineering fix v/s RDM”

- FIO Bandwidth

- VMDK with max_sector_size=32767 and RDM test run – Similar results

- VMDK with max_sectors_kb=1280 (default) – Slightly less

- IOSTAT wKB/s – All runs – Similar around 3MB/s

- IOSTAT wareq-sz – reflected the max_sector_kb settings for that device

Summarizing our FIO results from “FIO Run 2 – VMDK with the Engineering fix”

- FIO Bandwidth – VMDK with Engineering fix v/s RDM – Higher

- IOSTAT wKB/s – VMDK with Engineering fix v/s RDM – Higher

- IOSTAT wareq-sz – reflected the max_sector_kb settings for that device

In summary, with the VMware Engineering provided fix, we see the below improvements –

- correctly reported values for ‘Maximum transfer length’ and ‘Optimal transfer length’ for the vmdk with no reduction of bandwidth

- better predictable results with correct ‘Write Average Request Size’ reported by iostat on the GOS with every run

With the Oracle workload SLOB testing , comparing the results without and with the Engineering fix – with lower Physical writes(blocks)/sec and Write Io requests/sec, we achieved

- Executes (SQL)/sec – Increased with the Engineering fix and was predictable and consistent

- Transactions/sec – Increased with the Engineering fix and was predictable and consistent

In conclusion, we were able to Execute more SQL/sec and hence more Transactions/sec with lower Physical writes(blocks)/sec and Write Io requests/sec with the Engineering fix, which means more work done.

Acknowledgements

This blog was authored by Sudhir Balasubramanian, Senior Staff Solution Architect & Global Oracle Lead – VMware.

Many thanks to the following for providing VMware Engineering fix and guidance.

- Sujay Godbole, Staff Core Engineer, VMware

Many thanks to the following for proving their invaluable support and inputs in this effort.

- Gautam Swamy, Sr Manager – Core Storage, VMware

- Naveen Krishnamurthy, Senior Product Line Manager – Core Storage, VMware

Conclusion

Business Critical Oracle Workloads have stringent IO requirements and enabling, sustaining, and ensuring the highest possible performance along with continued application availability is a major goal for all mission critical Oracle applications to meet the demanding business SLA’s, all the way from on-premises to VMware Hybrid Clouds

All Oracle on VMware vSphere collaterals can be found in the url below.

Oracle on VMware Collateral – One Stop Shop

https://blogs.vmware.com/apps/2017/01/oracle-vmware-collateral-one-stop-shop.html

Optimizing Oracle workload performance with VMware Guest Operating System Storage Improvements Share on X

Discover more from VMware Cloud Foundation (VCF) Blog

Subscribe to get the latest posts sent to your email.