This is the second blog in the “Deploying Oracle Workloads on VMware vSphere 8.0 using NVMe/TCP backed Lightbits software” series.

The first blog “Deploying Oracle Workloads on VMware vSphere 8.0 using NVMe/TCP with Lightbits Scale out Disaggregated Storage” can be found here.

Business-critical Oracle workloads have stringent I/O requirements. Enabling, sustaining, and ensuring the highest possible performance along with continued application availability is a major goal for all mission-critical Oracle applications that must meet demanding business SLAs, all the way from on-premises to VMware Hybrid Clouds.

Oracle RAC provides high availability and scalability by having multiple instances access a single database, which prevents the server from being a single point of failure. Oracle RAC enables you to combine smaller commodity servers into a cluster to create scalable environments that support mission-critical business applications.

With the increasing need for data to be “fast and furious”, customers are turning to the NVMe transfer protocol for accessing data quickly from flash memory storage devices such as solid-state drives (SSDs).

NVMe, or nonvolatile memory express, is a storage access protocol and transport protocol for flash and next-generation solid-state drives (SSDs) that delivers high throughput and fast response times for a variety of traditional and modern workloads. While all-NVMe server-based storage has been available for years, the use of non-NVMe protocols for storage networking can add significant latency.

To address this performance bottleneck, NVMe over TCP (NVMe/TCP) extends NVMe’s performance and latency benefits across TCP-based network fabrics.

We’ve heard from our customers that they would like to run NVMe/TCP because:

- TCP/IP is ubiquitous

- Well understood – TCP is probably the most common transport

- High performance – TCP delivers excellent performance scalability

- Well suited for large scale deployments and longer distances

- Actively developed – maintenance and enhancements are developed by major players

- Inherently supports in-transit encryption

More information on NVMe/TCP Support with VMware vSphere 7 Update 3 can be found here.

More information on VMware NVMe Concepts can be found here.

Key points to take away from this blog

This blog is not meant to be a performance benchmarking-oriented blog.

This blog is meant to raise the awareness of the simplicity and seamless use of NVMe/TCP storage backed datastores provided by Lightbits Storage to provide vmdk based storage for business-critical Oracle RAC workloads.

Oracle non-RAC workloads can take advantage of NVMe/TCP storage by using vmdk’s attached to a VM vNVMe controller on that storage, gaining high throughput, low latency, fast response times and reduced CPU utilization.

Oracle RAC workloads can take advantage of the same NVMe/TCP storage for all of the above reasons by using vmdk’s attached to a VM PVSCSI controller on that storage.

As per KB 1034165, there is a current restriction that applies to RAC only: “When using the multi-writer mode, the virtual disks must NOT be attached to the virtual NVMe controller”. VMware Engineering is aware of this and has this feature slated on the release roadmap.

From a functional perspective, for an Oracle workload (RAC & non-RAC) or pretty much any application on the VMware platform, a vmdk carved from an NVMe/TCP backed datastore is just a regular vmdk. It gets attached to the VM and consumed the same way as any regular vmdk on a VMFS / vVOL / NFS / vSAN datastore.

Oracle Real Application Clusters (RAC) on VMware vSphere

Oracle Clusterware is portable cluster software that provides comprehensive multi-tiered high availability and resource management for consolidated environments. It supports clustering of independent servers so that they cooperate as a single system.

Oracle Clusterware is the integrated foundation for Oracle Real Application Clusters (RAC), and the high-availability and resource-management framework for all applications on any major platform.

There are two key requirements for Oracle RAC:

• Shared storage

• Multicast Layer 2 networking

These requirements are fully addressed when running Oracle RAC on VMware vSphere, as both shared storage and Layer 2 networking are natively supported.

VMware vSphere HA clusters enable a collection of VMware ESXi hosts to work together so that, as a group, they provide higher infrastructure-level availability for VMs than each ESXi host can provide individually. VMware vSphere HA provides high availability for VMs by pooling the VMs and the hosts on which they reside into a cluster. Hosts in the cluster are monitored and, in the event of a failure, the VMs on a failed host are restarted on alternate hosts.

Oracle RAC and VMware HA solutions are complementary to each other. Running Oracle RAC on a VMware platform provides the application-level HA enabled by Oracle RAC, in addition to the infrastructure-level HA enabled by VMware vSphere.

More information on Oracle RAC on VMware vSphere can be found at Oracle VMware Hybrid Cloud High Availability Guide – REFERENCE ARCHITECTURE

VMware multi-writer attribute for shared vmdk’s

VMFS is a clustered file system that, by default, prevents multiple VMs from opening and writing to the same virtual disk (.vmdk file). This stops more than one VM from inadvertently accessing the same .vmdk file. The multi-writer option allows VMFS-backed disks to be shared and written to by multiple VMs. An Oracle RAC cluster using shared storage is a typical use case.

By default, the simultaneous multi-writer “protection” is enabled for all .vmdk files, i.e. each VM has exclusive access to its own vmdk files. For all of the VM’s to access the shared vmdk’s simultaneously, the multi-writer protection needs to be disabled.

KB 1034165 provides more details on how to set the multi-writer option to allow VM’s to share vmdk’s.
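As a rough illustration of what that setting looks like at the configuration level, the sketch below builds the per-disk entry in the `scsiX:Y.sharing = "multi-writer"` form used for a VM's .vmx file. The helper name and the example address (SCSI 1:0, the shared disk used in the test bed later in this blog) are illustrative assumptions; in current vSphere releases the option is normally set per disk in the vSphere Client rather than by editing the file by hand, and KB 1034165 remains the authoritative procedure.

```python
def multi_writer_vmx_entry(controller: int, unit: int) -> str:
    """Return the .vmx line that marks one SCSI disk as multi-writer.

    Assumption: the key follows the scsiX:Y.sharing form, where X is the
    SCSI controller number and Y is the unit number of the shared disk.
    """
    if controller < 0 or unit < 0:
        raise ValueError("controller and unit numbers must be non-negative")
    return f'scsi{controller}:{unit}.sharing = "multi-writer"'


if __name__ == "__main__":
    # Hypothetical example: the shared RAC disk at SCSI 1:0.
    print(multi_writer_vmx_entry(1, 0))
```

The same entry has to be present on every RAC VM that shares the disk, since each VM carries its own configuration for the shared vmdk.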

In the case of VMware vSphere on VMFS (non-vSAN storage), vVol (beginning with ESXi 6.5) and NFS datastores, using the multi-writer attribute to share the VMDKs for Oracle RAC has the following requirements:

- SCSI bus sharing needs to be set to none

- VMDKs must be Eager Zeroed Thick (EZT); thick provision lazy zeroed or thin-provisioned formats are not allowed

- VMDK’s need not be set to Independent persistent
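The rules in this list, together with the vNVMe restriction quoted from KB 1034165 earlier in this blog, can be expressed as a small pre-flight check. This is a minimal sketch rather than a VMware tool: the `SharedDisk` class, its field names and the string values are hypothetical and would need to be mapped onto whatever inventory data is actually available.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SharedDisk:
    """Hypothetical description of one shared vmdk; names are illustrative."""
    provisioning: str      # "eagerzeroedthick", "lazyzeroedthick" or "thin"
    controller_type: str   # e.g. "pvscsi" or "nvme"
    bus_sharing: str       # SCSI bus sharing on the controller, e.g. "none"
    multi_writer: bool     # True when the multi-writer option is set


def check_rac_shared_disk(disk: SharedDisk) -> List[str]:
    """Return a list of problems; an empty list means the rules are met."""
    problems = []
    if not disk.multi_writer:
        problems.append("multi-writer option is not set")
    if disk.provisioning != "eagerzeroedthick":
        problems.append("disk must be Eager Zeroed Thick")
    if disk.bus_sharing != "none":
        problems.append("SCSI bus sharing must stay at none")
    if disk.controller_type == "nvme":
        problems.append("multi-writer disks must not use the vNVMe controller")
    return problems


if __name__ == "__main__":
    disk = SharedDisk("eagerzeroedthick", "pvscsi", "none", True)
    print(check_rac_shared_disk(disk) or "shared disk looks OK")
```

Disk mode is deliberately left out of the check, because Independent-Persistent is not a requirement for the multi-writer option.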

The below table describes the various Virtual Machine Disk Modes

While Independent-Persistent disk mode is not a hard requirement for enabling the multi-writer option, the default Dependent disk mode causes the “cannot snapshot shared disk” error when a VM snapshot is taken. Using Independent-Persistent disk mode allows a snapshot of the OS disk to be taken, while the shared disk needs to be backed up separately by third-party software such as Oracle RMAN.

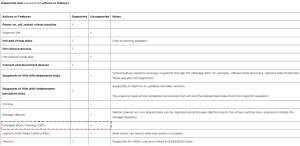

Supported and Unsupported Actions or Features with Multi-Writer Flag

*** Important ***

- SCSI bus sharing is left at its default and not touched at all when using shared vmdk’s. Leave it alone for RAC with shared vmdk’s.

- It is only used for RAC with RDMs (Raw Device Mappings) as shared disks.

VMware recommends using shared VMDK(s) with the multi-writer setting for provisioning shared storage for ALL Oracle RAC environments (KB 1034165).

More information on Oracle RAC on VMware vSphere can be found at Oracle VMware Hybrid Cloud High Availability Guide – REFERENCE ARCHITECTURE

Oracle Storage using vmdk’s on Lightbits NVMe/TCP storage

Oracle (RAC & non-RAC) workloads on the VMware platform can take advantage of the various storage options available, which include running Oracle workloads on VMware datastores backed by Lightbits NVMe/TCP storage.

More information on storage options available for Oracle workloads on VMware platform can be found here.

From an Oracle (RAC & non-RAC) workload perspective, once a vmdk is created on a Lightbits NVMe/TCP storage backed VMware datastore and added to a Linux VM, we can log in to the guest operating system and use the newly created Linux device as either of the following:

- File system

- Oracle Automatic Storage Management (ASM) disk

The remaining steps of partitioning the disks, creating ASM devices on them, adding them to ASM disk groups and creating a Single Instance / RAC database are the same as in any physical architecture.
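For readers who want to see roughly what those guest-side steps involve, the sketch below assembles a dry-run list of commands for partitioning a new device and labeling it with ASMLIB. It only builds strings and runs nothing. The device path `/dev/sdc` and the label `DATA01` are hypothetical placeholders, and the exact commands and options should be taken from the Oracle and operating system documentation for the release in use.

```python
from typing import List


def asm_disk_plan(device: str, asm_label: str) -> List[str]:
    """Build a dry-run command list for preparing one ASMLIB disk.

    Assumption: one partition spanning the whole device. Device names
    ending in a digit (e.g. /dev/nvme0n1) get a 'p' before the
    partition number, others (e.g. /dev/sdc) do not.
    """
    partition = f"{device}p1" if device[-1].isdigit() else f"{device}1"
    return [
        f"parted -s {device} mklabel gpt",
        f"parted -s {device} mkpart primary 1MiB 100%",
        f"oracleasm createdisk {asm_label} {partition}",
        "oracleasm scandisks",   # run on the other RAC node(s) as well
        "oracleasm listdisks",
    ]


if __name__ == "__main__":
    # Hypothetical device and label, for illustration only.
    for command in asm_disk_plan("/dev/sdc", "DATA01"):
        print(command)
```

For a RAC cluster the disk is partitioned and labeled once from one node; the other nodes only need to rescan to see the label on the shared vmdk.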

Test Use case

This test bed use case showcases the validation of Oracle RAC on VMware vSphere 8.0 using NVMe/TCP with Lightbits software, with the following setup:

- VMware ESXi, 8.0.0 Build 20513097

- Lightbits software

- Oracle RAC 19.12 with Grid Infrastructure, ASM Storage and ASMLIB on Oracle Enterprise Linux (OEL) 8.5

Test Bed

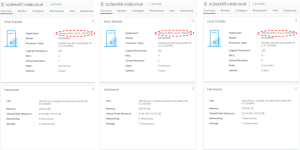

The test bed is a 3-node vSphere cluster with 3 ESXi servers, all running ESXi 8.0.0 Build 20513097.

The server names are ‘sc2esx67.vslab.local’, ‘sc2esx68.vslab.local’ and ‘sc2esx69.vslab.local’.

The 3 ESXi servers are Intel NF5280M6 Ice Lake servers; each server has 2 sockets with 32 cores per socket (Intel(R) Xeon(R) Gold 6338 CPU @ 2.00GHz) and 256GB RAM.

The vCenter version was 8.0.0 build 20519528.

The details of the Lightbits software and the Lightbits plugin can be found in the first blog “Deploying Oracle Workloads on VMware vSphere 8.0 using NVMe/TCP with Lightbits Scale out Disaggregated Storage”.

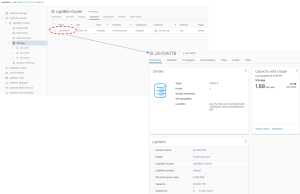

The NVMe/TCP storage and datastore details are shown below.

The 3 ESXi servers are connected to the Lightbits backed NVMe/TCP datastore.

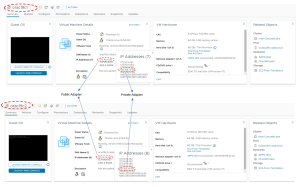

Details of the Oracle RAC VM’s ‘orac19c1’ and ‘orac19c2’ are shown below.

Each VM has 16 vCPU’s and 64GB vRAM. RAC database ‘orac19c’ was created with multi-tenant option & provisioned with Oracle Grid Infrastructure (ASM) and Database version 19.15 on O/S OEL 8.5 UEK.

Oracle ASM was the storage platform with Oracle ASMLIB for device persistence. Oracle SGA & PGA were set to 32G and 6G, respectively. All Oracle on VMware best practices were followed.

The Public and Private Adapter IP address information is shown below.

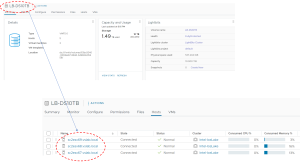

The vmdk’s for the VM’s ‘orac19c1’ & ‘orac19c2’ are shown below:

- Hard Disk 1 (SCSI 0:0) – 80G for OS (/)

- Hard Disk 2 (SCSI 0:1) – 80G for Oracle Grid Infrastructure and RDBMS binaries

- Hard Disk 3 (SCSI 1:0) – 500G for Oracle RAC (GIMR, CRS, VOTE, DATA) on Lightbits NVMe/TCP datastore

Note – We used 1 VMDK for the entire RAC cluster, as the intention here was to demonstrate the simplicity of using a Lightbits NVMe/TCP datastore for Oracle RAC workloads.

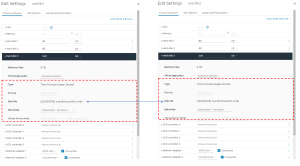

Details of the shared Hard Disk 3 vmdk on Lightbits NVMe/TCP storage are shown below.

The recommendation is to follow the RAC deployment guide on VMware for best practices with respect to the RAC layout: Oracle VMware Hybrid Cloud High Availability Guide – REFERENCE ARCHITECTURE

From a functional perspective, for an Oracle workload (RAC and non-RAC) or pretty much any application on the VMware platform, a vmdk carved from an NVMe/TCP backed datastore is just a regular vmdk. It gets attached to the VM and consumed the same way as any regular vmdk on a VMFS / vVOL / NFS / vSAN datastore.

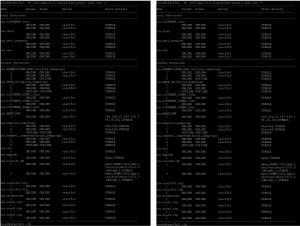

Oracle ASM disk group details for both RAC VM’s ‘orac19c1’ and ‘orac19c2’ are shown below:

The OS details for RAC VM ‘orac19c1’ are shown below; RAC VM ‘orac19c2’ has the same OS version.

The cluster services are up, as shown below.

Summary

This blog is not meant to be a performance benchmarking-oriented blog.

This blog is meant to raise the awareness of the simplicity and seamless use of NVMe/TCP storage backed datastores provided by Lightbits Storage to provide vmdk based storage for business-critical Oracle RAC workloads.

Oracle non-RAC workloads can take advantage of NVMe/TCP storage by using vmdk’s attached to a VM vNVMe controller on that storage, gaining high throughput, low latency, fast response times and reduced CPU utilization.

Oracle RAC workloads can take advantage of the same NVMe/TCP storage for all of the above reasons by using vmdk’s attached to a VM PVSCSI controller on that storage. As per KB 1034165, there is a current restriction that applies to RAC only: “When using the multi-writer mode, the virtual disks must NOT be attached to the virtual NVMe controller”. VMware Engineering is aware of this and has this feature slated on the release roadmap.

From a functional perspective, for an Oracle workload (RAC & non-RAC) or pretty much any application on the VMware platform, a vmdk carved from an NVMe/TCP backed datastore is just a regular vmdk. It gets attached to the VM and consumed the same way as any regular vmdk on a VMFS / vVOL / NFS / vSAN datastore.

This test bed use case showcases the validation of Oracle RAC on VMware vSphere 8.0 using NVMe/TCP with Lightbits software, with the following setup:

- VMware ESXi, 8.0.0 Build 20513097

- Lightbits software

- Oracle RAC 19.12 with Grid Infrastructure, ASM Storage and ASMLIB on Oracle Enterprise Linux (OEL) 8.5

Acknowledgements

This blog was authored by Sudhir Balasubramanian, Senior Staff Solution Architect & Global Oracle Lead – VMware.

Many thanks to the following for providing their invaluable support in this effort:

- Sagy Volkov & Gagan Gill, Lightbits

Conclusion

All Oracle on VMware vSphere collateral can be found at the URL below.

Oracle on VMware Collateral – One Stop Shop

https://blogs.vmware.com/apps/2017/01/oracle-vmware-collateral-one-stop-shop.html
