A few days ago I posted about why vSphere Replication (VR) is much quicker in version 5.5 than it was in earlier releases.

Now let’s take a look at what the result of those changes is.

VR Performance

I replicated a single VM with a 32 GB thick-provisioned disk, of which about 12 GB is actually allocated.

It took 44 minutes to copy that to the target location, including disk creation and setup time. Specifically, 11.77 GB over 44 minutes, 21 seconds. That’s about 4.56 MBps or 36.5 Mbps.
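For anyone who wants to check the arithmetic, here is a minimal Python sketch of the conversion. The `throughput` helper is purely illustrative (it is not part of any VMware tooling), and it assumes 1 GB = 1024 MB, which is the convention that reproduces the figures above.

```python
def throughput(size_gb, minutes, seconds=0.0):
    """Return (MBps, Mbps) for a transfer.

    Assumption: 1 GB is treated as 1024 MB, and 1 byte as 8 bits.
    """
    elapsed_s = minutes * 60 + seconds
    mbytes_per_s = size_gb * 1024 / elapsed_s
    return mbytes_per_s, mbytes_per_s * 8


# Full duration of 44 minutes, 21 seconds
exact_mbytes, exact_mbits = throughput(11.77, 44, 21)
# Duration rounded down to 44 minutes
rounded_mbytes, rounded_mbits = throughput(11.77, 44)

print(f"44m21s: {exact_mbytes:.2f} MBps, {exact_mbits:.1f} Mbps")
print(f"44m00s: {rounded_mbytes:.2f} MBps, {rounded_mbits:.1f} Mbps")
```

Rounding the duration to 44 minutes lands on the roughly 4.56 MBps / 36.5 Mbps quoted above; counting the extra 21 seconds gives a slightly lower number.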

Now, that’s pretty good for a single VMDK! What about when we start stacking up extra replications? What does that do to our throughput?

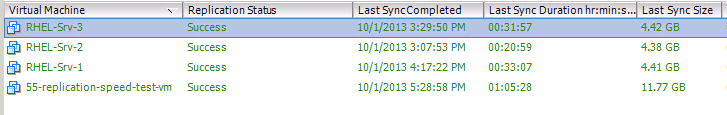

I wiped out that replica and started the replication again, this time alongside three other VMs at the same time. They had 2 VRS appliances to work with at the recovery site.

They completed at different times: the 3 smaller ones of ~4.4 GB each took between 21 and 33 minutes, and the 32 GB VM took about an hour. The aggregate bandwidth for the first 20 minutes, while all four were running, is the interesting number to figure out.

- RHEL-SRV-1: 4.41 GB in 33 minutes for ~18 Mbps

- RHEL-SRV-2: 4.38 GB in 21 minutes for ~28 Mbps

- RHEL-SRV-3: 4.42 GB in 32 minutes for ~18.8 Mbps

- 55-replication-speed-test-vm: 11.77 GB in 65 minutes for ~24.7 Mbps

Keep in mind these are parallel replications! We can’t simply add the numbers together to get a precise total speed, since the replications finished at different times, but we are seeing *approximately* 89.5 Mbps for the 4 VMs in parallel, or about 45 Mbps to each VR Appliance at the recovery site.
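To make the caveat concrete, here is a rough Python sketch that computes both the naive sum of the per-VM rates and a more conservative figure (total data divided by the longest run). The sizes and durations come from the list above; the 1 GB = 1024 MB convention and the variable names are assumptions for illustration only.

```python
# (VM name, size in GB, duration in minutes), taken from the list above
replications = [
    ("RHEL-SRV-1", 4.41, 33),
    ("RHEL-SRV-2", 4.38, 21),
    ("RHEL-SRV-3", 4.42, 32),
    ("55-replication-speed-test-vm", 11.77, 65),
]


def mbps(size_gb, minutes):
    """Average rate in megabits per second, assuming 1 GB = 1024 MB."""
    return size_gb * 1024 * 8 / (minutes * 60)


rates = {name: mbps(size, mins) for name, size, mins in replications}

# Naive aggregate: only meaningful while all four replications overlap.
naive_total = sum(rates.values())

# Conservative aggregate: all data divided by the longest-running replication.
total_gb = sum(size for _, size, _ in replications)
longest_min = max(mins for _, _, mins in replications)
whole_window = mbps(total_gb, longest_min)

for name, rate in rates.items():
    print(f"{name}: {rate:.1f} Mbps")
print(f"naive sum: {naive_total:.1f} Mbps ({naive_total / 2:.1f} per appliance)")
print(f"over the whole {longest_min} minutes: {whole_window:.1f} Mbps")
```

The naive sum comes out near 90 Mbps, in line with the approximate figure above, while averaging over the full 65 minutes gives a much lower number because only one replication is still running for the second half of that window.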

What about our VR Appliances? Do they bear that out? Well, I looked at the maximum receive and transmit throughput rates (receive and transmit should be very close to identical, since the appliances just pass the data through to the cluster) and we see 9793 KBps (78 Mbps) on one and 6764 KBps (54 Mbps) on the other, for a total of 16557 KBps or 132 Mbps at their peak. Now THAT’s pretty good performance!
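The appliance figures can be checked the same way. This is a small Python sketch of the KBps to Mbps conversion; the appliance labels are placeholders, and it assumes decimal units (1 Mb = 1000 Kb), since that is what matches the rounded numbers above.

```python
def kbytes_to_mbits(kbytes_per_s, kilo=1000):
    """Convert kilobytes per second to megabits per second.

    Assumption: decimal units, i.e. 1 Mb = 1000 Kb.
    """
    return kbytes_per_s * 8 / kilo


# Peak throughput per appliance in KBps (labels are placeholders)
peaks_kbps = {"appliance-1": 9793, "appliance-2": 6764}

peaks_mbps = {name: kbytes_to_mbits(v) for name, v in peaks_kbps.items()}
total_kbps = sum(peaks_kbps.values())
total_mbps = kbytes_to_mbits(total_kbps)

for name, rate in peaks_mbps.items():
    print(f"{name}: {rate:.0f} Mbps")
print(f"total: {total_kbps} KBps = {total_mbps:.0f} Mbps")
```

Note that these are peak rates, so it is expected that they sit above the averages calculated from the replication durations.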

You may have really fast links, say 1 Gbps, and think that this is nowhere near what your links are capable of sending. But if you compare these findings with the real-world performance numbers for 5.0 shared by HOSTING in a previous blog post, you’ll see individual VM replications are already going through about twice as fast. And when we start stacking multiple appliances at the recovery site and running more VM replications in parallel, performance is no longer capped by the individual maximum buffers and maximum extents, which gives us these considerably quicker sends of data.

Coming Next – Warnings and caveats about great performance