Our team has been building solutions that combine NSX with Business Critical Applications, focusing on Zero Trust security, application mobility, operational efficiency and more.

In a previous post I published a demo about database cloning and enforcing dynamic security policies using NSX: http://blogs.vmware.com/apps/2016/04/dynamically-enforcing-security-on-a-hot-cloned-sql-server-with-vmware-nsx.html

In this blog post, we showcase stretching Oracle RAC across multiple datacenters in an Extended Oracle RAC deployment, using VMware NSX to provide L2 adjacency.

With Extended Oracle RAC, both storage and network virtualization need to be deployed to provide high availability, workload mobility, workload balancing and effective site maintenance across sites.

NSX supports multi-datacenter deployments that provide L2 adjacency in software. Put simply, NSX stretches the network so that VMs can use the same subnets in multiple sites.

The really cool thing here is that this is implemented 100% in software and can easily be extended and replicated to suit your needs. You can even choose multiple implementation paths and configurations and apply all of them at the same time, with minimal dependency on the physical infrastructure and its configuration. After all, this is virtual!

Multi-datacenter NSX can be implemented in multiple ways for different use cases:

- For Disaster Recovery: NSX is deployed to support a failover scenario in which one site is mainly active for a workload, and in case of a site failure we flip a switch so that networking is served from the secondary site.

- For Disaster Avoidance and Workload Mobility: the networking of VMs is moved to a secondary site on demand.

In both cases, a workload and its networking communicate through either one site's physical infrastructure or the other's. While one site (the primary) is active, traffic enters and leaves through that site's physical infrastructure and traverses to the secondary site when needed.

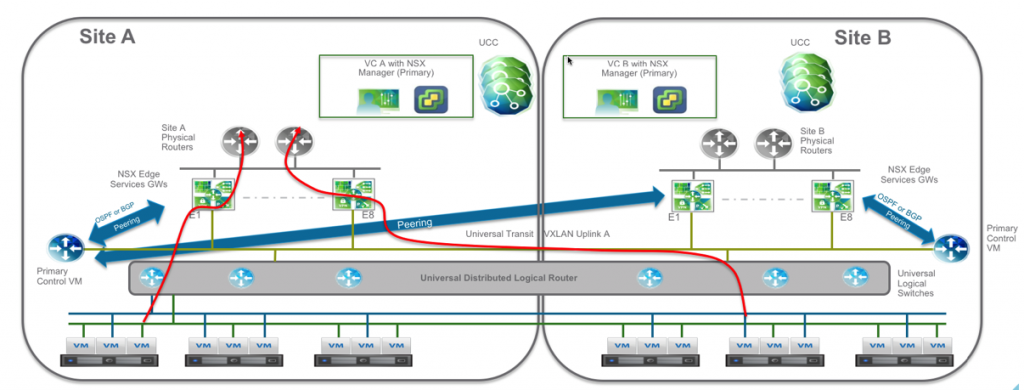

In the diagram, Site A is the primary, so Site B uses Site A's physical routers for ingress and egress traffic.

In case of a failure or a migration, Site B's infrastructure becomes active for ingress and egress.

The solution we created for Oracle RAC is different, because it is driven by Oracle RAC's unique requirements. You can see it in the demo here.

Oracle RAC requires active networking from each respective site. It also requires all nodes to have IPs on the same segment, so if the nodes are placed in multiple sites, we need a solution that makes the same segment available in both datacenters.
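To make the requirement concrete, here is a small Python sketch that checks the situation described above: RAC nodes living in two different sites while still sharing one IP segment. The segment `10.10.20.0/24`, the node names and their addresses are hypothetical values chosen only for illustration; they are not taken from the demo.

```python
import ipaddress

# Hypothetical addressing, for illustration only: one stretched segment
# and one RAC node in each site.
STRETCHED_SEGMENT = ipaddress.ip_network("10.10.20.0/24")
RAC_NODES = {
    "racnode1": ("Site A", ipaddress.ip_address("10.10.20.11")),
    "racnode2": ("Site B", ipaddress.ip_address("10.10.20.12")),
}

def nodes_outside_segment(nodes, segment):
    """Return the names of nodes whose IP is not on the shared segment."""
    return [name for name, (_site, ip) in nodes.items() if ip not in segment]

def sites_spanned(nodes):
    """Return the distinct sites that host RAC nodes, sorted by name."""
    return sorted({site for site, _ip in nodes.values()})

# Every node is on one segment even though the nodes sit in two sites,
# which is exactly the situation that needs L2 adjacency across sites.
print(nodes_outside_segment(RAC_NODES, STRETCHED_SEGMENT))  # []
print(sites_spanned(RAC_NODES))  # ['Site A', 'Site B']
```

When both checks hold (no node outside the segment, more than one site spanned), the segment itself has to exist in both datacenters, which is what NSX provides in software.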

Since all Oracle RAC nodes serve applications and users in read/write mode for scalability, performance needs to be interchangeable between them. The requirement is therefore that each site is active on its own infrastructure.

In the diagram below, taken from the demo video, you can see the two sites and that each RAC node has an "Optimized ingress" and "Local egress" networking configuration on its respective site's physical infrastructure, and no site is considered "Primary".

In this implementation, this was achieved with /32 static routes for Site B's Oracle RAC node, which are injected on Site B's edge devices and then advertised to the uplink router using OSPF.
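Why does a /32 route pull ingress traffic to Site B? IP forwarding uses longest-prefix match: when several routes cover a destination, the most specific one wins. The Python sketch below models a single routing table that has learned the stretched /24 via Site A and a /32 host route via Site B's edge. The addresses, next-hop labels and the single shared table are simplifying assumptions for illustration, not the demo's actual topology.

```python
import ipaddress

# Hypothetical routing table: the stretched /24 is reachable through Site A,
# and a /32 host route for the Site B RAC node is learned from Site B's edge.
ROUTES = [
    (ipaddress.ip_network("10.10.20.0/24"), "Site A edge"),
    (ipaddress.ip_network("10.10.20.12/32"), "Site B edge"),
]

def next_hop(destination, routes):
    """Return the next hop of the longest matching prefix, or None if no route matches."""
    dest = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in routes if dest in net]
    if not matches:
        return None
    # The most specific route (largest prefix length) wins.
    _net, hop = max(matches, key=lambda m: m[0].prefixlen)
    return hop

print(next_hop("10.10.20.11", ROUTES))  # Site A edge
print(next_hop("10.10.20.12", ROUTES))  # Site B edge
```

Only the host that actually lives in Site B is covered by the /32, so its traffic is drawn to Site B while the rest of the segment keeps following the broader route.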

One of the interesting challenges in this implementation involved the Oracle RAC SCAN IPs. SCAN (Single Client Access Name) IPs are IPs assigned to an Oracle RAC cluster and used for client-side load balancing across the cluster instances.

A SCAN can comprise a maximum of three IPs configured in DNS, and each of them can come up randomly on any node in the cluster, in either site.

To solve that problem, we created vRO workflows that detect a VM coming up on or going down from Site B and then add or remove a /32 static route on that site's edge device, supporting the movement of VMs or, in our case, the SCAN IPs.
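The decision such a workflow has to make can be expressed as a reconciliation: compare the IPs currently hosted on Site B with the /32 routes already on Site B's edge, then add the missing ones and remove the stale ones. The Python sketch below models only that decision logic. It is not the vRO workflow itself (vRO workflows are not shown in this post), it does not call any NSX API, and the function name `reconcile_host_routes` and all addresses are hypothetical.

```python
import ipaddress

def reconcile_host_routes(segment, ips_on_site_b, routes_on_edge_b):
    """Return (to_add, to_remove) lists of /32 routes for Site B's edge.

    Hypothetical helper: only /32 host routes inside the stretched segment
    are treated as managed; any other static routes are left untouched.
    """
    segment = ipaddress.ip_network(segment)
    # Host routes we want: one /32 per IP currently living on Site B.
    wanted = {
        ipaddress.ip_network(f"{ip}/32")
        for ip in ips_on_site_b
        if ipaddress.ip_address(ip) in segment
    }
    # Host routes currently present that fall under our management.
    managed = {
        net
        for net in map(ipaddress.ip_network, routes_on_edge_b)
        if net.prefixlen == 32 and net.subnet_of(segment)
    }
    to_add = [str(n) for n in sorted(wanted - managed)]
    to_remove = [str(n) for n in sorted(managed - wanted)]
    return to_add, to_remove

# A SCAN IP (hypothetical 10.10.20.50) has just come up on a Site B node,
# and another one (10.10.20.51) has moved away from Site B.
to_add, to_remove = reconcile_host_routes(
    "10.10.20.0/24",
    ips_on_site_b=["10.10.20.12", "10.10.20.50"],
    routes_on_edge_b=["10.10.20.12/32", "10.10.20.51/32", "0.0.0.0/0"],
)
print(to_add)     # ['10.10.20.50/32']
print(to_remove)  # ['10.10.20.51/32']
```

Computing the full difference, rather than reacting to a single up/down event, keeps the edge correct even if an event is missed, and restricting the managed set to /32 routes inside the stretched segment avoids touching unrelated routes such as the default route.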

Disclaimer: this solution can and will be improved from a scalability perspective, in particular the automation of route injection. From a performance and availability perspective, it is production ready.

Also, route injection is not the only way to approach this; the challenge of moving IPs can be solved through other means, or even at the presentation layer.

The demo explains step by step how this was implemented from an NSX perspective. Here is the full link to the demo:

This demo was also featured in Sudhir's and my session at VMworld 2016 here:

The following people worked with me on creating this solution:

Sudhir Balasubramanian – Oracle

Agustin Malanco – NSX

Christophe Decanini – Automation

As always, comments and questions are welcome.