by: VMware Senior Application Developer Arijit Saha and VMware Lead Application Administrator Gopala Krishna Sandur Kustigi

Most organizations today maintain an inventory of master data entities (customers, employees, products, etc.) that must be synced across various applications. Employee data is one example. Initially, VMware IT developed point-to-point integrations between our human capital management (HCM) application and the other applications that need employee data. While a point-to-point approach met functional requirements, we soon faced challenges in other areas.

The challenges

As a growing number of applications requested employee data, the point-to-point approach led to scaling, resiliency, and performance challenges that made it difficult to meet dynamic business needs in a timely manner. Key challenges included:

- The same data was fetched multiple times from the HCM app and processed in middleware for each subscriber, causing stress on both platforms.

- Frequent bottlenecks occurred in middleware because data was produced and consumed at different rates, with each consumer ingesting data at a different speed. These bottlenecks also degraded the performance of other apps running in our middleware layer.

- After any communication issue, we had to rerun the whole process starting from the source.

- The tight coupling of system components made it difficult to design and introduce any changes to business logic.

We needed new integration frameworks to efficiently manage master data entities at scale.

The solution

A publisher-subscriber (Pub-Sub) integration model offers the following benefits over point-to-point integrations:

- It eliminates unnecessary stress on the source HCM app because data is pulled or pushed from the source only once for multiple consumer apps.

- Business logic and the integration platform are decoupled.

- Mapping and data transformation logic in subscriber apps is simpler because all of them adopt a canonical structure from the source.

- The publisher and subscriber modules are programming language and communication protocol agnostic, which allows these modules to be developed faster.

With the integration framework in place, the only missing piece to complete the puzzle was a suitable message broker.

The implementation

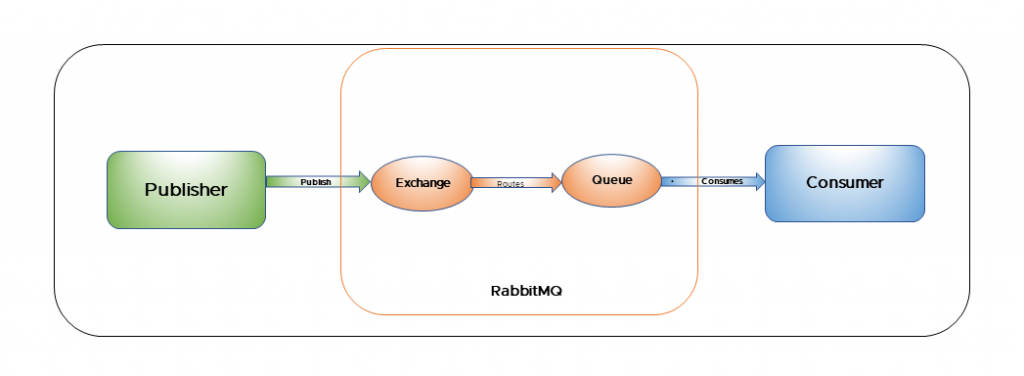

- VMware Tanzu® RabbitMQ® for VMs is a lightweight, open-source message broker. It can be easily deployed both on-premises and in the cloud, and in distributed and federated configurations to meet high scalability and availability requirements. It supports several types of exchange for different use cases. See Figure 1.

Figure 1. Tanzu RabbitMQ components

- To build a pub-sub model for an entity, a standardized canonical data model that is shared between the publisher and all the subscribers must first be defined.

- A common publisher module fetches data from the entity source only once for all subscribers and then pushes it to the Tanzu RabbitMQ exchange. This publisher contains no business logic and is unaffected by the addition, deletion, or modification of downstream subscribers, or by their failure or downtime.

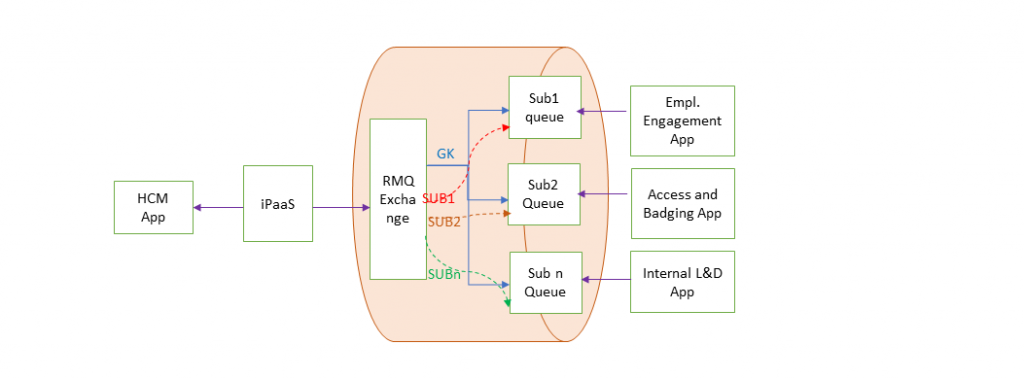

- Tanzu RabbitMQ supports different exchange types to intelligently route messages to queues. For a pub-sub model, the ‘direct’ exchange type can be used to conditionally route messages based on the routing key. This gives the flexibility to route messages to a single specific consumer (for example, to resend data after that consumer has failed), as well as to all consumers or a subset of consumers (by defining a routing key for each such set). The routing keys are defined when a queue is bound to its exchange; one queue can be bound to one exchange using multiple routing keys. See Figure 2.

Figure 2. Integration flow

Blue arrows indicate the common path for all subscribers using a generic routing key (GK); dotted arrows indicate a path to one specific subscriber module using subscriber-specific routing keys, such as ‘SUB1,’ ‘SUB2,’ and ‘SUB n.’
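The routing behavior in Figure 2 can be sketched with a small in-memory model. This is not a RabbitMQ client; it only mimics how a direct exchange matches a message's routing key against binding keys. The queue names (`sub1.queue`, `sub2.queue`) and the sample payload are assumptions made for illustration.

```python
from collections import defaultdict


class DirectExchange:
    """Tiny in-memory stand-in for a direct exchange (not a RabbitMQ client)."""

    def __init__(self):
        # routing key -> names of the queues bound with that key
        self._bindings = defaultdict(set)
        # queue name -> messages delivered to it, in arrival order
        self.queues = defaultdict(list)

    def bind(self, queue, routing_key):
        """Bind a queue to the exchange; one queue may be bound with several keys."""
        self._bindings[routing_key].add(queue)
        self.queues[queue]  # create the queue even before anything is routed to it

    def publish(self, routing_key, message):
        """Deliver the message to every queue whose binding key matches exactly."""
        targets = sorted(self._bindings.get(routing_key, ()))
        for queue in targets:
            self.queues[queue].append(message)
        return targets


exchange = DirectExchange()

# Every subscriber queue is bound with the generic key (GK)...
for name in ("sub1.queue", "sub2.queue"):
    exchange.bind(name, "GK")

# ...and with its own subscriber-specific key.
exchange.bind("sub1.queue", "SUB1")
exchange.bind("sub2.queue", "SUB2")

# Normal run: the publisher sends once and every subscriber queue gets a copy.
broadcast_targets = exchange.publish("GK", {"employee_id": "E100"})

# Replay for one subscriber only, for example after that consumer was down.
replay_targets = exchange.publish("SUB2", {"employee_id": "E100"})
```

Binding each queue twice is what lets the same publisher either broadcast to everyone or target a single subscriber without touching the other queues.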

- For each consumer, we created a new subscriber module, along with a new queue and binding in Tanzu RabbitMQ. The subscriber module listens to its specific queue. As soon as it reads a message, it transforms the message into the format the consumer specifies and transmits it.

- Consumer applications then ingest the data pushed by the subscriber app.
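As a rough illustration of the canonical model and the subscriber-side transformation described above, the sketch below serializes one canonical employee record and reshapes it for a single consumer. The field names, the `CanonicalEmployee` class, and the "directory" consumer format are all hypothetical; the article does not publish the real data model.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class CanonicalEmployee:
    # Hypothetical canonical fields; the real model is not given in the article.
    employee_id: str
    first_name: str
    last_name: str
    department: str


def to_message(employee):
    """Publisher side: serialize the canonical record once for all subscribers."""
    return json.dumps(asdict(employee), sort_keys=True).encode("utf-8")


def transform_for_directory(body):
    """Subscriber side: reshape a canonical message into one consumer's format."""
    record = json.loads(body.decode("utf-8"))
    return {
        "id": record["employee_id"],
        "displayName": f'{record["first_name"]} {record["last_name"]}',
        "orgUnit": record["department"].upper(),
    }


message = to_message(CanonicalEmployee("E100", "Ada", "Lovelace", "engineering"))
directory_payload = transform_for_directory(message)
```

Because every subscriber starts from the same canonical structure, each new consumer needs only its own small transform function rather than a new extract from the source.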

Meeting high availability and reliability requirements

To achieve a highly available (HA) and resilient message broker, Tanzu RabbitMQ is implemented using two approaches, chosen according to the application integration requirements.

- Tanzu RabbitMQ clustered on a single data center

In this approach, Tanzu RabbitMQ is clustered with seven broker nodes. For message persistence, queues are created as mirrored and durable, with an HA policy attached so that messages published to a queue are mirrored to one or more other nodes as that policy specifies.

- Tanzu RabbitMQ cluster on two different data centers

In this implementation, Tanzu RabbitMQ is clustered with queues created as mirrored and durable, with an HA policy attached.

We have two data centers, DC-1 and DC-2. RabbitMQ cluster-1 is implemented on DC-1 and RabbitMQ cluster-2 is implemented on DC-2.

The consumers and producers of each Tanzu RabbitMQ cluster are native to that cluster's data center.

In both approaches, Tanzu RabbitMQ plugins such as Shovel are set up to transfer messages between the two Tanzu RabbitMQ clusters during any subscriber app downtime and during Tanzu RabbitMQ upgrade activities.
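The HA policy attached to the mirrored queues in both approaches can be pictured as a small JSON document. The sketch below builds one using RabbitMQ's classic mirrored-queue policy keys (`ha-mode`, `ha-params`, `ha-sync-mode`). The queue name pattern and the replica count are assumptions, not values from our environment, and the helper function is hypothetical. Durability is set when a queue is declared, so it does not appear in the policy.

```python
import json


def mirrored_queue_policy(pattern, replicas):
    """Build a policy body that mirrors matching queues across `replicas` nodes."""
    if replicas < 2:
        raise ValueError("mirroring needs at least two nodes")
    return {
        "pattern": pattern,          # regex matched against queue names
        "apply-to": "queues",
        "definition": {
            "ha-mode": "exactly",    # keep exactly `ha-params` copies of each queue
            "ha-params": replicas,
            "ha-sync-mode": "automatic",
        },
    }


# Assumed naming convention: employee queues share an "employee." prefix.
policy = mirrored_queue_policy(r"^employee\.", replicas=2)
policy_json = json.dumps(policy, indent=2)
```

A body of this shape is what an administrator would typically apply through the management interface or `rabbitmqctl set_policy`; the code only constructs the document and does not contact a broker.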

With the RabbitMQ framework, we reduced the cycle time to launch subscriber apps by 25 percent and decreased the number of job execution failures caused by system overload. The success of this framework encouraged us to adopt RabbitMQ to solve other integration challenges, such as segregating batch and real-time processing in an integration platform and replaying execution failures of certain integration patterns.
