by: VMware on VMware Staff

The VMware Cloud & Productivity Engineering (CPE) team has spent the last 18+ months transitioning traditional operations to a Site Reliability Engineering (SRE) model. Now, entire environments are built and managed by code (commonly known as Infrastructure-as-Code, IaC), making them much more adaptable to cloud-based enterprise demands.

One the first products deployed in the new IaC-based environments was VMware vRealize® Log Insight™ (vRLI). This is a platform that enables teams to easily and effectively parse through logs gathered from the virtual infrastructure layer based on VMware vSphere® and VMware vCloud Director® as well as logs received from physical devices such networking and storage equipment.

Ensuring everything was up to code

Previous CPE environments used a single vRLI server per capacity footprint. This resulted in smaller failure domains, and lacked the significant benefits of clustering (reduced maintenance downtime, fewer ‘servers’ to manage, more agent groups).

Traditional vRLI legacy implementations were proving ineffectual for gaining insight into VMware NSX®, VMware vCenter®, and other environments.

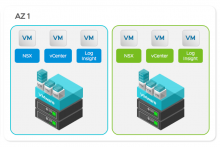

The conversion to an IaC model transformed what was possible with vRLI thanks to automation and version control. Today, the system has the ability to better absorb burst traffic, requires fewer vRLI servers and agent groups to manage, allows for zero-downtime for maintenance/upgrades*, and offers increased resiliency in case of underlying infrastructure failure**. Teams can also apply tags at ingestion. Tags enable engineers to mark certain events based on source log files and environments (Availability Zones, AZs), and can be applied at different locations (vRLI agent, ingestion VIP, and event forward).

The IaC vRLI model offers numerous benefits

Risks (and maximums) we could live with

As with any technology, our team had to agree on internally acceptable risks and maximums. With more than 200 defined and active vRLI alerts, there is a risk of a single point of failure. The solution to that problem is to have all events from all the infrastructure sections forwarded to a single place. This made troubleshooting and correlation easier.

We also employ webhooks. Alerts triggered by vRLI are sent to a custom web server through a webhook, which then converts the alert data format and pushes it to our monitoring system over the API. Thus, alerts generated by vRLI appear properly formatted in the monitoring system and teams don’t have to rely on email parsers. The drawbacks are that alerting will not be available on trivia-level logs, and alerts can be missed to due to Availability Zone isolation or issues with the central cluster.

Due to the underlying hardware, deployments are limited to eight vCPUs (medium-sized nodes). Each node can support 5,000 events per second (EPS). This two-fold increase has enabled our team to easily scale without unnecessary resource allocation. Each cluster should have a minimum of three nodes and can support at least 15,000 EPS.

How did we manage to do it?

Overall lifecycle management has been streamlined versus the traditional approach. Clusters are deployed and configured via the API that simplifies the entire process. Central cluster management—one of the final steps in the engineering continuous integration/continuous delivery (CI/CD) pipeline—involves running pre-general-availability (pre-GA) bits 24/7, and requires frequent upgrades depending on pipeline demands. In contrast, availability zone clusters run the latest GA release and require infrequent upgrades.

The Linux agent installation is managed by Puppet, and configuration is managed by the vRLI server itself. The agent configuration for log forwarding is described on an agent group level inside vRLI. As soon as the agent group configuration is changed, it is pushed dynamically to the agents, members of the respective agent group.

The IaC-based vRLI platform proved that the switch from traditional methodologies can have a significant, and exceptionally positive, impact on IT operations. It also serves as a prototype for future transitions in other areas.

*Native User Datagram Protocol (UDP) syslog traffic can still be lost during VIP migration between nodes

**Logs located on the offline node will be unavailable, but ingestion will continue

VMware on VMware blogs are written by IT subject matter experts sharing stories about our digital transformation using VMware products and services in a global production environment. Contact your sales rep or vmwonvmw@vmware.com to schedule a briefing on this topic. Visit the VMware on VMware microsite and follow us on Twitter.