Have you ever wondered what it takes to build, deploy, and verify 2,000 applications every single day? In this blog post, we will look at this challenge and at how VMware’s Bitnami team uses VMware Image Builder to automate content production and, as a result, has dramatically boosted its productivity. But let’s start from the beginning and explore what it takes to build and ship software to users.

The curse of the universal software publisher

Bitnami’s motto has always been to make open source software (OSS) easy to use. That commitment accounts for the billions of Bitnami OSS downloads every year. Corporations as well as individual users know that our software is trusted, safe, and production-grade. Independent software vendors (ISVs), however, want to make their software available to everyone, everywhere, and therefore need to reach the largest audience possible. This aligns with Bitnami’s other, less well-known motto: to make secure, universal publishing of software easier for ISVs.

Let’s consider that statement. If you’re an ISV and need to reach the largest number of customers possible, what does this entail? For starters, you probably need your application to be distributed as a container image, perhaps alongside a number of other dependent images, and you will then need to make those images available on places like Docker Hub or cloud provider container registries (e.g., Google, Amazon, or Microsoft). To make installation easier, you’ll need to package your software as a Helm chart or Carvel package. And what about virtual machines (VMs)? Those require your software to be available, in different VM formats, on marketplaces such as VMware’s or those of the public cloud vendors. Finally, your software may need to run at the edge or on IoT devices, so multi-architecture support is crucial.

To make sure your software works in all of these permutations, it must be tested. This is particularly true for runtime platforms such as Kubernetes engines, whose behavior depends heavily on the specific implementation. If software isn’t tested properly, your reputation as an ISV may suffer. Therefore, verification must be done for every single version of your software, especially when a vulnerability is found in your product or any of its dependencies (at least for all long-term support versions). In that case, you need a release process that fans out fixes to multiple targets, and you need to do it quickly enough to keep your customers safe and happy.

Now imagine the above processes executed for more than 200 heterogeneous OSS applications. Needless to say, this universal-software-producer scenario would be totally overwhelming for the typical ISV. Luckily, that’s exactly what we do for the Bitnami Public Catalog and VMware Application Catalog. In fact, enterprise customers get à la carte versions of these permutations built on their own customized base images. This is our greatest challenge, but also our biggest value proposition for ISVs.

The challenge of verifying and producing content at scale

Undoubtedly, from an ISV’s perspective, there is tremendous value in offloading all these content production, verification, and publishing processes to an experienced partner like VMware. This value comes both from the producing and consuming sides:

- Producer value – VMware takes care of an enormously hard, high-cardinality software production problem: packaging, verifying, and publishing multiple software versions, for multiple target architectures, in multiple packaging formats, and to multiple distribution destinations.

- Consumer value – VMware makes sure that the software is fully tested and functional, compliance-ready, does not contain malware or vulnerabilities, and is shipped with a detailed software bill of materials (SBoM).
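To make “high-cardinality” concrete, the sketch below enumerates a build matrix as the Cartesian product of versions, architectures, packaging formats, and destinations. The dimension values are hypothetical placeholders chosen for illustration, not Bitnami’s actual lists.

```python
from itertools import product
from typing import List, Sequence, Tuple

# Hypothetical dimensions for a single application; real catalogs use
# their own lists, and not every combination is valid.
VERSIONS = ["1.x", "2.x"]  # supported release lines
ARCHITECTURES = ["amd64", "arm64"]
FORMATS = ["container-image", "helm-chart", "carvel-package", "vm"]
DESTINATIONS = ["docker-hub", "cloud-registry", "marketplace"]


def build_matrix(*dimensions: Sequence[str]) -> List[Tuple[str, ...]]:
    """Return every combination of the given dimensions (their Cartesian product)."""
    return list(product(*dimensions))


matrix = build_matrix(VERSIONS, ARCHITECTURES, FORMATS, DESTINATIONS)
print(len(matrix))        # 2 * 2 * 4 * 3 = 48 combinations for one application
print(len(matrix) * 200)  # 9600 if the same matrix applied to 200 applications
print(matrix[0])          # ('1.x', 'amd64', 'container-image', 'docker-hub')
```

In practice, a matrix like this would be pruned (a VM image, for instance, is not pushed to Docker Hub), but even a pruned matrix grows multiplicatively with every new dimension.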

This is a big challenge. Until a few months ago, it was also very expensive: it took many thousands of hours of manual work and required us to sustain overscaled supply chains and dedicated, gigantic systems. A fixed platform able to sustain the cardinality of the problem described above is neither simple nor pleasant to maintain. GitOps is not fun when data has been piling up for years and your Git repositories grow beyond 4 GB. Similarly, continuous integration becomes a chore when your Jenkins instances have grown into unsustainable monsters.

These pains are why, a year and a half ago, a small team set out to modernize Bitnami’s content production supply chain. The goal was to replace the old system with a highly scalable, fully automated content production software supply chain able to package, verify, and publish software for any platform, format, or architecture, while letting external ISVs use these services through a SaaS subscription model.

Meet VMware Image Builder

The result of that work is VMware Image Builder, a complete revamp of the Bitnami content production supply chain. It is now offered as a SaaS solution that any company looking to create and distribute software can use on its own.

The picture above shows a high-level view of the architecture of this content platform. VMware Image Builder is built on modern engineering principles such as domain-driven design, event-driven architecture, and iterative development, with each microservice targeting a highly specific, well-defined bounded context. At the center is a messaging bus that decouples all the services, which gives the platform a high degree of scalability and keeps it extensible. While the platform might look very complex at first glance (and today runs in the cloud), the whole platform can also run on-premises.
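The decoupling a central bus provides can be illustrated with a minimal publish/subscribe sketch. This is a synchronous, in-process stand-in written for illustration only; the real platform uses a networked, asynchronous messaging layer, and the topic name here is hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class MessageBus:
    """Minimal in-process publish/subscribe bus (illustrative only).

    Publishers address a topic name, never a specific service, so new
    consumers can be added without touching the producer.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver `message` to every subscriber of `topic`; return how many received it."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
received: List[dict] = []
bus.subscribe("task.created", received.append)               # e.g. a worker service
bus.subscribe("task.created", lambda m: print("audit:", m))  # e.g. an audit service
delivered = bus.publish("task.created", {"task": "provision"})
print(delivered)  # 2
```

Adding the second subscriber required no change to the publisher, which is the extensibility property described above.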

All our microservices are built on Spring Boot and designed with API-first principles. Depending on how a service is going to be consumed, OpenAPI schemas define a REST interface for synchronous consumption, while AsyncAPI schemas define an asynchronous interface. Internally, all communication between services goes through the asynchronous layer to maximize scalability, while a Spring Cloud Gateway instance exposes our public APIs for other companies to integrate with. (Little-known fact: VMware Image Builder’s public API is the highest-scored REST API within the whole of VMware.) These public APIs can be changed dynamically, without restarting the gateway, thanks to a Spring Cloud Gateway plug-in that we developed internally.
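The internal gateway plug-in isn’t described in detail here, so the following is only a generic sketch of the underlying idea: a route table that can be swapped as a whole at runtime, so routing changes take effect without a restart. It is written in Python rather than Spring, and the paths and upstream hostnames are hypothetical.

```python
import threading
from typing import Dict, Optional


class RouteTable:
    """Hypothetical gateway route table that can be replaced while serving traffic."""

    def __init__(self, routes: Dict[str, str]) -> None:
        self._lock = threading.Lock()
        self._routes: Dict[str, str] = dict(routes)

    def resolve(self, path: str) -> Optional[str]:
        """Return the upstream URL for the longest path prefix that matches `path`."""
        routes = self._routes  # one snapshot per lookup, even if reload() runs concurrently
        matches = [p for p in routes if path == p or path.startswith(p.rstrip("/") + "/")]
        if not matches:
            return None
        return routes[max(matches, key=len)]

    def reload(self, routes: Dict[str, str]) -> None:
        """Swap in a complete new mapping; writers are serialized by the lock."""
        new_routes = dict(routes)
        with self._lock:
            self._routes = new_routes


table = RouteTable({"/v1/pipelines": "http://orchestrator:8080"})
print(table.resolve("/v1/pipelines/42"))  # http://orchestrator:8080
table.reload({
    "/v1/pipelines": "http://orchestrator:8080",
    "/v1/vulnerabilities": "http://vulnerability:8080",
})
print(table.resolve("/v1/vulnerabilities/abc"))  # http://vulnerability:8080
```

Replacing the whole mapping instead of mutating it in place means each lookup sees either the old routes or the new ones, never a half-updated mix.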

Our content production team builds pipelines that describe how to package, verify, and publish specific software in different formats. You can think of these pipelines as recipes that are sent through the gateway to VMware Image Builder, where an orchestration engine reads each recipe and splits it into multiple individual tasks that the different microservices consume and execute asynchronously. This continues until the whole pipeline is complete and either the content has been produced or a failure has been raised with useful information for the user.
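As a rough illustration of the recipe-to-tasks flow, here is a minimal sketch that splits a recipe into tasks, dispatches each one to a registered action, passes outputs forward, and stops at the first failure with a readable error. It runs tasks sequentially in one process for simplicity, whereas the real engine distributes them asynchronously across microservices. The recipe schema, action names, and registry are all hypothetical; the sample recipe is modeled on a Redis Helm chart pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical action registry, loosely modeled on pluggable actions;
# none of these names come from the real VMware Image Builder API.
Action = Callable[[dict], dict]
ACTIONS: Dict[str, Action] = {}


def action(name: str) -> Callable[[Action], Action]:
    """Decorator that registers a function as a named pipeline action."""
    def register(fn: Action) -> Action:
        ACTIONS[name] = fn
        return fn
    return register


@dataclass
class PipelineResult:
    succeeded: bool
    completed: List[str] = field(default_factory=list)
    error: str = ""


def run_pipeline(recipe: dict) -> PipelineResult:
    """Split a recipe into tasks and run them in order, stopping at the first failure."""
    completed: List[str] = []
    context: dict = {}  # outputs of earlier tasks, visible to later ones
    for task in recipe["tasks"]:
        name = task["action"]
        handler = ACTIONS.get(name)
        if handler is None:
            return PipelineResult(False, completed, f"unknown action '{name}'")
        try:
            # Task-specific params override values produced by earlier tasks.
            context.update(handler({**context, **task.get("params", {})}))
        except Exception as exc:  # report a useful message instead of crashing
            return PipelineResult(False, completed, f"{name} failed: {exc}")
        completed.append(name)
    return PipelineResult(True, completed)


@action("provision")
def provision(params: dict) -> dict:
    return {"cluster": f"{params['platform']}-{params['size']}"}


@action("deploy")
def deploy(params: dict) -> dict:
    return {"release": f"{params['chart']}@{params['cluster']}"}


@action("publish")
def publish(params: dict) -> dict:
    return {"published_to": params["registry"]}


recipe = {"tasks": [
    {"action": "provision", "params": {"platform": "tkg-vsphere7", "size": "small"}},
    {"action": "deploy", "params": {"chart": "redis-6.2.12"}},
    {"action": "publish", "params": {"registry": "harbor.example.com"}},
]}
print(run_pipeline(recipe))
# PipelineResult(succeeded=True, completed=['provision', 'deploy', 'publish'], error='')
```

Registering actions by name keeps the orchestrator unaware of what each step does, so new steps can be plugged in without changing `run_pipeline`.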

An example of a pipeline could be: “I need to build Redis 6.2.12 packaged as a Helm chart, and then verify the Helm chart on VMware Tanzu Kubernetes Grid running on vSphere 7. If that works, I want to publish the verified Helm chart to my corporate Harbor OCI registry.” Most recipes like this go through at least three steps:

- Provisioning – Verifying software requires a system where it can run. This can be a vCenter Server installation, a container runtime environment, or a Kubernetes distribution on some cloud provider. The provisioning microservice creates and destroys these environments, which are ephemeral and last only a few minutes or hours. Creating environments on the fly reduces cost and avoids the errors that come with reusing long-lived environments, which can also leak information and raise security concerns. Teams can provision clusters in standardized sizes such as small, medium, and large, which also helps to save resources.

Another cool feature of this provisioning system is the ability to pool environments. Some platforms take longer than others to provision (e.g., OpenShift on Azure is notoriously slow). The provisioning service provides smart pooling capabilities: it always tries to keep a warm pool of fresh instances ready to use, which reduces pipeline processing time considerably.

- Deployment – Once you have a cluster, the software can be installed. The deployment microservice takes an application in a given packaging format (e.g., Carvel, Helm, container image, VM) and deploys it to the target environment provisioned in the previous step. Because a rapid feedback loop is very important, VMware Image Builder can deploy the same application multiple times in parallel. So long as the platform (e.g., Kubernetes with multiple namespaces) and the application support it, this speeds up verification.

- Execution – Once the application has been deployed, VMware Image Builder executes the tasks from the pipeline. For example, these tasks could be: packaging the application into cloud native formats like container images or VMs, running static analysis or vulnerability scanning with popular tools like Trivy or Grype, running functional tests with frameworks like Cypress to verify that the application works in the target environment, or publishing the verified content to an Open Container Initiative (OCI) repository.

Some of these tasks do not need an environment to be provisioned or an application to be deployed (e.g., running a Grype scan), so they tend to be very quick. Execution tasks are implemented in a framework named Puzzle, which allows teams and engineers to create their own pluggable actions to be executed within the platform. Think of them as GitHub Actions or Jenkins plug-ins. Today, there are about 25 different Puzzle actions that engineers can choose from.
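The warm-pool idea from the provisioning step can be sketched as a small queue of ready, single-use environments per (platform, size) pair that is topped up after every hand-out. This is an illustrative sketch only: the size presets, node counts, and platform identifiers are hypothetical, and `_provision` stands in for the slow call that would actually create a cluster. A real service would refill the pool in the background rather than inline.

```python
import itertools
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Dict, Tuple

# Hypothetical size presets (node counts); the real presets are not documented here.
SIZES: Dict[str, int] = {"small": 1, "medium": 3, "large": 5}

Key = Tuple[str, str]  # (platform, size)


class WarmPool:
    """Keep a few pre-provisioned, never-used environments per (platform, size).

    Environments are single-use: there is no release() back into the pool,
    because used environments are destroyed rather than recycled.
    """

    def __init__(self, target: int = 2) -> None:
        self.target = target
        self._ready: DefaultDict[Key, Deque[str]] = defaultdict(deque)
        self._ids = itertools.count(1)
        self.cold_starts = 0  # acquisitions that had to wait for provisioning

    def _provision(self, platform: str, size: str) -> str:
        if size not in SIZES:
            raise ValueError(f"unknown size {size!r}")
        # Stand-in for the slow call that actually creates a cluster.
        return f"{platform}-{size}-{SIZES[size]}n-{next(self._ids)}"

    def refill(self, platform: str, size: str) -> None:
        queue = self._ready[(platform, size)]
        while len(queue) < self.target:
            queue.append(self._provision(platform, size))

    def acquire(self, platform: str, size: str) -> str:
        """Hand out a fresh environment, provisioning one on demand if the pool is empty."""
        queue = self._ready[(platform, size)]
        if queue:
            env = queue.popleft()
        else:
            self.cold_starts += 1
            env = self._provision(platform, size)
        self.refill(platform, size)  # top the pool back up for the next pipeline
        return env


pool = WarmPool(target=2)
pool.refill("openshift-azure", "medium")  # done ahead of time, before pipelines arrive
env = pool.acquire("openshift-azure", "medium")
print(env, pool.cold_starts)  # openshift-azure-medium-3n-1 0
```

The pipeline that acquired `env` did not wait for provisioning at all; only the first request for a platform with an empty pool pays the cold-start cost.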

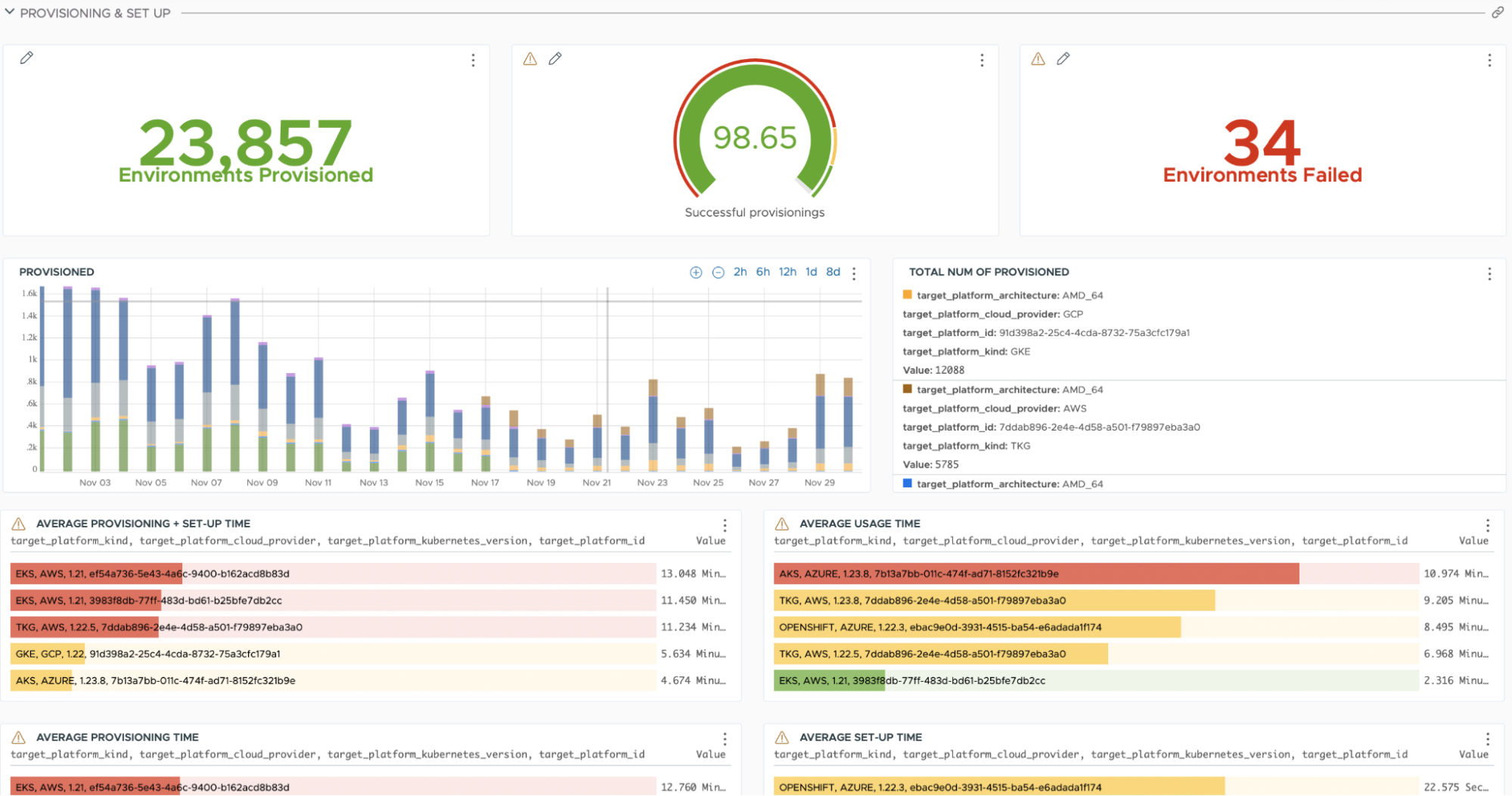

Provisioning, deployment, and execution are the three services that form the backbone of the main content processing flow, and, looking at the numbers, they are doing amazingly well. VMware Image Builder uses VMware Aria Operations for Applications to monitor the SaaS platform. In November 2022 alone, VMware Image Builder provisioned more than 20,000 clusters of different flavors and sizes. Diving into the actions executed during that month, we can see that there were more than 60,000 application deployments.
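As a back-of-the-envelope check, averaging the November 2022 figures over the month’s 30 days gives the rates below. Both totals are lower bounds (“more than”), so the daily figures are minimum averages; the last ratio is only indicative and assumes every deployment ran on one of those provisioned clusters. The daily deployment rate is roughly in line with the 2,000 applications per day mentioned at the start of this post.

```latex
\frac{20{,}000\ \text{clusters}}{30\ \text{days}} \approx 667\ \text{clusters/day},
\qquad
\frac{60{,}000\ \text{deployments}}{30\ \text{days}} = 2{,}000\ \text{deployments/day},
\qquad
\frac{60{,}000}{20{,}000} = 3\ \text{deployments per cluster}
```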

Interoperability and the secure software supply chain

In addition to the core services associated with processing a pipeline, some other fundamental services provide capabilities that are of enormous importance to our internal and external customers.

Most actions that get executed generate important metadata, such as test reports, vulnerability scanning results, or a bill of materials. This data feeds other services, such as the vulnerability and inventory microservices, which are also accessible externally via APIs. The vulnerability service can be used to query vulnerability and Common Vulnerabilities and Exposures (CVE) data for different artifacts and organizations. The inventory microservice, meanwhile, provides fully automated product discovery: VMware Image Builder automatically detects, tracks, and creates dependency graphs for all of the applications it processes, providing bills of materials and auditing/inventory traceability. Needless to say, using inventory and vulnerability data together is fundamental to a secure software supply chain.
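Why combining inventory and vulnerability data matters can be shown with a small graph walk: given dependency edges (as a bill of materials would record them) and a CVE, find every artifact that directly or transitively includes a vulnerable component. The artifact names and the placeholder CVE ID are hypothetical, and the breadth-first search is a generic technique, not a description of the inventory service’s internals.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set

# Hypothetical inventory: artifact -> direct dependencies, as an SBOM might list them.
DEPENDENCIES: Dict[str, List[str]] = {
    "helm-chart/redis": ["image/redis", "image/redis-exporter"],
    "image/redis": ["pkg/redis-server", "pkg/openssl"],
    "image/redis-exporter": ["pkg/redis-exporter"],
    "vm/redis": ["pkg/redis-server", "pkg/openssl"],
}
# Hypothetical vulnerability data: component -> CVE IDs (placeholder IDs).
VULNERABILITIES: Dict[str, List[str]] = {"pkg/openssl": ["CVE-0000-0001"]}


def reverse_edges(deps: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Map each component to the artifacts that depend on it directly."""
    parents: Dict[str, Set[str]] = defaultdict(set)
    for artifact, children in deps.items():
        for child in children:
            parents[child].add(artifact)
    return parents


def affected_by(cve: str, deps: Dict[str, List[str]],
                vulns: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first walk up the dependency graph from every component with `cve`."""
    vulnerable = {c for c, ids in vulns.items() if cve in ids}
    parents = reverse_edges(deps)
    affected = set(vulnerable)
    queue = deque(vulnerable)
    while queue:
        for parent in parents.get(queue.popleft(), set()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


print(sorted(affected_by("CVE-0000-0001", DEPENDENCIES, VULNERABILITIES)))
# ['helm-chart/redis', 'image/redis', 'pkg/openssl', 'vm/redis']
```

Note that the Helm chart is flagged even though it never references OpenSSL directly; only the dependency graph reveals that it ships an affected image.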

Complementing these two critical services, a webhooks engine can be used to react to events that happen while software is being packaged, verified, and published. This helps teams trigger integrations, such as posting a message to a Slack channel when a new vulnerability is detected or promoting a VM to multiple hyperscaler marketplaces, to name just a couple of examples.
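A webhooks engine boils down to matching each platform event against registered subscriptions and delivering a payload to every match. The sketch below assumes hypothetical event-type names, URLs, and payload shape, and injects the transport function so it runs offline; a real engine would also handle retries, authentication, and delivery logs.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class Webhook:
    url: str
    events: Set[str] = field(default_factory=set)  # event types this subscriber wants


class WebhookEngine:
    """Fan out platform events to every webhook registered for that event type."""

    def __init__(self, send: Callable[[str, bytes], None]) -> None:
        self._hooks: List[Webhook] = []
        self._send = send  # injected transport, e.g. an HTTP POST in a real system

    def register(self, url: str, *events: str) -> None:
        self._hooks.append(Webhook(url, set(events)))

    def emit(self, event_type: str, data: dict) -> int:
        """Send one JSON payload per matching webhook; return the number sent."""
        body = json.dumps({"type": event_type, "data": data}, sort_keys=True).encode()
        matching = [hook for hook in self._hooks if event_type in hook.events]
        for hook in matching:
            self._send(hook.url, body)
        return len(matching)


sent = []
engine = WebhookEngine(send=lambda url, body: sent.append((url, body)))
engine.register("https://hooks.example.com/slack-relay", "vulnerability.detected")
engine.register("https://hooks.example.com/marketplaces", "artifact.published")
count = engine.emit("vulnerability.detected", {"artifact": "image/redis", "cve": "CVE-0000-0001"})
print(count, sent[0][0])  # 1 https://hooks.example.com/slack-relay
```

Here the hypothetical `slack-relay` endpoint would be responsible for turning the generic payload into a Slack message, keeping the engine itself unaware of any particular integration.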

A content platform for developers by developers

During the past few years, we have seen how the shift-left movement has given developers more capabilities, ownership, and responsibilities. This also applies to continuous integration and continuous delivery (CI/CD). While in the past the road to production was owned by a few dedicated people running large CI/CD systems like Jenkins, more and more of these responsibilities have shifted to developers, who can now run their CI/CD pipelines alongside their code. GitHub and GitLab are the two prime examples: both started as source code management systems and have become powerful, very popular CI/CD platforms with GitHub Actions and GitLab Pipelines.

For Bitnami in particular, being close to developers is critical. As one of the most popular software distributors in the world, every month we get hundreds of pull requests from external contributors with changes to our packaged OSS software. These contributions need to be verified before they can be trusted. In the past, this verification was a very painful process, as each and every contribution had to be manually copied to the internal supply chains for verification. This kept several engineers tied up with manual verification work for their entire work week.

To get closer to external contributors, we decided to implement a GitHub Action that integrates VMware Image Builder with GitHub. This GitHub Action submits the pipelines to VMware Image Builder every time an external user creates a new contribution, then retrieves the pipeline execution output from VMware Image Builder and displays it to the external users in a friendly format. As a result, users get immediate feedback on any regression in their contributions without having to wait for an engineer to manually review and test their code. Furthermore, our engineers no longer have to replicate users’ contributions just to find out that they break some functionality.
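The submit-then-report loop described above can be sketched as “submit, poll until a terminal state, summarize.” This is not the actual GitHub Action’s code: the `submit` and `get_status` callables, the state names, and the summary format are hypothetical, and a fake client is used so the sketch runs without network access.

```python
import time
from typing import Callable, Dict

# Hypothetical terminal states; the real API's state names may differ.
TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def submit_and_wait(
    submit: Callable[[dict], str],
    get_status: Callable[[str], Dict[str, str]],
    pipeline: dict,
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, str]:
    """Submit a pipeline, then poll its status until it reaches a terminal state."""
    execution_id = submit(pipeline)
    deadline = clock() + timeout_seconds
    while True:
        status = get_status(execution_id)
        if status["state"] in TERMINAL_STATES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"execution {execution_id} did not finish in time")
        sleep(poll_seconds)


def summarize(status: Dict[str, str]) -> str:
    """Render a one-line, contributor-friendly summary (e.g. for a PR check)."""
    message = status.get("message")
    return f"{status['state']}: {message}" if message else status["state"]


# Fake client standing in for the real service, so the sketch runs offline.
responses = iter([
    {"state": "RUNNING"},
    {"state": "RUNNING"},
    {"state": "FAILED", "message": "functional tests failed"},
])
result = submit_and_wait(
    submit=lambda pipeline: "exec-1",
    get_status=lambda execution_id: next(responses),
    pipeline={"tasks": []},
    sleep=lambda seconds: None,  # skip real waiting in this demo
)
print(summarize(result))  # FAILED: functional tests failed
```

Injecting `sleep` and `clock` keeps the polling logic testable; in a workflow run, the defaults would simply wait in real time.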

On average, an external contribution used to take a couple of days of manual work to reproduce and validate. With hundreds of external pull requests received every month, this level of automation is a huge time saver, not only for us but also for ISVs. We believe that having a GitHub Action on the GitHub Marketplace that lets them package, verify, and publish their software, whether that software is a VM or a Helm chart, and whether it has to run as a container image or within a vCenter Server, is enormously valuable.

What comes next?

There has been a considerable investment in building a platform that benefits our customers and engineers alike, but this is just the beginning. Freeing our engineers from the tedious, time-consuming work they used to do has allowed us to focus their talents on research and development, which has already proved incredibly valuable. Our revenue has grown steeply, which shows that freeing smart people from tedious manual work and letting them focus on hard problems can bring big benefits.

In the short term, we aim to move more of the manual work that is still being done to this new platform. Completing the integration with VMware Application Catalog and integrating VMware Image Builder with other core VMware products are very exciting and important initiatives that are also among our short-term goals. Additionally, we are looking to onboard internal and external customers as we continue adding new actions for automating new flows. If you are interested in collaborating with us, please fill out this form and one of our experts will get in touch with you!