As more organizations run containers in production, Kubernetes has pulled away to become the leading container orchestration platform. Likewise, the Wavefront cloud-native and analytics platform has pulled away to become the top choice for monitoring Kubernetes. Here are several reasons why.

Wavefront now supports all the most popular Kubernetes implementations: PKS, Cloud PKS, OpenShift, Amazon EKS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS). All are supported by our enhanced Kubernetes integration. In previous blogs, we focused on our PKS, Cloud PKS, and related integrations. Now let’s look at some of the other Kubernetes implementations, starting with our OpenShift integration.

OpenShift Monitoring

Our newly released OpenShift integration provides full visibility into the performance of OpenShift, an open source container application platform built around Docker containers and orchestrated by Kubernetes. The Wavefront OpenShift integration uses our enhanced Kubernetes Collector to monitor OpenShift clusters. When you deploy our OpenShift integration, you get metrics about your Kubernetes infrastructure and the state of the resources (pods, services, etc.). Thus, DevOps teams (including SREs, Kubernetes Operators, and Developers) get total insight into the health of their OpenShift implementation.

Replacing Heapster

The Wavefront Kubernetes Collector previously used Heapster, a popular open source tool, to collect metrics from Kubernetes. Heapster provided two main functions:

- An API server that enabled the use of Horizontal Pod Autoscaler (HPA) to scale workloads based on metrics

- Metrics collection with support for third-party data sinks such as Wavefront to store the collected data

The Kubernetes maintainers have now retired Heapster and replaced it with metrics-server, a slimmed-down Heapster that provides only the memory and CPU metrics required for the HPA and other core Kubernetes use cases.

Monitoring vendors that relied on Heapster had to develop alternate solutions for collecting Kubernetes metrics.

Wavefront Kubernetes Collector

Wavefront enhanced its Kubernetes Collector to offer a comprehensive solution for monitoring the health of any Kubernetes infrastructure and the container workloads running on it. The Wavefront Kubernetes Collector offers all the functionality you previously got from Heapster, and much more, such as:

- Extensive metrics collection of the Kubernetes clusters, nodes, pods, containers and namespaces

- Support for scraping Prometheus metric endpoints natively

- Auto-discovery of pods and services that expose Prometheus metrics (using annotations/configuration rules)

- Support for sending data to Wavefront using the Wavefront Proxy or direct ingestion

Moreover, Wavefront also released an HPA adapter so you can autoscale your Kubernetes workloads based on any metrics in Wavefront.
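As a rough illustration of what metric-driven autoscaling computes, here is a minimal sketch of the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. The function name and the example numbers are hypothetical, and the sketch leaves out details such as the HPA's tolerance band and min/max replica limits. It does not show how the Wavefront HPA adapter itself works.

```python
import math


def desired_replicas(current_replicas, current_value, target_value):
    """Kubernetes HPA scaling rule: ceil(current * observed / target).

    Simplified sketch: ignores the tolerance band, stabilization windows,
    and the min/max replica bounds that a real HPA also applies.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    return math.ceil(current_replicas * (current_value / target_value))


# Hypothetical example: 4 replicas, metric at 200 against a target of 100.
print(desired_replicas(4, 200, 100))
```

In this reading, any metric that can be expressed as an observed value and a target can drive the same calculation.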

Now let’s dive into the Wavefront Kubernetes Collector that’s the foundation of the new Wavefront Kubernetes integrations.

Core Kubernetes Metrics

The Wavefront Kubernetes Collector is a cluster-level agent that runs as a pod within your Kubernetes cluster. Once deployed, it discovers the nodes in your cluster and starts collecting metrics (see Table 1) from the Kubelet Summary API on each node.

Table 1. Kubelet Summary API metrics collected by the Wavefront Kubernetes Collector on each node

Additionally, our Kubernetes integrations use Kube State Metrics to provide total visibility into the state of Kubernetes resources. You can monitor these metrics with the pre-packaged dashboards that are available in Wavefront.

These metrics provide DevOps teams full visibility into the health of the Kubernetes infrastructure and workloads.
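To make the Kubelet Summary API data more concrete, the sketch below flattens a summary document into metric points. The JSON is a hand-written sample shaped like a Summary API response (a `node` object plus a `pods` list, each with CPU and memory usage fields), and all values are invented. The metric names, tag names, and the `flatten_summary` helper are hypothetical and are not the names the Wavefront Kubernetes Collector actually emits.

```python
import json

# Hand-written sample shaped like a Kubelet Summary API response.
# All names and values here are invented for illustration.
SAMPLE_SUMMARY = """
{
  "node": {
    "nodeName": "node-1",
    "cpu": {"usageNanoCores": 250000000},
    "memory": {"workingSetBytes": 1073741824}
  },
  "pods": [
    {
      "podRef": {"name": "web-0", "namespace": "default"},
      "containers": [
        {
          "name": "app",
          "cpu": {"usageNanoCores": 50000000},
          "memory": {"workingSetBytes": 104857600}
        }
      ]
    }
  ]
}
"""


def flatten_summary(summary):
    """Turn a summary document into (metric_name, value, tags) tuples.

    Metric and tag names are hypothetical, chosen only for this sketch.
    """
    points = []
    node = summary["node"]
    node_tags = {"nodename": node["nodeName"]}
    points.append(("node.cpu.usage_nanocores",
                   node["cpu"]["usageNanoCores"], node_tags))
    points.append(("node.memory.working_set_bytes",
                   node["memory"]["workingSetBytes"], node_tags))

    for pod in summary.get("pods", []):
        ref = pod["podRef"]
        for container in pod.get("containers", []):
            tags = {
                "nodename": node["nodeName"],
                "namespace": ref["namespace"],
                "pod": ref["name"],
                "container": container["name"],
            }
            points.append(("container.cpu.usage_nanocores",
                           container["cpu"]["usageNanoCores"], tags))
            points.append(("container.memory.working_set_bytes",
                           container["memory"]["workingSetBytes"], tags))
    return points


points = flatten_summary(json.loads(SAMPLE_SUMMARY))
for name, value, tags in points:
    print(name, value, tags)
```

Tagging each point with node, namespace, pod, and container is one way to keep the same metric name queryable at every level of the cluster hierarchy.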

Annotation-Based Discovery

The Wavefront Kubernetes Collector supports auto-discovery of pods and services that expose Prometheus metric endpoints based on annotations or discovery rules. Annotation-based discovery is enabled by default. See Table 2 for the annotations that apply.

Table 2. The Wavefront Kubernetes Collector annotations

To demonstrate this, let’s deploy the NGINX Ingress Controller provided here. Notice that the NGINX pod includes the Prometheus annotations:

    $ kubectl describe pod nginx-ingress-controller-67d559b6b9-mdhh6 -n ingress-nginx
    Name:         nginx-ingress-controller-67d559b6b9-mdhh6
    Namespace:    ingress-nginx
    Node:         gke-test-cluster-default-pool-c4371a1d-tngh/10.168.0.2
    Start Time:   Wed, 23 Jan 2019 16:39:57 -0800
    Labels:       app.kubernetes.io/name=ingress-nginx
                  app.kubernetes.io/part-of=ingress-nginx
    Annotations:  prometheus.io/port=10254
                  prometheus.io/scrape=true

The Wavefront Kubernetes Collector logs show that the collector auto-discovers the NGINX pod and configures a Prometheus scrape target:

manager.go:72] added provider: prometheus_metrics_provider: pod-nginx-ingress-controller-67d559b6b9-mdhh6

The collected metrics are immediately available in Wavefront (see Figure 1).

Figure 1. NGINX Ingress Controller metrics collected by Wavefront Kubernetes Collector
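The annotation-driven flow above can be sketched as a small function that turns a pod's annotations into a scrape URL. This is an illustrative sketch, not the collector's actual code: the `scrape_target` helper, the pod IP, the `prometheus.io/path` and `prometheus.io/scheme` annotations with their defaults, and the choice to skip pods without a port annotation are all assumptions. Only `prometheus.io/scrape` and `prometheus.io/port` appear in the pod description above.

```python
def scrape_target(pod_ip, annotations):
    """Return a Prometheus scrape URL for an annotated pod, or None.

    Hypothetical helper: the defaults and the skip-if-no-port behavior
    are assumptions, not documented collector behavior.
    """
    if annotations.get("prometheus.io/scrape", "").lower() != "true":
        return None  # pod has not opted in to scraping
    port = annotations.get("prometheus.io/port")
    if port is None:
        return None  # assumption: no port annotation means no target
    scheme = annotations.get("prometheus.io/scheme", "http")  # assumed default
    path = annotations.get("prometheus.io/path", "/metrics")  # assumed default
    return f"{scheme}://{pod_ip}:{port}{path}"


# Annotations copied from the NGINX pod above; the pod IP is made up.
nginx_annotations = {
    "prometheus.io/port": "10254",
    "prometheus.io/scrape": "true",
}
print(scrape_target("10.8.0.15", nginx_annotations))
```

Keeping the decision in annotations means application teams can opt a workload in or out of scraping without touching the collector's configuration.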

Rule-Based Discovery

The Wavefront Kubernetes Collector optionally supports rules that enable discovery based on labels and namespaces. You provide the rules to the collector using the optional --discovery-config flag. Rules support Prometheus scrape options similar to those mentioned above.

For example, we can monitor the DNS that is part of the Kubernetes control plane using the following rule:

    global:
      discovery_interval: 10m
    prom_configs:
    - name: kube-dns
      labels:
        k8s-app: kube-dns
      namespace: kube-system
      port: 10054
      prefix: kube.dns.

The Wavefront Kubernetes Collector auto-discovers the kube-dns pods:

    manager.go:108] loading discovery rules
    rule.go:32] rule=kube-dns-discovery type=pod labels=map[k8s-app:kube-dns]
    rule.go:62] rule=kube-dns-discovery 2 pods found
    manager.go:72] added provider: prometheus_metrics_provider: pod-kube-dns-788979dc8f-9vqkp
    manager.go:72] added provider: prometheus_metrics_provider: pod-kube-dns-788979dc8f-6dv7b

The DNS metrics are now available in Wavefront (see Figure 2).

Figure 2. Kube-DNS metrics collected by Wavefront Kubernetes Collector

Discovery rules are configured as a ConfigMap. The Wavefront Kubernetes Collector detects configuration changes automatically, so you don’t need to restart it.
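Conceptually, a discovery rule selects every pod in the given namespace whose labels include all of the rule's labels. The sketch below shows that matching logic under stated assumptions: the `Rule` and `Pod` classes and the `matches` function are hypothetical, an empty namespace is assumed to mean "any namespace", and the real collector's matching may differ. The kube-dns pod names come from the logs above; the NGINX entry is reused from the earlier example as a non-matching pod.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    """Hypothetical in-memory form of one prom_configs entry."""
    name: str
    labels: dict
    namespace: str = ""  # assumption: empty means any namespace
    port: int = 0
    prefix: str = ""


@dataclass
class Pod:
    name: str
    namespace: str
    labels: dict = field(default_factory=dict)


def matches(rule, pod):
    """True if the pod is in the rule's namespace and carries all its labels."""
    if rule.namespace and rule.namespace != pod.namespace:
        return False
    return all(pod.labels.get(key) == value
               for key, value in rule.labels.items())


rule = Rule(name="kube-dns", labels={"k8s-app": "kube-dns"},
            namespace="kube-system", port=10054, prefix="kube.dns.")

pods = [
    Pod("kube-dns-788979dc8f-9vqkp", "kube-system", {"k8s-app": "kube-dns"}),
    Pod("kube-dns-788979dc8f-6dv7b", "kube-system", {"k8s-app": "kube-dns"}),
    Pod("nginx-ingress-controller-67d559b6b9-mdhh6", "ingress-nginx",
        {"app.kubernetes.io/name": "ingress-nginx"}),
]

found = [pod.name for pod in pods if matches(rule, pod)]
print(f"rule={rule.name} {len(found)} pods found")
```

Because rules select by label rather than by pod name, the same rule keeps working as pods are rescheduled and renamed.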

Static Prometheus Scraping

The Wavefront Kubernetes Collector also supports scraping Prometheus targets based on static configurations using the --source flag. For example, to collect etcd metrics:

--source=prometheus:''?url=http://etcd-ip-address:2379/metrics
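A Prometheus endpoint such as the one above returns plain text in the Prometheus exposition format, one sample per line. The following is a minimal sketch of parsing that text, assuming simple well-formed input: it skips comment lines, does not unescape label values, and ignores timestamps. The `parse_exposition` function and the sample text are illustrative and hand-written, not output captured from etcd or code taken from the collector.

```python
import re

# One sample line: name, optional {labels}, value, optional timestamp.
SAMPLE_LINE = re.compile(
    r'^([a-zA-Z_:][a-zA-Z0-9_:]*)'   # metric name
    r'(?:\{(.*)\})?'                 # optional {label="value",...} block
    r'\s+(\S+)'                      # sample value
    r'(?:\s+-?\d+)?$'                # optional timestamp (ignored)
)
LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_exposition(text):
    """Parse exposition text into (name, labels, value) tuples.

    Simplified sketch: escape sequences in label values are left as-is.
    """
    samples = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_LINE.match(line)
        if match is None:
            continue  # skip lines this simple parser does not understand
        name, label_block, value = match.groups()
        labels = dict(LABEL.findall(label_block or ""))
        samples.append((name, labels, float(value)))
    return samples


# Hand-written sample text for illustration only.
SAMPLE = '''\
# HELP etcd_server_has_leader Whether or not a leader exists.
# TYPE etcd_server_has_leader gauge
etcd_server_has_leader 1
# TYPE http_requests_total counter
http_requests_total{code="200",method="get"} 1027
'''

samples = parse_exposition(SAMPLE)
for name, labels, value in samples:
    print(name, labels, value)
```

A production scraper would normally use a maintained Prometheus client library instead of hand-rolled regular expressions.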

Monitoring the Wavefront Kubernetes Collector

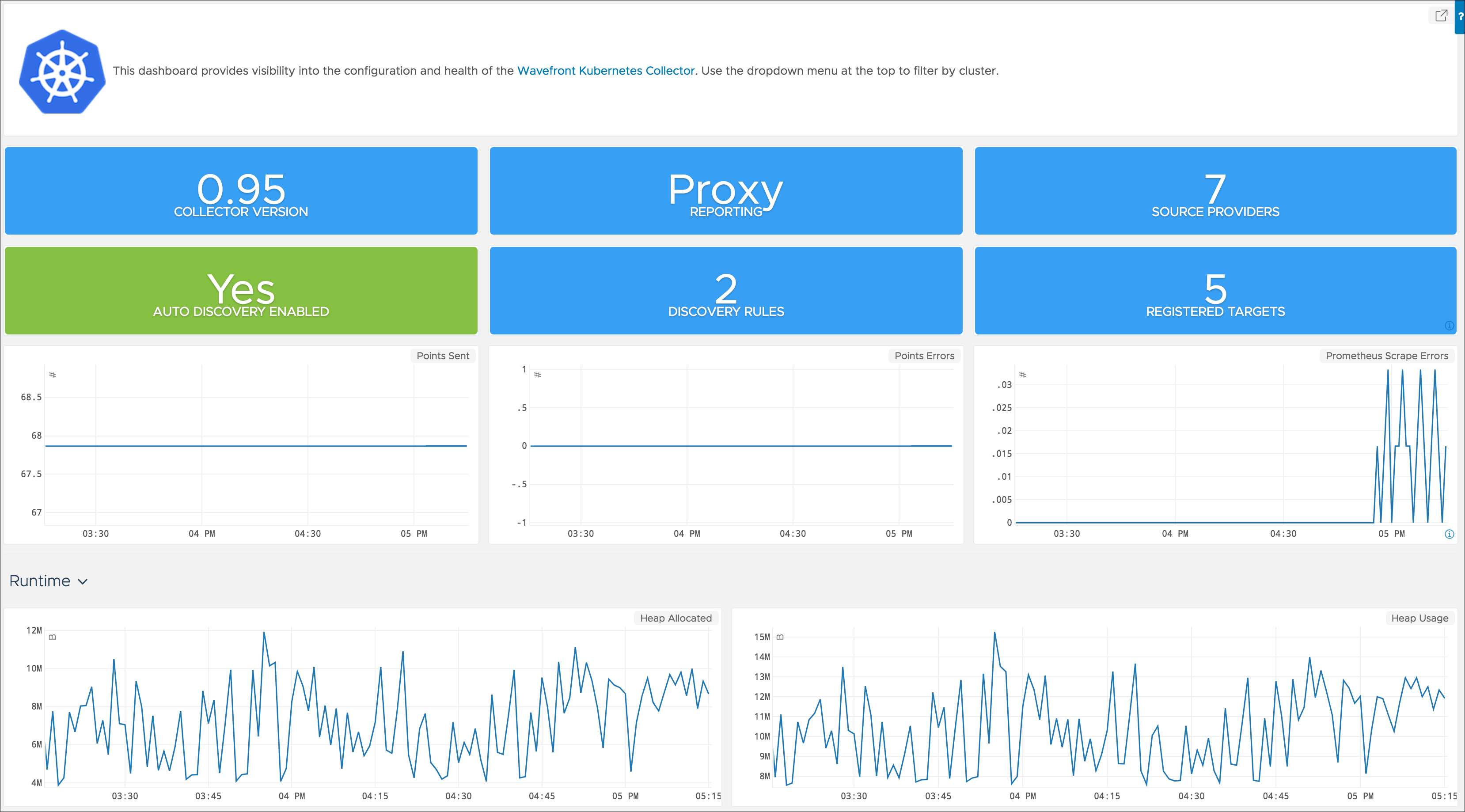

At Wavefront, we love metrics! The Wavefront Kubernetes Collector also emits metrics about its internal health, and we’ve built a handy dashboard (available under our Kubernetes integration) to monitor the Wavefront Kubernetes Collector itself:

Figure 3. The Wavefront Kubernetes Collector internal health dashboard

Conclusion

In summary, it’s effortless to collect metrics from Kubernetes using Wavefront, no matter what Kubernetes implementation you use. Stay tuned for a follow-up blog on how you can leverage any metrics within Wavefront to autoscale your Kubernetes workloads using the new Wavefront HPA adapter.

And beyond just Kubernetes health, the Wavefront platform provides full-stack visibility into the performance of containerized applications and microservices running within Kubernetes, as well as underlying cloud infrastructure. Check out the full list of our integrations here.

If you’re not yet a Wavefront customer, check out these cool features by signing up for a free trial.

The post Wavefront’s Kubernetes Observability Extends Beyond PKS, Cloud PKS, Amazon EKS, Now to OpenShift, GKE, AKS and More appeared first on Wavefront by VMware.