This is part 1 of a pair of articles about clearing bottlenecks in the software delivery lifecycle using AI.

Check out part 2 to learn how AI can help you address bottlenecks in Day 2 software operations.

The biggest use of AI in the tech sector now is code generation. Programmers love it, and it has clear benefits. But what comes after that? How much more productivity can we squeeze out of AI for software development?

The answer lies in looking at the entire end-to-end process of developing software: from idea to code to running in production and responding to the feedback you get from people using the software. This has always been the way to get better at software, and AI is making big promises here.

Improving the software delivery lifecycle (SDLC) with AI

If you’re not a programmer, you probably think that most of what developers do is sit with their fingers on the keyboard writing code. This couldn’t be further from the truth. Programmers only spend anywhere between, I’m going to say, 15% to 20% of their time actually programming. The rest of the time is spent learning, communicating, designing, testing, and planning. Much of this is done through tools, but a substantial amount is done through good old-fashioned humans talking face to face.

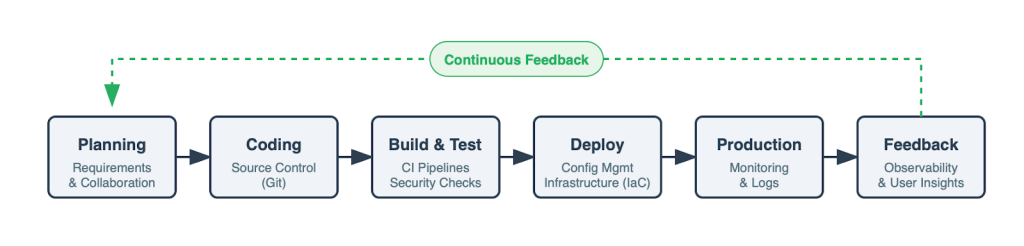

Major components of the software delivery lifecycle process

First, there are tools to track requirements, features, and bugs in the software, often integrated with collaboration tools. Then, you have tools to manage the source code as it moves through its lifecycle (version control, like git). The process is heavily automated by continuous integration/continuous delivery (CI/CD) pipelines, which use automated testing frameworks to do security and compliance checks. Configuration management tools are used to ready the application and its environment for production.

Finally, you have advanced logging and observability tools, alongside basic monitoring, to manage the application and gather feedback on its performance in production. If you’re really advanced, you have tools that help you gather feedback about how your software is used to achieve whatever goals you had at the outset. This feedback is then used in the next version of the software to improve the features.

There’s also the simple tool of “the meeting”: talking with people on the team, product managers, “the business,” and, if you’re really good, the actual users of your applications. The best tool here is usually “the whiteboard,” real or virtual, to help brainstorm and explore ideas.

You could throw in how you structure and manage the organization as well, but I’m going to leave that aside, since the way you manage your software organization is a topic all on its own. That process is also so situational that it’s difficult to generalize about it. What works at Amazon, JPMorganChase, the UK Parliament, or any given business unit at Thales varies widely.

Each of these phases of development can be optimized with AI. How that’s done is being explored and tested out right now, and I don’t think we’ll know exactly what it looks like for the next few years. We do know one thing for enterprises though: we need to make sure it’s secure.

Once you optimize each tool, which represents each step in the SDLC, you’ll encounter the process problem. That is, you’ll encounter people problems.

AI finds bottlenecks as a side effect

When it comes to optimizing a process like the SDLC, there’s an important concept to think about: bottlenecks. Each time you optimize one part of the process, you expose the next bottleneck: a step that hasn’t been optimized yet, or hasn’t been optimized enough.

If you’re not familiar with battling bottlenecks (which goes by the formal name “theory of constraints”), here’s a good summary of how this theory applies to software from Leah Brown, writing for the IT Revolution weblog:

[C]ode creation isn’t the bottleneck and hasn’t been for decades.

The current constraint in the delivery cycle is usually somewhere downstream of the coding effort: code review, integration, system test, or some other verification or validation step. Not only is generating more code unlikely to lead to greater throughput in the system, but all that unshippable inventory could throw the entire system out of balance, increasing delays.

This insight draws from Eliyahu Goldratt’s Theory of Constraints, illustrated through two characters from management literature:

Herbie, from The Goal, is the slowest scout on a hiking trip who keeps falling behind. No matter how fast the other scouts hike, the group can only move as fast as Herbie.

Brent, from The Phoenix Project, is the brilliant senior technologist with his fingers in everything. Nothing can move forward without Brent. He’s everywhere and nowhere, a constraint disguised as competence.

Both illustrate a fundamental principle: The capacity of the system equals the capacity of the constraint.

If verification and validation are your constraints, generating more code faster does nothing to improve overall productivity. Worse, Lean management tells us that inventory is a liability, not an asset. Unshipped code incurs carrying costs—branch management, feature-flagged dormant code, continual rebasing and retesting, additional complexity, and additional risk.

In short: Generating more code doesn’t speed up overall delivery unless code generation was actually the bottleneck. It might actually increase the cost of delivery.

In 2025 there was a lot of pondering along these lines: “If AI is so great, why aren’t we seeing ROI and productivity gains?” There’s a much-cited claim that 95% of AI projects fail. I think that’s way overblown, and it’s been credibly criticized and countered. Still, there is a gut feel, a vibe, that enterprises have yet to get as big a return on AI as all the hype would indicate.

These bottlenecks are probably one reason why we’re not achieving huge gains. You may have optimized one part of a business process, whether it’s software development or otherwise, but until you address the bottlenecks in the rest of the process, you won’t get huge results.
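To make the theory of constraints point concrete, here’s a toy sketch in Python. The stage names and weekly capacities are invented for illustration, not measurements from any real team. It shows that doubling coding capacity leaves throughput unchanged, while improving the constraint raises throughput and moves the bottleneck to the next-slowest stage.

```python
# Hypothetical stage capacities, in changes per week (invented numbers).
stages = {
    "coding": 40,
    "code review": 15,
    "integration and system test": 10,
    "deployment": 25,
}


def system_throughput(capacities):
    """Return (constraint_name, throughput).

    The system can only move as fast as its slowest stage.
    """
    constraint = min(capacities, key=capacities.get)
    return constraint, capacities[constraint]


before = system_throughput(stages)

# Suppose AI code generation doubles coding capacity.
faster_coding = {**stages, "coding": stages["coding"] * 2}
after_codegen = system_throughput(faster_coding)

# Suppose instead you double the capacity of the testing stage.
faster_testing = {**stages, "integration and system test": 20}
after_testing = system_throughput(faster_testing)

print(before)         # ('integration and system test', 10)
print(after_codegen)  # ('integration and system test', 10)
print(after_testing)  # ('code review', 15)
```

Notice that fixing testing doesn’t make the bottleneck go away. It just hands the title to code review, which is why this is an ongoing practice rather than a one-time fix.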

How do you spot these bottlenecks? In software development, this is something we’ve studied for a long time. It’s one of the underappreciated advances in industry thinking that DevOps brought to the table. To find the bottlenecks in software development, you have to manage the entire process, that end-to-end SDLC.

That seems obvious, right? But ask yourself, could you draw a detailed map of all of the activities it takes to get one line of code into production? If you’re like most organizations I’ve talked with over the past few decades, you probably can’t.

Worse, if you’re an executive or in senior management, getting an accurate view can be difficult. A friend of mine has the same story from consulting with large organizations in recent years. They will ask the CIO and their team what steps are in the software delivery process. My friend writes these down, finding the teams that do each step. Then they start visiting each team and asking them how they do their work. It takes a little bit of building respect and trust, and then eventually, someone says, “Well, let me tell you how it actually works…”

Organizations have become so siloed that seeing the entire process is impossible. Another trick is to ask: who is responsible for this entire process? Outside of a few organizations that obsess over this, like Amazon with the Single Threaded Leader role, very few organizations have roles that hold responsibility for the entire SDLC, including the business outcomes. Responsibility is distributed over many teams. Each of these teams optimizes its own part of the process; that is how they get rewarded, after all. Did you do your job, and did you do it well?

How Tanzu Platform helps

The team that makes up the VMware Tanzu division of Broadcom has been focusing on optimizing the end-to-end process and optimizing bottlenecks for decades. It was our primary way of thinking about how we help organizations in the pre-Kubernetes Kraze days. And it remains a strength when you ask business leaders why they keep using the Tanzu Platform: because it speeds up their software delivery process with all the enterprise-y security and governance they need.

In the past couple of years, we’ve been learning how to fit AI into the SDLC. We don’t do everything you can with AI, but here are some areas our customers have been working on:

Escaping the legacy trap: You can’t build AI applications on top of brittle, decade-old infrastructure. The biggest drag on the SDLC is often the “keep the lights on” work, i.e., patching, upgrading frameworks, and refactoring legacy code. You can’t escape this in large organizations that are highly regulated. A Tanzu tool called Application Advisor automates the discovery and remediation of this technical debt. This tool identifies code that needs upgrading and automates much of the refactoring process. Keeping up to date improves security and governance, but that’s table stakes. Once you’ve freed yourself from the legacy trap, your developers have time to focus on building new AI-driven features, rather than fighting old fires. Organizations like KLM, Fiserv, and Alight have used Application Advisor. Alight, for example, saw a 70% reduction in engineering time required for maintenance upgrades.

Securing the AI supply chain: Locking down AI creates “shadow AI.” Tanzu tools help prevent this by securing AI components (like MCP servers) with the same rigor as mission-critical apps. By brokering model access, organizations can protect their IP without halting innovation. Broadcom’s own Global Technology Organization (GTO) used this to let developers safely deploy AI agents that read tickets and write code. As CIO Alan Davidson notes, productivity gains are real “only if we can integrate these tools and agents with safety and security.” Security is one of the biggest bottlenecks in the SDLC, and this approach helps.

Taming agent cost: When you use AI in the SDLC, it doesn’t just write code; it also executes tasks. But an autonomous agent that gets stuck in a reasoning loop can rack up massive bills in minutes. I’ve heard stories of well-meaning agentic processes running over the weekend and blowing through $50,000 or more. When it comes to AI, you need cost controls. Tanzu Platform treats these agents like standard microservices: handling their lifecycle, patching them, and enforcing circuit breakers on token burn. You can also run models locally on the Tanzu Platform and the VMware Private AI Foundation, which allows you to fully control costs because you’re running on your own private cloud. Ham-fisted cost controls can become a huge bottleneck of their own, but with precise usage collection, analytics, and enforcement, you can relieve that bottleneck.

Unlocking corporate intelligence: The slowest part of the development cycle is often just finding the right information. And once you’ve found it, there’s a spirit-crushing amount of data cleaning and access controls that need to be put in place to use that data. Developers can waste hours hunting for documentation, and executives can wait days for basic business reports. Our thinking is that by relying on a data lakehouse architecture, you can relieve the data bottleneck. Tanzu Data Intelligence takes this approach. The integration also extends all the way to the application development stack, for example, by autoconfiguring and securing data access for applications with Spring.

Standardizing the golden path: A chaotic system is its own bottleneck. If every team builds its own custom platform, you create new bottlenecks faster than AI can solve them. Tanzu Platform provides a consistent golden path for getting code to production. By standardizing the environment, how applications are built, and how they move through the build process all the way to running in production, you ensure that every optimization you make scales across the entire organization instantly, rather than being trapped in a single silo. This applies to AI especially, where there’s an overwhelming number of libraries, services, and methods of using AI. The earlier you standardize how your developers use AI and embed it in your applications, the less time you’ll have to burn consolidating in the future.
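If “circuit breakers on token burn” from the agent cost item above sounds abstract, here’s a minimal sketch of the general idea in Python. To be clear, this is not Tanzu Platform’s API or implementation. `TokenCircuitBreaker`, `run_agent`, and the budget numbers are all hypothetical names and values made up for illustration, and the list of step costs stands in for the token usage a real model API would report per call.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when an agent run goes over its token budget."""


@dataclass
class TokenCircuitBreaker:
    max_tokens: int        # hard cap for one agent run (hypothetical policy value)
    used: int = 0
    tripped: bool = False

    def record(self, tokens: int) -> None:
        """Account for one model call; open the breaker once the cap is exceeded."""
        if self.tripped:
            raise BudgetExceeded("breaker is open; the agent must stop")
        self.used += tokens
        if self.used > self.max_tokens:
            self.tripped = True
            raise BudgetExceeded(
                f"used {self.used} tokens, budget is {self.max_tokens}"
            )


def run_agent(step_costs, breaker):
    """Simulate an agent loop and return how many steps completed within budget."""
    completed = 0
    for cost in step_costs:
        try:
            breaker.record(cost)
        except BudgetExceeded:
            break  # stop the loop instead of burning tokens all weekend
        completed += 1
    return completed


# An agent stuck in a loop: 100 steps at 3,000 tokens each, capped at 10,000.
breaker = TokenCircuitBreaker(max_tokens=10_000)
steps_done = run_agent([3_000] * 100, breaker)
print(steps_done, breaker.used, breaker.tripped)  # 3 12000 True
```

One design caveat: this sketch counts tokens after each call, so the run can overshoot the cap by up to one call’s worth of tokens. A stricter version would estimate the cost before making the call and refuse it up front.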

Preparing your SDLC for AI: Removing bottlenecks and grit

We all know that in complex, valuable systems, there are no quick fixes. This is true of applying AI to enterprise processes like software delivery. There is an “as quick as we can” fix, though, and that is to look at your end-to-end process and think about removing and improving bottlenecks in that process. The first step is simply mapping it out. The next is taking care of the obvious, well, “quick fixes,” like upgrading your software, consolidating your platform stack, standardizing how you build and deploy software, and so forth. Take some time to address these obvious things, and then you can move on to the harder part of finding the hidden, people-based bottlenecks. Until you’ve removed those bottlenecks, all the AI optimization in the world will just result in everyone being better at their own step while the overall process still lags behind.
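As a starting point for that mapping exercise, here’s a rough sketch of what a map can look like once you write it down as data. The steps and hours below are hypothetical, and the split between hands-on work time and queue time is my assumption about what’s worth capturing. Even a crude version like this shows where a change spends most of its life.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    work_hours: float  # time someone is actively working on the change
    wait_hours: float  # time the change sits in a queue before the step starts


# Hypothetical steps and durations; replace with what your teams actually report.
value_stream = [
    Step("write code", 4, 0),
    Step("code review", 1, 20),
    Step("security review", 2, 72),
    Step("system test", 3, 24),
    Step("deploy to production", 1, 8),
]


def lead_time(steps):
    """Total elapsed hours from starting the change to running in production."""
    return sum(s.work_hours + s.wait_hours for s in steps)


def flow_efficiency(steps):
    """Fraction of the lead time spent on hands-on work rather than waiting."""
    return sum(s.work_hours for s in steps) / lead_time(steps)


def longest_wait(steps):
    """The step with the longest queue in front of it."""
    return max(steps, key=lambda s: s.wait_hours)


total = lead_time(value_stream)
efficiency = flow_efficiency(value_stream)
worst = longest_wait(value_stream)

print(f"lead time: {total} hours")              # lead time: 135 hours
print(f"flow efficiency: {efficiency:.0%}")     # flow efficiency: 8%
print(f"longest wait before: {worst.name}")     # longest wait before: security review
```

In this made-up example, writing code is 4 of 135 hours. Making that step faster with AI barely moves the total, while the queue in front of security review is where most of the time goes.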

There’s one thing everyone we’ve been talking with over the past year agrees on: figuring out how to get better use of AI throughout the SDLC and in applications is difficult and complex. That complexity is its own kind of bottleneck, maybe more like grit in the gears of the SDLC. Tanzu Platform and Tanzu Data Intelligence give you an integrated AI platform, and running it on top of VMware Cloud Foundation (VCF) gives you a private cloud that’s ready for AI.