By Lan Vu, Hari Sivaraman, and Uday Kurkure

NVIDIA vGPU allows vSphere to share NVIDIA GPUs among multiple VMs by using either the time-sliced vGPU profile or the MIG-with-vGPU profile (we’ll call this MIG vGPU). These two modes give you flexibility in how a GPU is shared, so you can make the best use of its resources. With two options available, you may wonder whether you should choose vGPU or MIG vGPU. This blog briefly presents several use cases of the two profiles with different workloads and shows you how to choose the right profile for your workloads to get the most out of vGPU and MIG vGPU. Please check out our whitepaper for the full results of our performance study, with more workloads and analysis.

vGPU vs. MIG vGPU When Scaling the Number of VMs per GPU with Machine Learning Workloads

In this experiment, we ran the same Mask R-CNN workload with batch size = 2 (training and inference) on 1 to 7 concurrent VMs sharing a single A100 GPU through either the vGPU or the MIG vGPU profile. This can be considered a lightweight ML workload. For each test case, we used the profile setting that allows the maximum use of GPU time and memory for that number of VMs. MIG vGPU shows better performance (figure 1), especially as the number of VMs sharing the same GPU increases. Because Mask R-CNN runs with batch size = 2, the training task in each VM uses fewer compute resources (fewer CUDA cores are utilized) and less GPU memory (less than 5GB of the 40GB each GPU has). Although vGPU does not perform as well as MIG vGPU in this experiment, it has an advantage when you need a high consolidation of VMs per GPU: vGPU allows up to 10 VMs per GPU, whereas MIG vGPU currently supports up to 7.

Figure 1. Normalized ML training time of vGPU vs. MIG with vGPU when scaling VMs per GPU
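To make the consolidation limits concrete, here is a minimal Python sketch of how one might pick, for a given number of VMs, the largest profile that still fits them all on one A100 40GB. The profile names and sizes in the two tables are our assumption, reconstructed from NVIDIA's vGPU documentation for the A100 40GB; verify them against the documentation for your vGPU release. The sketch also assumes every VM on the GPU gets the same profile, and `pick_profile` is a hypothetical helper, not part of any VMware or NVIDIA tool.

```python
from typing import List, Optional, Tuple

# ASSUMPTION: A100 40GB compute (C-series) profiles as we understand them from
# NVIDIA's vGPU docs; check the names and sizes for your vGPU release.
# Each entry: (profile name, framebuffer per VM in GB, max VMs per GPU).
# Both lists are ordered from the largest slice to the smallest.
TIME_SLICED: List[Tuple[str, int, int]] = [
    ("A100-40C", 40, 1),
    ("A100-20C", 20, 2),
    ("A100-10C", 10, 4),
    ("A100-8C", 8, 5),
    ("A100-5C", 5, 8),
    ("A100-4C", 4, 10),
]
MIG: List[Tuple[str, int, int]] = [
    ("A100-7-40C", 40, 1),
    ("A100-4-20C", 20, 1),
    ("A100-3-20C", 20, 2),
    ("A100-2-10C", 10, 3),
    ("A100-1-5C", 5, 7),
]


def pick_profile(num_vms: int, mode: str) -> Optional[str]:
    """Hypothetical helper: largest same-size profile that fits num_vms VMs on one GPU."""
    if mode == "mig":
        catalog = MIG
    elif mode == "time-sliced":
        catalog = TIME_SLICED
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    # Because each list runs from largest to smallest slice, the first profile
    # with enough instances gives every VM the most memory (and, for MIG, the
    # most compute slices) possible at this VM count.
    for name, _framebuffer_gb, max_vms in catalog:
        if max_vms >= num_vms:
            return name
    return None  # this mode cannot host that many VMs on one GPU


if __name__ == "__main__":
    for n in range(1, 11):
        print(n, pick_profile(n, "time-sliced"), pick_profile(n, "mig"))
```

Running the loop reflects the limits discussed above: the time-sliced lookup succeeds up to 10 VMs, while the MIG lookup returns None beyond 7.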

vGPU vs. MIG vGPU When Sizing Machine Learning Workloads

In this case study, we explore ML performance when sizing machine learning workloads. We ran Mask R-CNN training and inference in three scenarios that mimic lightweight, moderate, and heavy ML loads. Figure 2 shows the training time. When the workload running in each VM uses fewer GPU compute and memory resources, MIG vGPU delivers better training time and throughput than vGPU. The performance difference between vGPU and MIG vGPU is largest for the light load. MIG vGPU is also better for the moderate load, but by a narrower margin. For the heavy load, vGPU performs better than MIG vGPU, although the difference is negligible. So, if you know your workload characteristics, you can choose the appropriate option for your application. If you do not, we recommend testing both vGPU and MIG vGPU and selecting the better one.

Figure 2. Normalized ML training time of vGPU vs. MIG vGPU with different GPU loads

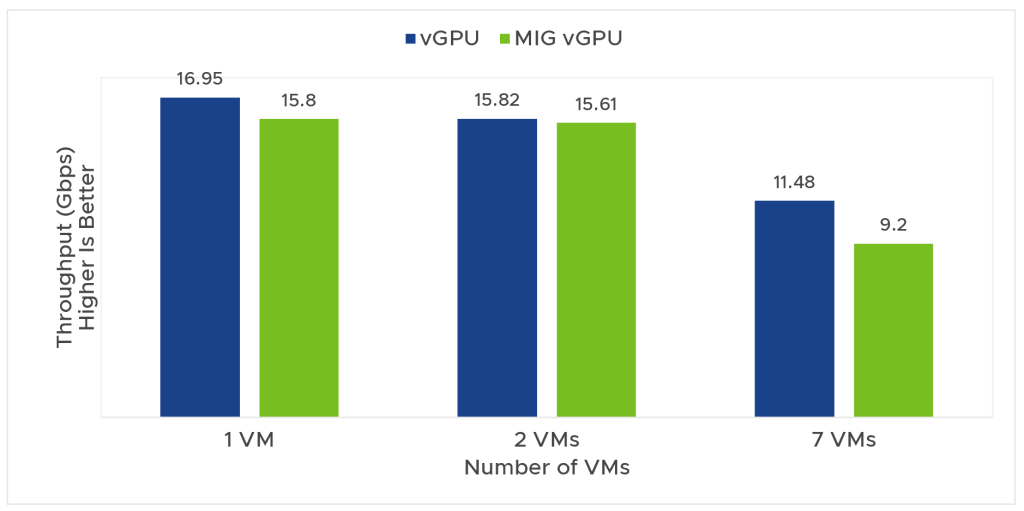
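If you follow the recommendation to test both options, the comparison itself is simple. The sketch below averages the per-VM training times measured under each profile, normalizes them to a baseline, and returns the faster option. Normalizing to vGPU is our assumption (this post doesn't state the baseline used in the figures), as is averaging across VMs rather than taking the slowest VM; `compare_profiles` is a hypothetical helper, and you supply your own measurements.

```python
from statistics import mean
from typing import Dict, List, Tuple


def compare_profiles(
    times_s: Dict[str, List[float]], baseline: str = "vGPU"
) -> Tuple[str, Dict[str, float]]:
    """Hypothetical helper: normalize mean per-VM training time to `baseline`.

    times_s maps a profile label to the training times (seconds) measured in
    each concurrently running VM. Lower normalized time is better.
    """
    if baseline not in times_s:
        raise ValueError(f"baseline {baseline!r} has no measurements")
    # Average the concurrent VMs' times for each profile.
    averages = {mode: mean(ts) for mode, ts in times_s.items()}
    # Express every profile's average relative to the baseline profile.
    normalized = {mode: avg / averages[baseline] for mode, avg in averages.items()}
    best = min(normalized, key=lambda mode: normalized[mode])
    return best, normalized
```

A call such as `compare_profiles({"vGPU": vgpu_times, "MIG vGPU": mig_times})` returns the faster profile together with the normalized times for both.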

vGPU vs. MIG vGPU for Workloads with Heavy I/O Communication, Like Network Functions with Encryption

In this experiment, we implemented a network function workload, Internet Protocol Security (IPSec), which is both compute-intensive and I/O-intensive and therefore presents a different workload characteristic. It uses CUDA to copy data between the CPU and the GPU and offloads its computation to the GPU. Our IPSec implementation used HMAC-SHA1 and AES-128 in CBC mode; as part of it, we rewrote the OpenSSL AES-128 CBC encryption and decryption algorithm in CUDA[1]. Our test results in figure 3 show that vGPU performs better than MIG vGPU in this scenario. This workload is compute-heavy and uses a lot of GPU memory bandwidth. With MIG vGPU, this memory bandwidth is partitioned among VMs, whereas with vGPU it is shared among VMs. This can explain the performance difference between vGPU and MIG vGPU in this use case. Hence, when the workload characteristics are unknown, it is better to test both profiles and then select the better one.

Figure 3. IPSec throughput of 7 VMs with MIG vGPU profile vs. vGPU profile on A100
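The bandwidth argument above can be illustrated with a toy model. It assumes a time-sliced scheduler that skips VMs with no GPU work and ignores context-switch overhead, and it assumes each MIG instance owns a fixed fraction of the memory bandwidth (1/8 is our assumption for a 1g.5gb slice, which holds one of the A100 40GB's eight 5GB memory slices). `bandwidth_per_active_vm` is a hypothetical function for illustration only, not a performance model from our study.

```python
def bandwidth_per_active_vm(
    total_bw: float, active_vms: int, mode: str, mig_fraction: float = 1 / 8
) -> float:
    """Toy model: average memory bandwidth available to a VM that has GPU work.

    mig: each VM owns a fixed partition (mig_fraction of the total, an assumed
         value), so neighbours that are busy with CPU-GPU copies free nothing up.
    time-sliced: VMs take turns on the whole GPU; assuming the scheduler skips
         idle VMs and ignoring context-switch cost, the full bandwidth is split
         only among the VMs that currently have GPU work.
    """
    if active_vms < 1:
        raise ValueError("active_vms must be at least 1")
    if mode == "mig":
        return total_bw * mig_fraction
    if mode == "time-sliced":
        return total_bw / active_vms
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # 7 VMs share one GPU; vary how many are computing rather than copying data.
    for active in (1, 2, 4, 7):
        ts = bandwidth_per_active_vm(1.0, active, "time-sliced")
        mig = bandwidth_per_active_vm(1.0, active, "mig")
        print(f"{active} active VMs: time-sliced {ts:.3f}, MIG {mig:.3f} (fraction of total)")
```

When only a few of the 7 VMs are computing at a time because the rest are copying data between the CPU and GPU, each active VM gets a much larger share under time-slicing than under a fixed MIG partition; when all 7 are computing, the two come out close, before accounting for context-switch cost.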

Takeaways

We explored multiple workloads to compare the performance of vGPU vs. MIG vGPU. Key takeaways from our experiments include:

- When your workloads are lightweight (small models, small batch sizes, and small input data), choosing MIG vGPU can give you a high consolidation of VMs per GPU with better performance, which can also save money on your ML/AI infrastructure.

- For workloads that use the GPU heavily (large models, large batch sizes, and large input data), which also means that only a few VMs share one GPU, the difference between vGPU and MIG vGPU is relatively small, with vGPU showing slightly better performance.

- For workloads that are both compute-heavy and I/O-heavy, such as network function virtualization (NFV) workloads like IPSec, vGPU performs better than MIG vGPU in most test cases.
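The takeaways above can be condensed into a rough rule of thumb. The sketch below is our own simplification, not a VMware or NVIDIA sizing tool: `Workload` and `suggest_mode` are hypothetical names, the load categories mirror the light, moderate, and heavy scenarios in this post, and the VM limits are the A100 numbers quoted earlier. When the characteristics are unknown, it falls back to testing both profiles, as recommended above.

```python
from dataclasses import dataclass
from typing import Optional

# Per-GPU VM limits for an A100, as quoted in this post.
MIG_VGPU_MAX_VMS = 7
VGPU_MAX_VMS = 10


@dataclass
class Workload:
    vms_per_gpu: int
    load: Optional[str]      # "light", "moderate", "heavy", or None if unknown
    io_heavy: bool = False   # e.g. IPSec-style work with frequent CPU-GPU copies


def suggest_mode(w: Workload) -> str:
    """Rough rule of thumb distilled from the takeaways; not a sizing tool."""
    if w.vms_per_gpu > VGPU_MAX_VMS:
        raise ValueError("more VMs than either mode supports on one A100")
    if w.vms_per_gpu > MIG_VGPU_MAX_VMS:
        return "vGPU"        # only time-sliced vGPU can host 8 to 10 VMs per GPU
    if w.io_heavy:
        return "vGPU"        # shared (not partitioned) memory bandwidth helped IPSec
    if w.load in ("light", "moderate"):
        return "MIG vGPU"    # MIG vGPU won for light loads, by less for moderate ones
    if w.load == "heavy":
        return "vGPU"        # vGPU was slightly ahead, but the gap was negligible
    return "test both"       # unknown characteristics: measure both profiles


if __name__ == "__main__":
    print(suggest_mode(Workload(vms_per_gpu=7, load="light")))
    print(suggest_mode(Workload(vms_per_gpu=7, load=None, io_heavy=True)))
```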

Acknowledgments

We thank Charlie Huang, Manvendar Rawat, and Andy Currid of NVIDIA for reviewing the paper and providing input. The authors acknowledge Juan Garcia-Rovetta and Tony Lin of VMware for their support of this work.

Discover more from VMware Cloud Foundation (VCF) Blog
