vSphere administrators find the benefits of vMotion very appealing: it provides business continuity even when a physical server must be taken down for hardware maintenance or troubleshooting. Admins have the flexibility to migrate all the virtual machines off a vSphere host with no disruption in service.

The overall migration time in these host evacuation scenarios is a very important measure. By default, vCenter limits the concurrency of vMotions (the number of vMotions running at the same time) per host to eight. One of the most commonly asked questions we get is, “Can we increase the concurrency to speed up host evacuation?”

In this blog post, we’ll discuss why these limits exist, share the performance data with different concurrency limits, and explain what we are doing to address the need for reduced overall migration time.

Understanding vCenter Server Concurrency

vCenter Server places limits on the number of simultaneous VM migrations that can occur on each host, network, and datastore. Each vMotion operation is charged a resource cost against every resource it uses, where a resource can be a host, a datastore, or a network.

To understand how vCenter concurrency limits work, think of resource limits in terms of available slots. Figure 1 illustrates the vMotion resource costs in a vSphere 7.0 environment in which a host resource has 16 available slots; a 40GbE NIC has 8 available slots. When a vMotion is initiated, each host participating in the migration is charged 2 units and each NIC is charged 1 unit. Note that there is no storage resource cost in this example, since storage is not at play during shared-storage vMotion.

In our example, even if the vMotion network were provisioned with two 40GbE NICs, for a total of 16 vMotion NIC units, migration concurrency would still be limited to 8 by the host resource limit. More generally, a migration that would cause any resource to exceed its maximum cost does not start immediately; it is queued until other active migrations complete and release resources.
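The slot accounting described above can be sketched as a toy admission queue. This is an illustrative model, not vCenter's implementation: the class and resource names are hypothetical, and the strict first-in-first-out ordering of queued migrations is an assumption. It reproduces the example of 16-slot hosts charged 2 units each per vMotion, plus a vMotion network with 16 units (two 40GbE NICs) charged 1 unit per vMotion.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Resource:
    """A host, network, or datastore with a fixed budget of slots (hypothetical model)."""
    name: str
    capacity: int
    in_use: int = 0


class MigrationQueue:
    """Toy slot-based admission control, assuming FIFO ordering of queued migrations.

    A migration starts only if every resource it touches can absorb its cost;
    otherwise it waits until running migrations release their slots.
    """

    def __init__(self):
        self.waiting = deque()  # (vm, [(Resource, cost), ...]) not yet started
        self.active = {}        # vm -> charges currently held

    def submit(self, vm, charges):
        self.waiting.append((vm, charges))
        self._dispatch()

    def finish(self, vm):
        # Release every slot the completed migration was holding.
        for res, cost in self.active.pop(vm):
            res.in_use -= cost
        self._dispatch()

    def _dispatch(self):
        while self.waiting:
            vm, charges = self.waiting[0]
            if any(res.in_use + cost > res.capacity for res, cost in charges):
                break  # the head of the queue does not fit yet
            self.waiting.popleft()
            for res, cost in charges:
                res.in_use += cost
            self.active[vm] = charges


# Example from the text: 16-slot hosts (2 units per vMotion) and a
# two-NIC 40GbE vMotion network (16 units, 1 unit per vMotion).
src = Resource("source-host", 16)
dst = Resource("destination-host", 16)
net = Resource("vmotion-network", 16)

q = MigrationQueue()
for i in range(16):
    q.submit(f"vm{i}", [(src, 2), (dst, 2), (net, 1)])

print(len(q.active), len(q.waiting), net.in_use)  # 8 8 8: host slots run out first
q.finish("vm0")
print(len(q.active), len(q.waiting))  # 8 7: vm8 starts once vm0 releases its slots
```

Half of the network units remain idle while eight migrations wait, which is exactly the host-bound behavior the example describes.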

For more information on vCenter concurrency limits for migration or any provisioning operation, refer to the blog post vCenter Limits for Tasks Running at the Same Time.

Performance Evaluation of vCenter Concurrency

We tested a vSphere 7 platform to determine the performance of vMotion migrations with respect to concurrency.

Testbed Details and Methodology

We used the following testbed, workloads, and methodology for performance evaluation.

Testbed

- Hosts: Dell PowerEdge R730 (2 sockets, 12 cores/socket, 1TB memory)

- vSphere host: VMware ESXi 7.0.1

- Storage: 10TB VMFS volume on a Dell EMC Unity 600 all-flash array

- NIC: we varied the vMotion network bandwidth (10GbE, 40GbE, and 100GbE)

Workloads

- Memtouch workload: Memtouch is a microbenchmark we used to generate different memory access patterns.

- DVD Store workload: DVD Store is an open-source benchmark that simulates users shopping in an online DVD store. We used a workload from this benchmark to generate a SQL Server OLTP load.

Metrics

- Host evacuation time (end-to-end migration time)

- Average vMotion time

- Resource (network/CPU) usage

- Impact on guest performance

Methodology

We used the vCenter advanced configuration setting `config.vpxd.ResourceManager.costPerVmotionESX6x` to increase the host resource cost per vMotion, thereby limiting the concurrency to 4. Note that changing the limits is not officially supported.

We used the following scenarios for performance testing (Table 1):

| Config Value (Host Resource Cost) | Resulting Max Concurrent vMotions |
| --- | --- |
| 4 | 4 |
| 2 (Default) | 8 |

Table 1: Test scenarios
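The mapping in Table 1 follows from dividing each resource's slot budget by the per-vMotion cost and taking the tightest resource. The sketch below assumes the 16-slot host from the vSphere 7.0 example and, hypothetically, a two-NIC 40GbE vMotion network with 16 units at 1 unit per vMotion; the function name is illustrative only.

```python
HOST_SLOTS = 16  # host resource capacity in the vSphere 7.0 example


def max_concurrent_vmotions(limits):
    """limits: (capacity, cost_per_vmotion) pairs, one per resource a vMotion touches.

    Concurrency is capped by the tightest resource: floor(capacity / cost).
    """
    return min(capacity // cost for capacity, cost in limits)


# Host cost values from Table 1; network assumed to be 16 units at 1 per vMotion.
for host_cost in (4, 2):
    print(host_cost, max_concurrent_vmotions([(HOST_SLOTS, host_cost), (16, 1)]))
# 4 4
# 2 8
```

Raising the host cost from 2 to 4 halves the host's capacity in vMotions, and because the network still has room for 16, the host remains the binding limit in both rows.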

The “Resources currently in use by other operations” messages in figures 2-3 indicate that the limits are kicking in.

Performance Results

Memtouch Workload

Our first set of tests featured a total of 16 VMs running a memtouch workload and a 40GbE NIC (with 3 vMotion vmkernel NICs) for vMotion traffic. The workload consisted of 4 memtouch threads that scan the entire guest memory and dirty a random byte in each 4KB memory page, with a 25-microsecond delay between memory dirty operations. The threads ran in a continuous loop, generating a moderate load on the VM (esxtop CPU %USED was 70%).
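The memtouch source is not part of this post, so the following is only a rough Python approximation of the access pattern described above, under stated assumptions: a `bytearray` stands in for guest memory, and the names `dirty_pass` and `run_memtouch` are hypothetical. Each thread repeatedly scans the whole buffer and writes a random byte at a random offset in every 4KB page.

```python
import random
import threading
import time

PAGE_SIZE = 4096  # 4KB pages, as in the workload description


def dirty_pass(buf, delay_s=25e-6, rng=None):
    """Scan buf once, writing a random byte at a random offset in every 4KB page.

    Returns the number of pages dirtied. time.sleep() is much coarser than
    25 microseconds on most systems, so the delay is only approximate.
    """
    rng = rng or random.Random()
    pages = len(buf) // PAGE_SIZE
    for page in range(pages):
        buf[page * PAGE_SIZE + rng.randrange(PAGE_SIZE)] = rng.randrange(256)
        if delay_s:
            time.sleep(delay_s)
    return pages


def run_memtouch(buf, n_threads=4, duration_s=1.0, delay_s=25e-6):
    """Run n_threads workers that each loop over the whole buffer until duration_s elapses.

    Workers check the stop flag only between full passes. Returns total pages dirtied.
    """
    stop = threading.Event()
    counts = [0] * n_threads

    def worker(idx):
        rng = random.Random()
        while not stop.is_set():
            counts[idx] += dirty_pass(buf, delay_s, rng)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    time.sleep(duration_s)
    stop.set()
    for t in threads:
        t.join()
    return sum(counts)
```

For example, `run_memtouch(bytearray(64 * 2**20), duration_s=5)` keeps a 64MB buffer continuously dirty; because workers only stop between passes, the call can run somewhat longer than `duration_s`.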

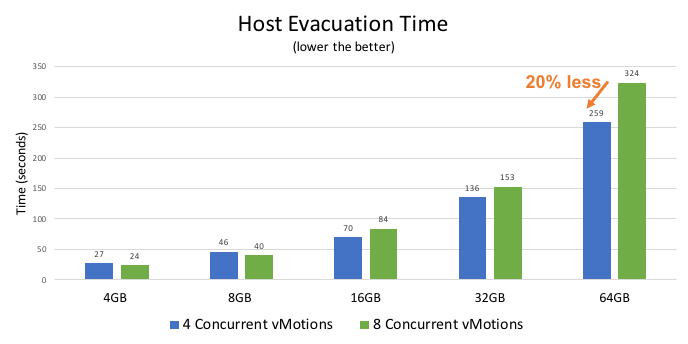

Figure 3 below compares host evacuation time under different concurrency limits for varying VM sizes. With smaller VMs (8GB or less), evacuation times are nearly identical at concurrencies of 4 and 8. As VM size increases, a concurrency of 4 outperforms a concurrency of 8. For instance, in the 64GB-VM scenario, the lower concurrency reduces host evacuation time by 20 percent.

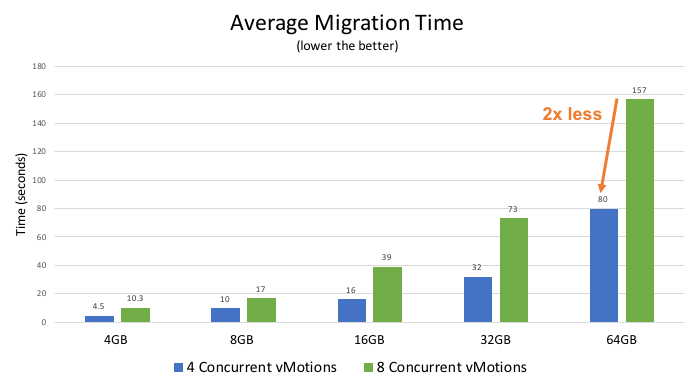

Figure 4 below shows a nearly 2x reduction in average migration time in all the scenarios when running 4 concurrent vMotions. More importantly, the shorter average migration time translates into more efficient resource usage.
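A simple bandwidth-sharing model helps explain the roughly 2x gap in average migration time. Assume, for illustration only, that a saturated link is split evenly among the concurrent vMotions, that VMs migrate in equal-sized waves, and that no memory is re-dirtied during migration. Under those assumptions, each vMotion at concurrency 8 gets half the bandwidth it would get at concurrency 4, so it takes twice as long. The function name and the 16 × 64GB over 40GbE inputs are illustrative choices.

```python
import math


def idealized_evacuation(num_vms, vm_gib, link_gbps, concurrency):
    """Return (average vMotion seconds, host evacuation seconds).

    Assumes a saturated link split evenly among `concurrency` vMotions, VMs
    migrated in equal-sized waves, and no re-dirtied memory or protocol overhead.
    """
    bits_per_vm = vm_gib * 2**30 * 8
    per_vmotion_bps = link_gbps * 1e9 / concurrency
    avg_vmotion_s = bits_per_vm / per_vmotion_bps
    waves = math.ceil(num_vms / concurrency)
    return avg_vmotion_s, waves * avg_vmotion_s


for n in (4, 8):
    avg_s, evac_s = idealized_evacuation(16, 64, 40, n)
    print(f"concurrency={n}: avg vMotion {avg_s:.0f}s, evacuation {evac_s:.0f}s")
```

Because this model ignores memory dirtied while a migration is in flight, it predicts identical evacuation times at both concurrency levels; it cannot account for the measured evacuation-time differences.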

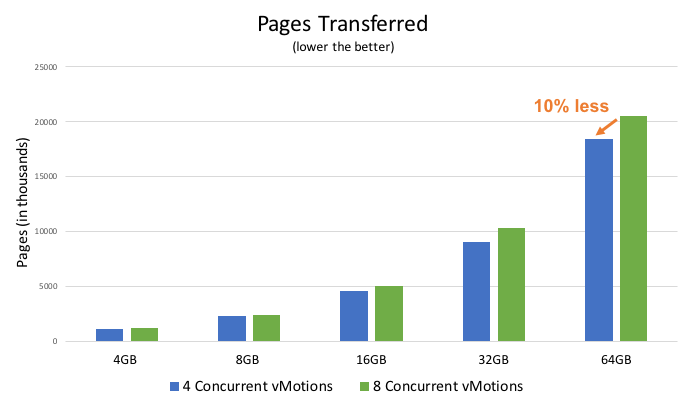

Figure 5 below plots the total memory pages transferred per migration, which is directly proportional to the CPU and network usage. Once again, we see that running 4 vMotions at the same time is a clear winner when considering the system resource usage metric.
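To see why higher concurrency can inflate the number of pages transferred, consider a simplified iterative pre-copy model. This is an illustration, not vMotion's actual algorithm, which has additional mechanisms not modeled here. The first round copies all memory, and each later round resends the pages dirtied while the previous round was in flight. With less bandwidth per vMotion, each round takes longer, so more pages are dirtied and must be resent. The dirty rate, the switchover threshold, and the function name are hypothetical.

```python
def precopy_pages_sent(mem_pages, dirty_rate, bandwidth, switchover_pages=1024, max_rounds=30):
    """Total pages sent by a simplified iterative pre-copy migration.

    Round 1 copies all memory; each later round resends the pages dirtied while
    the previous round was in flight (capped at total memory). Once the dirty
    set is small enough, or max_rounds is reached, the remainder is copied in a
    final switchover. dirty_rate and bandwidth are in pages per second.
    """
    sent = 0.0
    to_send = float(mem_pages)
    for _ in range(max_rounds):
        sent += to_send
        round_s = to_send / bandwidth
        to_send = min(mem_pages, dirty_rate * round_s)
        if to_send <= switchover_pages:
            break
    return sent + to_send  # final copy of whatever is still dirty


MEM_PAGES = 16 * 2**30 // 4096    # a 16GB VM in 4KB pages
LINK_PAGES_S = 40e9 / 8 / 4096    # 40GbE expressed in 4KB pages per second
DIRTY_RATE = 50_000               # hypothetical guest dirty rate, pages/s

for n in (4, 8):
    total = precopy_pages_sent(MEM_PAGES, DIRTY_RATE, LINK_PAGES_S / n)
    print(f"concurrency={n}: {total / MEM_PAGES:.2f}x the VM's memory sent")
```

In this model, each round resends a fraction `dirty_rate / bandwidth` of the previous round's pages, so doubling concurrency doubles that fraction and grows the total roughly like `1 / (1 - fraction)`.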

DVD Store Workload

In our second set of tests, we expanded our test matrix to include 10GbE and 100GbE scenarios.

Scenario 1: Small VMs / 10GbE

- We deployed Microsoft SQL Server in 16 VMs; we configured each VM with 4 vCPUs and 18GB memory.

- The client machine hosted 16 DVD Store client VMs, each talking to a unique SQL Server VM.

- We configured the DVD Store workload to use a 20GB database size and 20 client threads.

- This scenario used a 10GbE vMotion network.

Scenario 2: Large VMs / 100GbE

- We deployed Microsoft SQL Server in 16 VMs; we configured each VM with 4 vCPUs and 100GB memory.

- The client machine hosted 16 DVD Store client VMs, each talking to a unique SQL Server VM.

- We configured the DVD Store workload to use a 20GB database size and 20 client threads.

- This scenario used a 100GbE vMotion network (using 4 vmkernel NICs).
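A quick line-rate calculation shows the minimum time needed just to move the guest memory in each scenario. The calculation assumes that VM memory sizes are GiB, that each link runs at its full nominal rate with no protocol overhead, and that every byte crosses the link exactly once. The DVD Store load keeps dirtying memory during migration, so real evacuations will take longer. The names below are illustrative.

```python
SCENARIOS = {
    # name: (number of VMs, memory per VM in GiB, vMotion link in Gb/s)
    "Scenario 1 (small VMs, 10GbE)": (16, 18, 10),
    "Scenario 2 (large VMs, 100GbE)": (16, 100, 100),
}


def min_evacuation_s(total_gib, link_gbps):
    """Line-rate lower bound: every guest byte crosses the link exactly once."""
    return total_gib * 2**30 * 8 / (link_gbps * 1e9)


for name, (vms, gib, gbps) in SCENARIOS.items():
    print(f"{name}: at least {min_evacuation_s(vms * gib, gbps):.0f}s")
```

Even though scenario 2 moves more than five times as much memory, its faster network gives it a lower bound roughly half that of scenario 1.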

We configured the DVD Store benchmark driver to enable fine-grained performance tracking, which helped to quantify the impact on SQL Server throughput (orders processed per second) during evacuation scenarios.

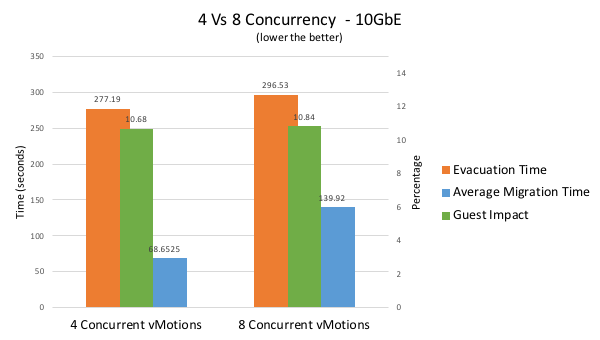

Figure 6 below compares 4 vs 8 concurrent vMotions for scenario 1 using a 10GbE vMotion network. The impact on guest performance was nearly the same in both concurrency scenarios with about a 10% drop in SQL Server transaction throughput during evacuation. However, lower concurrency resulted in a nearly 2x reduction in average vMotion time, combined with a modest reduction (6%) in evacuation time.

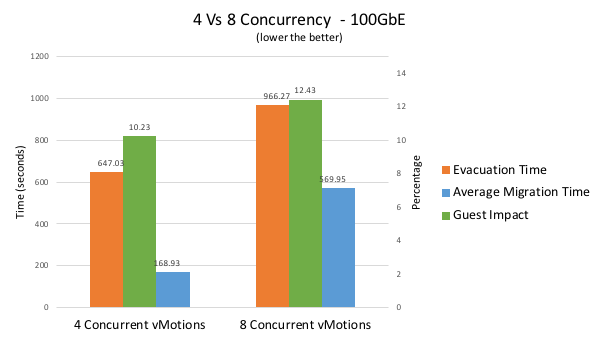

The Figure 7 results below show a similar trend for the 100GbE deployment. Once again, 4 concurrent vMotions are a clear winner across all the metrics.

Conclusion

vCenter Server limits the concurrent vMotions per host to ensure resource balance and get the best overall performance. These limits are chosen carefully after extensive experimentation, and hence we strongly discourage changing the limits to increase concurrency.

Our performance results show that even the default concurrency of 8 is less than optimal for host evacuation scenarios. This is because vMotion can effectively saturate the available network bandwidth across a variety of deployment scenarios even with a concurrency of 4. Hence, increasing the concurrency beyond 8 would only add load to already saturated network resources, resulting in queueing delays and lower performance. Apart from longer host evacuation time, our findings show that higher concurrency contributes to a significant increase in average vMotion time and CPU/network resource usage.

Rather than increasing the vCenter concurrency limits, our goal has been to optimize vMotion to effectively saturate the available network bandwidth with as few concurrent vMotions as possible. As evidenced by our performance findings, this approach minimizes the data movement, improves resource efficiency, and reduces the overall evacuation time.

Discover more from VMware Cloud Foundation (VCF) Blog
