Welcome to this new blog post series about Container Networking with Antrea. In this post, we’ll take a look at the Egress feature and show how to implement it on vSphere with Tanzu.

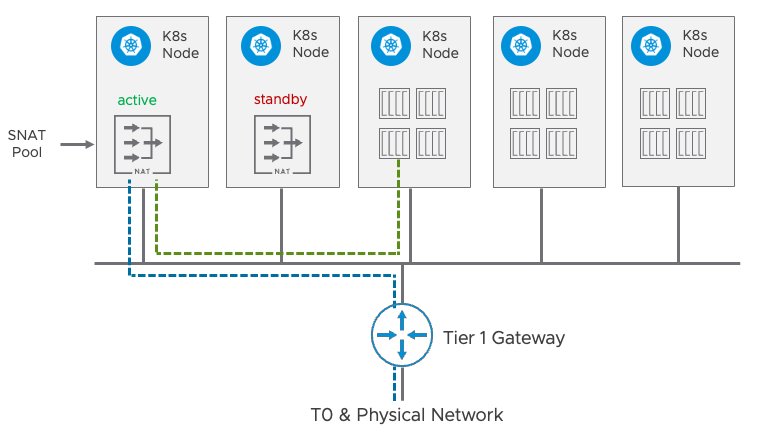

According to the official Antrea documentation, Egress is a Kubernetes Custom Resource Definition (CRD) that lets you specify which Egress (SNAT) IP the traffic from the selected Pods to the external network should use. When a selected Pod accesses the external network, the Egress traffic is tunneled to the Node that hosts the Egress IP (if that Node differs from the one the Pod runs on) and is SNATed to the Egress IP when it leaves that Node. You can see the traffic flow in the following picture.
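To make the forwarding rule concrete, here is a minimal Python model of the decision described above. It is not Antrea code; it only mirrors the two cases (Pod on the Egress Node or elsewhere). The class name, Node names and Pod IP are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EgressAssignment:
    egress_ip: str    # SNAT address assigned to the Egress
    egress_node: str  # Node currently hosting that address


def egress_path(pod_node: str, pod_ip: str, egress: EgressAssignment) -> tuple[list[str], str]:
    """Return the hops of an outgoing packet and the source IP seen outside."""
    hops = [f"Pod {pod_ip} on {pod_node}"]
    if pod_node != egress.egress_node:
        # Pod runs elsewhere: tunnel the packet to the Node hosting the Egress IP.
        hops.append(f"tunnel {pod_node} -> {egress.egress_node}")
    # On the Egress Node the source address is rewritten (SNAT) before leaving.
    hops.append(f"SNAT {pod_ip} -> {egress.egress_ip} on {egress.egress_node}")
    return hops, egress.egress_ip


# Hypothetical Node names and Pod IP for illustration.
egress = EgressAssignment(egress_ip="10.221.193.61", egress_node="node-a")
for hop in egress_path("node-b", "192.168.1.5", egress)[0]:
    print(hop)
```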

When the Egress IP is allocated from an ExternalIPPool, Antrea even provides automatic high availability: if the Node hosting the Egress IP fails, another Node is elected from the remaining Nodes selected by the nodeSelector of the ExternalIPPool.
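Antrea handles this election internally. Purely to illustrate the idea of deterministically picking one Node from the nodeSelector candidates and re-picking after a failure, the sketch below uses rendezvous (highest-random-weight) hashing. This is a generic technique swapped in for illustration, not Antrea's actual algorithm, and the Node names are hypothetical.

```python
import hashlib


def _score(egress_ip: str, node: str) -> int:
    # Stable pseudo-random weight for an (Egress IP, Node) pair.
    digest = hashlib.sha256(f"{egress_ip}|{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def select_egress_node(egress_ip: str, eligible_nodes: list[str]) -> str:
    """Pick the eligible Node with the highest score (rendezvous hashing)."""
    if not eligible_nodes:
        raise ValueError("no eligible Nodes for this Egress IP")
    return max(eligible_nodes, key=lambda node: _score(egress_ip, node))


nodes = ["node-1", "node-2", "node-3"]  # hypothetical nodeSelector result
active = select_egress_node("10.221.193.61", nodes)
# Simulate a failure of the active Node: the IP moves to one of the survivors.
survivors = [n for n in nodes if n != active]
standby = select_egress_node("10.221.193.61", survivors)
print(f"{active} -> {standby}")
```

A useful property of this scheme is that removing a Node that does not currently host the IP never moves the IP, so only the failed Node's addresses are reassigned.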

Note: The standby node will not only take over the IP but also send a layer 2 advertisement (e.g. Gratuitous ARP for IPv4) to notify the other hosts and routers on the network that the MAC address associated with the IP has changed.
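For reference, this is roughly what such an announcement looks like on the wire. The sketch builds an Ethernet frame carrying a gratuitous ARP request (sender and target IP both set to the announced address) using only the Python standard library. The MAC address is hypothetical, and the exact frame Antrea sends (for example request versus reply opcode) may differ.

```python
import socket
import struct


def gratuitous_arp(mac: str, ip: str) -> bytes:
    """Build an Ethernet frame with a gratuitous ARP request announcing ip at mac."""
    mac_bytes = bytes.fromhex(mac.replace(":", ""))
    ip_bytes = socket.inet_aton(ip)
    # Ethernet header: broadcast destination, our MAC as source, EtherType 0x0806 (ARP).
    ethernet = b"\xff" * 6 + mac_bytes + struct.pack("!H", 0x0806)
    # ARP header: HTYPE 1 (Ethernet), PTYPE 0x0800 (IPv4), HLEN 6, PLEN 4, OPER 1 (request).
    arp = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 1)
    # Sender MAC/IP, then target MAC (zeroed) and target IP (same as sender IP).
    arp += mac_bytes + ip_bytes + b"\x00" * 6 + ip_bytes
    return ethernet + arp


# Hypothetical NIC MAC; the IP is the first Egress IP used later in this post.
frame = gratuitous_arp("00:50:56:aa:bb:cc", "10.221.193.61")
print(len(frame), frame.hex())
```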

You may be interested in using the Egress feature if any of the following apply:

- A consistent IP address is desired when specific Pods connect to services outside of the cluster, for source tracing in audit logs, for filtering by source IP in an external firewall, etc.

- You want to force outgoing external connections to leave the cluster via certain Nodes, for security controls, or due to network topology restrictions.

You could also use routed Pods to achieve the same effect. But with Egress, you can control exactly which Pods have this capability (it’s not all or nothing!).

Feature Gates and Configuration Variables for TKG

Open-source Antrea version 1.6 and later enables the Egress feature gate by default. Since the VMware Antrea Enterprise edition follows a different release schedule, we need to check whether Egress is enabled for vSphere with Tanzu or TKG and, if not, how to enable it.

TKG 1.6 introduced Antrea configuration variables that can be used in the cluster definition. It is no longer necessary to patch TKG or to pause the Kubernetes controllers that would otherwise override manual changes.

```
$ cd ~/.config/tanzu/tkg/providers/
$ grep EGRESS config_default.yaml
ANTREA_EGRESS_EXCEPT_CIDRS: ""
ANTREA_EGRESS: true
```

As you can see, Egress is enabled by default in TKG 1.6. All the other available Antrea features (e.g. NODEPORTLOCAL, FLOWEXPORTER, MULTICAST, etc.) can be configured using the corresponding variables.
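If you want to script this check instead of grepping, a small sketch can collect the ANTREA_* variables from config_default.yaml. It assumes the flat `KEY: value` layout shown above and is not a general YAML parser; the helper names are hypothetical.

```python
from pathlib import Path


def antrea_settings(config_text: str) -> dict[str, str]:
    """Collect ANTREA_* keys from flat 'KEY: value' lines (not a full YAML parser)."""
    settings = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line.startswith("ANTREA_") or ":" not in line:
            continue  # skips comments, blank lines and unrelated keys
        key, _, value = line.partition(":")
        settings[key.strip()] = value.strip().strip('"')
    return settings


def is_enabled(settings: dict[str, str], key: str) -> bool:
    return settings.get(key, "").lower() == "true"


config = Path.home() / ".config/tanzu/tkg/providers/config_default.yaml"
if config.exists():
    flags = antrea_settings(config.read_text())
    print("ANTREA_EGRESS enabled:", is_enabled(flags, "ANTREA_EGRESS"))
```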

Custom Resource Definitions for vSphere 8 with Tanzu

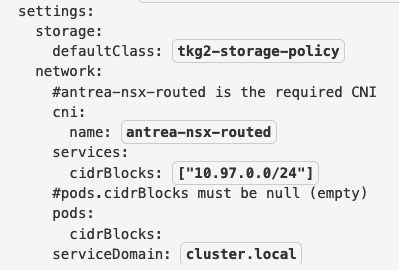

What about vSphere 8 with Tanzu? Here, a Kubernetes CRD called AntreaConfig gives you all the flexibility needed to configure Antrea. Before we dive into this topic, let’s create a supervisor namespace named tkg that overrides the default settings and disables NAT mode. This means the worker node IPs are routed (otherwise Egress would not make much sense), but the Pod IPs are still NATed. For this Egress demo, running in a vSphere 8 setup with NSX 4.0.1.1, we chose a small routable namespace network segment and a /28 subnet prefix.

After successfully creating the namespace, let’s check the NSX configuration:

```
$ kubectl vsphere login --server supervisor.tanzu.lab \
    -u administrator@vsphere.local \
    --tanzu-kubernetes-cluster-namespace tkg
$ kubectl describe ns tkg
Name:         tkg
Labels:       kubernetes.io/metadata.name=tkg
              vSphereClusterID=domain-c8
Annotations:  ls_id-0: a2534289-64d3-461d-88d6-ff43f18a15f2
              ncp/nsx_network_config_crd: tkg-ee39aad6-1e0c-4e2c-addf-abbb174b63f6
              ncp/router_id: t1_c42605d2-08c5-4e62-8457-868607859414_rtr
              ncp/subnet-0: 10.221.193.32/28
              vmware-system-resource-pool: resgroup-432
              vmware-system-vm-folder: group-v433
Status:       Active
```
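The ncp/subnet-0 annotation shows the /28 that NSX allocated for the namespace. Python's ipaddress module makes the size of such a small segment explicit: a /28 holds 16 addresses, 14 of which are usable host addresses.

```python
import ipaddress

# Namespace segment from the ncp/subnet-0 annotation above.
ns_subnet = ipaddress.ip_network("10.221.193.32/28")
hosts = list(ns_subnet.hosts())

print(ns_subnet.num_addresses)         # 16
print(len(hosts))                      # 14
print(hosts[0], "-", hosts[-1])        # 10.221.193.33 - 10.221.193.46
print(ns_subnet.broadcast_address)     # 10.221.193.47
```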

If you take a look at the vSphere 8 documentation about routed Pods, you can see a reference to the CNI named antrea-nsx-routed.

For our demo, we will use the new Cluster API-based ClusterClass approach to create TKG clusters. To enable Egress (and some other features for future demos), we need to create our own Antrea configuration using the AntreaConfig CRD. The way this configuration is attached to a specific cluster is different, though: it relies on a special naming convention.

The following command shows that there are already two predefined AntreaConfig objects in the vmware-system-tkg namespace. Both of them have Egress set to false:

```
$ kubectl get antreaconfigs -A
NAMESPACE           NAME                                           TRAFFICENCAPMODE   ANTREAPROXY
vmware-system-tkg   v1.23.8---vmware.2-tkg.2-zshippable            encap              true
vmware-system-tkg   v1.23.8---vmware.2-tkg.2-zshippable-routable   noEncap            true
```

Our cluster is called cluster-3, so our AntreaConfig must be named cluster-3-antrea-package (i.e., the cluster name with -antrea-package appended). The cluster then picks up this AntreaConfig automatically.

```
$ cat antreaconfig-3.yaml
apiVersion: cni.tanzu.vmware.com/v1alpha1
kind: AntreaConfig
metadata:
  name: cluster-3-antrea-package
  namespace: tkg
spec:
  antrea:
    config:
      featureGates:
        AntreaProxy: true
        EndpointSlice: false
        AntreaPolicy: true
        FlowExporter: true
        Egress: true
        NodePortLocal: true
        AntreaTraceflow: true
        NetworkPolicyStats: true
$ kubectl apply -f antreaconfig-3.yaml
```

Note: Use a separate YAML file for the Antrea configuration! Deleting the cluster automatically deletes the corresponding AntreaConfig resource. If you put both definitions in the same file, the cluster deletion will hang!
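Because the binding between cluster and AntreaConfig relies only on the object name, it can help to derive that name instead of typing it. The sketch below (hypothetical helper names) builds the same kind of AntreaConfig as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts; in line with the note above, it is meant to be written to its own file.

```python
import json


def antrea_config_name(cluster_name: str) -> str:
    # Naming convention from this post: <cluster name>-antrea-package
    return f"{cluster_name}-antrea-package"


def antrea_config(cluster_name: str, namespace: str, feature_gates: dict[str, bool]) -> dict:
    """Build an AntreaConfig manifest (same fields as antreaconfig-3.yaml) as a dict."""
    return {
        "apiVersion": "cni.tanzu.vmware.com/v1alpha1",
        "kind": "AntreaConfig",
        "metadata": {"name": antrea_config_name(cluster_name), "namespace": namespace},
        "spec": {"antrea": {"config": {"featureGates": dict(feature_gates)}}},
    }


manifest = antrea_config("cluster-3", "tkg", {"AntreaProxy": True, "Egress": True})
# Hypothetical file name: save as antreaconfig-3.json, then kubectl apply -f antreaconfig-3.json
print(json.dumps(manifest, indent=2))
```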

Clusters, ClusterClass, and Egress

Now it’s time to create our cluster (with ClusterClass) using the resource type Cluster (and not TanzuKubernetesCluster anymore) and configure Egress.

```
$ cat cluster-3.yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: cluster-3
  namespace: tkg
spec:
  clusterNetwork:
    services:
      cidrBlocks: ["198.52.100.0/12"]
    pods:
      cidrBlocks: ["192.101.2.0/16"]
    serviceDomain: "cluster.local"
  topology:
    class: tanzukubernetescluster
    version: v1.23.8+vmware.2-tkg.2-zshippable
    controlPlane:
      replicas: 1
      metadata:
        annotations:
          run.tanzu.vmware.com/resolve-os-image: os-name=ubuntu
    workers:
      machineDeployments:
        - class: node-pool
          name: node-pool-01
          replicas: 3
          metadata:
            annotations:
              run.tanzu.vmware.com/resolve-os-image: os-name=ubuntu
    variables:
      - name: vmClass
        value: best-effort-xsmall
      - name: storageClass
        value: vsan-default-storage-policy
$ kubectl apply -f cluster-3.yaml
```
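The cidrBlocks values in this manifest are not aligned to their prefix lengths (for example, 198.52.100.0 is not the start of a /12). The sketch below uses Python's ipaddress module with strict=False to show which ranges these prefixes actually cover, and checks that they do not overlap with the namespace segment (10.221.193.32/28) or with the node segment used for the ExternalIPPool later on (10.221.193.48/28). It only checks the arithmetic and makes no assumption about how the cluster components validate these fields; the helper name is hypothetical.

```python
import ipaddress
from itertools import combinations

networks = {
    # strict=False masks off host bits instead of raising ValueError.
    "services": ipaddress.ip_network("198.52.100.0/12", strict=False),
    "pods": ipaddress.ip_network("192.101.2.0/16", strict=False),
    "namespace segment": ipaddress.ip_network("10.221.193.32/28"),
    "node segment": ipaddress.ip_network("10.221.193.48/28"),
}


def overlapping_pairs(nets: dict) -> list[tuple[str, str]]:
    """Return the names of every pair of networks that share addresses."""
    return [(a, b) for (a, net_a), (b, net_b) in combinations(nets.items(), 2)
            if net_a.overlaps(net_b)]


for name, net in networks.items():
    print(f"{name:18} {net}  ({net[0]} - {net[-1]})")
print("overlaps:", overlapping_pairs(networks) or "none")
```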

The cluster uses Ubuntu as its base operating system and the default vSAN storage policy. Since ClusterClass does not offer a NetworkClass yet, we had to rely on the AntreaConfig naming convention.

Note: Although the cluster nodes are routed (and not NATed), you cannot SSH into them directly because a distributed firewall (DFW) rule has been created to deny access.

If you need direct access, you have to create a specific firewall rule. Otherwise, you can use one of the supervisor VMs or a jumpbox container to log into the worker nodes, following the official vSphere 8 documentation.

The rest of the Egress configuration is pretty standard. After switching to the cluster-3 context, we first define an ExternalIPPool. Since the nodeSelector is empty, all Nodes are eligible: Antrea chooses one of them to host the Egress IPs, and the remaining Nodes are available as standby. You can also configure dedicated Egress nodes if needed.

```
$ kubectl vsphere login --server supervisor.tanzu.lab \
    -u administrator@vsphere.local \
    --tanzu-kubernetes-cluster-namespace tkg \
    --tanzu-kubernetes-cluster-name cluster-3
$ cat external_ip_pool.yaml
apiVersion: crd.antrea.io/v1alpha2
kind: ExternalIPPool
metadata:
  name: external-ip-pool
spec:
  ipRanges:
  - start: 10.221.193.61
    end: 10.221.193.62
  - cidr: 10.221.193.48/28
  nodeSelector: {}
$ kubectl apply -f external_ip_pool.yaml
```

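What about the CIDR and IP range used in the configuration? In our setup, the automatically created NSX segment for the cluster VMs uses the range 10.221.193.48/28, so the start/end entry of the ExternalIPPool uses the last two (unused) IP addresses in that range, 10.221.193.61 and 10.221.193.62. Keep in mind that the entries in ipRanges are combined into one pool: the additional cidr entry makes the rest of 10.221.193.48/28, including the node addresses, allocatable as well, so drop it if the pool should contain only those two addresses.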

Important: The standard approach is to use Egress IP addresses from the same range as the cluster VMs. Since these Egress IPs are not managed by vSphere 8 with Tanzu, be careful not to scale the cluster up into this range; otherwise you will end up with duplicate IP addresses. Technically, you could use other ExternalIPPool ranges and let Antrea announce the settings and changes via BGP. But this is a story for another time.
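To catch duplicate addresses before they happen, you can compare the pool against the node addresses. The sketch below expands the ipRanges from external_ip_pool.yaml (each entry is either a cidr or a start/end pair, and all entries are merged into one set) and intersects them with the one node IP shown in this post, 10.221.193.52; a real check would list every node's InternalIP. The function name is hypothetical.

```python
import ipaddress


def pool_addresses(ip_ranges: list[dict[str, str]]) -> set:
    """Expand ExternalIPPool-style ipRanges (cidr or start/end entries) into one set."""
    addresses = set()
    for entry in ip_ranges:
        if "cidr" in entry:
            # A CIDR entry contributes every address in the network.
            addresses.update(ipaddress.ip_network(entry["cidr"]))
        else:
            start = int(ipaddress.ip_address(entry["start"]))
            end = int(ipaddress.ip_address(entry["end"]))
            addresses.update(ipaddress.ip_address(i) for i in range(start, end + 1))
    return addresses


# ipRanges as written in external_ip_pool.yaml.
ip_ranges = [
    {"start": "10.221.193.61", "end": "10.221.193.62"},
    {"cidr": "10.221.193.48/28"},
]
# Only the node IP shown in this post; list every node's InternalIP in practice.
node_ips = {ipaddress.ip_address("10.221.193.52")}

for label, ranges in (("both entries", ip_ranges), ("start/end only", ip_ranges[:1])):
    pool = pool_addresses(ranges)
    clashes = sorted(str(ip) for ip in pool & node_ips)
    print(f"{label}: {len(pool)} addresses, clashes with nodes: {clashes}")
```

With both entries, the pool has 16 addresses and the overlap contains 10.221.193.52; with only the start/end entry, the pool is just 10.221.193.61 and 10.221.193.62 and the overlap is empty.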

Let’s continue with our configuration. We create two Egress resources: one for Pods labeled app: web in the namespace prod and another one for Pods labeled app: web in the namespace staging.
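Each Egress applies to Pods that match its podSelector and run in a Namespace matching its namespaceSelector. The sketch below models these matchLabels semantics for the two Egress resources (matchExpressions are not modeled). It relies on the kubernetes.io/metadata.name label that Kubernetes sets on every Namespace, which is also visible in the describe output for tkg above. The helper names are hypothetical.

```python
# Selectors copied from egress-prod-web.yaml and egress-staging-web.yaml.
EGRESSES = {
    "egress-prod-web": {
        "namespaceSelector": {"kubernetes.io/metadata.name": "prod"},
        "podSelector": {"app": "web"},
    },
    "egress-staging-web": {
        "namespaceSelector": {"kubernetes.io/metadata.name": "staging"},
        "podSelector": {"app": "web"},
    },
}


def matches(match_labels: dict[str, str], labels: dict[str, str]) -> bool:
    # matchLabels semantics: every key/value pair must be present on the object.
    return all(labels.get(key) == value for key, value in match_labels.items())


def egresses_for_pod(namespace: str, pod_labels: dict[str, str]) -> list[str]:
    # Each Namespace carries the label kubernetes.io/metadata.name=<name>.
    ns_labels = {"kubernetes.io/metadata.name": namespace}
    return [name for name, sel in EGRESSES.items()
            if matches(sel["namespaceSelector"], ns_labels)
            and matches(sel["podSelector"], pod_labels)]


print(egresses_for_pod("prod", {"app": "web"}))
```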

```
$ cat egress-prod-web.yaml
apiVersion: crd.antrea.io/v1alpha2
kind: Egress
metadata:
  name: egress-prod-web
spec:
  appliedTo:
    namespaceSelector:
      matchLabels:
        kubernetes.io/metadata.name: prod
    podSelector:
      matchLabels:
        app: web
  externalIPPool: external-ip-pool
$ cat egress-staging-web.yaml
apiVersion: crd.antrea.io/v1alpha2
kind: Egress
metadata:
  name: egress-staging-web
spec:
  appliedTo:
    namespaceSelector:
      matchLabels:
        kubernetes.io/metadata.name: staging
    podSelector:
      matchLabels:
        app: web
  externalIPPool: external-ip-pool
$ kubectl apply -f egress-prod-web.yaml
$ kubectl apply -f egress-staging-web.yaml
$ kubectl get egress
NAME                 EGRESSIP        AGE   NODE
egress-prod-web      10.221.193.61   43h   cluster-3-node-pool-01-hgxk2-768974f9bc-l4fdq
egress-staging-web   10.221.193.62   43h   cluster-3-node-pool-01-hgxk2-768974f9bc-l4fdq
$ export NODE=cluster-3-node-pool-01-hgxk2-768974f9bc-l4fdq
$ kubectl get node $NODE -o jsonpath={.status.addresses[?\(@.type==\"InternalIP\"\)].address}
10.221.193.52
```

This looks good so far. Let’s create a jumpbox following the vSphere 8 documentation to access the above cluster node cluster-3-node-pool-01-hgxk2-768974f9bc-l4fdq with IP address 10.221.193.52 so we can see the new interface antrea-egress0 created by Antrea with both IP addresses (10.221.193.61/32 and 10.221.193.62/32) attached to it.

```
$ kubectl config use-context tkg
$ cat jumpbox.yaml
apiVersion: v1
kind: Pod
metadata:
  name: jumpbox
  namespace: tkg
spec:
  containers:
  - image: photon:3.0
    name: jumpbox
    command: [ "/bin/bash", "-c", "--" ]
    args:
    - yum install -y openssh-server; mkdir /root/.ssh; cp /root/ssh/ssh-privatekey /root/.ssh/id_rsa; chmod 600 /root/.ssh/id_rsa; while true; do sleep 30; done;
    volumeMounts:
    - mountPath: "/root/ssh"
      name: ssh-key
      readOnly: true
    resources:
      requests:
        memory: 2Gi
  volumes:
  - name: ssh-key
    secret:
      secretName: cluster-3-ssh
$ kubectl apply -f jumpbox.yaml
$ kubectl exec -it jumpbox -- ssh vmware-system-user@10.221.193.52 ip address show antrea-egress0
6: antrea-egress0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
    link/ether 7a:e1:c1:77:ef:62 brd ff:ff:ff:ff:ff:ff
    inet 10.221.193.61/32 scope global antrea-egress0
       valid_lft forever preferred_lft forever
    inet 10.221.193.62/32 scope global antrea-egress0
       valid_lft forever preferred_lft forever
```
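If you want to check the interface programmatically rather than by eye, the addresses can be extracted from the ip output. The sketch below parses the text shown above with a regular expression; the sample string is copied from that output, and the function name is hypothetical.

```python
import ipaddress
import re

# Output of `ip address show antrea-egress0` as shown above.
SAMPLE = """\
6: antrea-egress0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
    link/ether 7a:e1:c1:77:ef:62 brd ff:ff:ff:ff:ff:ff
    inet 10.221.193.61/32 scope global antrea-egress0
       valid_lft forever preferred_lft forever
    inet 10.221.193.62/32 scope global antrea-egress0
       valid_lft forever preferred_lft forever
"""

# "inet" followed by spaces or tabs, so inet6 lines are ignored.
INET_RE = re.compile(r"^[ \t]*inet[ \t]+(\S+)", re.MULTILINE)


def egress_addresses(ip_output: str) -> list:
    """Return the IPv4 interface addresses listed in `ip address show` output."""
    return [ipaddress.ip_interface(addr) for addr in INET_RE.findall(ip_output)]


for iface in egress_addresses(SAMPLE):
    print(iface.ip, iface.network.prefixlen)
```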

Next, we create the two namespaces prod and staging and deploy a simple web application into each of them.

```
$ cat web.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: gcr.io/kubernetes-development-244305/nginx:latest
$ kubectl create clusterrolebinding default-tkg-admin-privileged-binding \
    --clusterrole=psp:vmware-system-privileged --group=system:authenticated
$ kubectl create ns prod
$ kubectl create ns staging
$ kubectl apply -f web.yaml -n prod
$ kubectl apply -f web.yaml -n staging
$ kubectl get pods -n prod
NAME                   READY   STATUS    RESTARTS   AGE
web-58df64bd4b-hk7nw   1/1     Running   0          19s
web-58df64bd4b-m8kqw   1/1     Running   0          19s
$ kubectl get pods -n staging
NAME                   READY   STATUS    RESTARTS   AGE
web-58df64bd4b-kr4zd   1/1     Running   0          38s
web-58df64bd4b-n5r24   1/1     Running   0          38s
```

To verify that the web Pods in each namespace use different Egress addresses, let’s create a simple web application on the VM showip.tanzu.lab, outside of NSX, that returns the IP address of the client accessing it.

```
$ ssh showip.tanzu.lab
$ cd showfastip
$ cat Dockerfile
FROM tiangolo/uvicorn-gunicorn-fastapi:python3.9
COPY ./app /app
$ cat app/main.py
from fastapi import FastAPI, Request
from fastapi.responses import HTMLResponse

app = FastAPI()

@app.get("/", response_class=HTMLResponse)
async def index(request: Request):
    client_host = request.client.host
    return "Requester IP: " + client_host + "\n"
$ docker build -t showfastip .
$ docker run -d -p 80:80 showfastip
$ exit
```
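If you prefer not to pull the FastAPI base image, an equivalent echo service can be written with only the Python standard library. This is a swapped-in alternative, not the setup used in this post: it answers every GET with the same Requester IP line, taken from the client address of the TCP connection. Port 80 and the helper names are assumptions.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ShowIPHandler(BaseHTTPRequestHandler):
    """Reply to every GET with the client's source address."""

    def do_GET(self):
        body = f"Requester IP: {self.client_address[0]}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def make_server(host: str = "0.0.0.0", port: int = 80) -> ThreadingHTTPServer:
    return ThreadingHTTPServer((host, port), ShowIPHandler)


def self_test() -> str:
    """Serve on an ephemeral localhost port, fetch '/', then shut down."""
    server = make_server("127.0.0.1", 0)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_address[1]}/"
        # Empty ProxyHandler so an HTTP proxy from the environment is not used.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(url, timeout=5) as resp:
            return resp.read().decode()
    finally:
        server.shutdown()
        server.server_close()
```

On showip.tanzu.lab you would run `make_server().serve_forever()` (binding port 80 usually requires root); `self_test()` only exercises the handler locally.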

And now the final test, showing that the pods in the different namespaces use different Egress addresses to access our web application.

```
$ kubectl -n prod exec web-58df64bd4b-hk7nw -- curl -s showip.tanzu.lab
Requester IP: 10.221.193.61
$ kubectl -n staging exec web-58df64bd4b-kr4zd -- curl -s showip.tanzu.lab
Requester IP: 10.221.193.62
```
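To turn this into a repeatable check, the Requester IP lines can be compared with the EGRESSIP column from `kubectl get egress`. The sketch below uses the outputs shown in this post as hard-coded strings; in practice you would capture both commands' output (for example with subprocess). The function names are hypothetical.

```python
import re

# Outputs copied from this post.
EGRESS_TABLE = """\
NAME                 EGRESSIP        AGE   NODE
egress-prod-web      10.221.193.61   43h   cluster-3-node-pool-01-hgxk2-768974f9bc-l4fdq
egress-staging-web   10.221.193.62   43h   cluster-3-node-pool-01-hgxk2-768974f9bc-l4fdq
"""
RESPONSES = {
    "egress-prod-web": "Requester IP: 10.221.193.61\n",     # curl from a Pod in prod
    "egress-staging-web": "Requester IP: 10.221.193.62\n",  # curl from a Pod in staging
}


def egress_ips(table: str) -> dict[str, str]:
    """Map Egress name -> EGRESSIP from `kubectl get egress` output."""
    rows = table.strip().splitlines()[1:]  # drop the header line
    return {cols[0]: cols[1] for cols in (row.split() for row in rows)}


def requester_ip(body: str) -> str:
    match = re.search(r"Requester IP:\s*(\S+)", body)
    if match is None:
        raise ValueError(f"unexpected response: {body!r}")
    return match.group(1)


expected = egress_ips(EGRESS_TABLE)
observed = {name: requester_ip(body) for name, body in RESPONSES.items()}
for name in expected:
    status = "OK" if observed.get(name) == expected[name] else "MISMATCH"
    print(f"{name}: expected {expected[name]}, observed {observed.get(name)} -> {status}")
```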

And here we are: Antrea Egress successfully implemented on vSphere 8 with Tanzu using the AntreaConfig CRD.

See you next time!

Got any questions? Don’t hesitate to leave them in the comments or reach out to us @VMwareNSX on Twitter or LinkedIn.
