As you modernize your applications, are you struggling to find a single viable solution that meets all your app delivery requirements? Do you feel stuck with traditional appliance-based, open-source, or public cloud L4 and L7 load balancers that are not equipped to support modern, microservices-based application architectures, which require robust security, elastic autoscaling, integrated container services, and full-stack automation? Let me guide you through the following questions step by step:

Step 1: How have your applications and infrastructure evolved over the years?

Step 2: What are ingress services, and how do they help with DevSecOps?

Step 3: What are the challenges of deploying legacy, open-source, or public cloud ingress services solutions?

Step 4: What is the one single ingress services solution that meets all your app delivery requirements in containers, VMs, and bare metal?

Let’s begin.

How have your applications and infrastructure evolved over the years?

The traditional 3-tier application model is composed of web, app, and database tiers. Over the last 10 years, modern apps have become far more distributed. App architectures have moved to microservices, and deployment environments now span bare metal, VMs, and containers across on-prem and cloud infrastructure.

As you modernize your applications and infrastructure, there are three major questions you should ask yourself:

- How do you connect distributed apps that run on different architectures and across different geos, sites, and clusters?

- How do you simplify operations in your evolving infrastructure?

- How do you bring your dev, operations, and security teams together to achieve DevSecOps?

What do we mean by ingress services?

The following are the capabilities of “ideal” ingress services that you need to deploy modern, containerized applications:

Traffic Management: For connectivity, you need full traffic management capabilities at the ingress point, so that you can do local and global load balancing across multiple clusters and sites. Integrated DNS and IPAM services are also needed, so that you do not have to stitch a solution together yourself.

Security: You need an intelligent web application firewall at the ingress point to keep cluster access safe and secure. Other capabilities, such as app rate limiting, authentication, allow-lists, and deny-lists, are required to help detect and mitigate DDoS attacks.

Observability: You need an integrated solution that sits directly in the path between users and your backend applications, so that it can see every transaction and provide end-to-end visibility.
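For readers newer to Kubernetes, it may help to see what an ingress rule looks like at the resource level. The sketch below builds a standard `networking.k8s.io/v1` Ingress manifest as a Python dictionary and serializes it to JSON, which `kubectl apply -f` accepts alongside YAML. The resource name, hostname, backend service, and ingress class are hypothetical placeholders, not values from any Avi or Tanzu deployment.

```python
import json


def build_ingress(name, host, service_name, service_port, ingress_class):
    """Return a Kubernetes networking.k8s.io/v1 Ingress manifest as a dict."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which ingress controller should act on this resource.
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # Route every path under "/" to one backend Service.
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


# All values below are hypothetical placeholders for illustration.
manifest = build_ingress(
    name="shop-ingress",
    host="shop.example.com",
    service_name="shop-frontend",
    service_port=8080,
    ingress_class="example-ingress-class",
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

The ingress controller that owns the named class is what turns this declarative rule into actual load balancing, which is where the traffic management, security, and observability capabilities described above come into play.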

In your dev and test lab environment, you have probably deployed many disparate tools, ranging from load balancers and ingress services to DNS/IPAM services, service mesh, and WAF. That works fine if you are only experimenting with and evaluating components that are stitched together to meet your app's needs. However, to bring your lab Kubernetes clusters to production-ready deployment, you must consider three important aspects: app connectivity, app security, and observability.

Platform teams that support Kubernetes deployments are increasingly required to apply DevSecOps principles. Avi supports these principles:

Avi helps the Dev team speed up development and delivery of containerized workloads. Developers can self-service provision services in minutes instead of waiting on an IT ticket that can take weeks. Because Avi’s data plane is elastic and scalable, developers can easily scale service engines out or in to support ephemeral container workloads.

Avi enables the Sec team to secure the container lifecycle. The integrated platform provides application security that matches the granularity and distributed nature of containers. Perimeter security such as traditional firewalls is simply not enough. The consolidated networking and security services provide a central point of control at the ingress point, stopping attacks before they reach or infiltrate the Kubernetes clusters. A comprehensive security stack includes DDoS protection, rate limiting, IP reputation, SSL/TLS offload and encryption, and signature-based policies against common vulnerabilities such as SQL injection (SQLi) and cross-site scripting (XSS).

Avi makes it easier for the Ops team to operate containers and clusters across clouds. Instead of a wall of knobs from disparate products, it offers a consolidated, centrally managed fabric that deploys multiple services on a single platform spanning VMs and containers. Avi provides end-to-end observability into applications and the network, allowing DevOps teams to troubleshoot and identify issues easily.
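Of the security controls listed above, rate limiting is the easiest to illustrate in a few lines. The following is a minimal sketch of a token bucket, one common way to implement rate limiting at an ingress point. It is a generic illustration and not a description of how Avi implements the feature; the class name, parameters, and the injectable `clock` argument are assumptions made for this example.

```python
import time


class TokenBucket:
    """Generic token-bucket rate limiter (illustrative, not vendor-specific)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate                # tokens added per second
        self.capacity = capacity        # maximum burst size
        self.tokens = float(capacity)   # start with a full bucket
        self._clock = clock             # injectable so the limiter can be tested
        self._last = clock()

    def allow(self, cost=1.0):
        """Return True if the request may pass, consuming `cost` tokens."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# One bucket per client, keyed by source address (hypothetical usage).
buckets = {}


def allow_request(client_ip, rate=5.0, capacity=10):
    bucket = buckets.setdefault(client_ip, TokenBucket(rate, capacity))
    return bucket.allow()
```

Keeping one bucket per client lets a burst from a single source be throttled without affecting other users, which is the behavior you generally want from rate limiting placed in front of a cluster.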

What are the challenges of deploying legacy, open-source, or public cloud ingress services solutions?

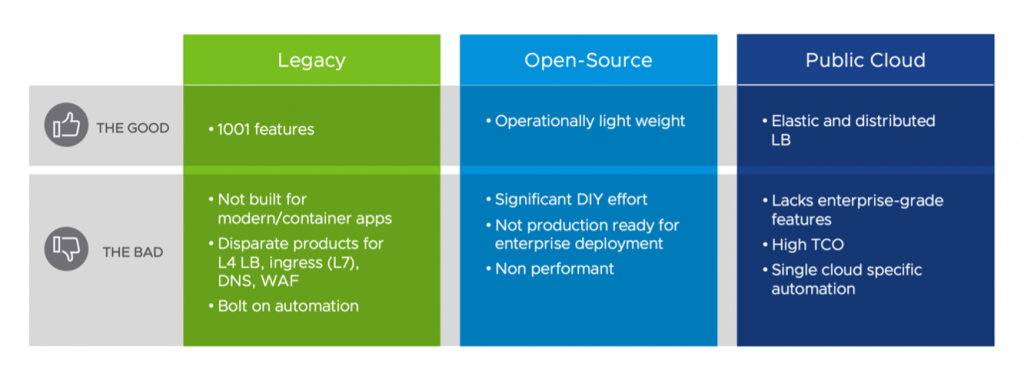

When you move Kubernetes clusters to production, L4 and L7 deployments that rely on legacy, open-source, or public cloud ingress services solutions face several challenges (see Figure 1).

With disparate products, often from different vendors, you have to train your teams to use and operate separate L4 and L7 load balancing, WAF, and DNS/IPAM solutions. You have to stitch the solution together manually, which makes operations very complex and end-to-end visibility into app traffic hard to achieve. Legacy solutions lack built-in REST APIs, which creates a huge automation bottleneck. As a result, it is daunting for the platform or DevSecOps team to be confident that their Kubernetes clusters can withstand real-world production traffic.

Public cloud ingress services offer limited enterprise-grade functionality, with minimal support for advanced features and custom scripting. Supporting a large-scale production deployment becomes operationally challenging, which results in a high TCO.

What is the one single ingress services solution that best fits all your modern and containerized application requirements?

VMware offers the modern app connectivity solution composed of two products:

VMware Tanzu is a portfolio of Kubernetes solutions that are tested and proven for enterprises in production environments. NSX Advanced Load Balancer (Avi Networks) integrates with various components in the networking layer and provides full capabilities in the Tanzu Advanced edition. It brings the shortest path to production-ready Kubernetes clusters and consolidates L4-7 services on a single scalable platform.

Together with Tanzu, the runtime environment for deploying and running Kubernetes clusters, Avi provides consolidated full-stack container services, including networking, security, and application services, from a single vendor. At its core, Avi helps simplify the operations of a certified Kubernetes distribution at scale and with enterprise features.

Across the entire container lifecycle, Tanzu ensures resilient and secure connectivity of containers within and across clusters. The integration with Avi further bridges the support for both virtual machines and containers with a single solution. It also helps better secure, scale, and observe microservices deployed in production Kubernetes clusters.

The joint solution can also be used with existing data center tools and workflows to give developers secure, self-serve access to conformant Kubernetes clusters in VMware private clouds. This makes it possible to run the same Kubernetes distribution across data centers, public cloud, and edge, giving all development teams a consistent, secure experience.

NSX Advanced Load Balancer (Avi Networks) Ingress Services

Avi consolidates all app and ingress services onto a single platform. You now have one integrated solution that provides L4 and L7 load balancing, ingress services, WAF, GSLB, and DNS/IPAM services, all in one place (see Fig 3). For full connectivity, Avi also integrates with other VMware solutions such as Tanzu Service Mesh (TSM) and Antrea (VMware’s open-source CNI plugin). Avi supports all Kubernetes distributions, including OpenShift and VMware Tanzu. You can deploy Avi’s integrated solution in any environment, whether an on-prem data center or multi-cloud.

Avi is a software-defined architecture. Unlike traditional hardware appliances, Avi is a 100% software solution that has a separate control and data plane. You can deploy your apps anywhere through an app service fabric – whether your existing apps are on VMs or bare metal or you are moving to containerized Kubernetes clusters.

Key technical benefits offered are:

Central Orchestration: With a controller-based architecture, you get centralized control in the control plane. Central orchestration lets you define policies across the multi-cloud environment that ensure consistency, connectivity, and accountability across the deployment.

Elasticity: Workload demand in a production environment is highly unpredictable. The ability to scale out automatically, and to scale back in when demand is low, is therefore critical for both high availability and cost savings.

Resilience: The controller centrally manages and automatically provisions Avi service engines, so it can quickly spin up a new service engine when one goes down. You can then shift your workloads to a different service engine without disruption.

Automation: A single endpoint for API integrations provides cloud-native automation. Northbound API integrations enable single-point management and operations with third-party tools such as Splunk, vRA, and vRO. The Avi Controller also integrates via REST APIs with Kubernetes platforms, including Google Kubernetes Engine (GKE), VMware Pivotal Container Service (PKS), Red Hat OpenShift, Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), VMware Tanzu Kubernetes Grid (TKG), and more.

Analytics/Observability: Avi collects a broad range of analytics and logs and feeds them into the central controller for learning, analysis, and decision automation. Many operations can be automated, so that when a cluster needs to scale out or burst into a different cloud environment, the need is detected and handled automatically, with no manual intervention.
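To make the elasticity and analytics-driven automation described above more concrete, here is a minimal sketch of a proportional scaling decision: given the current number of service engines and their average utilization, it computes how many engines would bring utilization back toward a target. The rule mirrors the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler; it is not Avi's actual algorithm, and the function name, thresholds, and limits are assumptions chosen for illustration.

```python
def desired_engine_count(current, avg_utilization_pct, target_pct=60,
                         min_engines=2, max_engines=10):
    """Return how many engines are needed to approach the target utilization.

    Utilization values are whole-number percentages so the arithmetic stays
    in integers and avoids floating-point rounding surprises.
    """
    if current <= 0:
        return min_engines
    # Integer ceiling of current * (observed / target).
    wanted = (current * avg_utilization_pct + target_pct - 1) // target_pct
    # Clamp to the configured pool size limits.
    return max(min_engines, min(max_engines, wanted))


# Hypothetical readings: 4 engines under rising, then falling, load.
print(desired_engine_count(4, 90))   # scale out
print(desired_engine_count(4, 30))   # scale in, bounded by min_engines
```

In practice a controller would evaluate a rule like this on a rolling window of metrics and add a cooldown period, so that brief spikes do not cause engines to be created and destroyed repeatedly.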

In Summary

VMware’s NSX Advanced Load Balancer (Avi Networks) provides a path to modernizing your applications, delivering universal connectivity and security with simplified operations. Unlike legacy, open-source, or public cloud ingress services solutions, the Avi ingress services solution offers one integrated platform for L4 and L7 app services that is easily deployable across different Kubernetes architectures in hybrid (on-prem/multi-cloud) environments.

To learn more, use the following resources:

Simplify Operations for Production Ready Kubernetes with Avi and Tanzu Solution Brief

Watch Application Delivery How-to Videos for Containers use case

Deliver Elastic Kubernetes Services and Ingress Controller Whitepaper