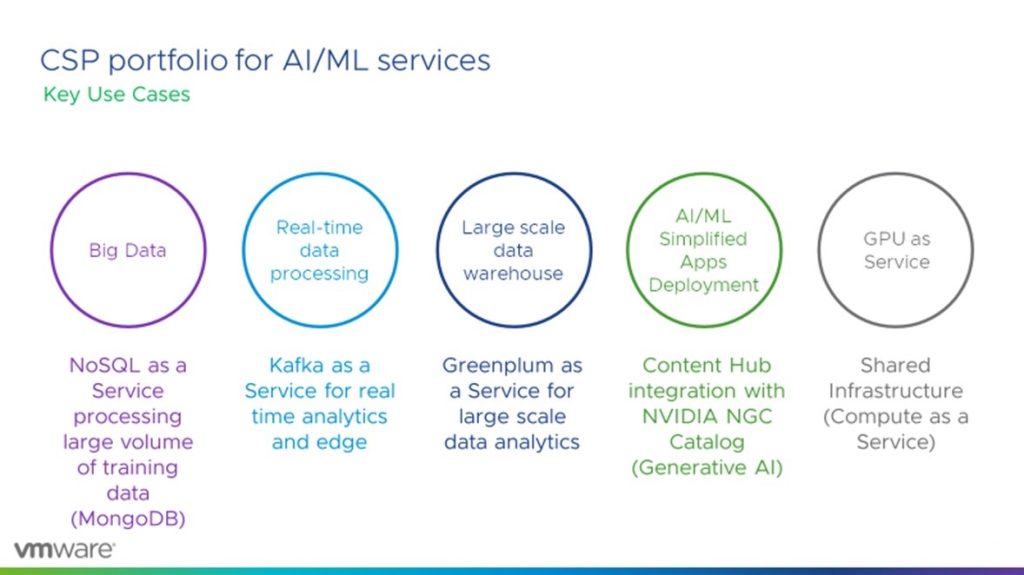

One of the most significant announcements at VMware Explore 2023 Las Vegas came during the general session: VMware Private AI (refer to the blog on the announcement here). VMware Private AI is an architectural approach for generative AI services that lets enterprises deploy a range of open-source and commercial AI solutions while better protecting the privacy and control of corporate data through integrated security and management. This is fantastic news for Cloud Services Providers looking to deliver the latest AI services to their tenants. 451 Research predicts that generative AI revenue will grow from $3.7B in 2023 to $36.36B in 2028, a compound annual growth rate (CAGR) of 57.9%.¹

An extensive network of partners provides joint solutions with VMware, and vSphere and VMware Cloud Foundation provide a scalable, high-performance infrastructure platform with GPU integrations. Together, they let enterprises address a variety of use cases, such as large language models (LLMs) for code generation, support center operations, IT operations automation, and more. Our Cloud Services Provider partners can take advantage of VMware Private AI using the same platform and services as our enterprise customers, offering true multi-tenant, AI/ML-ready services to their tenants across solutions ranging from databases and data lakes to NVIDIA GPU services. Let’s explore some of the capabilities available today:

Databases for Machine Learning

Machine learning workloads need databases that are highly scalable, secure, and resilient, and that make data access efficient and simple. NoSQL databases such as MongoDB are well suited to this because they scale out horizontally, adding nodes as data grows. Traditional relational SQL databases, by contrast, are typically scaled vertically, by adding CPU and memory to a single host. NoSQL databases are also schema-less, which allows the data design to evolve as machine learning architectures shift with the needs of the business. Cloud Services Providers can offer MongoDB through VMware Cloud Director Data Services Extension, which supports both the MongoDB Community and MongoDB Enterprise editions. Because the MongoDB service runs on Kubernetes, orchestrated by VMware Cloud Director Container Service Extension and Tanzu Kubernetes Grid, partners can deliver a highly scalable, centrally managed, and more secure database service for AI/ML workloads to their tenants, with benefits for access security, data availability, and data protection. Check out this Feature Friday episode to learn how this solution benefits your tenants.
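To make the scale-out point concrete, here is a minimal, self-contained Python sketch of hash-based sharding: each document is routed to a shard by hashing a shard key, so adding shards spreads the same data across more nodes. It also reflects the schema-less design mentioned above, since documents in one collection carry different fields. The helper names and sample documents are hypothetical; the sketch does not use the MongoDB driver and does not model how MongoDB's own sharding works internally.

```python
import hashlib
from collections import defaultdict

def shard_for(key: str, num_shards: int) -> int:
    """Route a shard-key value to a shard index with a stable hash (hypothetical helper)."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards

def distribute(docs: list[dict], shard_key: str, num_shards: int) -> dict[int, list[dict]]:
    """Group documents by the shard their shard key hashes to."""
    shards: dict[int, list[dict]] = defaultdict(list)
    for doc in docs:
        shards[shard_for(str(doc[shard_key]), num_shards)].append(doc)
    return shards

# Schema-less documents: only every third training sample carries an embedding field.
docs = [
    {"_id": f"sample-{i}", "label": i % 2, **({"embedding": [0.1, 0.2]} if i % 3 == 0 else {})}
    for i in range(1000)
]

# Scaling out: the same 1,000 documents are spread over more shards as nodes are added.
for num_shards in (2, 4):
    shards = distribute(docs, "_id", num_shards)
    print(num_shards, "shards ->", sorted(len(v) for v in shards.values()))
```

Plain modulo routing moves most documents whenever the shard count changes. Production systems such as MongoDB instead split data into chunks (using hashed or ranged shard keys) and rebalance those chunks between shards, which this sketch leaves out.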

Improving Real-Time Analytics and Event Streaming Pipelines for ML

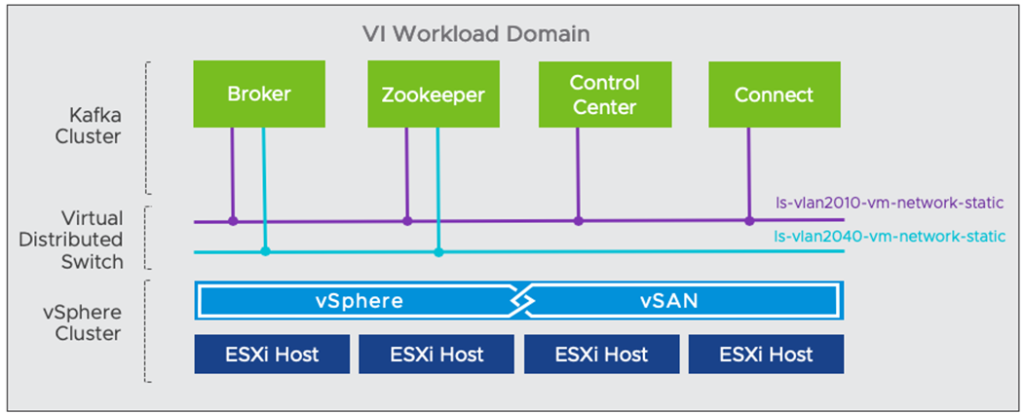

Partners can use VMware Cloud Director and VMware Cloud Foundation to offer Kafka Streaming as a Service, giving their customers highly scalable streaming for modern application requirements. Kafka can handle trillions of events per day, whether messages are passing between microservices or streaming data is updating a training model in real time. With RabbitMQ support already available through our Sovereign Cloud announcements at VMware Explore 2022, partners have a greater choice of messaging and streaming services to deploy based on the needs of their tenants’ workloads.
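Kafka suits both microservice messaging and real-time model updates because of its partitioned, append-only log: records with the same key land in the same partition, so their relative order is preserved, and each consumer tracks its own read offset. The following is a minimal in-memory Python model of that idea, not the Kafka client API. The `Topic` class is hypothetical, and it uses CRC32 as a stand-in for the murmur2 hash that Kafka's default partitioner applies to record keys.

```python
import zlib
from dataclasses import dataclass, field

@dataclass
class Topic:
    """Toy in-memory topic: each partition is an append-only list of (key, value) records."""
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self) -> None:
        self.partitions = [[] for _ in range(self.num_partitions)]

    def produce(self, key: str, value: dict) -> tuple[int, int]:
        """Append a record to the partition chosen by hashing its key; return (partition, offset)."""
        p = zlib.crc32(key.encode("utf-8")) % self.num_partitions
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def consume(self, partition: int, offset: int, max_records: int = 10) -> tuple[list, int]:
        """Read up to max_records starting at offset; return the batch and the next offset."""
        batch = self.partitions[partition][offset:offset + max_records]
        return batch, offset + len(batch)

# Producer: three sensors each emit five readings, interleaved in time.
topic = Topic(num_partitions=3)
for reading in range(5):
    for sensor in ("sensor-a", "sensor-b", "sensor-c"):
        topic.produce(sensor, {"sensor": sensor, "reading": reading})

# Consumer: read every partition in small batches, advancing its offset after each batch.
seen: dict[str, list[int]] = {}
for p in range(topic.num_partitions):
    offset = 0
    while True:
        batch, offset = topic.consume(p, offset, max_records=4)
        if not batch:
            break
        for key, value in batch:
            seen.setdefault(key, []).append(value["reading"])

print(seen)  # each sensor's readings come back in the order they were produced
```

Ordering is guaranteed per key, not across the whole topic, which is what lets partitions be spread across brokers and consumed in parallel.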

Data lake storage for LLMs

The rise of AI has brought a shift to large language models (LLMs), which have revolutionized AI capabilities in natural language understanding and generation (a great explanation of this can be found here). LLMs such as OpenAI’s GPT-3 and GPT-4 are trained on vast amounts of data and can produce human-like text and code, as demonstrated in ChatGPT. Being able to handle and efficiently sift through data to answer queries is critical to the success of LLMs. VMware Greenplum helps address this requirement with its massively parallel processing (MPP) architecture built on PostgreSQL, providing a highly scalable, high-performance data repository for large-scale analytics and processing. This distributed, scale-out architecture enables Greenplum to handle large volumes of data and perform complex analytical tasks on structured, semi-structured, and unstructured data. With integrations for many data sources and real-time processing through its streaming capabilities, a provider can deploy Greenplum so that tenants can connect disparate sources and gain real-time analysis and insights. Read more about the capabilities of VMware Greenplum in this blog.
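The MPP pattern behind Greenplum can be illustrated in a few lines: rows are spread across segments by a distribution key, each segment aggregates only its local rows, and a coordinator combines the partial results. The Python below is a conceptual sketch of that scatter/gather flow, not Greenplum's implementation; the segment count, column names, and sample rows are hypothetical. In Greenplum itself, the distribution key is declared on the table with a `DISTRIBUTED BY` clause.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

NUM_SEGMENTS = 4  # hypothetical segment count

def segment_for(dist_key: str) -> int:
    """Pick a segment by hashing the distribution key."""
    return zlib.crc32(dist_key.encode("utf-8")) % NUM_SEGMENTS

def load(rows: list[dict]) -> list[list[dict]]:
    """Scatter rows across segments by their customer_id distribution key."""
    segments: list[list[dict]] = [[] for _ in range(NUM_SEGMENTS)]
    for row in rows:
        segments[segment_for(row["customer_id"])].append(row)
    return segments

def partial_agg(segment_rows: list[dict]) -> dict[str, tuple[float, int]]:
    """Runs per segment: (sum, count) of amount per region, over local rows only."""
    acc: dict[str, list] = defaultdict(lambda: [0.0, 0])
    for r in segment_rows:
        acc[r["region"]][0] += r["amount"]
        acc[r["region"]][1] += 1
    return {region: (s, c) for region, (s, c) in acc.items()}

def avg_by_region(segments: list[list[dict]]) -> dict[str, float]:
    """Coordinator: run the partial aggregates on all segments, then merge sums and counts."""
    with ThreadPoolExecutor(max_workers=NUM_SEGMENTS) as pool:
        partials = list(pool.map(partial_agg, segments))
    totals: dict[str, list] = defaultdict(lambda: [0.0, 0])
    for part in partials:
        for region, (s, c) in part.items():
            totals[region][0] += s
            totals[region][1] += c
    return {region: s / c for region, (s, c) in totals.items()}

rows = [
    {"customer_id": f"c{i}", "region": "emea" if i % 2 else "amer", "amount": float(i)}
    for i in range(100)
]
print(avg_by_region(load(rows)))
```

Segments return sums and counts rather than averages because averaging per-segment averages gives the wrong answer when segments hold different numbers of rows. The thread pool only stands in for segment hosts; it shows the shape of the computation, not a real speed-up.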

Content Hub Integrates NVIDIA NGC AI catalog for faster AI Application Development

VMware announced the all-new Content Hub for Cloud Services Providers as part of the VMware Cloud Director 10.5 release earlier this year. Content Hub improves how partners manage and expose the catalog of VM- and container-based software components that their tenants want to use while building modern applications on the partner’s cloud. With Content Hub, partners integrate multiple sources, such as VMware Marketplace, Helm chart repositories, and VMware Application Catalog, to simplify how they deliver software components to their tenants’ developer teams, which in turn accelerates development on, and usage of, the partner’s infrastructure. Partners no longer have to configure and maintain App Launchpad to deliver software catalog content. We are also happy to announce that Content Hub now integrates with NVIDIA’s NGC catalog, a repository of AI models and software that helps developers integrate AI models into their architectures and build AI-based products faster. With this repository now available for partners to offer to their customers, Cloud Services Providers can continue to give tenants access to cutting-edge application software without compromising security or ease of use. To learn how to add a catalog to Content Hub, check out our blog here.
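Conceptually, Content Hub presents software from several upstream sources as one catalog. The short Python sketch below models that aggregation under stated assumptions: the `CatalogItem` type, the source labels, and the sample entries are all hypothetical, and nothing here reflects the VMware Cloud Director or Content Hub APIs.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CatalogItem:
    name: str
    kind: str    # "vm" or "container"
    source: str  # upstream catalog the item was imported from

def build_catalog(sources: dict[str, list[tuple[str, str]]]) -> dict[str, list[CatalogItem]]:
    """Merge (name, kind) entries from every source into one view keyed by item name,
    keeping each entry's origin so tenants can see where it came from."""
    catalog: dict[str, list[CatalogItem]] = {}
    for source, entries in sources.items():
        for name, kind in entries:
            catalog.setdefault(name, []).append(CatalogItem(name, kind, source))
    return catalog

# Hypothetical upstream sources and entries, for illustration only.
sources = {
    "helm-charts": [("mongodb", "container"), ("kafka", "container")],
    "vm-marketplace": [("mongodb", "vm"), ("ubuntu-server", "vm")],
    "ngc": [("llm-inference", "container")],
}

catalog = build_catalog(sources)
for name, items in sorted(catalog.items()):
    print(name, [(item.kind, item.source) for item in items])
```

Keying the merged view by item name lets the same software, offered as a VM in one source and a container in another, appear as a single entry with both options.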

VMware Cloud Foundation platform enhancements for AI/ML

Support for VMware Cloud Foundation (VCF) 5.0 for our Cloud Services Providers, released this past summer, delivered essential multi-tenant capabilities that benefit our partners, including new isolated SSO workload domains and several scalability, performance, and management updates. For example, partners can now run up to 24 isolated workload domains, using infrastructure resources more efficiently across the IaaS offerings they provide to their customers. The release also includes improvements specifically for AI/ML workloads. Let’s review some of those capabilities:

AI-Ready Enterprise Platform for Cloud Services Providers

Cloud Services Providers can now harness the latest GPU virtualization innovations from NVIDIA and deploy them for tenant AI and ML workloads. With support for the NVIDIA AI Enterprise suite, including the cloud-native NVIDIA NeMo framework, and for NVIDIA Ampere A100 and A30 GPUs delivered through our technology partners, VMware Cloud Foundation can run customers’ latest AI/ML workloads. These VCF 5.0 capabilities let partners extend their software-defined private or sovereign cloud platforms into flexible, easily scalable AI-ready infrastructure, giving customers the privacy to run AI services adjacent to their data, the performance to confidently run scale-out LLMs, and the simplicity to achieve rapid time to value from their AI deployments.

Performance and security with DPUs

With the new vSphere Distributed Services Engine (DSE) in VCF 5.0, partners can modernize their data center infrastructure by offloading full-stack infrastructure functions from traditional CPUs to Data Processing Units (DPUs). A DPU delivers high-performance data and network processing in a system-on-a-chip (SoC) architecture, which allows these functions to move off the x86 host. How is this relevant to a customer’s workload? With infrastructure services such as networking offloaded to the DPU, the partner can see improved network bandwidth and reduced latency for specialized workloads while freeing x86 capacity for core workloads. Workloads can benefit from higher I/O performance across network, storage, and compute, and gain a security air gap because the offloaded services run isolated on the DPU, separate from the x86 host cluster. This makes DPUs an excellent option for workloads that require line-rate performance and for security-focused customers who want true workload isolation from other tenants on the cluster.

Pooled Memory performance

With the explosive growth of datasets and the large amount of processing involved, many customers and partners are running into memory constraints for their workloads. Infrastructure limitations make it hard to get the most out of AI/ML workloads in real time in a scalable, cost-effective way. According to IDC, by 2024 nearly 25% of the global datasphere will be real-time data.² VMware addresses this challenge with software-defined memory tiering, which pools memory tiers across VMware hosts to deliver flexible, resilient memory management and a better price-performance TCO for data-hungry, real-time workloads. The architecture is designed to ensure that workloads receive the memory performance they demand, while letting Cloud Services Providers manage their infrastructure resources more effectively for performance, availability, and resilience.
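The core idea of memory tiering, keeping frequently used pages in a fast tier and demoting cold pages to a larger, cheaper tier, can be sketched with a least-recently-used (LRU) policy. This Python model is an illustration under that assumption only: the post does not describe VMware's actual placement policy, and the `TieredMemory` class, tier capacity, and access pattern are hypothetical.

```python
from collections import Counter, OrderedDict

class TieredMemory:
    """Toy two-tier page placement: a small fast tier with LRU demotion to a slow tier."""

    def __init__(self, fast_capacity: int) -> None:
        self.fast_capacity = fast_capacity
        self.fast: OrderedDict[int, None] = OrderedDict()  # hot pages, least recently used first
        self.slow: set[int] = set()                        # cold pages demoted from the fast tier

    def access(self, page: int) -> str:
        """Touch a page and report where it was found: 'fast', 'slow', or 'new'."""
        if page in self.fast:
            self.fast.move_to_end(page)  # refresh recency
            return "fast"
        where = "slow" if page in self.slow else "new"
        self.slow.discard(page)
        self.fast[page] = None           # promote (or allocate on first touch) into the fast tier
        if len(self.fast) > self.fast_capacity:
            cold, _ = self.fast.popitem(last=False)  # demote the least recently used page
            self.slow.add(cold)
        return where

# Hypothetical workload: 9 of every 10 accesses hit 8 hot pages; the rest cycle over 20 cold pages.
memory = TieredMemory(fast_capacity=16)
pattern = [i % 8 if i % 10 else 8 + (i // 10) % 20 for i in range(1000)]
counts = Counter(memory.access(page) for page in pattern)
print(counts)
```

With this access pattern, the hot pages stay resident in the fast tier after their first touch, while the cold pages are repeatedly demoted and served from the slow tier. This is the price-performance trade the text describes: a small amount of fast memory serves most accesses.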

Summary

VMware delivers strong value for our Cloud Services Providers, with a broad set of capabilities and services that partners can deliver to their customers within more secure multi-tenant environments. Using these latest tools from VMware, partners are poised and ready to deliver value-added AI/ML solutions that meet the demands of this rapidly emerging industry. Visit our cloudsolutions site to learn more about the products and services available.

1. Johnston, Alex; Patience, Nick. 451 Research, Generative AI software market forecast, June 2023.

2. Reinsel, David; Gantz, John; Rydning, John. IDC, Data Age 2025: The Digitization of the World From Edge to Core, November 2018, refreshed May 2020.

VMware makes no guarantee that services announced in preview or beta will become available at a future date. The information in this article is for informational purposes only and may not be incorporated into any contract. This article may contain hyperlinks to non-VMware websites that are created and maintained by third parties who are solely responsible for the content on such websites.