VMware Cloud Director (VCD) with Container Service Extension (CSE) lets cloud providers offer Tanzu Kubernetes Clusters as a service, with multi-tenancy at the core. This blog post gives a technical overview of Cluster API for VMware Cloud Director and explains how to get started with it.

Cluster API for VMware Cloud Director (CAPVCD) provides the following capabilities:

- Cluster API: a standardized approach in which one Kubernetes cluster manages multiple other Kubernetes clusters. Create, scale, and manage Kubernetes clusters with a declarative API.

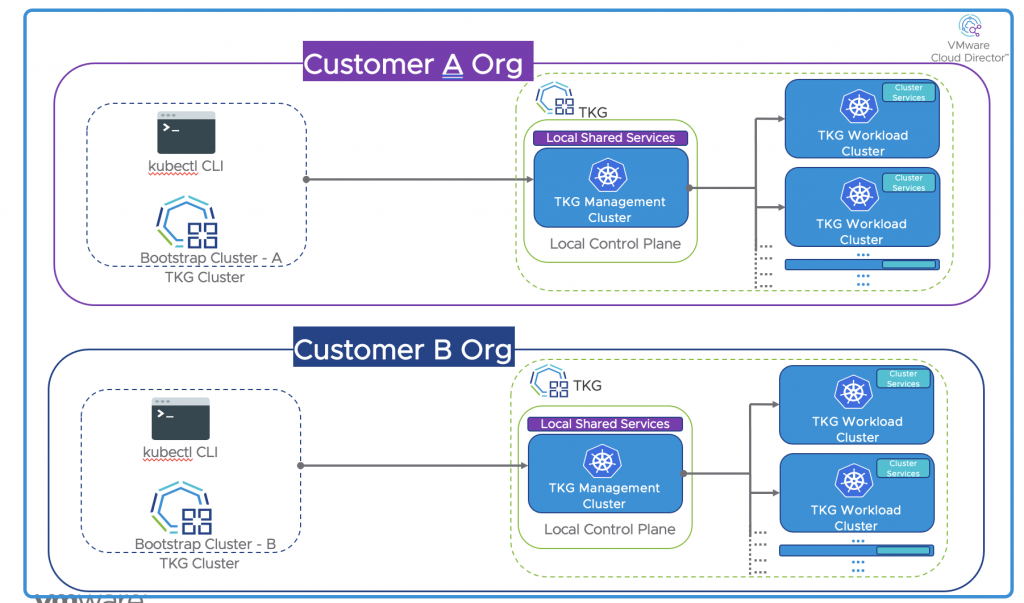

- Multi-tenancy: with Cluster API Provider for VMware Cloud Director, customers can self-manage both their management clusters and their workload clusters, with complete isolation.

- Management cluster with multiple control planes: customers can run their own management cluster with multiple control plane nodes, which makes the management cluster highly available. The control planes of the Tanzu clusters use NSX-T Advanced Load Balancer.

This blog post describes the basic concepts of Cluster API, how providers can offer CAPVCD to their customers, and how customers can self-manage Kubernetes clusters.

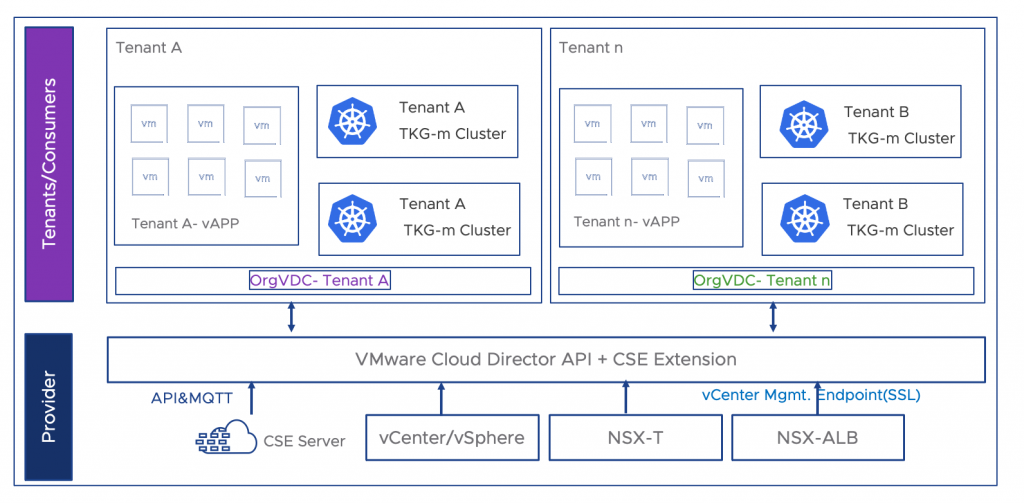

Cluster API for VMware Cloud Director is an additional package installed on a Tanzu Kubernetes Cluster. It requires the following components in VMware Cloud Director and the underlying infrastructure. Figure 1 shows the high-level infrastructure, which includes Container Service Extension, the SDDC stack, the NSX-T Unified Appliance, and NSX-T Advanced Load Balancer. Customers can use an existing CSE-based Tanzu Kubernetes Cluster to introduce Cluster API in their organizations.

Cluster API Concepts

Bootstrap Cluster

This cluster initiates the creation of a management cluster in a customer organization. Optionally, the bootstrap cluster also manages the lifecycle of the management cluster. Customers can use their CSE-created Tanzu Kubernetes Cluster as the bootstrap cluster. Alternatively, customers can use a kind cluster as the bootstrap cluster.
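If you take the kind route, creating the bootstrap cluster and saving its kubeconfig takes two kind commands. The sketch below wraps them in a small shell function; the function name, the default cluster name `capi-bootstrap`, and the output file `bootstrap.conf` are illustrative choices, not names required by CAPVCD. The machine running kind needs network access to the VCD endpoint, because the CAPVCD controller running in the bootstrap cluster calls the VCD API.

```bash
# Hypothetical helper: create a kind cluster to act as the bootstrap cluster
# and write its kubeconfig to a file for use with --kubeconfig or KUBECONFIG.
create_kind_bootstrap() {
  local name="${1:-capi-bootstrap}" out="${2:-bootstrap.conf}"
  kind create cluster --name "$name" || return 1
  # Export the new cluster's kubeconfig so later commands can target it explicitly.
  kind get kubeconfig --name "$name" > "$out"
}

# Usage:
#   create_kind_bootstrap capi-bootstrap bootstrap.conf
#   export KUBECONFIG=bootstrap.conf
```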

Management Cluster

Each customer organization creates this cluster to manage its workload clusters. The management cluster is usually created by Cluster API with multiple control plane VMs. From then on, the management cluster handles lifecycle management of all workload clusters in the customer organization. This TKG cluster runs the TKG packages and the Cluster API packages described in a later section.

Workload Cluster

Customers create workload clusters within their organization using the management cluster. Workload clusters can have one or more control plane nodes; multiple control plane nodes provide high availability.

For Providers: Offer Cluster API to customers

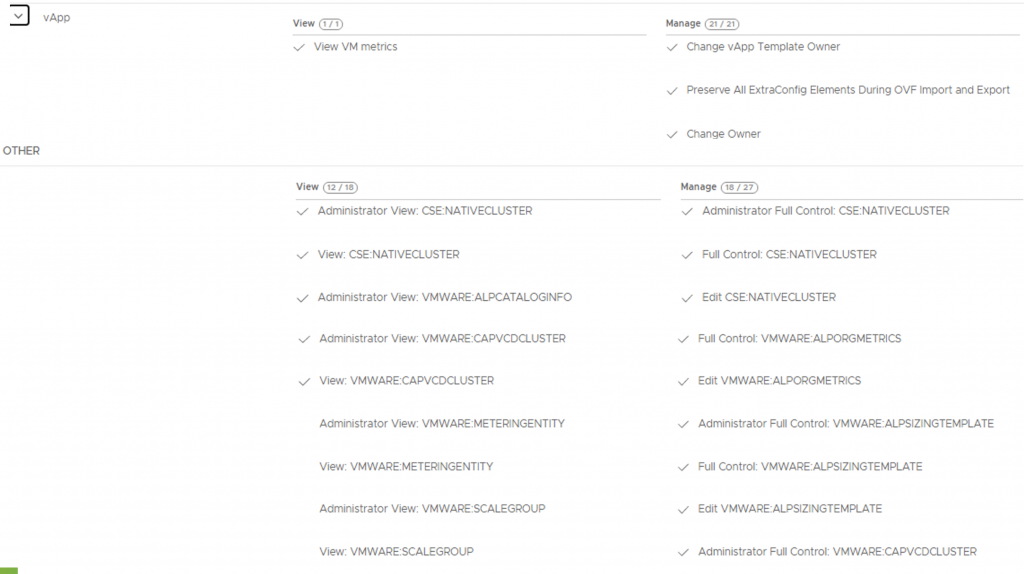

The provider admin must complete the following steps so that Cluster API operations succeed in customer organizations:

- Enable the 'Preserve ExtraConfig Elements during OVA Import and Export' right (follow this KB article to enable it).

- Create a new runtime defined entity (RDE) type as follows:

POST: https://<VMware Cloud Director’s URL>/cloudapi/1.0.0/entityTypes

- Publish the rights bundles to the customer organization.

The provider admin can add the rights listed above to the 'Default Rights Bundle', or create a new rights bundle and publish it to each customer organization during onboarding. Figure 3 shows all the rights that customer ACME needs in order to use CAPVCD. Refer to the documentation for the complete rights bundle for Tanzu capabilities. Once this step is complete, the customer can self-provision the bootstrap, management, and workload clusters as described in the next section.
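The RDE registration in the provider checklist is a plain CloudAPI call, so it can be scripted. The sketch below assumes the standard VCD provider login at `/cloudapi/1.0.0/sessions/provider`, which returns a bearer token in the `X-VMWARE-VCLOUD-ACCESS-TOKEN` response header, and API version `36.0` (VCD 10.3); override `VCD_API_VERSION` for your release. The entity type definition itself is not reproduced in this post, so the payload file name (`capvcd-entity-type.json`) and both helper names are hypothetical.

```bash
# Hypothetical helpers for registering the CAPVCD entity type through the VCD CloudAPI.
# VCD_API_VERSION is an assumption (36.0 = VCD 10.3); override it for other releases.

# Open a provider (System org) session and print the bearer token from the response headers.
vcd_provider_token() {
  local host="$1" user="$2" password="$3"
  curl -s -X POST \
    -u "${user}@System:${password}" \
    -H "Accept: application/json;version=${VCD_API_VERSION:-36.0}" \
    -D - -o /dev/null \
    "https://${host}/cloudapi/1.0.0/sessions/provider" |
    awk -F': ' 'tolower($1) == "x-vmware-vcloud-access-token" { gsub(/\r/, "", $2); print $2 }'
}

# POST an entity type definition (JSON file, sent unmodified) to /cloudapi/1.0.0/entityTypes.
register_entity_type() {
  local host="$1" token="$2" payload="$3"
  curl -s -X POST \
    -H "Authorization: Bearer ${token}" \
    -H "Accept: application/json;version=${VCD_API_VERSION:-36.0}" \
    -H "Content-Type: application/json" \
    --data-binary @"${payload}" \
    "https://${host}/cloudapi/1.0.0/entityTypes"
}

# Usage (hypothetical host, user, and file names):
#   token=$(vcd_provider_token vcd.example.com administrator 'secret')
#   register_entity_type vcd.example.com "$token" capvcd-entity-type.json
```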

For Customers: Self-manage TKG clusters with Cluster API

Once a Tanzu Kubernetes Cluster is available to act as the bootstrap cluster, the customer admin can follow these steps.

Install clusterctl, kubectl, kind, and Docker on the local machine:

```bash
# These commands are examples for CentOS; use the relevant installation guide for other operating systems (Windows, macOS, Ubuntu, etc.)

## Install Docker
sudo yum install -y yum-utils
sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
sudo yum install -y docker-ce docker-ce-cli containerd.io
sudo systemctl enable --now docker

## Install kind
curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.12.0/kind-linux-amd64
chmod +x ./kind
sudo mv ./kind /usr/local/bin/kind

## Install kubectl (v1.21.2 matches the Kubernetes version used in this post)
curl -LO https://dl.k8s.io/release/v1.21.2/bin/linux/amd64/kubectl
sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

## Install clusterctl
curl -L https://github.com/kubernetes-sigs/cluster-api/releases/download/v0.4.8/clusterctl-linux-amd64 -o clusterctl
chmod +x ./clusterctl
sudo mv ./clusterctl /usr/local/bin/clusterctl
```
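Before moving on, it is worth confirming that every tool landed on the `PATH`. A minimal sketch; `check_tools` is a hypothetical helper built only on the POSIX `command -v` builtin:

```bash
# Hypothetical helper: report which of the given commands are on PATH.
# Returns non-zero if any of them is missing.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_tools docker kind kubectl clusterctl
```

Each tool can then report its own version, for example with `kubectl version --client`, `kind version`, and `clusterctl version`.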

Convert Tanzu Kubernetes Cluster to Bootstrap Cluster

This section assumes that the customer admin has downloaded the kubeconfig of the Tanzu Kubernetes Cluster from the VCD tenant portal; a separate blog post explains how to prepare the Tanzu Kubernetes Cluster on VMware Cloud Director. In this section, we refer to that Tanzu Kubernetes Cluster as the bootstrap cluster.
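A quick check that the downloaded kubeconfig actually reaches the cluster saves debugging later. The file name `bootstrap.conf` follows the name used later in this post; the helper itself is hypothetical.

```bash
# Hypothetical helper: fail early if the bootstrap kubeconfig is missing or unusable.
verify_bootstrap_kubeconfig() {
  local kcfg="${1:-bootstrap.conf}"
  if [ ! -s "$kcfg" ]; then
    echo "kubeconfig '$kcfg' is missing or empty" >&2
    return 1
  fi
  # A successful node listing shows the API server is reachable with these credentials.
  kubectl --kubeconfig="$kcfg" get nodes
}

# Usage:
#   verify_bootstrap_kubeconfig bootstrap.conf
```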

Initialize Cluster API in the bootstrap cluster

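```bash
# Point kubectl and clusterctl at the bootstrap cluster (bootstrap.conf is the kubeconfig downloaded from the VCD tenant portal)
export KUBECONFIG=bootstrap.conf

clusterctl init --core cluster-api:v0.4.2 -b kubeadm:v0.4.2 -c kubeadm:v0.4.2

# Get the CAPVCD codebase on the local machine
git clone --branch 0.5.0 https://github.com/vmware/cluster-api-provider-cloud-director.git
```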
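Before installing CAPVCD, it can help to wait until the core Cluster API controllers report `Available`, so the next `kubectl apply` does not race their webhooks. The namespaces below are the ones that appear in the pod listings in this post; the helper name and the default timeout are assumptions.

```bash
# Hypothetical helper: block until every Deployment in the core Cluster API
# provider namespaces reports the Available condition.
wait_for_capi_providers() {
  local timeout="${1:-300s}" ns
  for ns in capi-system capi-kubeadm-bootstrap-system capi-kubeadm-control-plane-system; do
    kubectl wait --for=condition=Available deployment --all \
      --namespace "$ns" --timeout="$timeout" || return 1
  done
}

# Usage:
#   wait_for_capi_providers 300s
```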

Edit the following files to match your infrastructure:

```yaml
# vi cluster-api-provider-cloud-director/config/manager/controller_manager_config.yaml
vcd:
  host: https://<yourvcd site> # VCD endpoint with the format https://VCD_HOST. No trailing '/'
  org: "Customer Org1" # organization name associated with the user logged in to deploy clusters
  vdc: "Customer Org VDC1" # VDC name where the cluster will be deployed
  network: <Routed_Network1> # OVDC network to use for cluster deployment
  vipSubnet: <External Network Subnet, for example 192.168.110.1/24> # Virtual IP CIDR for the external network
loadbalancer:
  oneArm:
    startIP: "192.168.8.2" # First IP in the IP range used for load balancer virtual service
    endIP: "192.168.8.100" # Last IP in the IP range used for load balancer virtual service
  ports:
    http: 80
    https: 443
    tcp: 6443
# managementClusterRDEId is the RDE ID of this management cluster if it exists; if this cluster is a child of another CAPVCD based cluster,
# an associated RDE will be created in VCD of Entity Type "urn:vcloud:type:vmware:capvcdCluster:1.0.0".
# Retrieve that RDEId using the API - https://VCD_IP/cloudapi/1.0.0/entities/types/vmware/capvcdCluster/1.0.0
managementClusterRDEId: ""
clusterResourceSet:
  csi: 1.1.1 # CSI version to be used in the workload cluster
  cpi: 1.1.1 # CPI version to be used in the workload cluster
  cni: 0.11.3 # Antrea version to be used in the workload cluster
```

```yaml
# vi cluster-api-provider-cloud-director/config/manager/kustomization.yaml
resources:
- manager.yaml

generatorOptions:
  disableNameSuffixHash: true

secretGenerator:
- name: vcloud-basic-auth
  literals:
  - username=<cseadmin username> # VCD username to initialize the CAPVCD
  - password=<password> # Password

configMapGenerator:
- files:
  - controller_manager_config.yaml
  name: manager-config

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
```

```bash
# Create CAPVCD on the bootstrap cluster for your VCD infrastructure
kubectl apply -k cluster-api-provider-cloud-director/config/default

# Verify that CAPVCD is installed properly by checking the pods on the bootstrap cluster
kubectl get pods -A
```

```
NAMESPACE                           NAME                                                            READY   STATUS    RESTARTS   AGE
capi-kubeadm-bootstrap-system       capi-kubeadm-bootstrap-controller-manager-7dc44947-l2wc7        1/1     Running   1          38h
capi-kubeadm-control-plane-system   capi-kubeadm-control-plane-controller-manager-cb9d954f5-trgr5   1/1     Running   0          38h
capi-system                         capi-controller-manager-7594c7bc57-lddf7                        1/1     Running   1          38h
capvcd-system                       capvcd-controller-manager-6bd95c99d8-x7gph                      1/1     Running   0          38h
```

The Tanzu Kubernetes Cluster is now a bootstrap cluster, ready to create the management cluster.
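The apply-and-check steps above can be wrapped so that the kustomization is rendered first (catching mistakes in the two edited files before anything reaches the cluster) and the script then waits for the controller rollout instead of polling `kubectl get pods`. The Deployment name `capvcd-controller-manager` is inferred from the `capvcd-controller-manager-*` pod name in the listing above; the helper name is hypothetical.

```bash
# Hypothetical helper: render, apply, and wait for the CAPVCD controller.
install_capvcd() {
  local dir="${1:-cluster-api-provider-cloud-director/config/default}"
  # Render the kustomization first so errors in the edited files surface before anything is applied.
  kubectl kustomize "$dir" > /dev/null || return 1
  kubectl apply -k "$dir" || return 1
  # Deployment name inferred from the capvcd-controller-manager-* pod in the listing.
  kubectl -n capvcd-system rollout status deployment/capvcd-controller-manager --timeout=300s
}

# Usage:
#   install_capvcd cluster-api-provider-cloud-director/config/default
```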

Provision Management Cluster

To create the management cluster, continue working with the bootstrap cluster. Cluster API uses declarative YAML to create clusters: the capi_mgmt.yaml file below stores the definitions of the various components of the management cluster. Once the management cluster is up, developers or other users with rights to create a TKG cluster can create workload clusters using the management cluster's kubeconfig.

```yaml
# vi capi_mgmt.yaml
apiVersion: cluster.x-k8s.io/v1alpha4
kind: Cluster
metadata:
  name: mgmt-cluster
  namespace: default
spec:
  clusterNetwork:
    pods:
      cidrBlocks:
      - 100.96.0.0/11 # pod CIDR for the cluster
    serviceDomain: k8s.test
    services:
      cidrBlocks:
      - 100.64.0.0/13 # service CIDR for the cluster
  controlPlaneRef:
    apiVersion: controlplane.cluster.x-k8s.io/v1alpha4
    kind: KubeadmControlPlane
    name: capi-cluster-control-plane # name of the KubeadmControlPlane object associated with the cluster
    namespace: default # kubernetes namespace in which the KubeadmControlPlane object resides. Should be the same namespace as that of the Cluster object
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
    kind: VCDCluster
    name: mgmt-cluster # name of the VCDCluster object associated with the cluster
    namespace: default # kubernetes namespace in which the VCDCluster object resides. Should be the same namespace as that of the Cluster object
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
kind: VCDCluster
metadata:
  name: mgmt-cluster
  namespace: default
spec:
  site: https://vcd-01a.corp.local # VCD endpoint with the format https://VCD_HOST. No trailing '/'
  org: ACME # VCD organization name where the cluster should be deployed
  ovdc: ACME_VDC_T # VCD virtual datacenter name where the cluster should be deployed
  ovdcNetwork: 172.16.2.0 # VCD virtual datacenter network to be used by the cluster
  userContext:
    username: "cseadmin" # username of the VCD persona creating the cluster
    password: "cseadmin-password" # password associated with the user creating the cluster
    refreshToken: "" # refresh token of the client registered with VCD for creating clusters. username and password can be left blank if refresh token is provided
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
kind: VCDMachineTemplate
metadata:
  name: capi-cluster-control-plane
  namespace: default
spec:
  template:
    spec:
      catalog: cse # Catalog hosting the TKGm template, which will be used to deploy the control plane VMs
      template: ubuntu-2004-kube-v1.21.2+vmware.1-tkg.2-14542111852555356776 # Name of the template to be used to create (or) upgrade the control plane nodes
      computePolicy: "" # Sizing policy to be used for the control plane VMs (this must be pre-published on the chosen organization virtual datacenter). If no sizing policy should be used, use "".
---
apiVersion: controlplane.cluster.x-k8s.io/v1alpha4
kind: KubeadmControlPlane
metadata:
  name: capi-cluster-control-plane
  namespace: default
spec:
  kubeadmConfigSpec:
    clusterConfiguration:
      apiServer:
        certSANs:
        - localhost
        - 127.0.0.1
      controllerManager:
        extraArgs:
          enable-hostpath-provisioner: "true"
      dns:
        imageRepository: projects.registry.vmware.com/tkg # image repository to pull the DNS image from
        imageTag: v1.8.0_vmware.5 # DNS image tag associated with the TKGm OVA used. The values must be retrieved from the TKGm ova BOM. Refer to the github documentation for more details
      etcd:
        local:
          imageRepository: projects.registry.vmware.com/tkg # image repository to pull the etcd image from
          imageTag: v3.4.13_vmware.15 # etcd image tag associated with the TKGm OVA used. The values must be retrieved from the TKGm ova BOM. Refer to the github documentation for more details
      imageRepository: projects.registry.vmware.com/tkg # image repository to use for the rest of kubernetes images
    users:
    - name: root
      sshAuthorizedKeys:
      - "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDC9GgcsHaszFbTO1A2yiQm9fC6BPkqNBefkRqdPYMdJ61t871TmUPO4RIqpdyVLtDLZIKyEB4d8sGyRTZ6fZSiYYfs2VBoHdisEFNKwYJoZUlnMvDT+Zm3ROoqjxxMnFL/cPnlh4lL9oVmcB8dom0E0MNxSRlGqbRPp1/NHXJ+dmOnQyskbIfSDte0pQ7+xRq0b3EgLTo3jj00UR4WUEvdQyCB8D/D3852DU+Aw5PH9MN7i6CemrkB0c8VQqtNfhX25DcBE8dAP3GmI/uYu6gqYBM6qw+JSz5C4ZQn2XFb4+oTATqrwAIdvRj276rUc53sjv2aQzD8eQWfhM++BGIWFq3+sAxf1AsoEmrVoWZSIMbRFIGfeFMKrJDIGt6VkRKCK73IlhloAT9h5RCbmeWupguKyu3CheBwhHGsySFwAdaSLozz4yevFvGLAmGZkPuWgx0hSJStuIGttJHuhIlBKx00xhqF1rfT9l83HaKkf9jXHRYvUXD7+WL6UhOgmjE= root@jump" # This is an example RSA key; generate a new RSA key to access your control plane VMs
    initConfiguration:
      nodeRegistration:
        criSocket: /run/containerd/containerd.sock
        kubeletExtraArgs:
          eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
          cloud-provider: external
    joinConfiguration:
      nodeRegistration:
        criSocket: /run/containerd/containerd.sock
        kubeletExtraArgs:
          eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
          cloud-provider: external
  machineTemplate:
    infrastructureRef:
      apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
      kind: VCDMachineTemplate
      name: capi-cluster-control-plane # name of the VCDMachineTemplate object used to deploy control plane VMs. Should be the same name as that of KubeadmControlPlane object
      namespace: default # kubernetes namespace of the VCDMachineTemplate object. Should be the same namespace as that of the Cluster object
  replicas: 3 # desired number of control plane nodes for the cluster
  version: v1.21.2+vmware.1 # Kubernetes version to be used to create (or) upgrade the control plane nodes. The value needs to be retrieved from the respective TKGm ova BOM. Refer to the documentation.
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
kind: VCDMachineTemplate
metadata:
  name: capi-cluster-md0
  namespace: default
spec:
  template:
    spec:
      catalog: cse # Catalog hosting the TKGm template, which will be used to deploy the worker VMs
      template: ubuntu-2004-kube-v1.21.2+vmware.1-tkg.2-14542111852555356776 # Name of the template to be used to create (or) upgrade the worker nodes
      computePolicy: "" # Sizing policy to be used for the worker VMs (this must be pre-published on the chosen organization virtual datacenter). If no sizing policy should be used, use "".
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha4
kind: KubeadmConfigTemplate
metadata:
  name: capi-cluster-md0
  namespace: default
spec:
  template:
    spec:
      users:
      - name: root
        sshAuthorizedKeys:
        - "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDC9GgcsHaszFbTO1A2yiQm9fC6BPkqNBefkRqdPYMdJ61t871TmUPO4RIqpdyVLtDLZIKyEB4d8sGyRTZ6fZSiYYfs2VBoHdisEFNKwYJoZUlnMvDT+Zm3ROoqjxxMnFL/cPnlh4lL9oVmcB8dom0E0MNxSRlGqbRPp1/NHXJ+dmOnQyskbIfSDte0pQ7+xRq0b3EgLTo3jj00UR4WUEvdQyCB8D/D3852DU+Aw5PH9MN7i6CemrkB0c8VQqtNfhX25DcBE8dAP3GmI/uYu6gqYBM6qw+JSz5C4ZQn2XFb4+oTATqrwAIdvRj276rUc53sjv2aQzD8eQWfhM++BGIWFq3+sAxf1AsoEmrVoWZSIMbRFIGfeFMKrJDIGt6VkRKCK73IlhloAT9h5RCbmeWupguKyu3CheBwhHGsySFwAdaSLozz4yevFvGLAmGZkPuWgx0hSJStuIGttJHuhIlBKx00xhqF1rfT9l83HaKkf9jXHRYvUXD7+WL6UhOgmjE= root@jump" # This is an example RSA key; generate a new RSA key to access your worker VMs
      joinConfiguration:
        nodeRegistration:
          criSocket: /run/containerd/containerd.sock
          kubeletExtraArgs:
            eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
            cloud-provider: external
---
apiVersion: cluster.x-k8s.io/v1alpha4
kind: MachineDeployment
metadata:
  name: capi-cluster-md0
  namespace: default
spec:
  clusterName: mgmt-cluster # name of the Cluster object
  replicas: 1 # desired number of worker nodes for the cluster
  selector:
    matchLabels: null
  template:
    spec:
      bootstrap:
        configRef:
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha4
          kind: KubeadmConfigTemplate
          name: capi-cluster-md0 # name of the KubeadmConfigTemplate object
          namespace: default # kubernetes namespace of the KubeadmConfigTemplate object. Should be the same namespace as that of the Cluster object
      clusterName: mgmt-cluster # name of the Cluster object
      infrastructureRef:
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
        kind: VCDMachineTemplate
        name: capi-cluster-md0 # name of the VCDMachineTemplate object used to deploy worker nodes
        namespace: default # kubernetes namespace of the VCDMachineTemplate object used to deploy worker nodes
      version: v1.21.2+vmware.1 # Kubernetes version to be used to create (or) upgrade the worker nodes. The value needs to be retrieved from the respective TKGm ova BOM. Refer to the documentation.

# When working with capi_mgmt.yaml, make sure that every reference to an object name is updated when you rename that object.
# Example: clusterName: mgmt-cluster -> if you change this value, change every 'clusterName' key in the file as well.
```
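The note at the end of the file asks you to keep object names consistent when renaming the cluster. A small awk-based check can catch the most common slip: a `clusterName` that no longer matches the `Cluster` object's `metadata.name`. It is a sketch that assumes the file is laid out like `capi_mgmt.yaml` above (space indentation, `kind: Cluster` at the top level of its document); it does not parse YAML in general, and the helper name is hypothetical.

```bash
# Hypothetical helper: verify every clusterName: value matches the Cluster object's name.
check_cluster_name_refs() {
  local file="$1" cluster
  # metadata.name of the Cluster object: the first indented "name:" after "kind: Cluster".
  cluster=$(awk '
    /^kind: Cluster *$/ { in_cluster = 1; next }
    in_cluster && /^ +name:/ { print $2; exit }
  ' "$file")
  if [ -z "$cluster" ]; then
    echo "no Cluster object found in $file" >&2
    return 1
  fi
  # Report each clusterName: whose value differs; exit status 1 if any mismatch was found.
  awk -v want="$cluster" '
    $1 == "clusterName:" && $2 != want {
      printf "line %d: clusterName %s does not match Cluster %s\n", NR, $2, want
      bad = 1
    }
    END { exit bad }
  ' "$file"
}

# Usage:
#   check_cluster_name_refs capi_mgmt.yaml && kubectl --kubeconfig=bootstrap.conf apply -f capi_mgmt.yaml
```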

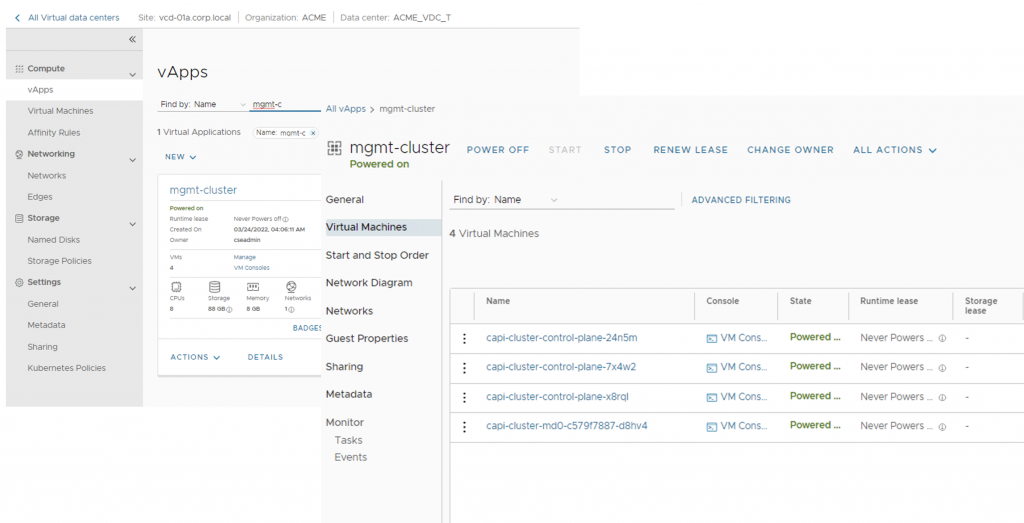

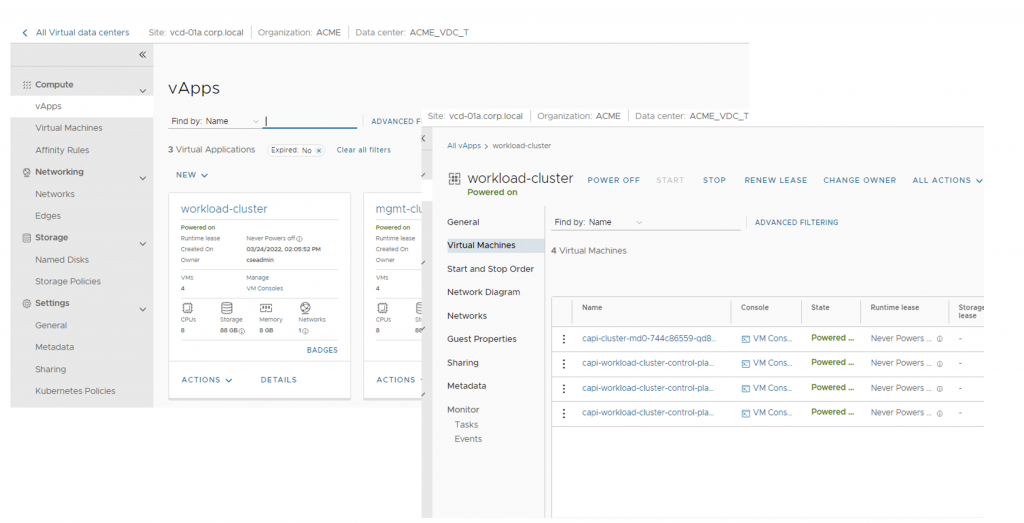

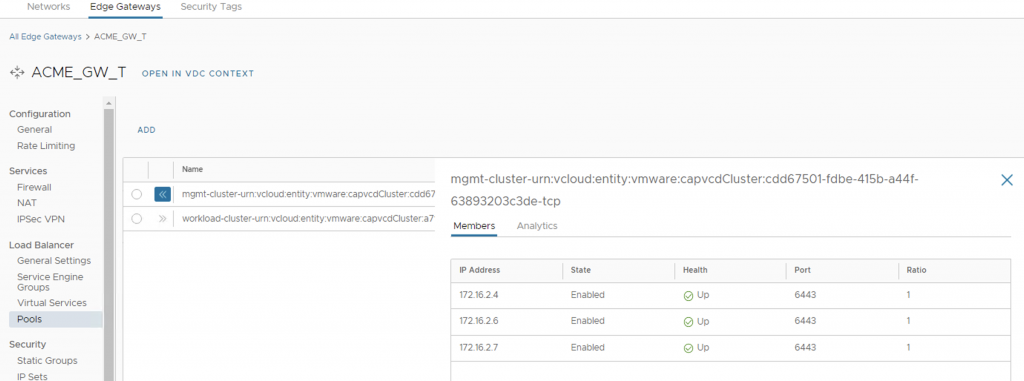

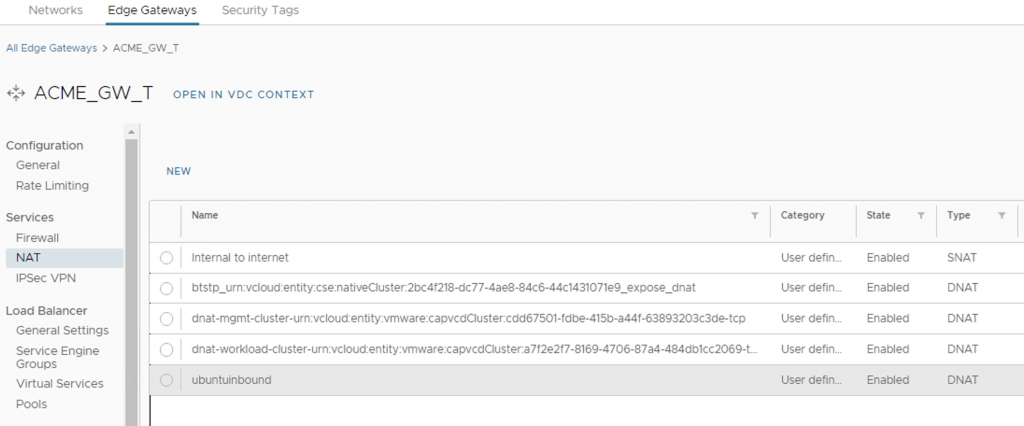

This YAML file creates a Tanzu Kubernetes cluster named mgmt-cluster running Kubernetes v1.21.2 (template ubuntu-2004-kube-v1.21.2+vmware.1-tkg.2-14542111852555356776), with 3 control plane VMs (kind: KubeadmControlPlane -> replicas: 3) and 1 worker VM (kind: MachineDeployment -> replicas: 1). Change the spec to match the desired definition of your management cluster. Once the cluster is up and running, install Cluster API on mgmt-cluster to turn it into the management cluster. The following steps show how to convert the new mgmt-cluster into a management cluster and then create a workload cluster. Figures 4, 6, and 7 show the created mgmt-cluster, its NAT rules, and its load balancer pool (backed by NSX-T Advanced Load Balancer) in VMware Cloud Director.
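Provisioning three control plane VMs takes a while. Rather than re-running `kubectl get clusters` by hand, you can poll the `Cluster` object's `status.phase` (the PHASE column in the `kubectl get clusters` output) until it reports `Provisioned`. A sketch; the helper name, retry count, and delay are assumptions. A `Provisioned` cluster may still have machines starting, so check `kubectl get machines` as well.

```bash
# Hypothetical helper: poll a Cluster API Cluster until its status.phase matches the wanted value.
wait_for_cluster_phase() {
  local name="$1" want="${2:-Provisioned}" tries="${3:-60}" delay="${4:-10}" i=1 phase
  while [ "$i" -le "$tries" ]; do
    phase=$(kubectl get cluster "$name" -o jsonpath='{.status.phase}' 2>/dev/null)
    echo "attempt $i/$tries: cluster/$name phase=${phase:-unknown}"
    if [ "$phase" = "$want" ]; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "cluster/$name did not reach phase $want" >&2
  return 1
}

# Usage (against the bootstrap cluster):
#   KUBECONFIG=bootstrap.conf wait_for_cluster_phase mgmt-cluster Provisioned 60 10
```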

```bash
# bootstrap.conf is the kubeconfig of the bootstrap cluster from the first section
kubectl --kubeconfig=bootstrap.conf apply -f capi_mgmt.yaml

# Check the cluster status
kubectl --kubeconfig=bootstrap.conf get clusters
```

```
NAME           PHASE
mgmt-cluster   Provisioned
```

```bash
# After the mgmt-cluster is deployed successfully, check the machine status
kubectl --kubeconfig=bootstrap.conf get machines
```

```
NAME                               PROVIDERID                                                                    PHASE     VERSION
mgmt-cluster-control-plane-24n5m   vmware-cloud-director://urn:vcloud:vm:a62758c0-dfc3-4d6b-a436-f0b6d283567f   Running   v1.21.2+vmware.1
mgmt-cluster-control-plane-7x4w2   vmware-cloud-director://urn:vcloud:vm:76ae58f7-3141-4598-8ac2-8cc703a950cf   Running   v1.21.2+vmware.1
mgmt-cluster-control-plane-x8rql   vmware-cloud-director://urn:vcloud:vm:a677be71-d2e1-483f-8307-4450ea60fddb   Running   v1.21.2+vmware.1
mgmt-cluster-md0-c579f7887-d8hv4   vmware-cloud-director://urn:vcloud:vm:98338cfd-aaf0-4432-9a2f-8550a6a46e24   Running   v1.21.2+vmware.1
```

```bash
# Fetch the kubeconfig of the mgmt-cluster
clusterctl get kubeconfig mgmt-cluster --kubeconfig=bootstrap.conf > mgmt-cluster.kubeconfig

# Use the mgmt-cluster kubeconfig to work with the new management cluster
export KUBECONFIG=mgmt-cluster.kubeconfig
kubectl get pods -A
```

```
NAMESPACE      NAME                                                        READY   STATUS    RESTARTS   AGE
cert-manager   cert-manager-848f547974-fmxs5                               1/1     Running   0          26h
cert-manager   cert-manager-cainjector-54f4cc6b5-9qlzz                     1/1     Running   0          26h
cert-manager   cert-manager-webhook-7c9588c76-g94nx                        1/1     Running   0          26h
kube-system    antrea-agent-hm6vd                                          2/2     Running   0          35h
kube-system    antrea-agent-pjqbl                                          2/2     Running   0          35h
kube-system    antrea-agent-sjjhn                                          2/2     Running   0          35h
kube-system    antrea-agent-z4qdg                                          2/2     Running   0          35h
kube-system    antrea-controller-64bcc67f94-8lv7c                          1/1     Running   0          35h
kube-system    coredns-8dcb5c56b-snx29                                     1/1     Running   0          35h
kube-system    coredns-8dcb5c56b-tznlp                                     1/1     Running   0          35h
kube-system    csi-vcd-controllerplugin-0                                  3/3     Running   0          35h
kube-system    csi-vcd-nodeplugin-kjw5w                                    2/2     Running   0          35h
kube-system    etcd-capi-cluster-control-plane-24n5m                       1/1     Running   0          35h
kube-system    etcd-capi-cluster-control-plane-7x4w2                       1/1     Running   0          35h
kube-system    etcd-capi-cluster-control-plane-x8rql                       1/1     Running   0          35h
kube-system    kube-apiserver-capi-cluster-control-plane-24n5m             1/1     Running   0          35h
kube-system    kube-apiserver-capi-cluster-control-plane-7x4w2             1/1     Running   0          35h
kube-system    kube-apiserver-capi-cluster-control-plane-x8rql             1/1     Running   0          35h
kube-system    kube-controller-manager-capi-cluster-control-plane-24n5m    1/1     Running   1          35h
kube-system    kube-controller-manager-capi-cluster-control-plane-7x4w2    1/1     Running   1          35h
kube-system    kube-controller-manager-capi-cluster-control-plane-x8rql    1/1     Running   0          35h
kube-system    kube-proxy-jnjl2                                            1/1     Running   0          35h
kube-system    kube-proxy-lw6m7                                            1/1     Running   0          35h
kube-system    kube-proxy-m7gls                                            1/1     Running   0          35h
kube-system    kube-proxy-nsv9m                                            1/1     Running   0          35h
kube-system    kube-scheduler-capi-cluster-control-plane-24n5m             1/1     Running   1          35h
kube-system    kube-scheduler-capi-cluster-control-plane-7x4w2             1/1     Running   1          35h
kube-system    kube-scheduler-capi-cluster-control-plane-x8rql             1/1     Running   0          35h
kube-system    vmware-cloud-director-ccm-75bd684688-pcktg                  1/1     Running   1          35h
```

```bash
# Convert this cluster into a management cluster by installing Cluster API and CAPVCD
clusterctl init --core cluster-api:v0.4.2 -b kubeadm:v0.4.2 -c kubeadm:v0.4.2
kubectl apply -k cluster-api-provider-cloud-director/config/default
kubectl get pods -A
```

```
NAMESPACE                           NAME                                                            READY   STATUS    RESTARTS   AGE
capi-kubeadm-bootstrap-system       capi-kubeadm-bootstrap-controller-manager-7dc44947-zdhvd        1/1     Running   1          26h
capi-kubeadm-control-plane-system   capi-kubeadm-control-plane-controller-manager-cb9d954f5-7snk2   1/1     Running   0          26h
capi-system                         capi-controller-manager-7594c7bc57-mvpvx                        1/1     Running   0          26h
capvcd-system                       capvcd-controller-manager-6bd95c99d8-8fzvf                      1/1     Running   0          26h
cert-manager                        cert-manager-848f547974-fmxs5                                   1/1     Running   0          26h
cert-manager                        cert-manager-cainjector-54f4cc6b5-9qlzz                         1/1     Running   0          26h
cert-manager                        cert-manager-webhook-7c9588c76-g94nx                            1/1     Running   0          26h
kube-system                         antrea-agent-hm6vd                                              2/2     Running   0          35h
kube-system                         antrea-agent-pjqbl                                              2/2     Running   0          35h
kube-system                         antrea-agent-sjjhn                                              2/2     Running   0          35h
kube-system                         antrea-agent-z4qdg                                              2/2     Running   0          35h
kube-system                         antrea-controller-64bcc67f94-8lv7c                              1/1     Running   0          35h
kube-system                         coredns-8dcb5c56b-snx29                                         1/1     Running   0          35h
kube-system                         coredns-8dcb5c56b-tznlp                                         1/1     Running   0          35h
kube-system                         csi-vcd-controllerplugin-0                                      3/3     Running   0          35h
kube-system                         csi-vcd-nodeplugin-kjw5w                                        2/2     Running   0          35h
kube-system                         etcd-capi-cluster-control-plane-24n5m                           1/1     Running   0          35h
kube-system                         etcd-capi-cluster-control-plane-7x4w2                           1/1     Running   0          35h
kube-system                         etcd-capi-cluster-control-plane-x8rql                           1/1     Running   0          35h
kube-system                         kube-apiserver-capi-cluster-control-plane-24n5m                 1/1     Running   0          35h
kube-system                         kube-apiserver-capi-cluster-control-plane-7x4w2                 1/1     Running   0          35h
kube-system                         kube-apiserver-capi-cluster-control-plane-x8rql                 1/1     Running   0          35h
kube-system                         kube-controller-manager-capi-cluster-control-plane-24n5m        1/1     Running   1          35h
kube-system                         kube-controller-manager-capi-cluster-control-plane-7x4w2        1/1     Running   1          35h
kube-system                         kube-controller-manager-capi-cluster-control-plane-x8rql        1/1     Running   0          35h
kube-system                         kube-proxy-jnjl2                                                1/1     Running   0          35h
kube-system                         kube-proxy-lw6m7                                                1/1     Running   0          35h
kube-system                         kube-proxy-m7gls                                                1/1     Running   0          35h
kube-system                         kube-proxy-nsv9m                                                1/1     Running   0          35h
kube-system                         kube-scheduler-capi-cluster-control-plane-24n5m                 1/1     Running   1          35h
kube-system                         kube-scheduler-capi-cluster-control-plane-7x4w2                 1/1     Running   1          35h
kube-system                         kube-scheduler-capi-cluster-control-plane-x8rql                 1/1     Running   0          35h
kube-system                         vmware-cloud-director-ccm-75bd684688-pcktg                      1/1     Running   1          35h
```
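Kubeconfig files grant admin access to the cluster, so it is worth writing them with owner-only permissions and not leaving an empty or partial file behind if the fetch fails. A sketch around `clusterctl get kubeconfig`; the helper name and its optional third argument (the parent cluster's kubeconfig, passed to clusterctl as `--kubeconfig`) are assumptions for illustration.

```bash
# Hypothetical helper: fetch a workload/management cluster kubeconfig via clusterctl,
# write it atomically, and restrict it to the current user.
save_kubeconfig() {
  local name="$1" out="$2" parent="${3:-}" tmp
  tmp=$(mktemp "${out}.XXXXXX") || return 1
  if [ -n "$parent" ]; then
    set -- "$name" --kubeconfig "$parent"
  else
    set -- "$name"
  fi
  # Replace the target file only if clusterctl succeeded and produced output.
  if clusterctl get kubeconfig "$@" > "$tmp" && [ -s "$tmp" ]; then
    chmod 600 "$tmp" && mv "$tmp" "$out"
  else
    rm -f "$tmp"
    echo "could not fetch kubeconfig for cluster $name" >&2
    return 1
  fi
}

# Usage:
#   save_kubeconfig mgmt-cluster mgmt-cluster.kubeconfig bootstrap.conf
#   save_kubeconfig workload-cluster workload-cluster.kubeconfig mgmt-cluster.kubeconfig
```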

Provision workload Cluster

Once the management cluster is ready, use it to create and manage workload clusters with multiple control planes, as follows:

```yaml
# vi capi_workload.yaml
apiVersion: cluster.x-k8s.io/v1alpha4
kind: Cluster
metadata:
  name: workload-cluster
  namespace: default
spec:
  clusterNetwork:
    pods:
      cidrBlocks:
      - 100.96.0.0/11 # pod CIDR for the cluster
    serviceDomain: k8s.test
    services:
      cidrBlocks:
      - 100.64.0.0/13 # service CIDR for the cluster
  controlPlaneRef:
    apiVersion: controlplane.cluster.x-k8s.io/v1alpha4
    kind: KubeadmControlPlane
    name: capi-workload-cluster-control-plane # name of the KubeadmControlPlane object associated with the cluster
    namespace: default # kubernetes namespace in which the KubeadmControlPlane object resides. Should be the same namespace as that of the Cluster object
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
    kind: VCDCluster
    name: workload-cluster # name of the VCDCluster object associated with the cluster
    namespace: default # kubernetes namespace in which the VCDCluster object resides. Should be the same namespace as that of the Cluster object
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
kind: VCDCluster
metadata:
  name: workload-cluster
  namespace: default
spec:
  site: https://vcd-01a.corp.local # VCD endpoint with the format https://VCD_HOST. No trailing '/'
  org: ACME # VCD organization name where the cluster should be deployed
  ovdc: ACME_VDC_T # VCD virtual datacenter name where the cluster should be deployed
  ovdcNetwork: 172.16.2.0 # VCD virtual datacenter network to be used by the cluster
  userContext:
    username: "cseadmin" # username of the VCD persona creating the cluster
    password: "VMware1!" # password associated with the user creating the cluster
    refreshToken: "" # refresh token of the client registered with VCD for creating clusters. username and password can be left blank if refresh token is provided
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
kind: VCDMachineTemplate
metadata:
  name: capi-workload-cluster-control-plane
  namespace: default
spec:
  template:
    spec:
      catalog: cse # Catalog hosting the TKGm template, which will be used to deploy the control plane VMs
      template: ubuntu-2004-kube-v1.21.2+vmware.1-tkg.2-14542111852555356776 # Name of the template to be used to create (or) upgrade the control plane nodes
      computePolicy: "" # Sizing policy to be used for the control plane VMs (this must be pre-published on the chosen organization virtual datacenter). If no sizing policy should be used, use "".
---
apiVersion: controlplane.cluster.x-k8s.io/v1alpha4
kind: KubeadmControlPlane
metadata:
  name: capi-workload-cluster-control-plane
  namespace: default
spec:
  kubeadmConfigSpec:
    clusterConfiguration:
      apiServer:
        certSANs:
        - localhost
        - 127.0.0.1
      controllerManager:
        extraArgs:
          enable-hostpath-provisioner: "true"
      dns:
        imageRepository: projects.registry.vmware.com/tkg # image repository to pull the DNS image from
        imageTag: v1.8.0_vmware.5 # DNS image tag associated with the TKGm OVA used. The values must be retrieved from the TKGm ova BOM. Refer to the github documentation for more details
      etcd:
        local:
          imageRepository: projects.registry.vmware.com/tkg # image repository to pull the etcd image from
          imageTag: v3.4.13_vmware.15 # etcd image tag associated with the TKGm OVA used. The values must be retrieved from the TKGm ova BOM. Refer to the github documentation for more details
      imageRepository: projects.registry.vmware.com/tkg # image repository to use for the rest of kubernetes images
    users:
    - name: root
      sshAuthorizedKeys:
      - "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDC9GgcsHaszFbTO1A2yiQm9fC6BPkqNBefkRqdPYMdJ61t871TmUPO4RIqpdyVLtDLZIKyEB4d8sGyRTZ6fZSiYYfs2VBoHdisEFNKwYJoZUlnMvDT+Zm3ROoqjxxMnFL/cPnlh4lL9oVmcB8dom0E0MNxSRlGqbRPp1/NHXJ+dmOnQyskbIfSDte0pQ7+xRq0b3EgLTo3jj00UR4WUEvdQyCB8D/D3852DU+Aw5PH9MN7i6CemrkB0c8VQqtNfhX25DcBE8dAP3GmI/uYu6gqYBM6qw+JSz5C4ZQn2XFb4+oTATqrwAIdvRj276rUc53sjv2aQzD8eQWfhM++BGIWFq3+sAxf1AsoEmrVoWZSIMbRFIGfeFMKrJDIGt6VkRKCK73IlhloAT9h5RCbmeWupguKyu3CheBwhHGsySFwAdaSLozz4yevFvGLAmGZkPuWgx0hSJStuIGttJHuhIlBKx00xhqF1rfT9l83HaKkf9jXHRYvUXD7+WL6UhOgmjE= root@jump" # This is an example RSA key; generate a new RSA key to access your control plane VMs
    initConfiguration:
      nodeRegistration:
        criSocket: /run/containerd/containerd.sock
        kubeletExtraArgs:
          eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
          cloud-provider: external
    joinConfiguration:
      nodeRegistration:
        criSocket: /run/containerd/containerd.sock
        kubeletExtraArgs:
          eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
          cloud-provider: external
  machineTemplate:
    infrastructureRef:
      apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
      kind: VCDMachineTemplate
      name: capi-workload-cluster-control-plane # name of the VCDMachineTemplate object used to deploy control plane VMs. Should be the same name as that of KubeadmControlPlane object
      namespace: default # kubernetes namespace of the VCDMachineTemplate object. Should be the same namespace as that of the Cluster object
  replicas: 3 # desired number of control plane nodes for the cluster
  version: v1.21.2+vmware.1 # Kubernetes version to be used to create (or) upgrade the control plane nodes. The value needs to be retrieved from the respective TKGm ova BOM. Refer to the documentation.
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
kind: VCDMachineTemplate
metadata:
  name: capi-cluster-md0
  namespace: default
spec:
  template:
    spec:
      catalog: cse # Catalog hosting the TKGm template, which will be used to deploy the worker VMs
      template: ubuntu-2004-kube-v1.21.2+vmware.1-tkg.2-14542111852555356776 # Name of the template to be used to create (or) upgrade the worker nodes
      computePolicy: "" # Sizing policy to be used for the worker VMs (this must be pre-published on the chosen organization virtual datacenter). If no sizing policy should be used, use "".
---
apiVersion: bootstrap.cluster.x-k8s.io/v1alpha4
kind: KubeadmConfigTemplate
metadata:
  name: capi-cluster-md0
  namespace: default
spec:
  template:
    spec:
      users:
      - name: root
        sshAuthorizedKeys:
        - "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDC9GgcsHaszFbTO1A2yiQm9fC6BPkqNBefkRqdPYMdJ61t871TmUPO4RIqpdyVLtDLZIKyEB4d8sGyRTZ6fZSiYYfs2VBoHdisEFNKwYJoZUlnMvDT+Zm3ROoqjxxMnFL/cPnlh4lL9oVmcB8dom0E0MNxSRlGqbRPp1/NHXJ+dmOnQyskbIfSDte0pQ7+xRq0b3EgLTo3jj00UR4WUEvdQyCB8D/D3852DU+Aw5PH9MN7i6CemrkB0c8VQqtNfhX25DcBE8dAP3GmI/uYu6gqYBM6qw+JSz5C4ZQn2XFb4+oTATqrwAIdvRj276rUc53sjv2aQzD8eQWfhM++BGIWFq3+sAxf1AsoEmrVoWZSIMbRFIGfeFMKrJDIGt6VkRKCK73IlhloAT9h5RCbmeWupguKyu3CheBwhHGsySFwAdaSLozz4yevFvGLAmGZkPuWgx0hSJStuIGttJHuhIlBKx00xhqF1rfT9l83HaKkf9jXHRYvUXD7+WL6UhOgmjE= root@jump" # This is an example RSA key; generate a new RSA key to access your worker VMs
      joinConfiguration:
        nodeRegistration:
          criSocket: /run/containerd/containerd.sock
          kubeletExtraArgs:
            eviction-hard: nodefs.available<0%,nodefs.inodesFree<0%,imagefs.available<0%
            cloud-provider: external
---
apiVersion: cluster.x-k8s.io/v1alpha4
kind: MachineDeployment
metadata:
  name: capi-cluster-md0
  namespace: default
spec:
  clusterName: workload-cluster # name of the Cluster object
  replicas: 1 # desired number of worker nodes for the cluster
  selector:
    matchLabels: null
  template:
    spec:
      bootstrap:
        configRef:
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha4
          kind: KubeadmConfigTemplate
          name: capi-cluster-md0 # name of the KubeadmConfigTemplate object
          namespace: default # kubernetes namespace of the KubeadmConfigTemplate object. Should be the same namespace as that of the Cluster object
      clusterName: workload-cluster # name of the Cluster object
      infrastructureRef:
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha4
        kind: VCDMachineTemplate
        name: capi-cluster-md0 # name of the VCDMachineTemplate object used to deploy worker nodes
        namespace: default # kubernetes namespace of the VCDMachineTemplate object used to deploy worker nodes
      version: v1.21.2+vmware.1 # Kubernetes version to be used to create (or) upgrade the worker nodes. The value needs to be retrieved from the respective TKGm ova BOM. Refer to the documentation.
```

```bash
# Create a workload cluster from capi_workload.yaml, using the management cluster's kubeconfig
kubectl --kubeconfig=mgmt-cluster.kubeconfig apply -f capi_workload.yaml

# Fetch the kubeconfig of the successfully created workload cluster
clusterctl get kubeconfig workload-cluster --kubeconfig=mgmt-cluster.kubeconfig > workload-cluster.kubeconfig
```
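`capi_workload.yaml` is essentially `capi_mgmt.yaml` with the cluster-specific object names changed (`mgmt-cluster` to `workload-cluster`, `capi-cluster-control-plane` to `capi-workload-cluster-control-plane`). If you maintain several clusters, a sed-based sketch like the one below can derive one file from the other. It is a shortcut under stated assumptions: credentials, VCD site, network, and replica counts are copied unchanged and should be reviewed before applying. The helper name is hypothetical.

```bash
# Hypothetical helper: derive a workload-cluster manifest from capi_mgmt.yaml by renaming
# the cluster-specific objects. Worker objects (capi-cluster-md0) keep their names.
derive_workload_manifest() {
  local src="$1" dst="$2" new="${3:-workload-cluster}"
  # Cluster names must be DNS labels: lowercase letters, digits, and inner hyphens only.
  case "$new" in
    ''|*[!a-z0-9-]*|-*|*-)
      echo "invalid cluster name: $new" >&2
      return 1 ;;
  esac
  sed -e "s/mgmt-cluster/${new}/g" \
      -e "s/capi-cluster-control-plane/capi-${new}-control-plane/g" \
      "$src" > "$dst"
}

# Usage:
#   derive_workload_manifest capi_mgmt.yaml capi_workload.yaml workload-cluster
```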

The following screenshots show the vApp created for the workload cluster, together with its NAT rules and load balancer pool (backed by NSX-T Advanced Load Balancer).

Lifecycle management of the Management cluster

Customers can scale clusters by changing their YAML definitions (in this example, capi_mgmt.yaml and capi_workload.yaml) to the desired state and applying them again. Changes to the management cluster are applied from the bootstrap cluster, which is the parent of the management cluster; changes to a workload cluster are applied the same way from the management cluster.

```bash
# Change the number of worker VMs in the management cluster from 1 to 2.
# In capi_mgmt.yaml, change
#   replicas: 1 # desired number of worker nodes for the cluster
# to
#   replicas: 2 # desired number of worker nodes for the cluster

# Apply the desired configuration from the bootstrap cluster
kubectl --kubeconfig=bootstrap.conf apply -f capi_mgmt.yaml
```
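Editing the replica count by hand is error-prone because the file has two `replicas:` fields (control plane and workers). The sketch below only touches the line carrying the `# desired number of worker nodes` comment from `capi_mgmt.yaml`; it relies on that comment being present and is not a general YAML editor. The helper name is hypothetical.

```bash
# Hypothetical helper: set the MachineDeployment (worker) replica count in a CAPI manifest,
# leaving the control plane replicas untouched.
set_worker_replicas() {
  local file="$1" count="$2" tmp rc
  case "$count" in
    ''|*[!0-9]*) echo "replica count must be a non-negative integer: $count" >&2; return 1 ;;
  esac
  if ! grep -q 'replicas: [0-9]* # desired number of worker nodes' "$file"; then
    echo "no worker replicas line found in $file" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # Only the worker line carries this comment, so the control plane count is not matched.
  sed "s/replicas: [0-9]* # desired number of worker nodes/replicas: ${count} # desired number of worker nodes/" \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage:
#   set_worker_replicas capi_mgmt.yaml 2 && kubectl --kubeconfig=bootstrap.conf apply -f capi_mgmt.yaml
```

MachineDeployment also supports `kubectl scale machinedeployment capi-cluster-md0 --replicas=2`, but then `capi_mgmt.yaml` no longer matches the live object, which defeats the purpose of keeping the YAML as the declarative source of truth.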

To summarize, this blog post gave a high-level overview of Cluster API on VMware Cloud Director, covering provider and customer onboarding, management cluster setup, and launching workload clusters in the customer organization.

References: