In Part 1 of this series, we explored some of the prerequisites for NVMe Memory Tiering, such as workload assessment, memory activeness percentage, VM profile limitations, software prerequisites, and NVMe device compatibility.

In addition, we highlighted the importance of adopting VMware Cloud Foundation (VCF) 9, which can significantly reduce memory cost, improve CPU utilization, and increase VM consolidation. But before we fully deploy this solution, it is important to design with security, redundancy, and scalability in mind, and that is what this part is all about.

Security

Memory security is not a particularly popular topic among admins, largely because memory is volatile. Attackers can leverage memory to avoid writing malicious payloads to non-volatile media and thereby evade detection, but I digress; that is more of a forensics topic (which I enjoy). Once power is removed, the information in DRAM (volatile) disappears within minutes. With NVMe Memory Tiering, however, we are moving pages from volatile media (DRAM) to non-volatile media (NVMe), so that assumption no longer holds for tiered-out pages.

To address security concerns about memory pages being stored on NVMe devices, we have come up with a couple of solutions that customers can easily implement after the initial configuration.

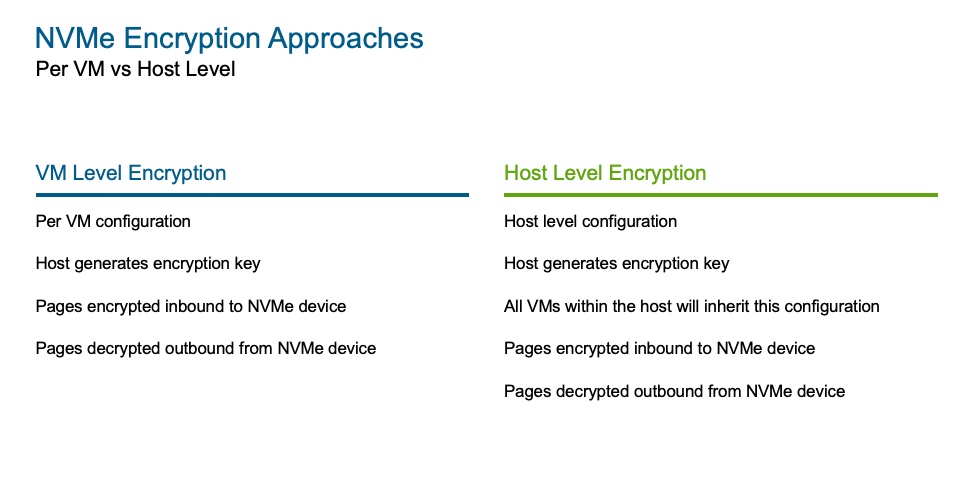

With this first release of the Memory Tiering feature, encryption is already part of the package and ready to use out of the box. You can encrypt at the VM level (per VM) or at the host level (all VMs on the host). This option is disabled by default, but it can easily be enabled in the vCenter UI.

NVMe Memory Tiering encryption does not require a Key Management System (KMS) or Native Key Provider (NKP). Instead, each host randomly generates the key at the kernel level, and the data is encrypted with AES-XTS. This removes the dependency on external key providers, since the tiered-out data is relevant only for the lifetime of the VM. The random 256-bit key is generated when a VM is powered on; data is encrypted as it is demoted from DRAM to NVMe and decrypted when it is promoted back to DRAM to be read.

During a VM migration (vMotion), the memory pages are first decrypted, then sent over the encrypted vMotion channel to the destination, where the destination host generates a new key for subsequent tier-outs. This process is the same for both “VM Level Encryption” and “Host Level Encryption”; the only difference is where the configuration is applied.
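The key lifecycle described above can be sketched in Python. This is a minimal model under stated assumptions, not VMware's implementation: `TieredVM`, `demote`, `promote`, and `vmotion` are hypothetical names, and because the Python standard library has no AES, a SHA-256-based keystream stands in for AES-XTS purely to show when pages are encrypted and decrypted and when keys are created and discarded. It is not a secure cipher and should never be used for real data.

```python
import hashlib
import secrets
from typing import Dict, Optional


def _toy_keystream(key: bytes, tweak: bytes, length: int) -> bytes:
    # Stand-in for AES-XTS: SHA-256(key || tweak || counter) blocks.
    # Illustration only; this is NOT AES-XTS and NOT a secure cipher.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + tweak + counter.to_bytes(8, "little")).digest()
        counter += 1
    return bytes(out[:length])


def _xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


class TieredVM:
    """Hypothetical model of one VM's pages on one host (not a VMware API)."""

    def __init__(self, name: str, dram: Optional[Dict[int, bytes]] = None):
        self.name = name
        # Fresh random 256-bit key, created when the VM starts on this host.
        # It lives only in host memory, so no KMS or NKP is involved.
        self.key: Optional[bytes] = secrets.token_bytes(32)
        self.dram: Dict[int, bytes] = dict(dram or {})  # page -> plaintext
        self.nvme: Dict[int, bytes] = {}                # page -> ciphertext

    def _cipher(self, page_no: int, data: bytes) -> bytes:
        # XOR with a keystream is symmetric, so one helper encrypts and decrypts.
        tweak = page_no.to_bytes(16, "little")  # per-page tweak, as in XTS
        return _xor(data, _toy_keystream(self.key, tweak, len(data)))

    def demote(self, page_no: int) -> None:
        # Encrypt a cold page as it leaves DRAM for the NVMe tier.
        self.nvme[page_no] = self._cipher(page_no, self.dram.pop(page_no))

    def promote(self, page_no: int) -> bytes:
        # Decrypt the page as it is brought back into DRAM.
        self.dram[page_no] = self._cipher(page_no, self.nvme.pop(page_no))
        return self.dram[page_no]

    def vmotion(self) -> "TieredVM":
        # The source decrypts all tiered pages before sending them; the
        # encrypted vMotion channel itself is not modeled here.
        for page_no in list(self.nvme):
            self.promote(page_no)
        dest = TieredVM(self.name, self.dram)  # destination makes its own key
        self.dram.clear()
        self.key = None                        # source key is discarded
        return dest


vm = TieredVM("vm01", {7: b"cold page contents"})
vm.demote(7)                   # page 7 now sits encrypted on the NVMe tier
source_key = vm.key
dest = vm.vmotion()
print(dest.dram[7])            # b'cold page contents'
print(dest.key != source_key)  # True
```

In this model no key is ever written to NVMe or to an external key provider: the source key is dropped after migration, and the destination starts with a fresh one for its own tier-outs.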

Redundancy

The purpose of redundancy is to enhance reliability and reduce downtime (and, of course, to provide peace of mind). There are several memory redundancy techniques, some more common than others, but Error-Correcting Code (ECC) memory and spare memory modules are among the most popular. With NVMe Memory Tiering, however, we need to consider both DRAM and NVMe. I won’t go into DRAM-specific redundancy techniques; instead, I’ll focus on NVMe redundancy in the context of memory.

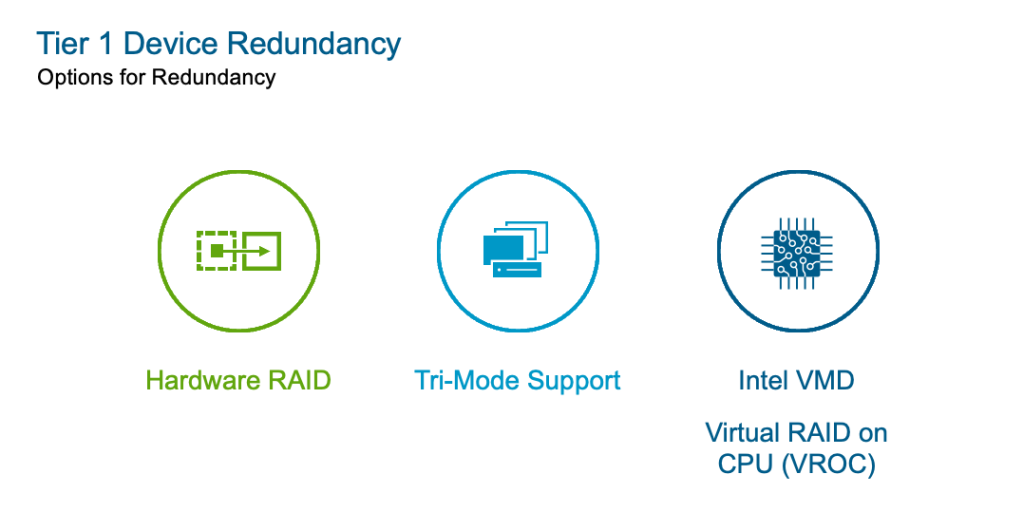

In VVF/VCF 9.0, NVMe Memory Tiering supports hardware-based RAID configurations, including tri-mode controllers and VROC (Virtual RAID on CPU), to provide redundancy for cold/inactive memory pages. We don’t lock you into a particular RAID level: RAID-1 is a good, supported option for NVMe redundancy, and other levels such as RAID-5 and RAID-10 are supported too, but they require more NVMe devices and will increase your cost. Speaking of cost, we also need to account for RAID controllers if we want to use RAID for redundancy. Providing redundancy for cold pages is a design decision that must be weighed against cost and operational overhead. What matters most? Redundancy? Cost? Operations? It is also important to consider how a RAID controller coexists with vSAN: vSAN ESA does not support RAID controllers, while vSAN OSA does, but the vSAN devices and the Memory Tiering devices should be kept separate.
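To put the "more NVMe devices" point in numbers, here is standard RAID capacity arithmetic as a short Python sketch. The 1600 GB drive size is a hypothetical example, `usable_gb` is an illustrative helper, and real controllers may reserve additional space, so treat the results as approximate.

```python
# Minimum drive counts for the RAID levels mentioned above.
MIN_DRIVES = {"RAID-1": 2, "RAID-5": 3, "RAID-10": 4}


def usable_gb(level: str, drives: int, drive_gb: int) -> int:
    """Usable capacity of an array of identical drives at a given RAID level."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported level: {level}")
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"{level} needs at least {MIN_DRIVES[level]} drives")
    if level == "RAID-1":
        return drive_gb                 # every drive holds a full mirror copy
    if level == "RAID-5":
        return (drives - 1) * drive_gb  # one drive's worth of parity
    if drives % 2:
        raise ValueError("RAID-10 needs an even number of drives")
    return (drives // 2) * drive_gb     # striped mirror pairs


# Hypothetical 1600 GB NVMe drives at each level's minimum drive count.
for level, n in MIN_DRIVES.items():
    print(f"{level}: {n} drives -> {usable_gb(level, n, 1600)} GB usable")
```

At the minimum drive counts, RAID-1 protects one drive's worth of capacity with two drives, while RAID-5 and RAID-10 need three and four drives respectively, on top of the RAID controller itself.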

RAID PROs:

- Redundancy for NVMe as a memory device

- Enhanced reliability

- Potentially reduced downtime

RAID CONs (introduced by the RAID controller):

- Additional cost

- Operational overhead (configuration, updating firmware & drivers)

- Additional complexity

- New single point of failure

- Possible compatibility issues with vSAN if all drives share the same backplane

As you can see, hardware-based redundancy has both pros and cons. Keep an eye on this space, as additional supported methods may be added in the near future.

So, let’s say we decide not to pursue the RAID controller route. What happens if a single dedicated drive for NVMe Memory Tiering fails? In previous blog posts, we discussed how only cold VM pages are moved to NVMe, and only when needed. This means no host memory pages are ever on NVMe. It also means there may or may not be many cold pages on the NVMe device, because we move pages out to NVMe only when DRAM is under pressure; we don’t want to move pages (even cold ones) unless we really have to (why waste compute resources?).

So, if some cold pages were moved out to NVMe and the NVMe device fails, the VMs whose pages were on NVMe may experience an HA event. I say MAY because that only happens when (and IF) a VM requests those cold pages back from NVMe, which in this case are no longer accessible. If the VM never requests those pages, it keeps running.
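The "only under pressure" behavior can be illustrated with a deliberately simplified Python sketch. It assumes DRAM capacity is measured in pages and that each VM page carries a last-access timestamp; `Page` and `pick_pages_to_demote` are hypothetical names, and the real ESX tiering policy is more sophisticated than this coldest-first rule. Only VM pages are candidates, mirroring the point that host pages never land on NVMe.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Page:
    vm: str
    page_no: int
    last_access: int  # higher value = touched more recently


def pick_pages_to_demote(pages: List[Page], dram_capacity: int) -> List[Page]:
    """Return the VM pages to move to NVMe under a simple coldest-first rule."""
    overflow = len(pages) - dram_capacity
    if overflow <= 0:
        # No DRAM pressure: even cold pages stay in DRAM, so nothing is on NVMe.
        return []
    coldest_first = sorted(pages, key=lambda p: p.last_access)
    return coldest_first[:overflow]


pages = [Page("vm-a", 0, 5), Page("vm-a", 1, 1), Page("vm-b", 0, 9), Page("vm-b", 1, 3)]
print(len(pick_pages_to_demote(pages, dram_capacity=4)))  # 0
print([(p.vm, p.page_no) for p in pick_pages_to_demote(pages, dram_capacity=2)])
# [('vm-a', 1), ('vm-b', 1)]
```

The first call shows why an NVMe failure can be a non-event: with no DRAM pressure, no pages were ever demoted.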

The failure impact therefore depends on the actual activity at the moment the NVMe device fails. If no cold pages are on NVMe, nothing happens. If some pages are on NVMe, a handful of VMs may eventually experience an HA event and fail over to another host. If all the cold pages were on NVMe, all the VMs could eventually experience an HA event as their pages are requested from NVMe, but even then this is not necessarily an all-down failure: some VMs may fail right away, others may fail later, and some may not fail at all. It all depends. The key point is that the ESX host keeps running, and VM behavior will vary based on activity.
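The failure behavior above can also be modeled as a small Python sketch. It assumes we know which pages of each VM were on the failed NVMe device and the order in which VMs request pages afterward; `ha_candidates` is a hypothetical helper, and it does not model the HA restart itself or the host, which keeps running throughout.

```python
from typing import Dict, Iterable, List, Set, Tuple


def ha_candidates(lost_pages: Dict[str, Set[int]],
                  accesses: Iterable[Tuple[str, int]]) -> List[str]:
    """Return VMs in the order they first request a page that was on the
    failed NVMe device. VMs that never request a lost page are omitted,
    because they keep running."""
    hit: List[str] = []
    for vm, page_no in accesses:
        if vm not in hit and page_no in lost_pages.get(vm, set()):
            hit.append(vm)
    return hit


lost = {"vm-a": {3, 4}, "vm-b": {10}, "vm-c": {7}}
after_failure = [("vm-c", 1), ("vm-b", 2), ("vm-a", 4), ("vm-b", 10), ("vm-a", 3)]
print(ha_candidates(lost, after_failure))  # ['vm-a', 'vm-b']
```

Here vm-a and vm-b request lost pages and become HA candidates at different times, while vm-c also had a page on the failed device but never asks for it, so it keeps running.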

Hopefully this explanation helps you gauge your comfort level with redundancy and weigh the PROs and CONs of the currently supported options.

Scalability

Scaling memory is often an unexpected need, and it can be very costly. As you may know, memory accounts for a large percentage (up to 80%) of the total cost of a new server. Depending on how you approached the server purchase, you may have opted for smaller DIMMs and filled every slot, which leaves no option to scale memory unless you buy all-new DIMMs or, worse, a replacement server. Or you may have opted for higher-density DIMMs and left some slots open for future growth. This approach lets you scale memory, but at a price, since you have to purchase those new DIMMs (if they are still available) at a later date. Either approach is costly and slow, because most companies take a long time to approve new, out-of-scope expenses and cut purchase orders.

This is another area in which NVMe Memory Tiering excels: it lowers your cost AND lets you scale your memory rapidly. Scaling memory becomes a matter of purchasing at least one NVMe device and enabling Memory Tiering, and just like that, you get 100% MORE memory for your hosts. Talk about value. You can even “steal” a drive from your vSAN datastore if you can spare it for Memory Tiering… more on this later (proceed with caution).
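The "100% MORE memory" figure assumes the NVMe tier is sized at 100% of DRAM (a 1:1 ratio). As a hedged sketch, the arithmetic looks like this in Python; `nvme_pct` is a hypothetical parameter name for that ratio, the 512 GB host is an example, and sizing the NVMe device itself is the topic of Part 3.

```python
def nvme_tier_gb(dram_gb: int, nvme_pct: int = 100) -> int:
    """NVMe tier capacity, expressed as a percentage of host DRAM."""
    return dram_gb * nvme_pct // 100


def tiered_memory_gb(dram_gb: int, nvme_pct: int = 100) -> int:
    """Total memory available to VMs: DRAM plus the NVMe tier."""
    return dram_gb + nvme_tier_gb(dram_gb, nvme_pct)


# Hypothetical host with 512 GB of DRAM.
print(tiered_memory_gb(512))      # 1024 (1:1 ratio, i.e. 100% more memory)
print(tiered_memory_gb(512, 50))  # 768
```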

For this section, it is important to understand the limits and possibilities so you can future-proof your investment. We’ve discussed the requirement for NVMe devices that meet certain Performance and Endurance classes, but what about the size of NVMe devices? Well, that is a perfect segue into Part 3, where we will discuss sizing… I know, cliffhanger!

Blog series:

PART 1: Prerequisites and Hardware Compatibility

PART 2: Designing for Security, Redundancy, and Scalability (This Blog)

PART 4: vSAN Compatibility and Storage Considerations

PART 6: End-to-End Configuration

Additional information on Memory Tiering

Discover more from VMware Cloud Foundation (VCF) Blog