The term “workload” is often used in discussions in the design, operation, or troubleshooting of an environment. Whether it be for a private, public, or hybrid cloud, many gloss over the meaning of the term without having a clear understanding of what it is. Perhaps even worse is the possibility of discussing the topic when each party has a different interpretation of what it means.

Let’s explore what this term means as it relates to any type of storage system.

Sorting through a Basic Definition

Merriam-Webster’s generic definition of “workload” is “The amount of work performed or capable of being performed within a specific period.” It is a curious definition because common examples of its use demonstrate that “workload” in most contexts does not represent an effort in a fixed period, but rather an ongoing effort, and characterizations of that effort. Nonetheless, let’s look at how this definition applies to computational environments.

For computational environments, a workload is an expression of an ongoing effort of an application AND what is being requested of it. Much like the practical use of “workload” in other realms, it is focused primarily on the work itself, and what is used to achieve the work.

Many times, discussions will assume that a workload is only one item: The application. This is false. Responding to the question of “Can you describe the workload?” with simply “18 SQL servers” tells very little about the demand on a storage system. They could be idle, compared to a single SQL server in another environment that is extremely busy. Without knowing what is being requested of it, the definition of “workload” is incomplete. But it is not just about the data processed, as applications also influence how data is processed. A structured OLTP database may update data in a very different way than in an unstructured format, such as a flat-file share. Applications tend to shape the characteristics of the workload itself by how it processes the data, or the software limits inherent to the solution.

The Layers of a Workload in a Computational Environment

A storage system doesn’t have any concept of workloads as defined above. Therefore, we need to add more specificity to the term “workload” to better understand how this translates to a storage system.

The illustration in Figure 1 captures how a workload could be viewed as it relates to platforms that power environments. At the very top is what represents the definition we just established. But that is not enough. We need ways to define the characteristics of those workloads that can make them so unique for a given environment. This will capture the volume of data created or consumed during a period of effort, the magnitude of the effort, and the time of approximate repeatability of that effort. This is defined through two time-based factors: Duty cycles and working set sizes.

Figure 1. The layers that make up a workload in a computational environment.

Duty Cycles

The duty cycle represents a time interval or cycle of repeatability. Data requests and processing is highly dependent on the consumers of the data and the purpose of the application, and how this manifests itself over time. There is an aspect of approximate repeatability of this effort over time, where a set of processes occurs then reoccurs at an interval. For example, some code compiling systems may be triggered hourly, and run for 25 minutes, or some batch processes on a database server may run every 4 hours. The duration of effort, the interval of effort, and the magnitude of effort will vary depending on the needs of the consumers (systems and users) in that environment.

Figure 2. A simplified illustration of how duty cycles and working sets comprise a workload

Duty cycles can often be visually identified when looking at the storage performance metrics (IOPS and throughput) of a given system over time, perhaps a day or so. It may also reveal itself in similar patterns in CPU and memory, as often those are tied to storage I/O processing activities. While observing these metrics are good, discussing the processes and activity of these systems with the application administrator is a recommended step to better comprehend the metrics.

Working Sets

A working set refers to the amount of data that a process or workflow creates or uses in a given period. It can be thought of as hot, commonly accessed data of your overall storage capacity. Working set sizes can be important to understand in the realm of storage, as these systems attempt to cache hot data in a manner that demands the least amount of effort. Larger working set sizes can sometimes purge out otherwise hot data from caching tiers of storage systems and may introduce levels of performance inconsistency.

While there is no good way to view working set sizes within vCenter Server, observing the duty cycles of IOPS and throughput (as illustrated in Figure 2) can be a good start to identify patterns to see if there is a correlating impact on performance. For more information, see my old post “Working set sizes in the data center.”

What the Storage System Experiences

The workloads determine the level of activity on a storage system, but what we define a workload to be, and what the underlying layers of a storage system experience are two very different things. The working set size and the duty cycle are the manifestations of established patterns over time from the applications, the systems, and the users requesting the data. What the storage experiences are a series of read and write operations (I/O commands) and the associated payload to and from the storage system.

- Read Operations. Read operations refer to the “commands” that are issued to read the data desired. They can be for random blocks scattered around the storage system, or adjacent to each other. Randomness and sequentiality refer to the access pattern of the blocks on the storage device that these commands must fulfill. The latter can take less effort as it may be able to achieve more payload per command. This larger payload per command can increase throughput. If the data is read frequently, it is up to the storage system to place the data that best compliments that frequency of access. It can usually do this through caching algorithms.

- Write Operations. Write operations refer to the “commands” that are issued to write the data. They share some similarities in access patterns to read commands noted above, but writes are more difficult for a storage system because they require more effort. Allocating free blocks, writing the data resiliently, calculating checksums, compressing or deduplicating the data, and committing to the physical media. If the data is written frequently, storage systems may use techniques so that this frequently overwritten data remains in the buffers to improve the efficiency and performance of a storage system.

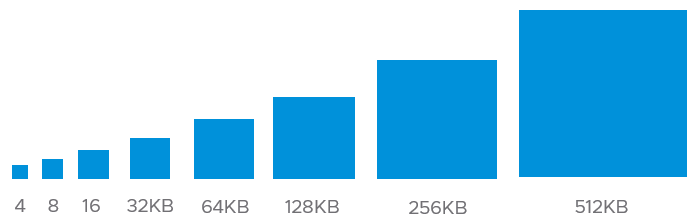

- Payload size. An I/O is a single unit of storage payload in flight. The payload size of an I/O can vary; thus we refer to it as “I/O size.” It is also sometimes referred to as “I/O length” or “block size.” Note that “block size” is used as a term in other contexts within the storage, and in this case, is not referring to on-disk filesystem structures. Contrary to popular belief, a VM will use a wide mix of I/O sizes, primarily dictated by the OS and application processes, and the distribution of I/Os will change on a second-by-second basis. It is this mix, multiplied with the IOPS, that determines the effective storage bandwidth consumed. I/O sizes can typically range from 4KB to 1MB in size, meaning that a single I/O can be 256x the size of another I/O. It is the number of I/Os per second (IOPS) multiplied by the I/O size of each of those discrete I/O commands that equals the throughput.

Figure 3. A visual representation of I/O sizes.

Previously existing as a VMware fling, VMware introduced IOInsight directly into vSAN in version 7 U1. This can help identify rates of randomness or sequentially, as well as I/O size distributions. The I/O size distribution is a summary over a fixed period, as opposed to a distribution over multiple time intervals (preferable), but it can still be of great utility in understanding the characteristics of a workload.

It is the combination of read and write operations, along with the resulting throughput that can affect how well the storage system can fulfill its responsibilities of timely data retrievals and writes. An overwhelming amount of I/O commands can place a different burden on the storage system than an overwhelming amount of throughput. The end result in both cases can be increased latency, and decreased performance of the application.

Summary

Not all workloads are created equal. But understanding what they are, and how to identify them in an environment can result in better design, operation, and optimization regardless of where the workloads reside.

Discover more from VMware Cloud Foundation (VCF) Blog

Subscribe to get the latest posts sent to your email.