Through the power of storage policies, vSAN gives administrators the ability to granularly assign unique levels of resilience and space efficiency to data on a vSAN datastore. It is an extraordinarily flexible capability for enterprise storage, but it can sometimes cause confusion when interpreting storage capacity usage.

Let’s explore how vCenter Server renders the provisioning and usage of storage capacity, to support better capacity management. This guidance applies to vSAN-powered environments, both on-premises and in the cloud, using vSAN 7 or newer. For the sake of clarity, all references will be in Gigabytes (GB) and Terabytes (TB), as opposed to GiB and TiB, and values may be rounded up or down to simplify the discussion.

Terms in vCenter when using Storage Arrays versus vSAN

When discussing storage capacity and consumption, common terms can mean different things when applied to different storage systems. For this reason, we will clarify what these terms within vCenter Server mean for both vSphere using traditional storage arrays, and vSAN. The two terms below can be found in vCenter Server, at the cluster level, in the VMs tab.

- Provisioned Space. This refers to how much storage capacity is assigned to the VMs and/or virtual disk(s) by the hypervisor through the VM’s virtual hardware settings. For example, a VM with a 100GB VMDK would show 100GB provisioned. A thin-provisioned storage system may not actually allocate that entire amount, but rather only the space that is, or has been, used in the VMDK.

- Used Space. This refers to how much of the “Provisioned Space” is consumed by real data. As more data is written to a volume, used space increases. It will only decrease if the data is deleted and TRIM/UNMAP reclamation commands are issued inside the guest VM using the VMDK.
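To make the distinction concrete, here is a minimal Python sketch of a thin-provisioned virtual disk. It is a toy model, not how vSAN or any array actually tracks blocks: the class name `ThinVmdk` is hypothetical, and it assumes 1GB blocks for readability. It shows that Provisioned Space is fixed by the VM settings, that Used Space grows only on first writes, and that deleting data inside the guest does not lower Used Space until TRIM/UNMAP is issued.

```python
class ThinVmdk:
    """Toy model of a thin-provisioned VMDK (hypothetical; 1 block = 1GB)."""

    def __init__(self, provisioned_gb: int) -> None:
        self.provisioned_gb = provisioned_gb  # "Provisioned Space": fixed by VM settings
        self.allocated = set()                # blocks backed by real capacity ("Used Space")
        self.live = set()                     # blocks the guest file system still uses

    def write(self, blocks) -> None:
        for b in blocks:
            if not 0 <= b < self.provisioned_gb:
                raise ValueError(f"block {b} is outside the {self.provisioned_gb}GB disk")
            self.live.add(b)
            self.allocated.add(b)  # only the first write to a block allocates capacity

    def delete(self, blocks) -> None:
        # A guest delete only updates file system metadata; storage is not told
        self.live.difference_update(blocks)

    def unmap(self) -> None:
        # TRIM/UNMAP informs storage which blocks are free so they can be reclaimed
        self.allocated &= self.live

    @property
    def used_gb(self) -> int:
        return len(self.allocated)


if __name__ == "__main__":
    disk = ThinVmdk(100)
    disk.write(range(14))       # guest OS plus data, about 14GB
    disk.delete(range(10, 14))  # delete 4GB inside the guest
    print(disk.provisioned_gb, disk.used_gb)  # used space has not dropped yet
    disk.unmap()
    print(disk.provisioned_gb, disk.used_gb)  # reclaimed after TRIM/UNMAP
```

Rewriting a block that is already allocated does not increase Used Space in this model, which mirrors the "is, or has been, used" wording above.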

Storage arrays will often advertise their capacity to the hypervisor after a global level of resilience has been applied to the array. The extra storage capacity used for resilience and system overhead is masked from the administrator, since all the data stored on the array is protected with the same resilience and space efficiency settings.
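As a rough illustration of why that overhead stays hidden, the sketch below computes what an array might advertise after applying one global protection scheme. The function name, the 4+2 layout, and the overhead fraction are illustrative assumptions, not values from any particular array.

```python
def array_advertised_tb(raw_tb: float, data_disks: int, parity_disks: int,
                        system_overhead: float = 0.0) -> float:
    """Capacity an array might expose after one global protection scheme.

    Hypothetical model: every stripe holds `data_disks` of data plus
    `parity_disks` of parity, and `system_overhead` is the fraction of the
    remaining space set aside for metadata or spares.
    """
    if not 0.0 <= system_overhead < 1.0:
        raise ValueError("system_overhead must be in [0, 1)")
    data_fraction = data_disks / (data_disks + parity_disks)
    return raw_tb * data_fraction * (1.0 - system_overhead)


if __name__ == "__main__":
    # 12TB raw behind a global 4+2 scheme: the hypervisor only sees about 8TB
    print(array_advertised_tb(12.0, 4, 2))
    # Same array with an assumed 10% system overhead
    print(array_advertised_tb(12.0, 4, 2, system_overhead=0.10))
```

Because every volume inherits the same scheme, the administrator never needs to see the parity or overhead portion.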

vSAN is different in how it advertises the overall cluster capacity and in the values it presents in the “Provisioned Space” and “Used Space” categories in vCenter Server. Let’s look at why this is the case.

Presentation of Capacity at the vSAN Cluster Level

vSAN presents all storage capacity available in the cluster in raw form. The total capacity advertised by a cluster is the aggregate of all capacity devices located in the disk groups of the hosts that comprise the vSAN cluster. Thanks to storage policies, different levels of resilience can be assigned per VM, or even per VMDK. As a result, the advertised free capacity does not reflect how much data can actually be stored with resilience, because that depends on the storage policy assigned to the data.
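The aggregation described above can be sketched as a simple sum. This is an illustrative Python model of the vSAN 7 disk-group layout, where only capacity devices (not cache devices) contribute; the host names and device sizes are made up for the example.

```python
from typing import Dict, List

# Hypothetical inventory: host -> disk groups -> capacity-device sizes (TB).
# Cache-tier devices are deliberately left out because they add no capacity.
Inventory = Dict[str, List[List[float]]]


def raw_cluster_capacity_tb(inventory: Inventory) -> float:
    """Sum every capacity device in every disk group on every host."""
    return sum(
        device_tb
        for disk_groups in inventory.values()
        for disk_group in disk_groups
        for device_tb in disk_group
    )


if __name__ == "__main__":
    cluster = {
        "esx01": [[2.0, 2.0], [2.0, 2.0]],
        "esx02": [[2.0, 2.0], [2.0, 2.0]],
        "esx03": [[4.0, 4.0]],
    }
    # This raw total is what the cluster advertises, regardless of policy
    print(raw_cluster_capacity_tb(cluster))
```

The result says nothing about how much of that raw total a RAID-1 or RAID-5 object could actually use; that depends on the policy.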

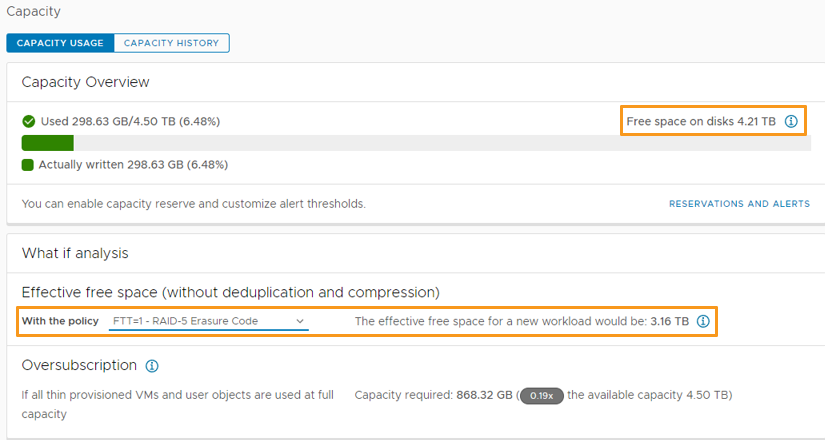

To give a better idea of the effective free capacity in a cluster, vSAN provides a “What if analysis” that shows how much effective free space would be available for a new workload using the desired storage policy. As shown in Figure 1, while the overall free space on the disks is reported as 4.21TB, the “What if analysis” shows an effective free space of 3.16TB if the VM(s) were protected at FTT=1 using a RAID-5 erasure code. While it only accounts for one storage policy at a time, this can be a helpful tool for understanding the effective capacity available for use.
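The numbers in Figure 1 are consistent with a simple ratio: in vSAN 7, a RAID-5 object is written as 3 data plus 1 parity, so each GB of data consumes about 1.33GB of raw capacity, and 4.21TB ÷ (4/3) ≈ 3.16TB. The sketch below applies that ratio for a few common policies. It is an approximation under that assumption, not the algorithm vCenter Server uses, and it ignores any other overheads the real analysis may include.

```python
# Raw capacity consumed per GB of data, assuming vSAN 7 layouts:
# RAID-1 keeps FTT+1 full copies, RAID-5 is 3+1, RAID-6 is 4+2.
POLICY_MULTIPLIER = {
    "FTT=0": 1.0,
    "FTT=1 RAID-1": 2.0,
    "FTT=2 RAID-1": 3.0,
    "FTT=1 RAID-5": 4 / 3,
    "FTT=2 RAID-6": 6 / 4,
}


def effective_free_tb(raw_free_tb: float, policy: str) -> float:
    """Approximate free space usable by new data protected with `policy`."""
    return raw_free_tb / POLICY_MULTIPLIER[policy]


if __name__ == "__main__":
    for name in POLICY_MULTIPLIER:
        print(f"{name:>14}: {effective_free_tb(4.21, name):.2f} TB")
```

Like the What if analysis itself, this covers one policy at a time; a cluster running mixed policies will land somewhere between these values.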

Figure 1. Capacity Overview and What-if Analysis for a vSAN cluster.

Just as with some storage arrays, vSAN thin provisions the storage capacity requested for a volume. The provisioned space of a VMDK is not consumed unless it is, or has been, written by the guest OS, and it is only reclaimed after data is deleted and TRIM/UNMAP reclamation commands are issued inside the guest VM using the VMDK. For more information, see the post: “The Importance of Space Reclamation for Data Usage Reporting in vSAN.”

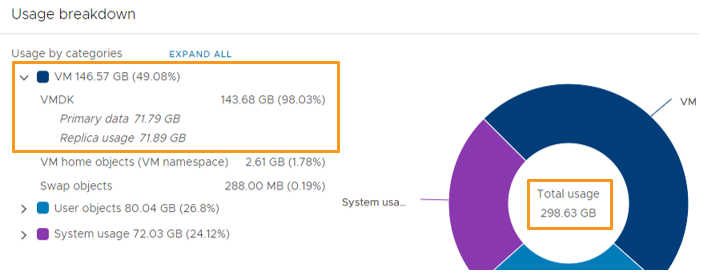

In the same cluster Capacity view, you will see a “Usage breakdown.” Under VM > VMDK, usage is broken down into Primary data and Replica usage. Replica usage reflects both the replica objects used with RAID-1 mirroring and the portion of object data that is parity for RAID-5/6 objects. Primary data and replica usage reflect the data written in thin-provisioned form. If Object Space Reservations (discussed later) are in use, the reserved capacity will also be reflected here.
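A rough way to reason about the two buckets: for a given amount of data written, the primary portion equals the data itself, while the replica portion is the extra copies (RAID-1) or parity (RAID-5/6). The sketch below assumes the vSAN 7 layouts used elsewhere in this post (3+1 RAID-5, 4+2 RAID-6) and ignores witness components and metadata; the function name is hypothetical.

```python
# Extra raw capacity per GB of primary data, assuming vSAN 7 layouts.
REPLICA_RATIO = {
    "FTT=0": 0.0,           # no extra copy
    "FTT=1 RAID-1": 1.0,    # one full mirror copy
    "FTT=2 RAID-1": 2.0,    # two full mirror copies
    "FTT=1 RAID-5": 1 / 3,  # 1 parity block per 3 data blocks
    "FTT=2 RAID-6": 2 / 4,  # 2 parity blocks per 4 data blocks
}


def usage_breakdown_gb(written_gb: float, policy: str) -> dict:
    """Split thin-provisioned usage into 'primary' and 'replica' buckets."""
    replica_gb = written_gb * REPLICA_RATIO[policy]
    return {"primary": written_gb, "replica": replica_gb, "total": written_gb + replica_gb}


if __name__ == "__main__":
    # 14GB written inside a guest, as in the VM examples later in this post
    for name in REPLICA_RATIO:
        print(name, usage_breakdown_gb(14.0, name))
```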

Figure 2. Usage breakdown.

Recommendation: Do not use the “Files” tab in the datastore view when attempting to understand cluster capacity usage. The “Hosts and Clusters” view will be a much more effective view to show cluster capacity usage. One can view overall cluster capacity under Monitor > vSAN, or VM usage in the “VMs” tab.

Now let’s look at how thin provisioning and granular settings for resilience look at a VM level.

Presentation of Capacity at the VM Level

The “VMs” view within vCenter Server is a great place to understand how vSAN displays storage usage. For the following examples, several VMs have been created on a vSAN cluster, each with a single VMDK that is 100GB in size. The volume consists of the guest OS (roughly 4GB) and an additional 10GB of data created. This results in about 14GB of the 100GB volume used. Each VM described below will have a unique storage policy assigned to it to demonstrate how the values in the “Provisioned Space” and “Used Space” will change.

VM01. This uses a storage policy with a Failures to Tolerate level of 0, or FTT=0, meaning that this data has no resilience. We discourage the use of FTT=0 for production workloads, but for this exercise it helps show how storage policies impact the capacity consumed. In Figure 3 we can see a Provisioned Space of 101.15GB and a Used Space of 13.97GB, which is what we would expect based on the example described above.

Figure 3. Provisioned and used space for VM01 with FTT=0.

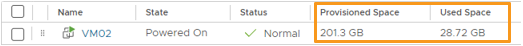

VM02. This uses a storage policy that specifies an FTT=1 using a RAID-1 mirror. Because this is a mirror, it is making a full copy of the data elsewhere (known as a replica object), so you will see below that both the provisioned space and the used space double when compared to VM01 using FTT=0.

Figure 4. Provisioned and used space for VM02 with FTT=1 using a RAID-1 mirror.

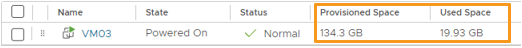

VM03. This uses a storage policy that specifies FTT=1 using a RAID-5 erasure code on a single object to achieve resilience. This is much more efficient than a mirror, so you will see below that both provisioned space and used space are 1.33x the capacity of VM01 using FTT=0.

Figure 5. Provisioned and used space for VM03 with FTT=1 using a RAID-5 erasure code.

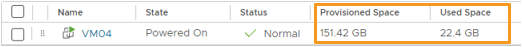

VM04. This uses a storage policy that specifies FTT=2 using a RAID-6 erasure code on a single object to achieve higher resilience. As shown below, the provisioned space and used space are 1.5x the capacity of VM01 using FTT=0.

Figure 6. Provisioned and used space for VM04 with FTT=2 using a RAID-6 erasure code.

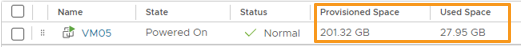
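The multipliers in the VM01 to VM04 examples follow from how each policy lays out data. Assuming the vSAN 7 layouts of 3 data + 1 parity for RAID-5 and 4 data + 2 parity for RAID-6, the capacity multiplier m relative to FTT=0 works out as:

```latex
\begin{aligned}
m_{\text{RAID-1}} &= \mathrm{FTT} + 1 \\
m_{\text{RAID-5}} &= \tfrac{3 + 1}{3} \approx 1.33 \\
m_{\text{RAID-6}} &= \tfrac{4 + 2}{4} = 1.5 \\
\text{Used}_{\text{policy}} &\approx m \times \text{Used}_{\text{FTT=0}}
\end{aligned}
```

Applied to VM01’s 13.97GB of used space, this predicts roughly 27.94GB for VM02 (m = 2), 18.63GB for VM03, and about 20.96GB for VM04; the same multipliers apply to the 101.15GB of provisioned space. These are predictions from the formula, not values read from the figures.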

VM05. This uses a storage policy that specifies FTT=1 through a RAID-1 mirror (similar to VM02), but the policy has an additional rule, known as Object Space Reservation, set to “100.” You will see below that even though the provisioned space is now guaranteed on the cluster, the provisioned and used space values remain the same as for VM02, which also uses FTT=1 through a RAID-1 mirror.

Figure 7. Provisioned and used space for VM05 with FTT=1 using a RAID-1 mirror with an OSR=100.

The examples above demonstrate the following:

- The “Provisioned Space” and “Used Space” columns will change values when adjusting storage policy settings. This is different from traditional storage arrays that use a global resilience and efficiency setting.

- The provisioned space value in this VM view does not reflect any thin provisioning performed by the storage. This is consistent with storage arrays.

- vSAN Object Space Reservations do not change the values in the Provisioned Space or Used Space columns.

Note that “Provisioned Space” may show different values when a VM is powered off versus powered on. This is because provisioned space accounts for the capacity of all VMDKs in addition to the VM memory object, the VM namespace object, etc.

Object Space Reservations

Storage policies can use an optional rule known as Object Space Reservation (OSR). It serves as a capacity management mechanism: when used, it reserves storage so that the provisioned space assigned to a given VMDK is guaranteed to be fully available. A value of 0 means the object will be thin provisioned, while a value of 100 means it will be the equivalent of thick provisioned. It is a reservation in the sense that it proactively reserves capacity in the cluster, but it does not alter the VMDK or the file system inside the VMDK. Traditional thick provisioning formats such as Lazy Zeroed Thick (LZT) and Eager Zeroed Thick (EZT), by contrast, are applied to the VMDK itself.
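As a back-of-the-envelope sketch, the capacity an OSR sets aside can be estimated from the VMDK size, the OSR percentage, and the policy’s capacity multiplier. The sketch assumes that the reservation covers every mirror copy or parity component the policy creates, so a 100GB VMDK with FTT=1 RAID-1 and OSR=100 would reserve about 200GB, and that an object holds whichever is larger of its written data and its reservation. Both function names are hypothetical.

```python
def osr_reserved_gb(vmdk_gb: float, osr_percent: int, multiplier: float) -> float:
    """Estimate the cluster capacity reserved for one VMDK.

    Assumption: the reservation applies to every copy/parity component,
    so it scales with the policy's capacity multiplier.
    """
    if not 0 <= osr_percent <= 100:
        raise ValueError("OSR must be between 0 and 100")
    return vmdk_gb * (osr_percent / 100) * multiplier


def cluster_consumed_gb(written_gb: float, vmdk_gb: float,
                        osr_percent: int, multiplier: float) -> float:
    """Assumption: raw capacity held is the larger of written data and reservation."""
    return max(written_gb * multiplier, osr_reserved_gb(vmdk_gb, osr_percent, multiplier))


if __name__ == "__main__":
    # 100GB VMDK, 14GB written, FTT=1 RAID-1 (multiplier 2.0)
    print(cluster_consumed_gb(14, 100, 0, 2.0))    # thin: only written data counts
    print(cluster_consumed_gb(14, 100, 100, 2.0))  # OSR=100: full reservation held
```

Consistent with the VM05 example, none of this changes the Provisioned Space or Used Space columns in the VMs view; it shows up only in cluster-level capacity accounting.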

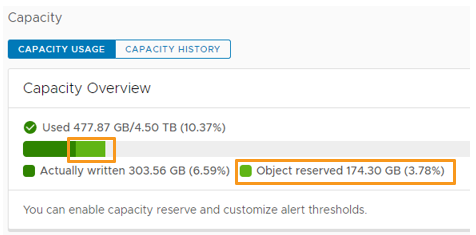

While the Provisioned Space and Used Space values in the list of VMs do not change when using a storage policy that sets an OSR of 100, the object space reservation is represented in the cluster capacity view shown in Figure 8, in a light green color. OSR settings are still honored when using Deduplication & Compression or the Compression-Only service, but the OSR values will no longer be visible in the Capacity Overview.

Figure 8. Objects that use an OSR will have their reservations noted at the cluster level.

Setting an OSR in a storage policy can be useful for certain use cases, but this type of reserved allocation should not be used unless it is necessary, as it will have the following impacts on your cluster:

- Negates the benefit of thin provisioning.

- Eliminates the benefit of reclamation techniques such as TRIM/UNMAP on guest VMs.

- Reduces space efficiency when using deduplication & compression.

OSR settings do have a significant benefit over a thick-provisioned VMDK: the capacity reservation can be removed easily through a single change to a storage policy.

Recommendation: Unless an ISV specifically requires it, DO NOT set VMDKs to be thick provisioned or use VM templates with thick-provisioned VMDKs. The performance benefit of thick provisioning is questionable, as the assumption that first writes take more effort than subsequent writes does not always hold with modern storage systems. If you must guarantee capacity, simply set the OSR to 100 for the objects whose capacity you want guaranteed. This avoids any thick provisioning of the guest VMDK and gives you the flexibility to change the setting later.

Summary

vSAN takes a different approach in presenting storage capacity because it offers granular settings that are typically not available with a traditional storage array. Understanding how vSAN presents capacity usage will help in your day-to-day operations and strategic planning of resources across your environments.

Discover more from VMware Cloud Foundation (VCF) Blog
