The vSAN Express Storage Architecture (ESA) has demonstrated remarkable performance since its debut in 2022, but that does not mean it has reached its full potential. The initial release of the ESA laid the foundation for storage performance once thought nearly impossible in a distributed storage system. Just as with vSAN 8 U1, the vSAN engineering team has pushed the performance of the ESA further in vSAN 8 U2.

The ESA in vSAN 8 U2 builds on two specific improvements first introduced in vSAN 8 U1 and described in the post, “Performance Improvements with the Express Storage Architecture in vSAN 8 U1.” Like past ESA performance improvements, these new capabilities are embedded into the system and do not require any tuning or operational changes by an administrator. Let’s look at what has been done in vSAN 8 U2 to unlock even more performance potential from the Express Storage Architecture.

vSAN ESA Adaptive Write Path Optimizations

The ESA’s log-structured filesystem (LFS) in vSAN 8 U1 included a new adaptive write path that determines the characteristics of the incoming I/O in real time and writes data using whichever of two data paths it deems optimal for that I/O. Its default write path handles smaller I/O sizes, while its large I/O write path is designed to handle large I/O sizes or large quantities of outstanding I/O. This innovative approach helped solve a common challenge with storage systems: delivering performance across a variety of workload conditions.
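To make the idea of choosing between two write paths concrete, here is a minimal sketch in Python. It is only an illustration of the decision described above, not vSAN's implementation: the names `IoSample` and `choose_write_path`, and both threshold values, are hypothetical and were picked purely for the example. The actual heuristics used by the ESA are internal to vSAN.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only. The real criteria used by
# the ESA are not described in this post.
LARGE_IO_BYTES = 64 * 1024
HIGH_OUTSTANDING_IO = 32


@dataclass
class IoSample:
    """Observed characteristics of the incoming I/O (hypothetical type)."""
    size_bytes: int       # size of the incoming write
    outstanding_io: int   # number of writes currently in flight


def choose_write_path(sample: IoSample) -> str:
    """Pick a write path from the observed I/O characteristics.

    Large I/O sizes OR a large quantity of outstanding I/O select the
    large I/O path; everything else uses the default path.
    """
    if (sample.size_bytes >= LARGE_IO_BYTES
            or sample.outstanding_io >= HIGH_OUTSTANDING_IO):
        return "large_io"
    return "default"


if __name__ == "__main__":
    print(choose_write_path(IoSample(size_bytes=4096, outstanding_io=2)))
    print(choose_write_path(IoSample(size_bytes=512 * 1024, outstanding_io=2)))
    print(choose_write_path(IoSample(size_bytes=4096, outstanding_io=128)))
```

The point of the sketch is that the decision is made per I/O from live observations, which is why no administrator tuning is involved.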

vSAN 8 U2 improves the LFS by tuning how it uses memory to process data under high demand. For each object, the LFS uses an I/O bank, which is an allocation of memory used to prepare data and metadata for an efficient full stripe write. In previous versions, this typically meant two bank stripes per object: one for active incoming I/O, and another flushing data as a stripe with parity to the capacity leg of the object. Instead of using a fixed number of banks per object, vSAN 8 U2 dynamically allocates a collection of bank stripes per object to accommodate more incoming I/O. This allows the LFS to flush multiple non-active banks in an ordered fashion, as shown in Figure 1.

Figure 1. Adaptive write path optimizations for the ESA in vSAN 8 U2.
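The effect of moving from a fixed pair of banks to a dynamically sized collection can be sketched with a toy model. The Python below is a simplified illustration under stated assumptions, not how the LFS is implemented: the function `simulate`, its parameters, and the tick-based timing are all hypothetical. It assumes one active bank accepts writes, full banks are sealed and flushed strictly in order, and a write stalls when the active bank is full and no further bank may be allocated.

```python
from collections import deque


def simulate(writes_per_tick, bank_capacity, flush_ticks, max_banks):
    """Count writes that could not be accepted because no bank was free.

    Toy model (hypothetical): stalled writes are simply counted, not retried.
    """
    flushing = deque()  # remaining flush ticks per sealed bank, oldest first
    active_fill = 0     # number of writes held in the active bank
    stalled = 0
    for burst in writes_per_tick:
        # Ordered flush: only the oldest sealed bank makes progress.
        if flushing:
            flushing[0] -= 1
            if flushing[0] == 0:
                flushing.popleft()
        for _ in range(burst):
            if active_fill == bank_capacity:
                # Sealing the active bank needs one more bank to become
                # the new active bank, so check the per-object limit.
                if len(flushing) + 1 < max_banks:
                    flushing.append(flush_ticks)
                    active_fill = 0
                else:
                    stalled += 1
                    continue
            active_fill += 1
    return stalled


if __name__ == "__main__":
    burst = [8, 8, 8, 8]  # a write-heavy burst against one object
    fixed = simulate(burst, bank_capacity=4, flush_ticks=2, max_banks=2)
    dynamic = simulate(burst, bank_capacity=4, flush_ticks=2, max_banks=8)
    print(f"stalled writes with two banks:  {fixed}")
    print(f"stalled writes with up to eight: {dynamic}")
```

In this model, the two-bank configuration stalls as soon as the single flushing bank is busy, while the larger collection keeps absorbing the burst and queues sealed banks for ordered flushing. The numbers are artifacts of the toy parameters and say nothing about real vSAN performance.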

Write-intensive workloads that place a strain on a single VMDK will benefit the most from this enhancement, with higher IOPS, higher throughput, and lower latency when the workloads demand it. The entire platform will see a collective benefit as well, since pending writes across the platform can be satisfied more quickly, freeing up resources for subsequent activity. How much better is the performance of a single VMDK in the ESA versus the OSA? A single VMDK using RAID-6 and the ESA can match the performance of an entire host of VMDKs using RAID-1 in the OSA. This is yet another example of the extraordinary potential of the ESA.

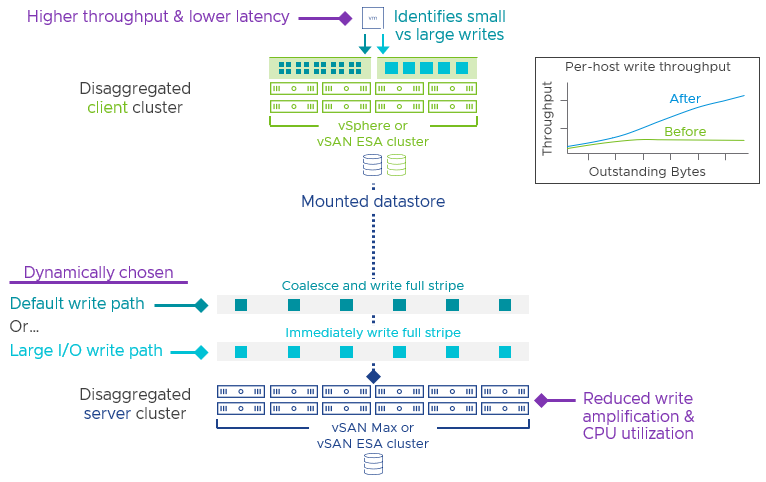

Adaptive Write Path for Cross-Cluster Capacity Sharing and vSAN Max

vSAN 8 U2 can deliver these performance optimizations not only in a traditional vSAN HCI cluster, but also in cross-cluster capacity sharing of vSAN HCI clusters and in fully disaggregated clusters using vSAN Max. This allows the highest layer in the vSAN stack, located on the client cluster in these topologies, to pass data at a higher rate down to the lower layers of the vSAN stack located on the server cluster, as shown in Figure 2.

Figure 2. vSAN ESA adaptive write path for disaggregated storage.

The actual gains observed in a production environment depend heavily on the workload conditions and the connectivity between the client cluster and the server cluster. Internal testing, however, has shown dramatic performance improvements, especially under conditions with large quantities of outstanding I/O, large I/O sizes, or a combination of both.

Recommendation. Use sufficient network connectivity between vSphere clusters and a vSAN Max cluster. A datastore provided by a vSAN Max storage cluster can be mounted by vSphere clusters (also known as “vSAN compute clusters”) with as little as 10Gb connectivity between clusters, but higher bandwidth and lower latency connectivity may provide much better performance if the workloads on the client cluster demand it. See the “Networking” section in the vSAN Max Design and Operational Guidance document for more information.

Summary

vSAN 8 U2 continues to move the potential performance capabilities of the Express Storage Architecture to all new levels. It can satisfy performance expectations for even the most demanding applications, all without any additional complexity. Stay tuned for even more to come!
