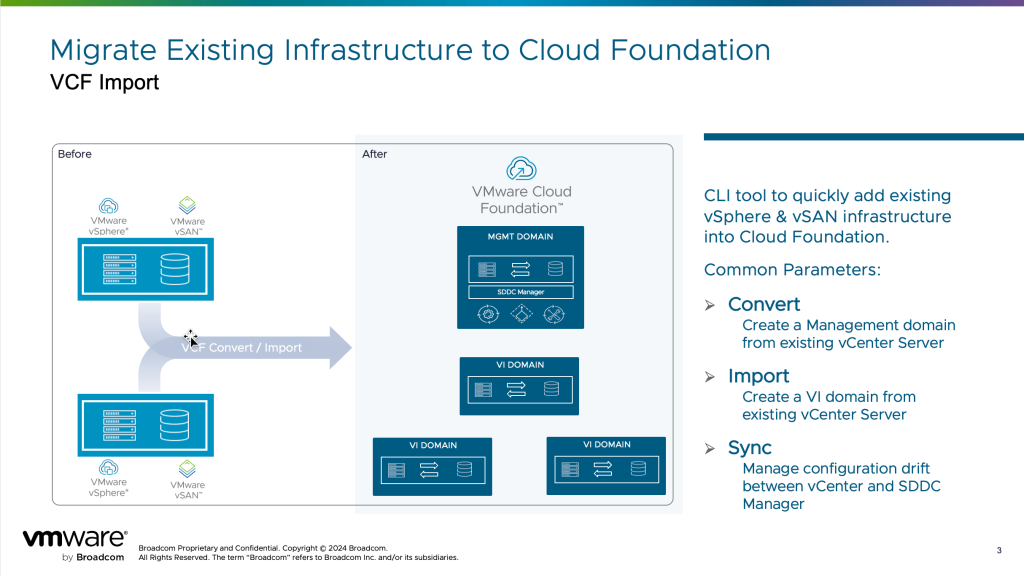

VMware Cloud Foundation (VCF) 5.2 introduced us to the VCF Import tool. The import tool is a command line tool that enables customers to easily and non-disruptively convert/import their existing vSphere infrastructure into a modern Cloud Foundation private cloud.

As is to be expected, this initial release of the VCF Import tool comes with some limitations, and while these limitations will be addressed over time they can present a challenge for early adopters of the tool. For example, one notable limitation that affects both VCF 5.2 and 5.2.1 is that when converting/importing workload domains the ESXi hosts are not configured with the requisite Tunnel Endpoints (TEPs) needed to enable virtual networking within the NSX Fabric. As such, before you can use the virtual networking capabilities provided by VCF Networking you need to complete some additional NSX configuration.

In this blog I provide an overview of the steps to manually enable virtual networking for a converted/imported domain. At a high level this involves three things:

- Creating an IP Pool

- Configuring an overlay Transport Zone (TZ)

- Updating the host’s Transport Node Profile (TNP)

In this example I am using a vCenter Server instance named “vcenter-wld01.vcf.sddc.lab” that was imported as a Virtual Infrastructure (VI) domain named “wld01”. During the import, a new NSX Manager instance was deployed and the hosts in my domain were configured for NSX with VLAN-backed port groups. I will extend this default NSX configuration by configuring TEPs on the ESXi hosts and enabling an overlay Transport Zone (TZ).

Before updating the configuration, it’s good to verify that the domain was successfully imported and that there are no lingering alerts or configuration issues. There are three places you should look to validate the health of an imported domain: the SDDC Manager UI, the vSphere Client, and the NSX Manager UI.

Step 1: Confirm Successful Domain Convert/Import

Begin by ensuring that the vCenter Server instance was successfully imported and NSX properly deployed. The screenshot below shows the output of the VCF Import CLI tool. Note the status of “pass” for the vCenter instance named vcenter-wld01.vcf.sddc.lab.

Next, verify the domain’s status in the SDDC Manager UI. Here you’ll want to make sure that the domain’s status is “ACTIVE”. You’ll also want to confirm that the NSX Manager cluster was successfully deployed and has an IP address assigned.

Procedure:

From the SDDC Manager UI

- Navigate to Workload Domains -> wld01

- Confirm the workload domain status shows ACTIVE and has an NSX Manager IP address assigned.

Step 2: Verify Domain Is Healthy From The vSphere Client

In vCenter, verify there are no active alarms for the imported vCenter Server instance or any of the configured clusters. Issues typically show up as warning icons on the respective objects in the inventory view. You can also select each object in the inventory tree and navigate to the “Monitor” tab, where you can see any configuration issues or alerts under “Issues and Alarms”. I recommend you resolve any issues before proceeding.

Procedure:

From the vSphere Client

- Navigate to the Inventory View

- Expand the inventory

- Navigate to the vSphere Cluster -> Monitor -> All Issues

Step 3: Verify Domain Is Healthy From The NSX Manager UI

Finally, from the NSX Manager UI, verify that all hosts have been successfully configured, as indicated by an “NSX Configuration” state of “Success” and a “Status” of “Up”.

Note that in the example below there is a single vSphere cluster. If you have multiple clusters in your domain, be sure to verify the NSX configuration of all hosts in each cluster.

Procedure:

From the NSX Manager UI

- Navigate to System -> Fabric -> Hosts

- Expand the cluster

With a healthy domain you are ready to proceed with updating the NSX configuration to enable virtual networking. Again, this involves creating an IP Pool with a range of IP addresses to use for the Host TEPs, creating/updating an overlay TZ, and updating the ESXi host Transport Node Profile (TNP).

Step 4: Create IP Pool for ESXi Host TEPs

Before you enable virtual networking you first need to configure Tunnel Endpoint (TEP) IP addresses on the ESXi hosts. A separate TEP address is assigned to each physical NIC configured as an active uplink on the vSphere distributed switches (VDSs) that will back your NSX virtual networks. For example, if you have a VDS with two NICs, two TEPs will be assigned. If you have a VDS with four NICs, four TEPs will be assigned. Keep this in mind when considering the total number of addresses you will need to reserve in the IP pool.

Note: NSX uses the Geneve protocol to encapsulate and decapsulate network traffic at the TEP. Because encapsulation adds headers to every frame, the TEP interfaces and the physical network that carries TEP traffic need an MTU of at least 1600 (an MTU of 9000, i.e. jumbo frames, is recommended).
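To see where the 1600 figure comes from, here is a small arithmetic sketch. The header sizes are the standard ones (the Geneve base header is 8 bytes per RFC 8926). The 50-byte allowance for Geneve options is my own illustrative assumption, not a value NSX publishes in this post.

```python
# Header sizes in bytes for Geneve over IPv4.
INNER_ETHERNET = 14  # Ethernet header of the encapsulated VM frame
GENEVE_BASE = 8      # fixed Geneve header, before any options
OUTER_UDP = 8
OUTER_IPV4 = 20


def required_underlay_mtu(vm_mtu: int, options_budget: int = 0) -> int:
    """Smallest IP MTU the TEP network needs to carry a VM packet of vm_mtu bytes.

    options_budget is extra room reserved for variable-length Geneve options
    (an assumed, illustrative value).
    """
    return (vm_mtu + INNER_ETHERNET + GENEVE_BASE + options_budget
            + OUTER_UDP + OUTER_IPV4)


print(required_underlay_mtu(1500))      # 1550: no room for options
print(required_underlay_mtu(1500, 50))  # 1600: leaves headroom for options
```

A standard 1500-byte VM packet therefore does not fit in a 1500-byte underlay, which is why the TEP network needs the larger MTU.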

To facilitate the assignment and ongoing management of the ESXi Host TEP IPs, create an “IP Pool” inside NSX. To do this, you first need to identify a range of IP addresses to use for the Host TEPs and assign this range to the NSX IP Pool.

In this example, I am using the subnet 172.16.254.0/24 for my host TEPs. I will create an IP Pool named “ESXi Host TEP Pool” with a range of fifty addresses (172.16.254.151-172.16.254.200).
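Before creating the pool, it can help to sanity-check the range against the number of TEPs you expect. The sketch below uses only the Python standard library. The host count of four is a hypothetical value for illustration; the range and subnet are the ones from my example.

```python
import ipaddress


def pool_size(start: str, end: str, cidr: str) -> int:
    """Count the addresses in an inclusive range, checking it sits inside cidr."""
    network = ipaddress.ip_network(cidr)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if first not in network or last not in network:
        raise ValueError(f"{start}-{end} is not inside {cidr}")
    if last < first:
        raise ValueError("range end is before range start")
    return int(last) - int(first) + 1


def teps_needed(hosts: int, active_uplinks_per_host: int) -> int:
    """One TEP address per active uplink on each host."""
    return hosts * active_uplinks_per_host


size = pool_size("172.16.254.151", "172.16.254.200", "172.16.254.0/24")
print(size)               # 50 addresses in the pool
print(teps_needed(4, 2))  # 8 addresses for four hosts with two uplinks each
print(size // 2)          # 25 two-uplink hosts fit in this pool
```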

Procedure:

From the NSX Manager UI:

- Navigate to Networking -> IP Address Pools

- Click ADD IP ADDRESS POOL

Enter the following:

- Name: ESXi Host TEP Pool

- Subnets:

- ADD SUBNET -> IP Ranges

- IP Ranges: 172.16.254.151-172.16.254.200

- CIDR: 172.16.254.0/24

- Gateway IP: 172.16.254.1

- Click ADD

- Click APPLY

- Click SAVE
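If you prefer to script this step, the same pool can in principle be created through the NSX Policy REST API. The sketch below only builds the request paths and bodies and does not contact NSX Manager. The endpoint paths and field names reflect my understanding of the Policy API and are assumptions to verify against the API reference for your NSX version. The IDs `esxi-host-tep-pool` and `tep-subnet-1` are hypothetical names I chose for illustration.

```python
import json


def ip_pool_requests(pool_id, subnet_id, display_name,
                     start, end, cidr, gateway_ip):
    """Return (method, path, body) tuples; nothing is sent over the network."""
    pool_path = f"/policy/api/v1/infra/ip-pools/{pool_id}"
    subnet_body = {
        # Assumed resource type for a static range subnet in the Policy API.
        "resource_type": "IpAddressPoolStaticSubnet",
        "allocation_ranges": [{"start": start, "end": end}],
        "cidr": cidr,
        "gateway_ip": gateway_ip,
    }
    return [
        ("PATCH", pool_path, {"display_name": display_name}),
        ("PATCH", f"{pool_path}/ip-subnets/{subnet_id}", subnet_body),
    ]


requests_to_send = ip_pool_requests(
    pool_id="esxi-host-tep-pool",   # hypothetical ID
    subnet_id="tep-subnet-1",       # hypothetical ID
    display_name="ESXi Host TEP Pool",
    start="172.16.254.151",
    end="172.16.254.200",
    cidr="172.16.254.0/24",
    gateway_ip="172.16.254.1",
)

for method, path, body in requests_to_send:
    print(method, path)
    print(json.dumps(body, indent=2))
```

Keeping the payload construction separate from the HTTP call makes it easy to review exactly what would be sent before pointing any client at a real NSX Manager.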

Step 5: Update Default Overlay Transport Zone (TZ)

In NSX, a Transport Zone (TZ) controls which hosts can participate in a logical network. NSX provides a default overlay transport zone named “nsx-overlay-transportzone”. In this example I will use this TZ. If you choose not to use the default overlay TZ but to instead create your own overlay TZ, I recommend you set the TZ you create as the default overlay TZ.

Start by adding two tags to the TZ. These tags are used internally by the SDDC Manager to identify the TZ as being used by VCF. Note that there are two parts to the tag, the tag name and the tag scope. Also, tags are case sensitive so you will need to enter them exactly as shown.

| Tag Name | Scope |
|---|---|
| VCF | Created by |
| vcf | vcf-orchestration |

Procedure:

From the NSX Manager UI

- Navigate: System -> Fabric -> Transport Zones

- Edit “nsx-overlay-transportzone”

- Add two Tags:

- Tag: VCF Scope: Created by

- Tag: vcf Scope: vcf-orchestration

- Click SAVE
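Because the tags are case sensitive, a typo is easy to miss. Here is a minimal sketch of a check you could run against the tag list of the transport zone. It assumes tags are represented as a list of `scope`/`tag` dictionaries, which is how I understand NSX returns them, and the sample input is made up for illustration.

```python
# The two (scope, tag) pairs from the table above, matched exactly.
REQUIRED_TAGS = {("Created by", "VCF"), ("vcf-orchestration", "vcf")}


def missing_vcf_tags(tags):
    """Return the required (scope, tag) pairs that are absent, case-sensitively."""
    present = {(t.get("scope", ""), t.get("tag", "")) for t in tags}
    return REQUIRED_TAGS - present


# Hypothetical tag list with a casing mistake in the first scope.
sample_tags = [
    {"scope": "created by", "tag": "VCF"},
    {"scope": "vcf-orchestration", "tag": "vcf"},
]

print(missing_vcf_tags(sample_tags))  # {('Created by', 'VCF')}
```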

Step 6: Edit Transport Node Profile to Include Overlay TZ

Next, update the transport node profile to include the Overlay TZ. When you add the Overlay TZ to the transport node profile, NSX will automatically configure the TEPs on the ESXi hosts.

Procedure:

From the NSX Manager UI

- Navigate: System -> Fabric -> Hosts

- Click the “Transport Node Profile” tab

- Edit the Transport Node Profile

- Click the number under “Host Switch”. In this example I have one host switch.

- Edit the Host Switch where you want to enable the NSX overlay traffic.

In this example, there is only one host switch. In the event you have multiple switches, make sure you edit the host switch with the uplinks that you want to configure as TEPs.

- Click under ‘Transport Zones’ to add your Overlay TZ (i.e. “nsx-overlay-transportzone”)

- Under ‘IPv4 Assignment’ select ‘Use IP Pool’

- Under ‘IPv4 Pool’ select ‘ESXi Host TEP Pool’

- Click ADD

- Click APPLY

- Click SAVE

When you save the transport node profile, NSX automatically invokes a workflow to configure the TEPs on the ESXi hosts.

To monitor the host TEP configuration:

From the NSX Manager UI

- Navigate: System -> Fabric -> Hosts

- Click the Clusters Tab

- Expand the Cluster

Wait for the host TEP configuration to complete and the status for all hosts to return to “Success”. This can take a few minutes to complete.

Step 7: Verify TEPs on Each ESXi Host

Next, verify the host TEPs from the vSphere Client. Each ESXi host in the cluster should now show three additional vmkernel adapters – vmk10, vmk11, and vmk50. (Note that if you have more than two NICs on your hosts you will see additional vmkernel adapters). For each host, verify that the IP addresses assigned to the vmk10 and vmk11 vmkernel adapters are within the IP Pool range assigned in NSX. Note that vmk50 is used internally and assigned an internal IP address of 169.254.1.

Procedure:

From the vSphere Client

- Navigate: Hosts and Clusters -> Expand vCenter inventory

For each host in the cluster:

- Select the ESXi host

- Navigate: Configure -> Networking -> VMkernel adapters

- Verify you see adapters: vmk10, vmk11, and vmk50

- Verify the IPs for vmk10 and vmk11 are valid TEP IPs assigned from the IP Pool in NSX.
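With more than a handful of hosts, checking each address by eye gets tedious. The sketch below flags any TEP address that falls outside the pool range. The host names and addresses in `observed` are hypothetical values; you would substitute whatever you read from the vSphere Client.

```python
import ipaddress


def teps_outside_pool(host_teps, start, end):
    """Return {host: [ip, ...]} for TEP addresses not in the inclusive range."""
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    problems = {}
    for host, ips in host_teps.items():
        bad = [ip for ip in ips
               if not first <= ipaddress.ip_address(ip) <= last]
        if bad:
            problems[host] = bad
    return problems


# Hypothetical vmk10/vmk11 addresses per host.
observed = {
    "esxi-01": ["172.16.254.151", "172.16.254.152"],
    "esxi-02": ["172.16.254.153", "172.16.254.99"],
}

print(teps_outside_pool(observed, "172.16.254.151", "172.16.254.200"))
# {'esxi-02': ['172.16.254.99']}
```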

Step 8: Verify Host Tunnels in NSX

At this point I have updated the NSX configuration to enable virtual networking by creating an IP pool, updating the default overlay Transport Zone (TZ), and configuring host Tunnel Endpoints (TEPs) on my ESXi hosts. The final step is to create a virtual network and attach some VMs to confirm everything is working correctly.

Begin by creating a virtual network (aka virtual segment) in NSX.

From the NSX Manager UI

- Navigate: Networking -> Segments

- Click ADD SEGMENT

- Name: vnet01

- Transport Zone: nsx-overlay-transportzone

- Subnet: 10.80.0.1/24

- Click SAVE

- Click NO (when prompted to continue configuring).
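Note that the Subnet field takes the gateway address in CIDR form, not the network address. The sketch below shows how 10.80.0.1/24 breaks down, and builds a segment body of the kind I would expect the NSX Policy API to accept. The field names `transport_zone_path` and `gateway_address` are my understanding of that API and should be checked against the API reference. The transport zone path is passed in by the caller, and the one in the example contains a placeholder rather than a real ID.

```python
import ipaddress


def segment_body(name, transport_zone_path, gateway_cidr):
    """Build a segment body after checking the gateway is a usable host address."""
    iface = ipaddress.ip_interface(gateway_cidr)
    if iface.ip in (iface.network.network_address,
                    iface.network.broadcast_address):
        raise ValueError(f"{gateway_cidr} is not a usable gateway address")
    return {
        "display_name": name,
        "transport_zone_path": transport_zone_path,
        "subnets": [{"gateway_address": gateway_cidr}],
    }


gateway = ipaddress.ip_interface("10.80.0.1/24")
print(gateway.ip)       # 10.80.0.1  (the segment's gateway)
print(gateway.network)  # 10.80.0.0/24  (the network VMs will live on)

body = segment_body(
    "vnet01",
    # Placeholder path: replace <transport-zone-id> with your TZ's ID.
    "/infra/sites/default/enforcement-points/default/"
    "transport-zones/<transport-zone-id>",
    "10.80.0.1/24",
)
print(body["subnets"])  # [{'gateway_address': '10.80.0.1/24'}]
```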

Note that when the virtual network is created in NSX, it is automatically exposed through the VDS on the vSphere cluster.

Next, return to the vSphere Client and create two test VMs. Note that an operating system for the test VMs is not needed to confirm the configuration of the TEP tunnels.

From the vSphere Client

- Navigate: Hosts and Clusters, expand the vCenter inventory

- Click Actions -> New Virtual Machine

- Select “Create a new virtual machine”

- Click NEXT

- Enter Virtual machine name: “testvm01”

- Click NEXT

- At the Select a compute resource screen, select the cluster and verify the compatibility checks succeed

- Click NEXT

- At the Select Storage screen, select the datastore and verify compatibility checks succeed

- Click NEXT

- At the Select compatibility screen, select a valid version (e.g. ESXi 8.0U2 and later)

- Click NEXT

- Set Guest OS to your preference

- Click NEXT

- At the customize hardware screen, set the network to vnet01

- Click NEXT

- Click FINISH

Create a clone of the ‘testvm01’ VM named ‘testvm02’. Verify ‘testvm02’ is also attached to the virtual network ‘vnet01’. Power both VMs on and make sure they are running on separate hosts. Use vMotion to migrate one of them if necessary.

Return to the NSX Manager UI and confirm that the tunnels were successfully created.

From the NSX Manager UI

- Navigate: System -> Fabric -> Hosts

- Click to expand the cluster and view all hosts.

Verify that you see tunnels created and that the tunnel status is “Up” on the ESXi hosts where the ‘testvm01’ and ‘testvm02’ VMs are running. You may need to click refresh a few times, as it can take two to three minutes for the NSX UI to update the tunnel status.
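If you collect tunnel information outside the UI, a small helper can summarize it. The sketch below assumes each tunnel is a dictionary with `remote_ip` and `status` keys, which is my assumption about the shape of the data rather than a documented contract. The sample data is hypothetical.

```python
def tunnel_problems(tunnels_by_host):
    """Return {host: [remote_ip, ...]} for tunnels whose status is not UP.

    Hosts with no tunnels at all are reported with an empty list.
    """
    problems = {}
    for host, tunnels in tunnels_by_host.items():
        down = [t["remote_ip"] for t in tunnels
                if t.get("status", "").upper() != "UP"]
        if down or not tunnels:
            problems[host] = down
    return problems


# Hypothetical tunnel data for the two hosts running the test VMs.
sample = {
    "esxi-01": [{"remote_ip": "172.16.254.153", "status": "UP"}],
    "esxi-02": [{"remote_ip": "172.16.254.151", "status": "DOWN"}],
    "esxi-03": [],
}

print(tunnel_problems(sample))
# {'esxi-02': ['172.16.254.151'], 'esxi-03': []}
```

A host with no tunnels is not necessarily a fault. Tunnels are only established between hosts that have workloads attached to the same overlay segment, which is why the two test VMs need to run on separate hosts.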

Summary

In this post I showed how to update the NSX configuration of a converted or imported workload domain to enable virtual networking. The procedure involves creating an IP Pool for the Host TEP IP addresses, manually configuring an overlay Transport Zone (TZ), and updating the host transport node profile. Once completed you are able to enable virtual networking and logical switching in your converted/imported workload domains.
