When VMware Private AI Foundation with NVIDIA was announced at VMware Explore 2023 in Las Vegas, Broadcom and NVIDIA set out a vision of an enterprise-grade, robust generative AI solution focused on privacy, choice, flexibility, performance, and security for our enterprise customers.

Today, Broadcom is happy to announce new capabilities coming to the joint platform with the VCF 5.2.1 release later this year, along with capabilities planned for future Broadcom releases and support for new NVIDIA capabilities.

VMware Private AI Foundation with NVIDIA

With the general availability of VMware Private AI Foundation with NVIDIA in May 2024, Broadcom, in collaboration with NVIDIA, delivered a private and secure generative AI platform for enterprises that can unlock Gen AI and unleash productivity.

Built and run on the industry-leading private cloud platform, VMware Cloud Foundation (VCF), VMware Private AI Foundation with NVIDIA comprises NVIDIA NIM microservices, which support AI models from NVIDIA and others in the community, and NVIDIA AI tools and frameworks — all included with licenses for the NVIDIA AI Enterprise software platform.

Since its general availability, VMware Private AI Foundation with NVIDIA has gained tremendous momentum. It is now being deployed by several customers, such as the US Senate Federal Credit Union, and by technology providers.

VMware Private AI Foundation with NVIDIA is an advanced service available on top of VCF. NVIDIA AI Enterprise software licenses must be purchased separately.

Let’s get into the details.

New capabilities to be released with VCF 5.2.1

Model Store

Currently, some enterprises lack proper governance measures for downloading and deploying LLMs. With the Model Store capability, MLOps teams and data scientists can curate and provide secure LLMs with integrated role-based access control (RBAC). This can help ensure the governance and security of the environment and the privacy of enterprise data and IP.

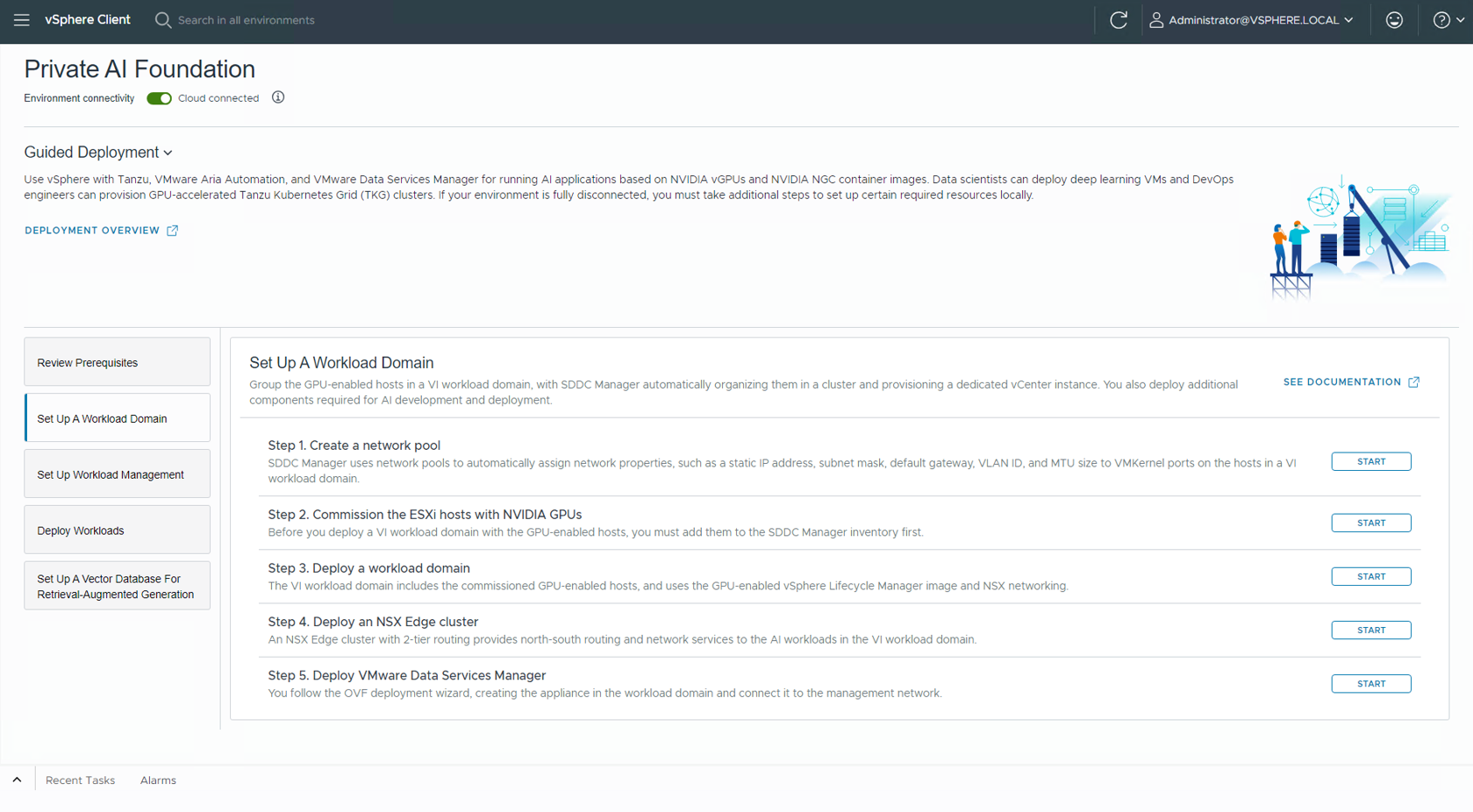

Guided Deployment

Deploying Gen AI on VCF requires creating a Workload Domain and deploying additional components, which can be time-consuming for administrators. This new capability will streamline the workload domain creation workflow and the deployment of the other components of VMware Private AI Foundation with NVIDIA. This can increase deployment speed, reduce admin time, and shorten time to market.

NVIDIA AI Enterprise Capabilities

NVIDIA NIM Agent Blueprints

NVIDIA NIM Agent Blueprints, announced today, are reference AI workflows for specific use cases that enable enterprises to build their own generative AI flywheels. NIM Agent Blueprints include all the AI tools developers need to build and deploy customized generative AI applications. The first NIM Agent Blueprints include a digital human workflow for customer service, a generative virtual screening workflow for drug discovery, and a multimodal PDF data extraction workflow for enterprise RAG.

NVIDIA NIM

NVIDIA NIM, part of NVIDIA AI Enterprise, is a set of easy-to-use microservices designed for secure, reliable deployment of high-performance AI model inferencing across public clouds, private clouds, and workstations.

These prebuilt containers support a broad spectrum of AI models, from open-source community models to NVIDIA AI Foundation models, as well as custom AI models. NIM microservices can be deployed with a single command and integrated into enterprise-grade AI applications using standard APIs and just a few lines of code. Built on robust foundations, including inference engines like NVIDIA Triton Inference Server, NVIDIA TensorRT, NVIDIA TensorRT-LLM, and PyTorch, NIM is engineered to facilitate seamless AI inferencing at scale, helping users deploy AI applications anywhere with confidence. NIM microservices for models including Llama, Mistral, Mixtral, NVIDIA NeMo Retriever, NVIDIA Riva, and more are generally available on build.nvidia.com and can be deployed on VMware Private AI Foundation with NVIDIA.
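To make the "standard APIs and just a few lines of code" point concrete, here is a minimal Python sketch of calling a NIM microservice for an LLM through an OpenAI-style chat completions endpoint. The base URL (`http://localhost:8000`), the model name, and the helper names `build_chat_request` and `ask` are assumptions for illustration only; check the documentation of the NIM you deploy for the actual endpoint and model identifier.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=128):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # assumed model identifier; depends on the deployed NIM
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, model, prompt):
    """Send the request and return the text of the first choice."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical local endpoint and model name; replace with your own.
    print(ask("http://localhost:8000", "meta/llama3-8b-instruct", "What is a vGPU?"))
```

Keeping request construction separate from the network call makes the payload easy to inspect or log before anything is sent to the inference endpoint.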

NVIDIA NIM Operator

NVIDIA NIM Operator makes it easy to deploy generative AI pipelines into production by automating the deployment, scaling, and management of RAG pipelines and AI inference using NVIDIA NIM microservices. NIM Operator delivers reduced inference latency and increased auto-scaling performance with intelligent model caching. NVIDIA NIM Operator will be available soon with NVIDIA AI Enterprise.

Capabilities planned for a future release

Broadcom also announced that exciting capabilities are being engineered for future releases of VMware Private AI Foundation with NVIDIA.

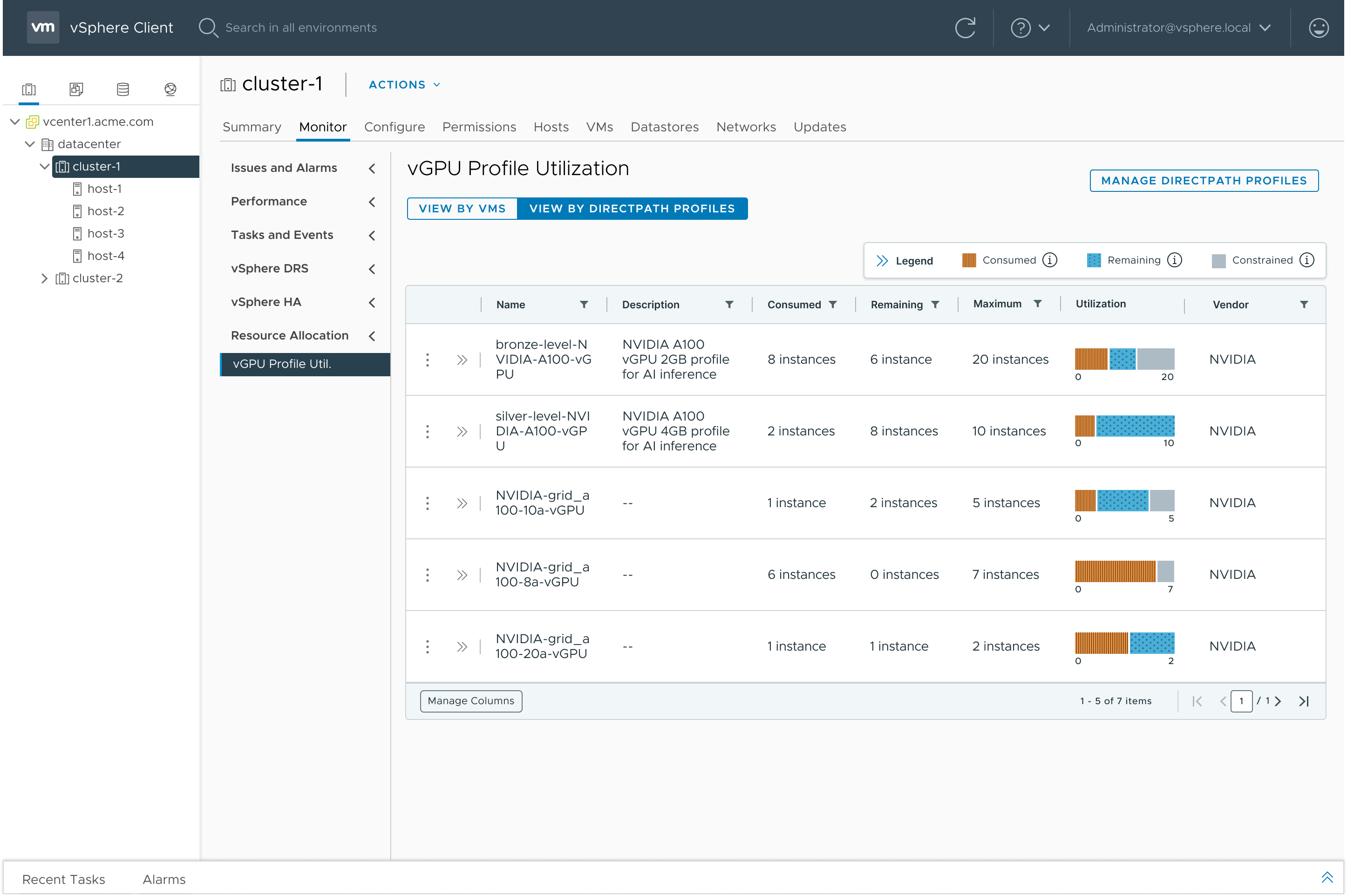

vGPU profile visibility

NVIDIA vGPU allows a physical GPU to be shared among multiple VMs and helps maximize GPU utilization. Enterprises with a significant GPU footprint often create many vGPUs over time. However, admins cannot easily view the vGPUs that have already been created, so they must manually track vGPUs and their allocation across GPUs. This manual process wastes admin time.

The new capability will allow administrators to view all vGPUs across their GPU footprint via an easy-to-use UI screen in vCenter and eliminate the manual tracking of the vGPUs, significantly reducing admin time.

GPU reservations

Today, admins cannot reserve space for vGPUs for future use. This prevents them from allocating NVIDIA GPU space for Gen AI applications in advance, potentially causing performance issues when these applications are deployed.

With the launch of this new capability, admins will be able to reserve resource pools for vGPU profiles ahead of time for better capacity planning, improving both performance and operational efficiency.

Data Indexing & Retrieval Service

Data indexing, retrieval, and vectorization are complex tasks, and many customers lack the expertise to handle them without help. This can lead to issues with the quality of Gen AI output and high data preparation costs. The cost and security ramifications of putting proprietary data into the public cloud, where such data preparation services exist, also inhibit Gen AI adoption.

The Data Indexing & Retrieval Service will allow enterprises to chunk and index private data sources (e.g., PDFs, CSVs, PPTs, Microsoft Office docs, internal web or wiki pages) and vectorize the data. This vectorized data will be made available through knowledge bases. As data changes, data scientists and MLOps teams can update the knowledge bases on a schedule or on demand, ensuring that Gen AI applications can access the latest data.

This capability will reduce deployment time, simplify the data preparation process, and improve Gen AI output quality for data scientists and MLOps teams.
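For readers new to the chunk, index, and vectorize steps described above, the following Python sketch illustrates the general idea on plain text. It is not how the Data Indexing & Retrieval Service is implemented: the word-count "vectors" are a toy stand-in for a real embedding model, and every function name here is hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into word-based chunks that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def vectorize(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents, chunk_size=40, overlap=10):
    """Return (source, chunk, vector) entries: a minimal in-memory knowledge base."""
    return [
        (source, chunk, vectorize(chunk))
        for source, text in documents.items()
        for chunk in chunk_text(text, chunk_size, overlap)
    ]


def search(index, query, top_k=1):
    """Return the top_k (source, chunk) pairs most similar to the query."""
    query_vector = vectorize(query)
    ranked = sorted(index, key=lambda entry: cosine(query_vector, entry[2]), reverse=True)
    return [(source, chunk) for source, chunk, _ in ranked[:top_k]]
```

Refreshing a knowledge base "on a schedule or on demand" corresponds, in this toy version, to calling `build_index` again whenever the source documents change.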

AI Agent Builder Service

AI agents are increasingly integrated into generative AI applications, enhancing their capabilities and enabling a wide range of creative and functional tasks. LLMs must be customized with enterprises’ private data to make these use cases real, a complex task requiring special expertise.

This new capability will help application developers, MLOps engineers, and data scientists build and deploy AI agents by utilizing LLMs from the Model Store and data from the Data Indexing & Retrieval Service.

Ecosystem expansion

Broadcom is excited to add new ISVs (Independent Software Vendors) and SIs (System Integrators) to the VMware Private AI Foundation with NVIDIA ecosystem. These partners can help deploy specialized AI applications that support developers with code generation, and can help customers deploy Gen AI applications quickly.

Codeium

Codeium is a leading private AI code assist solution that millions of developers use today. It provides a powerful AI coding assistant that helps developers with code generation, debugging, testing, modernization, and more.

HCLTech

HCLTech provides a private Gen AI offering designed to help enterprises accelerate their Gen AI journey through a structured approach. Paired with a customized pricing model and HCLTech’s data and AI services, this turnkey solution enables customers to move from Gen AI POC to production more quickly, with a clearly defined TCO.

Tabnine

Tabnine’s personalized AI tools offer enterprises a seamless and integrated development experience, enabling them to build and deploy applications faster and more efficiently with VMware. Deploying Tabnine with VMware Private AI Foundation with NVIDIA means customers can get the full benefit of AI code assistants without sacrificing privacy or control.

WWT

WWT is a leading technology solution provider and VMware’s preferred partner for full-stack AI solutions. WWT has developed and supported AI applications for over 75 enterprises and works with VMware to empower clients to quickly realize value from VMware Private AI Foundation with NVIDIA, from deploying and operating infrastructure to AI applications and other services.

Ready to get started on your AI and ML journey? Check out these helpful resources:

- Complete this form to contact us!

- Read the VMware Private AI Foundation with NVIDIA solution brief.

- Learn more about VMware Private AI Foundation with NVIDIA.

- Experience NVIDIA NIM at build.nvidia.com.

- Explore NVIDIA AI Enterprise with a free 90-day license.

Connect with us on Twitter at @VMwareVCF and on LinkedIn at VMware VCF.
