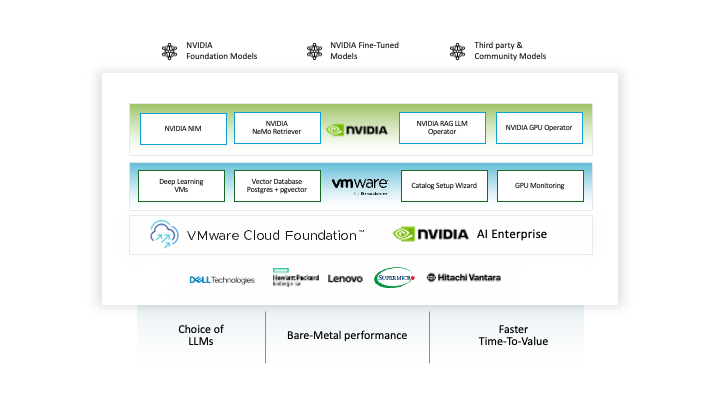

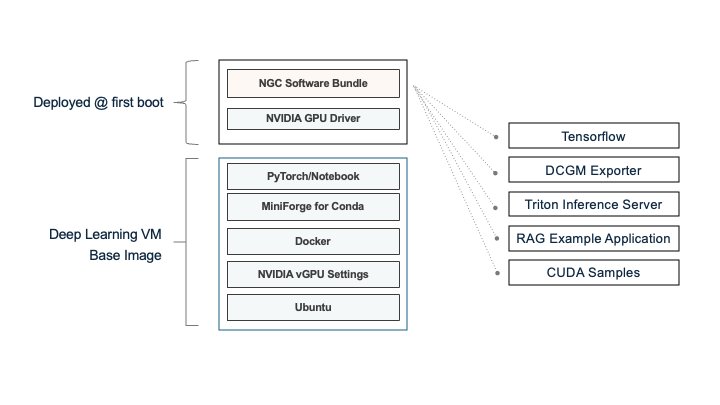

A key part of VMware Private AI Foundation with NVIDIA is the idea of a “deep learning VM” (DLVM), as shown in the blue layer of the architecture outline below. A deep learning VM is a VM image that VMware packages and distributes with an operating system and a set of tools, as a foundation for doing data science work.

Users of VMware Private AI Foundation with NVIDIA, such as systems administrators, platform engineers or DevOps folks, can download the VM image from a VMware site and place it into a vSphere Content Library, where it can be used to clone new VMs. The Content Library is a standard repository for VM images and operating systems that is widely used in VMware Cloud Foundation (VCF).

Before VMware Cloud Foundation 5.2, the base DLVM that VMware provides with VMware Private AI Foundation with NVIDIA contained the Ubuntu operating system, the Docker runtime engine and the NVIDIA Container Toolkit. It was set up so that the NVIDIA vGPU driver could be installed at first boot.

Now with VCF 5.2, we see added toolkits in the base DLVM image, such as Conda and PyTorch for use in Jupyter Notebooks by the data scientist.

A DLVM image is typically cloned from the Content Library where it is stored to become a running VM. The VCF Automation tool does this as part of a data science user’s request, so that user does not have to worry about the infrastructure details that support a VM, or a group of VMs that make up a Kubernetes cluster. Users can also clone the new VM by hand if they want to, but the VCF Automation tool makes that a much simpler process. Once the new VM starts up, one or more of the software packages shown on the right side above are downloaded from the NVIDIA GPU Cloud (NGC) site, or from a local container repository, and installed into the VM’s guest operating system. The choice of which packages are needed is up to the user who is requesting the new DLVM.

This new DLVM image supports the following use cases:

- In web-connected environments, users such as data scientists can use the Docker CLI to pull and run the containers that suit their work.

- In connected environments, data scientists can use Miniforge for Conda to install the packages they need.

- In both cases above, given a local package/container repository, the DLVM can also be used in air-gapped environments. That local repository must previously have been loaded with the vetted packages and container images.
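The difference between the connected and air-gapped cases comes down to which registry an image is pulled from. The following is a minimal sketch of that idea, not VMware tooling: the helper names `split_registry` and `pull_command`, the local registry address `registry.example.internal:5000` and the tag `TAG` are all hypothetical placeholders, and the sketch assumes the local repository mirrors images under the same repository path as the upstream one.

```python
def split_registry(image):
    """Split an image reference into (registry_host, remainder).

    The first path component is treated as a registry host only when it
    looks like one: it contains '.' or ':' or is exactly 'localhost'.
    """
    first, sep, rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest
    return "", image


def pull_command(image, local_registry=None):
    """Build the argument list for a `docker pull` of the given image.

    With local_registry set (air-gapped case, hypothetical address), the
    upstream registry host is swapped for the local one. Without it
    (connected case), the image reference is used unchanged.
    """
    if local_registry:
        _, remainder = split_registry(image)
        image = f"{local_registry}/{remainder}"
    return ["docker", "pull", image]


if __name__ == "__main__":
    # 'TAG' is a placeholder, not a real image tag.
    upstream = "nvcr.io/nvidia/pytorch:TAG"
    print(" ".join(pull_command(upstream)))
    print(" ".join(pull_command(upstream, "registry.example.internal:5000")))
```

The function only builds the command; it could be passed to `subprocess.run` on a DLVM where the Docker CLI is present. Keeping the repository path identical in the local mirror means the only thing a user has to change between environments is the registry host.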

Jupyter Notebook with PyTorch on a DLVM

As part of its initialization, the new DLVM running on VCF 5.2 uses cloud-init to install Pip, Python, JupyterLab, PyTorch, and a GPU programming platform (such as the CUDA libraries). This collection of tools gives data scientists and application developers an out-of-the-box JupyterLab environment with PyTorch and GPU support, so they can get started on model testing, fine-tuning and augmentation right away, without being too concerned about the virtual infrastructure that supports their DLVM. This removes a significant obstacle for end users and data scientists who want to get going quickly on data science work.
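A quick way to confirm that the environment is ready is to run a small check in the first notebook cell. The sketch below is one possible check, assuming only that PyTorch may or may not be importable in the current kernel; the function name `gpu_report` is hypothetical, and the check falls back gracefully when PyTorch or a GPU is absent.

```python
import importlib.util


def gpu_report():
    """Summarize whether PyTorch is installed and can see a CUDA GPU."""
    report = {"torch_installed": False, "cuda_available": False, "devices": []}

    # Avoid an ImportError on machines where PyTorch is not installed.
    if importlib.util.find_spec("torch") is None:
        return report

    import torch

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__

    if torch.cuda.is_available():
        report["cuda_available"] = True
        # List the name of each GPU that PyTorch can see.
        report["devices"] = [
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ]
    return report


if __name__ == "__main__":
    print(gpu_report())
```

If `cuda_available` comes back as `False` on a DLVM that should have a GPU, the vGPU driver installation at first boot is a reasonable first place to look.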
