Artificial Intelligence (AI) is rapidly transforming industries, and Generative AI (Gen AI) is pushing the boundaries of what’s possible, creating new content and redefining value creation. However, enterprises face significant challenges in AI adoption, especially concerning privacy, data security, and the need for adaptable infrastructure. This is why Broadcom announced VMware Private AI.

Announcement of Our Collaboration

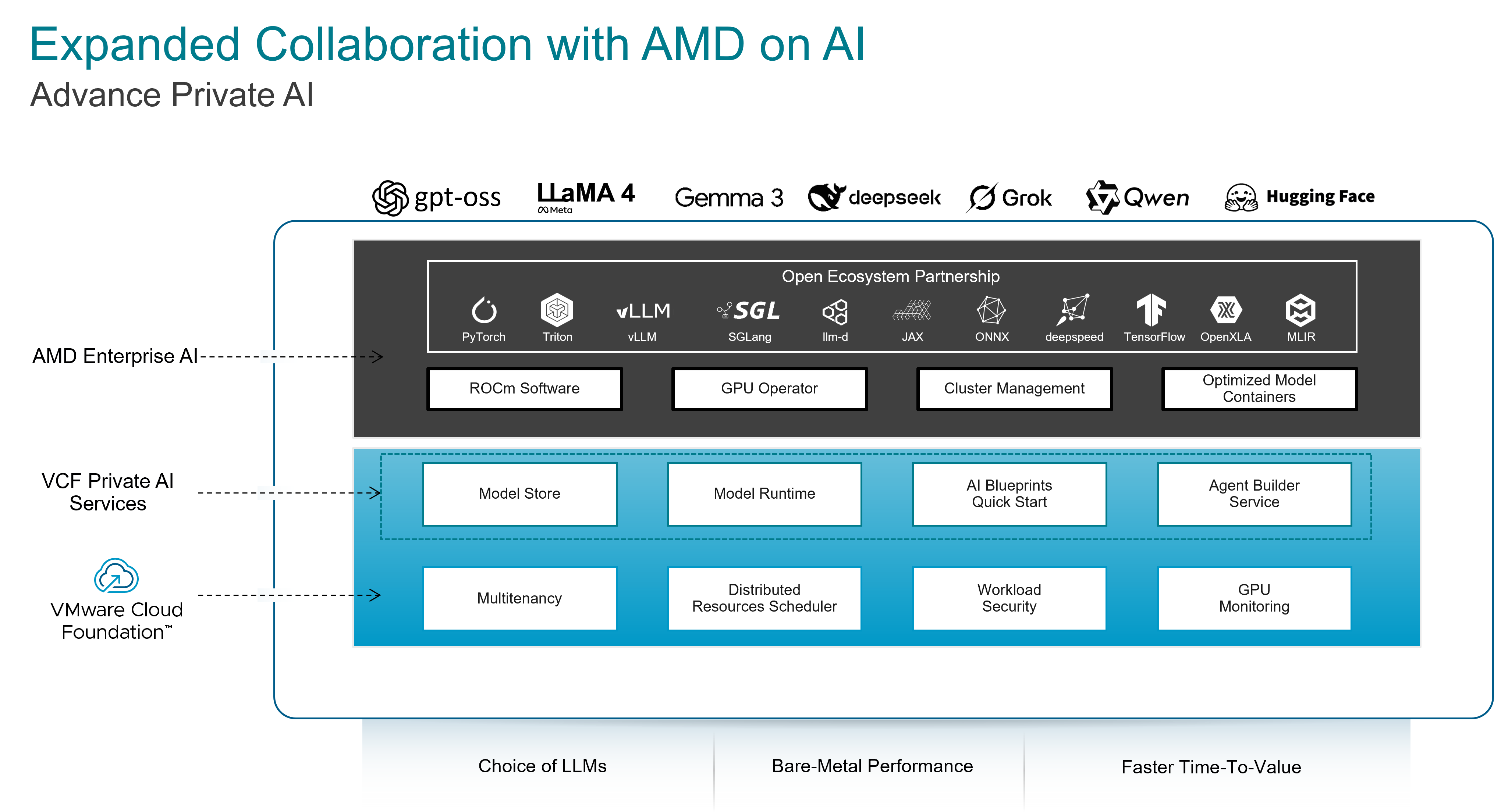

Today Broadcom and AMD are announcing an expanded collaboration to advance AI for enterprises with private, secure, and high-performance AI infrastructure. In the future, we will release a joint platform that brings VMware Cloud Foundation (VCF) together with AMD Enterprise AI software and AMD Instinct™ GPUs. Together, these will help keep infrastructure private and secure, simplify infrastructure management, and streamline AI model deployment.

We will also enable Broadcom’s Enhanced DirectPath I/O driver model with AMD GPUs, so that AI workloads can take advantage of VCF’s unique virtualization capabilities on a scalable and efficient platform.

Addressing Key Enterprise AI Challenges

Our expanded efforts directly tackle the core issues enterprises encounter with AI deployments:

- Privacy & Security: Training AI models on public platforms risks exposing sensitive, confidential data. Our solution will strengthen protection of intellectual property and confidential data and tighten access control.

- Choice & Flexibility: Enterprises need the freedom to choose the LLM and the ecosystem that best fits their specific use cases.

- Cost: AI models are complex and costly to architect. Our platform will help streamline infrastructure management and optimize costs through built-in software capabilities, hardware integrations, and optimizations.

- Performance & Scalability: AI and Gen AI demand significant infrastructure resources. Fine-tuning, customizing, deploying, and querying models can be resource-intensive, and scaling up is challenging without adequate capacity. Efficiently allocating and balancing specialized hardware, such as GPUs, across the organization is critical to achieving low latency and fast response times. The combination of VCF and AMD Instinct™ MI350 Series GPUs provides the scalable compute, high-bandwidth networking, and optimized storage needed to deliver this, even for the most demanding workloads.

- Compliance: Organizations in different industries and countries have different compliance and legal requirements that enterprise solutions, including AI and Gen AI, must meet. Access control, workload placement, and audit readiness are vital when deploying generative models. At release, this platform will help enterprises meet the compliance requirements for their AI models.

Solution Architecture

The core of this expanded collaboration is a robust enterprise AI architecture that we will release in the future. Let’s review its components:

- VMware Cloud Foundation (VCF) – VMware Cloud Foundation is the industry’s first private cloud platform that delivers public cloud scale and agility; private cloud security, resilience, and performance; and low total cost of ownership for both AI and non-AI workloads. The versatility of this architecture enables cloud admins to use different workload domains, each of which can be customized to support specific workload types, optimizing workload performance and resource utilization, particularly of GPUs.

- VCF Private AI Services – VCF Private AI Services will be provided to enterprises as part of their VCF subscription. These services will provide capabilities such as vector databases, deep learning VMs, a Data Indexing and Retrieval Service, an Agent Builder Service, and more, helping enterprises keep AI private and secure, simplify infrastructure management, and streamline model deployment.

- AMD Enterprise AI Software – An open software stack, including AMD ROCm™ software, programming models, tools, compilers, libraries, and runtimes, for AI solutions developed on AMD Instinct GPUs.

- AMD Instinct GPUs: AMD Instinct GPUs deliver high performance on workloads using models such as Llama 3.1 405B, Llama 3.1 70B, Mistral 7B, DeepSeek-V2-Lite, and more. Dedicated containers are available for PyTorch, JAX, TensorFlow, vLLM, and SGLang to accelerate inference, fine-tuning, and training workloads. Built on the new 4th Gen AMD CDNA™ architecture, MI350 Series GPUs will deliver exceptional efficiency and performance for training massive AI models, high-speed inference, and complex HPC workloads such as scientific simulations, data processing, and computational modeling.

Note: The Supermicro Computer Inc. AS-8125GS-TNMR2 server with MI300 GPUs is now supported in DirectPath I/O mode with VCF.

Capability Details

Let’s look at some of the capabilities this expanded collaboration will enable for customers in the future.

1. Enable Privacy & Security of AI Models

This collaboration will keep corporate data private and under enterprise control, provide integrated security and management, and help enterprises build and deploy private, secure AI models. Let’s examine some of the capabilities we will offer in this category.

VCF Capabilities

- Multi-tenancy: With VCF’s multi-tenancy capability, cloud service providers and enterprise administrators will be able to create secure, private AI environments while achieving high efficiency and scalability.

- Workload security: VCF includes several built-in capabilities that enterprises can use to improve workload security. These include Secure Boot, Virtual TPM, vSphere Trust Authority, VM Encryption, and more.

- Identity and Access Management: VCF integrates with various identity and access management solutions, including VMware Identity Manager and third-party identity providers. This ensures that only authorized users and applications can access AI models and data sets.

- Network security: VCF helps protect applications and their associated AI models and data sets with micro-segmentation, full-stack networking and security, and advanced threat prevention at the network level via software-defined firewalls.
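Micro-segmentation is commonly expressed as a default-deny policy: traffic between workload groups is dropped unless an explicit rule allows it. The Python sketch below illustrates only that evaluation model. The group names, ports, and the `is_allowed` helper are hypothetical and do not represent the VCF firewall rule engine or its API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    """One explicit allow rule between two workload groups (hypothetical names)."""
    src_group: str
    dst_group: str
    port: int


# Hypothetical policy: only the RAG application tier may query the vector
# database, and only the API gateway may call the model-serving tier.
POLICY = frozenset({
    AllowRule("rag-app", "vector-db", 5432),
    AllowRule("api-gateway", "model-runtime", 8000),
})


def is_allowed(src_group: str, dst_group: str, port: int,
               policy: frozenset = POLICY) -> bool:
    """Default deny: a flow passes only if an allow rule matches it exactly."""
    return AllowRule(src_group, dst_group, port) in policy


# The RAG app can reach its vector database...
print(is_allowed("rag-app", "vector-db", 5432))
# ...but not the model servers directly, and other groups reach nothing.
print(is_allowed("rag-app", "model-runtime", 8000))
print(is_allowed("hr-app", "vector-db", 5432))
```

Keeping the policy as an allow-list means a newly deployed workload is isolated by default until someone grants it a path, which is the property that limits lateral movement toward models and data sets.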

VCF Private AI Services enabled capabilities

- Model Store: Enterprises often lack proper governance for downloading and deploying LLMs from sources such as Hugging Face or other third parties. This poses significant risks, because models containing malware could be introduced into the environment, knowingly or unknowingly. With the Model Store capability, backed by vSphere Harbor, MLOps teams and data scientists will be able to curate and provide secure LLMs with integrated role-based access control (RBAC). This will ensure governance and security for the environment and protect the privacy of enterprise data and IP.

- Air-Gapped Support: Air-gapping the most sensitive assets greatly reduces exposure to cyber risk, helps maintain compliance, and safeguards revenue and reputation. This solution will be deployable in air-gapped environments, meeting customers’ business needs with data confidentiality and isolation for their critical workloads.
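To illustrate the kind of governance check the Model Store bullet describes, here is a minimal Python sketch that combines role-based access control with a content-digest check on model artifacts. The roles, model name, and helper functions are hypothetical and are not the Model Store or Harbor API. The digest format mirrors the `sha256:` digests that OCI registries such as Harbor use to identify artifacts.

```python
import hashlib


def sha256_digest(blob: bytes) -> str:
    """Return an OCI-style content digest string for a blob."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


# Hypothetical role -> permission mapping for a curated model store.
ROLE_PERMISSIONS = {
    "mlops-admin": {"model:publish", "model:pull"},
    "data-scientist": {"model:pull"},
}

# Models the MLOps team has vetted, pinned to the digest of the approved
# artifact (a placeholder blob stands in for real model weights here).
CURATED_MODELS = {
    "mistral-7b-instruct": sha256_digest(b"approved weights"),
}


def has_permission(user_roles: list[str], permission: str) -> bool:
    """True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def can_pull(user_roles: list[str], model: str) -> bool:
    """A user may pull only curated models, and only with the pull permission."""
    return model in CURATED_MODELS and has_permission(user_roles, "model:pull")


def verify_artifact(model: str, blob: bytes) -> bool:
    """Reject any downloaded artifact whose digest differs from the approved one."""
    expected = CURATED_MODELS.get(model)
    return expected is not None and sha256_digest(blob) == expected


print(can_pull(["data-scientist"], "mistral-7b-instruct"))          # True
print(can_pull(["data-scientist"], "unvetted-model"))               # False
print(verify_artifact("mistral-7b-instruct", b"tampered weights"))  # False
```

Pinning each curated model to a digest means that a swapped or tampered artifact fails verification even when the user is authorized to pull that model name.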

AMD Enabled Capabilities

AMD will enable several features to add privacy and security to our platform.

- Root-of-Trust Protocols – AMD Instinct MI350 Series GPUs will provide secure boot by verifying accelerator integrity using an accelerator Root of Trust. Additionally, AMD will provide a platform-level Root of Trust built on trusted firmware and compliant with DMTF’s Security Protocol and Data Model (SPDM) specification.

- Encrypted GPU Links – Infinity Fabric™ security features will help protect high-speed GPU-to-GPU communications using AES-128 encryption, securing model traffic during distributed training and inference.

- Data Integrity Protections – ECC on HBM3E and caches, along with advanced RAS (reliability, availability, and serviceability) features, will enhance system reliability and help protect model parameters against data corruption.

- Open, Inspectable AMD ROCm™ Software – As a fully open-source platform, the ROCm software stack will allow community inspection and improvement, enabling fast vulnerability detection and ongoing protection for the GPU software ecosystem.

2. Simplify Infrastructure Management

Architecting AI models is challenging and expensive due to the rapid evolution of vendors, SaaS components, and cutting-edge AI software. However, Broadcom and AMD will offer specialized capabilities to streamline infrastructure management for AI environments and reduce costs. Our extensive joint expertise and strong industry partnerships will ensure a simplified deployment and management experience for enterprises. Let’s examine some of the capabilities we will offer in more detail.

VCF and VCF Private AI Services enabled capabilities

- Distributed Resource Scheduler (DRS): This industry-leading capability helps optimize cost and workload performance by placing workloads on the most suitable hosts. ESXi hosts are grouped into resource clusters to segregate the computing needs of different business units.

- Vector Databases for Enabling RAG Workflows: Vector databases have become central to Retrieval-Augmented Generation (RAG) workflows. Broadcom will enable vector databases for fast data querying, better accuracy, and real-time updates, enhancing LLM outputs without retraining the LLMs, which can be very costly and time-consuming.

- AI Blueprints Quick Start: Provisioning infrastructure for AI projects involves complex steps, including selecting and deploying the right VM classes, GPU-based Kubernetes (K8s) clusters, and AI/ML software such as containers. Line-of-business (LOB) admins carry out these specialized tasks, and in many enterprises, data scientists and DevOps engineers spend a lot of time assembling the infrastructure they need for AI/ML model development and production. This capability will address these challenges by enabling the creation of AI Blueprints, which will allow LOB admins on Day 0 to quickly design, curate, and offer infrastructure catalog objects through VCF’s self-service portal (formerly VCF Automation), greatly simplifying the deployment of AI workloads.
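The retrieval step that a vector database performs in a RAG workflow can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: `embed` uses word counts as a stand-in for a real embedding model, the in-memory `ToyVectorIndex` class is hypothetical rather than a product API, and the sample policy text is invented. It shows why new data becomes searchable without retraining the LLM: text is chunked, embedded, and ranked by cosine similarity against the query, and the best-matching chunks are what an application would pass to the model as context.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: term counts. A real deployment would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorIndex:
    """In-memory stand-in for a vector database: add chunks, query top-k by cosine."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        # New data is searchable as soon as it is added; the LLM is untouched.
        self._rows.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


policy = ("Employees may work remotely up to three days per week. "
          "Expense reports are due within thirty days of travel.")
index = ToyVectorIndex()
for chunk in chunk_words(policy, size=8, overlap=2):
    index.add(chunk)
print(index.search("when are expense reports due", k=1))
```

The overlap between chunks keeps a sentence that straddles a chunk boundary retrievable from at least one chunk; production systems typically chunk by tokens or document structure rather than raw words.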

AMD Enabled Capabilities

- Leading-Class HBM3E Memory for Bigger Models, Fewer GPUs – AMD Instinct MI350 Series GPUs deliver 288 GB of HBM3E memory with up to 8 TB/s of bandwidth, enabling data centers to run larger AI models on fewer GPUs and shrink their infrastructure footprint.

- Integration Advantage with High-Performance 5th Gen AMD EPYC CPUs – When deployed alongside 5th Gen AMD EPYC™ processors, AMD Instinct MI350 Series GPUs form a unified, high-throughput AI compute platform tailored for enterprise-scale workloads.

- Plug-and-Play Upgrade Path – MI350 Series GPUs are built for drop-in compatibility with existing MI300 platforms, enabling customers to scale up performance quickly without costly re-architecture or disruptive forklift upgrades.
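The "bigger models, fewer GPUs" point comes down to simple arithmetic on weight memory. The rough Python sketch below assumes 2 bytes per parameter for FP16/BF16 weights and 1 byte for FP8, and counts weights only; the KV cache, activations, and runtime overhead add more in practice. The helper names are hypothetical.

```python
import math


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes); weights only."""
    # (params_billion * 1e9 parameters) * bytes_per_param / 1e9 bytes per GB
    return params_billion * bytes_per_param


def min_gpus_for_weights(params_billion: float, bytes_per_param: float,
                         hbm_gb_per_gpu: float = 288.0) -> int:
    """Lower bound on the GPU count needed just to hold the model weights."""
    return math.ceil(weight_memory_gb(params_billion, bytes_per_param) / hbm_gb_per_gpu)


# Llama 3.1 405B in FP16/BF16 needs about 810 GB for its weights.
print(min_gpus_for_weights(405, 2))         # 3 GPUs at 288 GB each
print(min_gpus_for_weights(405, 2, 192.0))  # 5 GPUs at 192 GB each
print(min_gpus_for_weights(405, 1))         # 2 GPUs with FP8 weights
print(min_gpus_for_weights(70, 2))          # 1 GPU for Llama 3.1 70B
```

The 192 GB comparison corresponds to an MI300X-class GPU. Real deployments also need headroom for the KV cache and often shard models across a fixed number of GPUs, so treat these figures as lower bounds rather than sizing guidance.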

3. Streamline Model Deployment

Broadcom and AMD’s expanded collaboration on AI will deliver a comprehensive suite of capabilities to streamline AI model deployment and optimization. Key capabilities will include the following:

VCF and VCF Private AI Services enabled capabilities

- Data Indexing and Retrieval Service: This service will allow enterprises to chunk and index private data sources (e.g., PDFs, CSVs, PPTs, Microsoft Office docs, internal web or wiki pages) and vectorize the data. This vectorized data will be made available through knowledge bases that can be updated on a schedule or on demand as needed, ensuring that Gen AI applications can access the latest data. This capability will reduce deployment time, simplify data preparation, and improve Gen AI output quality for data scientists.

- API Gateway: Organizations integrating LLMs face challenges such as security risks, frequent API changes, scalability issues, and inconsistent provider APIs. The API Gateway service will address these challenges by enforcing secure authentication. With built-in compatibility with the OpenAI API, the API standard many developers prefer when building applications, it will simplify standardization across applications. The gateway will also abstract underlying model changes, providing a consistent API and operational flexibility.

- Model Runtime: The Model Runtime service will enable data scientists to create and manage model endpoints for their applications. These endpoints will abstract away the complexity of creating model instances. Users or systems that need to make predictions will not need to know how the model works internally; they simply need to send the correct input to the endpoint and receive the output. Model endpoints enable scalable deployment. Instead of running the model locally for each request (which can be resource-intensive), it can be deployed on a server that can handle multiple requests concurrently.

- Agent Builder Service: AI agents are increasingly being integrated into generative AI applications, enhancing their capabilities and enabling a wide range of creative and functional tasks. The Agent Builder Service will allow Gen AI application developers to build AI agents using resources from the Model Store, Model Runtime, and Data Indexing and Retrieval Service.

AMD Enabled Capabilities

- Out-of-the-Box Model Availability – Pre-optimized support for today’s most popular LLMs, vision models, and generative AI workloads means developers can begin deploying high-value models immediately on AMD Instinct™ MI350 Series GPUs.

- Deep Ecosystem Collaborations – Close work with leading AI ecosystem partners, including Hugging Face, PyTorch, and others, ensures seamless integration with widely adopted frameworks, enabling teams to train, fine-tune, and serve models faster.

- Broad Framework Support – AMD ROCm™ software enables full-stack acceleration across PyTorch, TensorFlow, JAX, and Triton, giving developers the flexibility to use their preferred tools.

- On-Premises Scalability – AMD Instinct™ MI350 Series GPUs are designed for rack-scale deployments, allowing enterprises to start small and scale up seamlessly as model demand grows, whether training in-house or deploying inference at scale.

Unlocking New Use Cases

This collaboration will empower enterprises to securely customize, fine-tune, and deploy large language models within their private corporate environment, enabling critical use cases such as:

- GPU-as-a-Service: Accelerate Gen AI adoption with scalable, on-demand GPU infrastructure for business units across the organization. GPU-as-a-Service will enable on-demand provisioning of capabilities such as Jupyter Notebooks, models-as-a-service, and more, allowing organizations to accelerate data science and Gen AI workflows on high-performance virtual infrastructure. Business units can innovate independently while IT maintains governance and cost control.

- Contact Center Resolution Experience: Elevate customer satisfaction and the quality of the content and feedback that contact centers provide to customers. Using chatbots built with this solution, contact centers will be able to deliver higher-quality, faster, and more personalized support.

- Agentic AI: Enable automated, real-time actions and faster results with agentic AI. This will let organizations use AI-driven insights for operations such as predictive maintenance, automation, and customer personalization while maintaining full control over their data and infrastructure.

- Document Search and Summarization: Amplify employee productivity by improving document, policy, and procedure research with retrieval-augmented generation (RAG). This will enable enterprises to deliver intelligent RAG-based support chatbots for their business units and customers that provide significantly more accurate and relevant responses.

- Private & Secure Content Creation: Unlock secure, scalable content creation with Gen AI using private data. This will support a range of applications, including creative writing, marketing campaigns, and product specifications. Using an enterprise’s private domain data helps give its marketing and sales materials a unique voice. This matters because enterprises don’t want AI to homogenize their communication styles and make them sound identical to their competitors.

Resources

- Learn more about this solution at VMware.com/AIML.

- Connect with us on Twitter at @VMwareVCF and on LinkedIn at VMware VCF.

Editorial Notes: The information in this news release is for informational purposes only and may not be incorporated into any contract. There is no commitment or obligation to deliver any items presented herein. VMware Cloud Foundation with VMware Private AI Services is purchased directly from Broadcom or authorized Broadcom Partners.

Discover more from VMware Cloud Foundation (VCF) Blog
