The introduction of global deduplication in vSAN for VMware Cloud Foundation (VCF) 9.0 ushers in a new era for space efficiency without compromise in vSAN. The use of sophisticated space efficiency techniques to store more data than what is physically possible using traditional ways of storing data helps you do more with what you already have.

But deduplication in vSAN for VCF 9.0 is more than just an often sought-after feature in the search for the ideal storage solution. The novel approach takes advantage of vSAN’s distributed architecture and improves its ability to deduplicate data as the cluster grows. It also pairs well with the VCF licensing model, which includes vSAN storage as a part of your license.

This makes VCF with vSAN global deduplication more space efficient, and much more affordable, than VCF using other storage solutions. When looking at the total cost of ownership (TCO) as described in more detail below, VCF with vSAN is up to 34% lower in cost than VCF with a competing storage offering in a 10,000 core environment. Our internal estimates suggest that in this same model, vSAN global deduplication alone can drive down your entire VCF cost by as much as 4%, or roughly 10 million dollars! Let’s look at how the characteristics of vSAN global deduplication can help drive down the costs of your virtual private cloud using VCF.

Measuring Efficiency

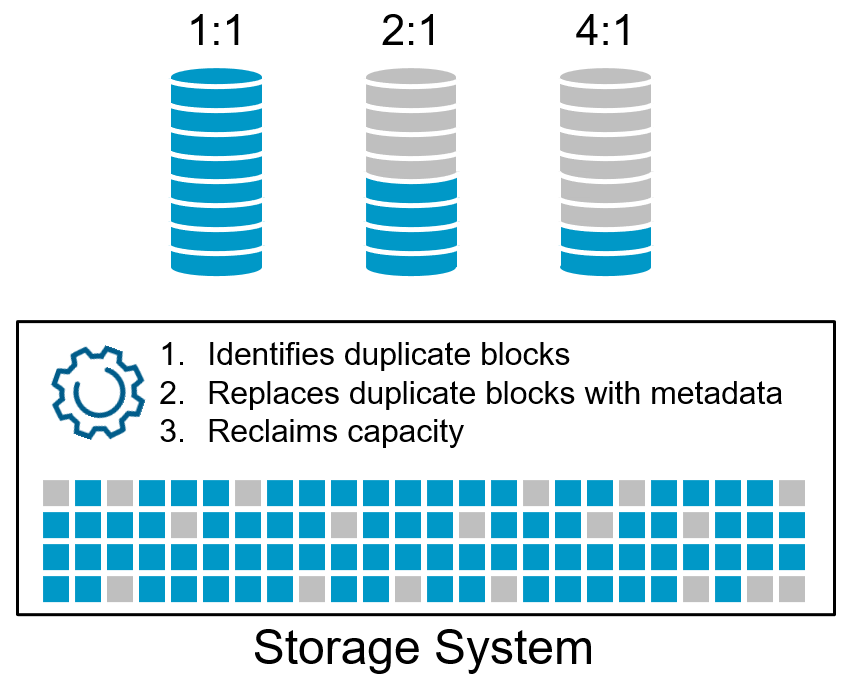

To properly understand the benefit of deduplication, we must have a way to measure its effectiveness. Deduplication effectiveness is typically expressed as a ratio, stating the size of the data before deduplication followed by the size of data after deduplication. A higher ratio equals more capacity savings. A ratio may also be expressed by dropping the “:1” where “4:1” would be displayed as “4x.”

Figure. A visual representation of deduplication ratios.
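
To make the ratio concrete, here is a minimal sketch of the arithmetic. The function names and the sample sizes are illustrative assumptions, not something reported by vSAN.

```python
def dedup_ratio(logical_bytes: int, physical_bytes: int) -> float:
    """Return N for an "N:1" ratio: data size before / after deduplication."""
    if physical_bytes <= 0:
        raise ValueError("physical_bytes must be positive")
    return logical_bytes / physical_bytes


def savings_fraction(ratio: float) -> float:
    """Fraction of capacity saved for a given N:1 ratio."""
    return 1.0 - 1.0 / ratio


# Hypothetical example: 400 units written, 100 units actually stored.
ratio = dedup_ratio(logical_bytes=400, physical_bytes=100)
print(f"{ratio:g}:1 (or {ratio:g}x) saves {savings_fraction(ratio):.0%}")
# prints: 4:1 (or 4x) saves 75%
```

Note that savings grow more slowly than the ratio: going from 4:1 to 8:1 moves the savings from 75% to 87.5%.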

While a deduplication ratio is easy to understand, storage systems unfortunately may measure it in different ways. Some may show deduplication’s efficiency only as an overall “data reduction” ratio that includes other techniques such as data compression, cloning and thin provisioning. Others may show deduplication ratios that omit metadata and other overheads from the measurement. This is important to understand if you are comparing the effectiveness of deduplication between storage solutions.

Several factors influence the effectiveness of deduplication in a storage system. They include, but are not limited to:

- Design of deduplication system. Storage systems are often designed with tradeoffs of effectiveness versus effort, which is what drives different approaches to deduplication.

- Size/granularity of deduplication. This represents the unit of data in which the system looks for duplicates. The finer the granularity, the higher the likelihood of duplicate matches.

- Amount of data within the deduplication domain. Typically, the larger the amount of data, the better the chances are that it can be deduplicated with other data.

- Likeness of data. The unit of data evaluated must be an exact match to another unit of data before deduplication can provide any benefit. Sometimes applications may perform encryption or other techniques that undermine the ability for data to be deduplicated.

- Characteristics of data and workloads. Some applications generate data that is more favorable to deduplication than others. For example, structured data such as OLTP databases may exhibit less potential duplicate data than unstructured data.

The last two bullet points are related to workloads and data sets that are unique to the customer. These are often the reason why some data has a better ability to deduplicate than other data. But the design of the storage system plays a significant role in deduplication efficiency. Can it deduplicate with minimal interference with production workloads? Can it deduplicate at a fine level of granularity with a large deduplication domain for maximum efficiency? vSAN’s global deduplication has been designed to provide superior results with minimal impact on workloads.
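
The effect of granularity can be illustrated with a small sketch. This is a generic fixed-size-block deduplication model using SHA-256 fingerprints, not a description of how vSAN implements deduplication. The block sizes and the synthetic data are assumptions chosen for the example, and metadata overhead is ignored.

```python
import hashlib


def dedup_ratio(data: bytes, block_size: int) -> float:
    """Ratio of total blocks to unique blocks for fixed-size chunking."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    # Two blocks count as duplicates only if they match exactly.
    unique = {hashlib.sha256(block).digest() for block in blocks}
    return len(blocks) / len(unique)


# Synthetic data: a repeated 4 KiB block of zeros, each copy followed by
# a different 4 KiB block (32 KiB in total).
common = bytes(4096)
data = b"".join(common + bytes([i]) * 4096 for i in range(1, 5))

coarse = dedup_ratio(data, 8192)  # every 8 KiB block is unique
fine = dedup_ratio(data, 4096)    # the zero block repeats four times
print(f"8 KiB blocks: {coarse:g}:1, 4 KiB blocks: {fine:g}:1")
# prints: 8 KiB blocks: 1:1, 4 KiB blocks: 1.6:1
```

At the coarser granularity the repeated data is hidden inside larger blocks that differ from one another, so nothing matches. At the finer granularity the same bytes deduplicate.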

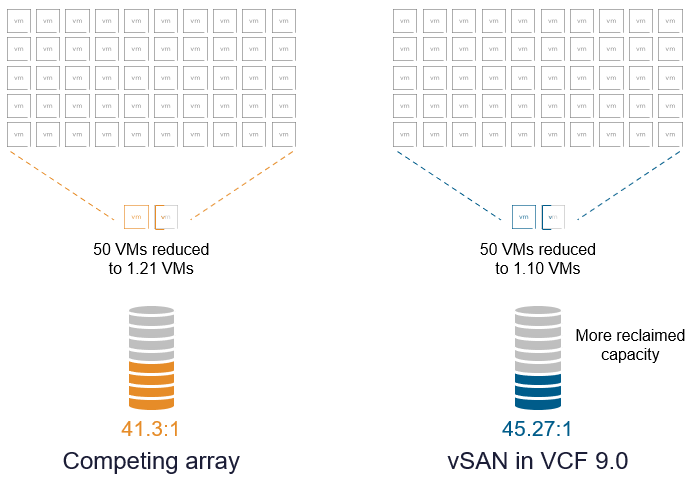

A simple internal test demonstrated vSAN’s superior design. 50 full clones were created on a competing storage array, and 50 full clones were created on vSAN. Factoring in both deduplication and compression capabilities, the storage array had an overall data reduction ratio of 41.3:1. vSAN had an overall data reduction ratio of 45.27:1. This represents the impressive deduplication capability of vSAN, paired with data compression for even more savings. While this example is not a representation of deduplication rates found in general data sets, it demonstrates the effectiveness of deduplication in vSAN.

Figure. Comparing data reduction rates between a competing storage array and vSAN in VCF 9.0.

Scaling out for Better Efficiency

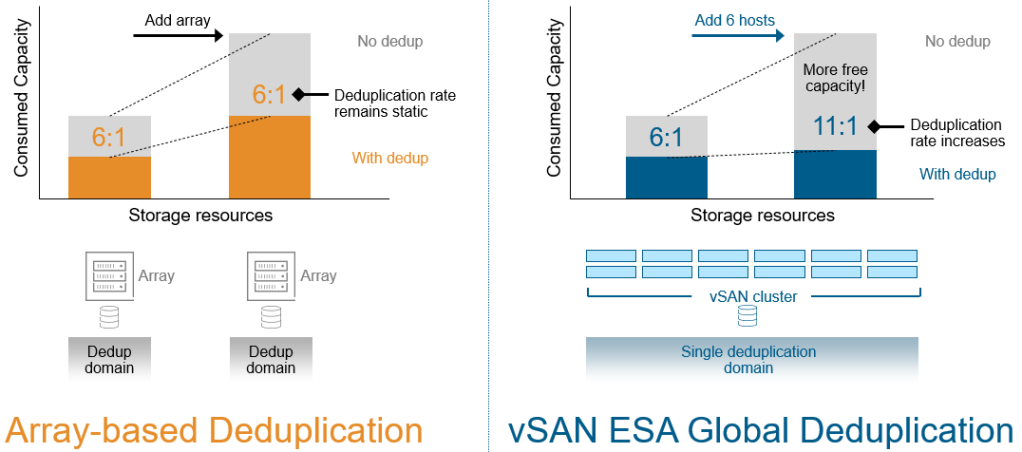

The design of deduplication in a storage system is a significant, but not the only, factor in the overall effectiveness of the technique. For example, the deduplication domain determines the boundary of data within which the system looks for duplicate blocks. The larger the deduplication domain, the greater the probability of duplicate data, and thus the more space efficient the system.

Traditional modular storage arrays were typically not designed as a distributed scale-out solution. Their deduplication domain is typically limited to the storage array. When a customer needs to scale out by adding another array, the deduplication domain will be split. This means that identical data can live on two different arrays but cannot be deduplicated across them, because the deduplication domain does not grow as additional storage is added.

vSAN global deduplication is different. It takes advantage of vSAN’s distributed scale-out architecture. The vSAN cluster is the deduplication domain, which means that as additional hosts are added, the deduplication domain automatically grows, increasing the probability of duplicate data and delivering an increasing deduplication ratio.

The figure below illustrates this example. On the left we see a traditional modular storage array that is producing a 6:1 deduplication ratio. If another array is added, each discrete array may offer that same deduplication ratio, but misses out on the ability to deduplicate data across arrays.

On the right we see a 6-host vSAN cluster that was producing a 6:1 deduplication ratio. As hosts are added, any data residing on the added hosts resides in the same deduplication domain as the original 6 hosts. This means that the deduplication ratio would increase as hosts are added and the data set becomes larger.

Figure. Demonstrating the increased efficiency of vSAN Global Deduplication as a cluster size is scaled.
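
The difference between split and shared deduplication domains can be sketched with a toy model. The block labels and the two data sets below are hypothetical, and the model ignores metadata and data placement. It only counts how many unique blocks must be stored.

```python
def stored_blocks(domains: list[list[str]]) -> int:
    """Blocks physically stored when each domain deduplicates on its own."""
    return sum(len(set(blocks)) for blocks in domains)


# Hypothetical block contents written to two storage systems.
array_a = ["os", "os", "app", "db1"]
array_b = ["os", "os", "app", "db2"]

logical = len(array_a) + len(array_b)        # 8 blocks written in total
split = stored_blocks([array_a, array_b])    # two separate domains
merged = stored_blocks([array_a + array_b])  # one shared domain

print(f"split domains: {logical / split:.2f}:1")
print(f"single domain: {logical / merged:.2f}:1")
# prints:
# split domains: 1.33:1
# single domain: 2.00:1
```

The same data yields a higher ratio in the single domain because the blocks common to both systems are stored once instead of twice.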

Using More of What You Already Own

Driving down costs has a strong correlation to increasing usage of existing hardware and software assets. The higher the usage of hardware and software, the less these assets go to waste, and the more one can defer future expenditures.

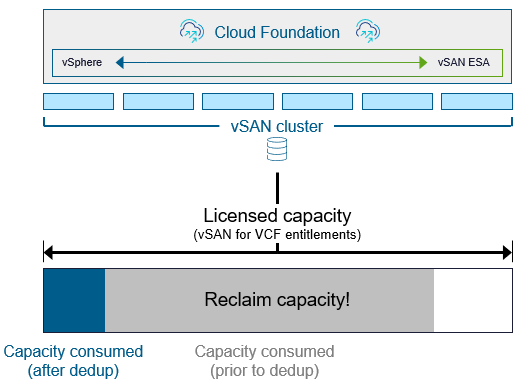

The licensing model of vSAN with VCF, paired with vSAN global deduplication, makes for a winning combination. Each VCF core license includes 1 TiB of raw vSAN storage. But with global deduplication, whatever capacity is reclaimed directly benefits you! For example, a 6-host vSAN cluster consisting of hosts with 32 cores per host would provide 192 TiB of vSAN storage as a part of the VCF licensing. If that cluster provides a 6:1 deduplication ratio, one could store 1,152 TiB (roughly 1.1 PiB) of data using the existing licensing.

Figure. Understanding licensed capacity versus effective capacity after deduplication.
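
The licensing arithmetic above can be checked with a short sketch. The 1 TiB per core entitlement and the 6:1 ratio come from the example in the text. The function names are illustrative, and the calculation is a simplification that ignores storage policy overhead and free-space reserves.

```python
TIB_PER_CORE = 1  # raw vSAN TiB included with each VCF core license


def licensed_raw_tib(hosts: int, cores_per_host: int) -> int:
    """Raw vSAN capacity entitlement for a cluster, in TiB."""
    return hosts * cores_per_host * TIB_PER_CORE


def effective_tib(raw_tib: float, ratio: float) -> float:
    """Data that fits in raw_tib at a given N:1 deduplication ratio."""
    return raw_tib * ratio


raw = licensed_raw_tib(hosts=6, cores_per_host=32)
eff = effective_tib(raw, ratio=6.0)
print(f"{raw} TiB raw -> {eff:.0f} TiB effective ({eff / 1024:.3f} PiB)")
# prints: 192 TiB raw -> 1152 TiB effective (1.125 PiB)
```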

Real Cost Savings

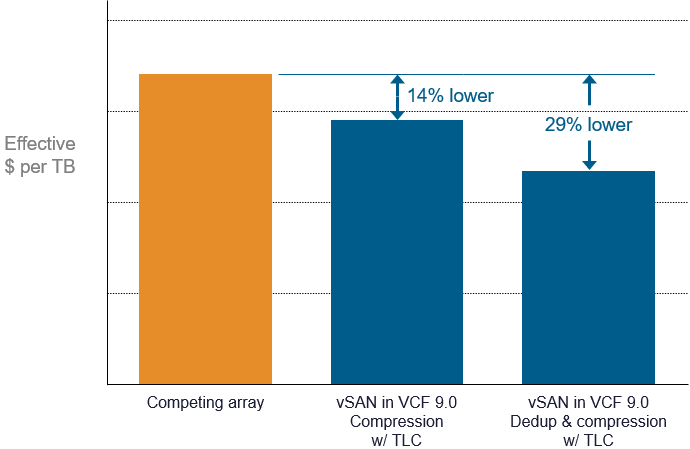

When storage is provided as a part of the VCF license, it makes sense that storage costs will go down because there is less to purchase. In the example below, we compare the effective price per TB when VCF uses a competing storage array with deduplication to serve up tier-1 workloads versus vSAN with VCF 9.0. Given that the data sets were structured data (SQL), data reduction rates were very modest. Based on a model of 10,000 VCF cores with assumed levels of CPU utilization and licensing costs, the effective price per TB is already 14% lower using only data compression in vSAN. But the cost of storage (cost per TB) is 29% lower when using vSAN deduplication and compression. Our own estimates suggest that the total cost of ownership for VCF will be reduced by as much as 34%.

Figure. Comparing effective costs of VCF when using a competing array versus vSAN.

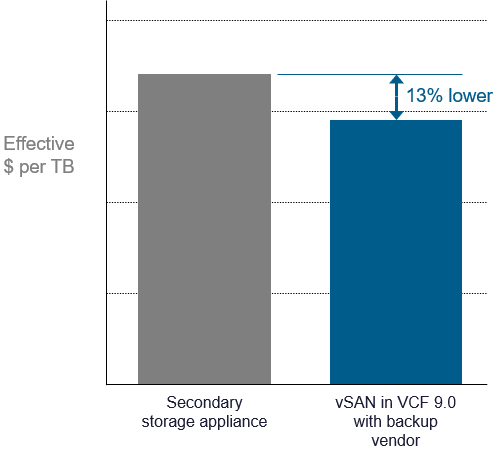

What about secondary storage? Even when vSAN uses Read-Intensive TLC devices and is paired with a common, third-party backup vendor, it can result in a lower cost per TB than an external secondary storage appliance. This comparison also used the same 10,000 VCF core environment with assumed levels of CPU utilization and licensing costs. Even with the additional costs of a third-party backup vendor, vSAN came in at a 13% lower cost per TB.

Figure. Comparing secondary storage costs using an appliance versus vSAN with a backup solution.

If you are interested in trying it out with the P01 release of VCF 9.0, you can contact Broadcom for more details using this form. We will be focusing on customers who would like to enable it on single site vSAN HCI or vSAN storage clusters ranging from 3-16 hosts using 25GbE or faster networking. Initially, some topologies like stretched clusters and some data services like data-at-rest encryption will not be supported while using this feature.

Summary

We believe global deduplication for vSAN in VCF 9.0 will be as good as or better than deduplication offerings with other storage solutions. Given that VCF customers are entitled to 1 TiB of raw vSAN storage for every VCF core licensed, there is tremendous potential to provide all of your storage capacity through your existing licensing and drive your costs lower than ever before. What if your most recent storage array was the last one you will ever purchase? vSAN in VCF 9.0 may make that a reality.
