Among the many features announced for VMware Cloud Foundation (VCF) 9.0, Memory Tiering stood out as one of the key capabilities in VMware vSphere. Like many vSphere features, Memory Tiering with NVMe offers a reduced total cost of ownership and full integration with ESX (yes, it is ESX again) and VMware vCenter. It also offers flexible deployment choices, giving our customers many options during configuration.

Memory Tiering with NVMe was introduced in VCF 9.0, and its value to companies looking to reduce cost deserves highlighting. This is especially true for hardware procurement, because memory is a substantial part of hardware bills of materials (BOMs). A feature that scales memory by leveraging low-cost hardware can make a huge difference in how IT spend is allocated and which projects get prioritized.

You can configure Memory Tiering with NVMe in three ways:

- from the vCenter UI that you know and love;
- from ESXCLI, for command-line lovers;
- through scripts with PowerCLI.

You can also combine these options, whether you are enabling Memory Tiering at the host level or at the cluster level.

Hardware Matters

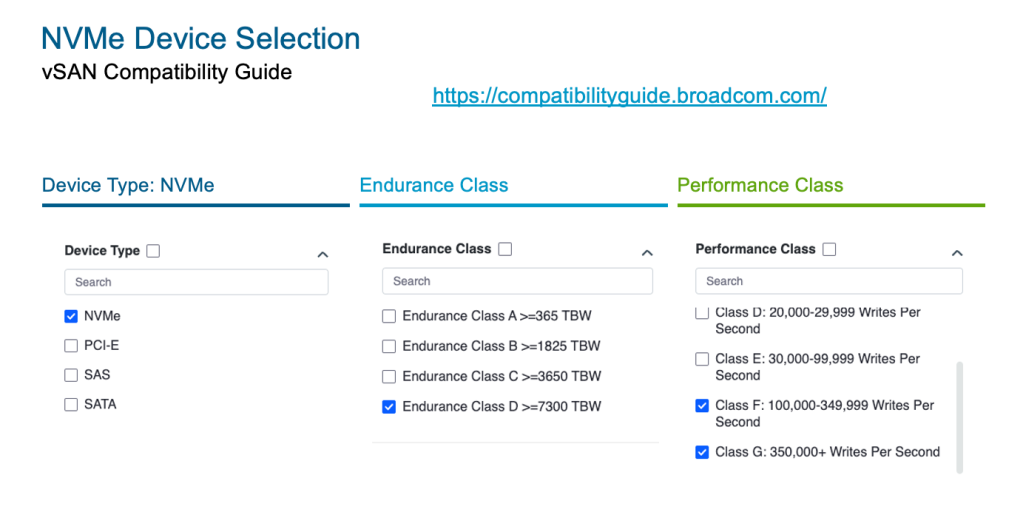

Before configuring Memory Tiering, pay close attention to the recommended hardware. This is a very strong recommendation, by the way. Because we will be using NVMe devices as memory, those devices must be resilient and must also perform extremely well under heavy use.

Just as we did with vSAN-recommended devices, we recommend NVMe devices that meet two thresholds for memory tiering:

- endurance class D (>=7300 TBW);
- performance class F or higher (100,000+ writes per second).

Check our vSAN compatibility guide to verify that the devices you plan to use meet these recommendations. Many form factors are supported. So if your server has no open slots for 2.5” drives but has an open M.2 slot, for example, you can certainly use that slot for Memory Tiering.
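To make the two thresholds concrete, here is a minimal Python sketch that checks a device's published specs against them. The `NvmeDevice` type, the `unmet_recommendations` helper and the sample specs are all hypothetical. The authoritative source for a device's endurance and performance class remains the vSAN compatibility guide.

```python
from dataclasses import dataclass

# Recommended minimums from the text: endurance class D (>= 7300 TBW)
# and performance class F (100,000+ writes per second).
MIN_ENDURANCE_TBW = 7300
MIN_WRITES_PER_SECOND = 100_000


@dataclass
class NvmeDevice:
    """Hypothetical record of a device's published specs."""
    model: str
    endurance_tbw: int       # terabytes written over the rated lifetime
    writes_per_second: int   # sustained write operations per second


def unmet_recommendations(dev: NvmeDevice) -> list[str]:
    """Return the recommendations the device misses (empty list means it qualifies)."""
    problems = []
    if dev.endurance_tbw < MIN_ENDURANCE_TBW:
        problems.append(f"endurance {dev.endurance_tbw} TBW < {MIN_ENDURANCE_TBW} TBW (class D)")
    if dev.writes_per_second < MIN_WRITES_PER_SECOND:
        problems.append(f"{dev.writes_per_second} writes/s < {MIN_WRITES_PER_SECOND} (class F)")
    return problems


if __name__ == "__main__":
    # Hypothetical candidate devices, not real product data.
    candidates = [
        NvmeDevice("example-mixed-use-u2", endurance_tbw=17_520, writes_per_second=250_000),
        NvmeDevice("example-read-intensive-m2", endurance_tbw=1_400, writes_per_second=60_000),
    ]
    for dev in candidates:
        issues = unmet_recommendations(dev)
        print(dev.model, "OK" if not issues else "; ".join(issues))
```

A device only qualifies when both checks pass. A fast but low-endurance drive is still flagged, which matches the emphasis above on resilience as well as performance.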

NVMe Partition Creation

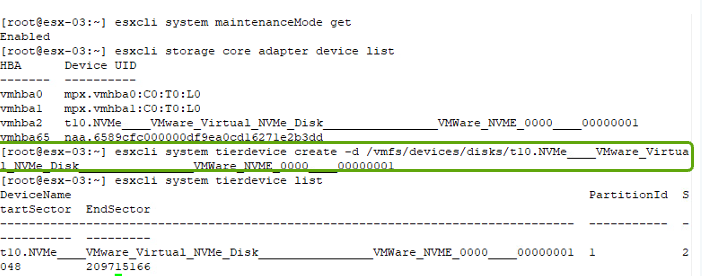

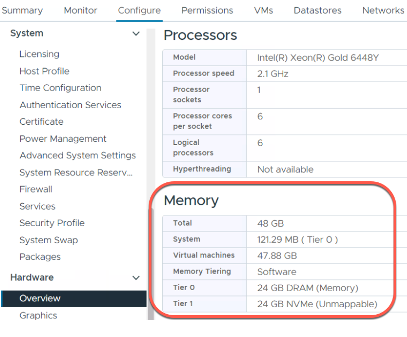

Once you have carefully selected the recommended NVMe devices, the next step is to create a partition for NVMe tiering. These devices can, by the way, be placed in a RAID configuration for redundancy. Any existing partitions on the selected device must be deleted first. The maximum partition size in this version is 4TB, but you can certainly use a larger device. The extra capacity lets writes cycle through more cells, which can potentially extend the life of the NVMe device.

The partition size depends on the device capacity (up to 4TB), but the amount of NVMe actually used is calculated from the host's DRAM and the configured ratio. The default DRAM:NVMe ratio in VCF 9.0 is 1:1, a 4x improvement over the tech preview in vSphere 8.0U3. For example, a host with 1TB of DRAM and a 4TB NVMe device gets a 4TB partition, but only 1TB of NVMe is used… unless the ratio is changed. The ratio is user configurable. Be cautious when changing it, though: a different ratio may have negative impacts, and the right value depends heavily on how active the workloads are. More on this later.

In this version, you create the partition from the ESXCLI command line on the host with the following command:

esxcli system tierdevice create -d /vmfs/devices/disks/<UID of NVMe device>

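The sizing rule above can be worked through with a short Python sketch. It encodes the 4TB partition cap and the "DRAM x ratio" rule from the text. It assumes the NVMe tier can never exceed the partition, so usage is capped there. The function names and the `nvme_per_dram` parameter are hypothetical illustrations, not ESX settings.

```python
# All sizes in terabytes (TB).
MAX_PARTITION_TB = 4.0  # maximum tier partition size in this version, per the text


def partition_size_tb(device_capacity_tb: float) -> float:
    """Tier partition size: the device capacity, capped at the 4TB maximum."""
    return min(device_capacity_tb, MAX_PARTITION_TB)


def nvme_tier_used_tb(dram_tb: float, device_capacity_tb: float,
                      nvme_per_dram: float = 1.0) -> float:
    """NVMe used as memory: DRAM x ratio (default 1:1).

    Assumption: usage cannot exceed the partition, so it is capped there.
    """
    return min(dram_tb * nvme_per_dram, partition_size_tb(device_capacity_tb))


def total_memory_tb(dram_tb: float, device_capacity_tb: float,
                    nvme_per_dram: float = 1.0) -> float:
    """Host memory visible after tiering: DRAM plus the NVMe tier."""
    return dram_tb + nvme_tier_used_tb(dram_tb, device_capacity_tb, nvme_per_dram)


if __name__ == "__main__":
    # The example from the text: 1TB of DRAM, a 4TB NVMe device, default 1:1 ratio.
    print(partition_size_tb(4.0))       # 4.0
    print(nvme_tier_used_tb(1.0, 4.0))  # 1.0
    print(total_memory_tb(1.0, 4.0))    # 2.0
```

With the default ratio, the 4TB partition exists, but only 1TB of it backs memory, so the host presents 2TB in total.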

Host/Cluster Configuration

After creating the tierdevice partition, the only step left is to configure the host or the cluster. Yes, you have the flexibility to configure one host, a few hosts, or the entire cluster. Ideally, you would configure a cluster homogeneously. However, some VM types are not yet supported with NVMe tiering:

- high-performance/low-latency VMs;
- security VMs (SEV, SGX, TDX);
- FT VMs;
- Monster VMs (1TB memory, 128 vCPUs);
- nested VMs.

If some of these VMs share a cluster with workloads that can benefit from Memory Tiering with NVMe, you have two options. You can dedicate some hosts to those VMs, or you can disable Memory Tiering at the VM level.
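When planning which hosts to tier, a quick inventory screen against the unsupported VM types can help. The Python sketch below is a minimal illustration. The `VmProfile` fields are hypothetical stand-ins for whatever inventory data you have. Reading "Monster VM" as at least 1TB of memory or at least 128 vCPUs is an assumption based on the figures quoted above.

```python
from dataclasses import dataclass

# Assumption: "Monster VM" is read here as >= 1TB of memory or >= 128 vCPUs.
MONSTER_MEMORY_GB = 1024
MONSTER_VCPUS = 128


@dataclass
class VmProfile:
    """Hypothetical summary of the VM properties that matter for tiering."""
    name: str
    memory_gb: int
    vcpus: int
    latency_sensitive: bool = False       # high-performance / low-latency VM
    confidential_computing: bool = False  # SEV, SGX, or TDX enabled
    fault_tolerance: bool = False         # protected by vSphere FT
    nested: bool = False                  # runs a nested hypervisor


def tiering_blockers(vm: VmProfile) -> list[str]:
    """Return the reasons a VM falls into a not-yet-supported category."""
    reasons = []
    if vm.latency_sensitive:
        reasons.append("high-performance/low-latency")
    if vm.confidential_computing:
        reasons.append("security (SEV/SGX/TDX)")
    if vm.fault_tolerance:
        reasons.append("FT")
    if vm.memory_gb >= MONSTER_MEMORY_GB or vm.vcpus >= MONSTER_VCPUS:
        reasons.append("monster VM")
    if vm.nested:
        reasons.append("nested")
    return reasons


def split_by_eligibility(vms: list[VmProfile]) -> tuple[list[str], dict[str, list[str]]]:
    """Separate VMs that can run on tiered hosts from those to keep elsewhere."""
    eligible, excluded = [], {}
    for vm in vms:
        blockers = tiering_blockers(vm)
        if blockers:
            excluded[vm.name] = blockers
        else:
            eligible.append(vm.name)
    return eligible, excluded


if __name__ == "__main__":
    inventory = [
        VmProfile("web-01", memory_gb=16, vcpus=4),
        VmProfile("sap-hana-01", memory_gb=1536, vcpus=96),
        VmProfile("tdx-vault-01", memory_gb=32, vcpus=8, confidential_computing=True),
    ]
    ok, blocked = split_by_eligibility(inventory)
    print("eligible:", ok)
    print("dedicate hosts or disable tiering per VM:", blocked)
```

The excluded list maps directly onto the two options in the text: place those VMs on dedicated non-tiered hosts, or disable Memory Tiering for them individually.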

To enable Memory Tiering on a host, you can use ESXCLI, PowerCLI, or the vCenter UI. In ESXCLI, it is as simple as setting this kernel parameter:

esxcli system settings kernel set -s MemoryTiering -v TRUE

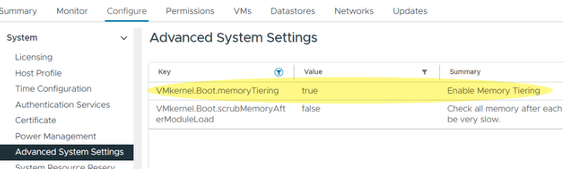

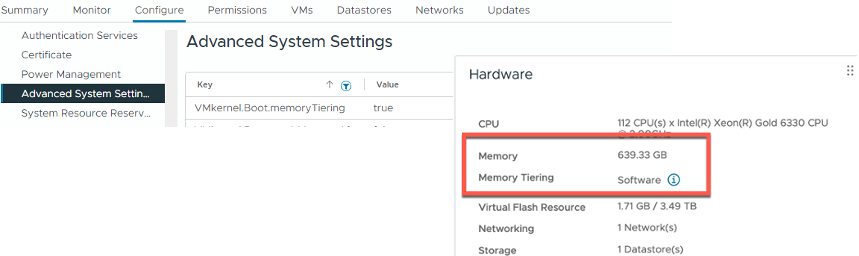

In the vCenter UI, it is just as easy: change VMkernel.Boot.memoryTiering to TRUE. Do notice the word BOOT. The host must be rebooted for this setting to take effect, so place it in maintenance mode before applying the change.
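Because the setting only takes effect at boot, you may want to confirm whether a host is still waiting for its reboot. `esxcli system settings kernel list -o MemoryTiering` reports both the configured and the runtime value of a kernel setting. The minimal Python sketch below parses that tabular output and flags a pending reboot when the two values differ. The sample output, including its description text, is illustrative rather than captured from a real host. The parser assumes that only the last column contains spaces.

```python
# Illustrative output only; the real column set and description text may differ by release.
SAMPLE_OUTPUT = """\
Name           Type  Configured  Runtime  Default  Description
-------------  ----  ----------  -------  -------  -----------
MemoryTiering  Bool  TRUE        FALSE    FALSE    Enable memory tiering
"""


def parse_kernel_settings(text: str) -> dict[str, dict[str, str]]:
    """Parse esxcli's table into {setting name: {column name: value}}."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    rows = {}
    for line in lines[1:]:
        if set(line.replace(" ", "")) == {"-"}:
            continue  # skip the dashed separator row under the header
        # Only the last column (Description) may contain spaces.
        values = line.split(maxsplit=len(header) - 1)
        row = dict(zip(header, values))
        rows[row["Name"]] = row
    return rows


def reboot_pending(rows: dict[str, dict[str, str]], setting: str = "MemoryTiering") -> bool:
    """A boot-time setting is pending when its configured value differs from the running one."""
    row = rows[setting]
    return row["Configured"].upper() != row["Runtime"].upper()


if __name__ == "__main__":
    settings = parse_kernel_settings(SAMPLE_OUTPUT)
    print(settings["MemoryTiering"]["Configured"], settings["MemoryTiering"]["Runtime"])
    print("reboot pending:", reboot_pending(settings))
```

Right after `esxcli system settings kernel set -s MemoryTiering -v TRUE`, the configured value should read TRUE while the runtime value still reads FALSE. Once the host has rebooted, the two should match.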

What if you want to configure the entire cluster? Well, that is even easier: use the Desired State Configuration feature in vCenter. Follow three steps:

1. Create a new draft.
2. Under vmkernel > options > memory_tiering, set the value to true.
3. Apply the draft to all hosts in the cluster (or just some of them).

Once you remediate the configuration, vCenter puts hosts into maintenance mode and reboots them in a rolling fashion as needed. And that is it. Now you can enjoy better VM consolidation ratios, a reduced cost of ownership, and easy memory scaling at a much lower price point. After configuration is complete, you will see at least a 2x memory increase, and the memory tiers will be visible in the vCenter UI.
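A small simulation can help picture the rolling behavior. The minimal Python sketch below is not vCenter's remediation logic. The `Host` model and helpers are hypothetical and only encode what the text describes:

- hosts are remediated one at a time;
- each host passes through maintenance mode and a reboot;
- hosts that already have the setting are skipped.

The memory calculation assumes the default 1:1 ratio and partitions large enough to hold the full NVMe tier.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """Hypothetical model of a cluster host during remediation."""
    name: str
    dram_tb: float
    tiering_enabled: bool = False
    in_maintenance: bool = False


def rolling_remediate(hosts: list[Host]) -> list[str]:
    """Enable tiering one host at a time and return the sequence of events."""
    events = []
    for host in hosts:
        if host.tiering_enabled:
            continue  # already compliant: no maintenance mode or reboot needed
        # Rolling: never take a second host down while another is still out.
        assert not any(h.in_maintenance for h in hosts)
        host.in_maintenance = True
        events.append(f"{host.name}: enter maintenance mode")
        host.tiering_enabled = True  # setting applied; takes effect on the reboot
        events.append(f"{host.name}: apply memory_tiering=true and reboot")
        host.in_maintenance = False
        events.append(f"{host.name}: exit maintenance mode")
    return events


def cluster_memory_tb(hosts: list[Host], nvme_per_dram: float = 1.0) -> float:
    """DRAM plus the NVMe tier (DRAM x ratio) on every host with tiering enabled."""
    return sum(h.dram_tb * (1 + nvme_per_dram) if h.tiering_enabled else h.dram_tb
               for h in hosts)


if __name__ == "__main__":
    cluster = [Host(f"esx-{i:02d}", dram_tb=1.0) for i in range(1, 5)]
    print("before:", cluster_memory_tb(cluster), "TB")
    for event in rolling_remediate(cluster):
        print(event)
    print("after:", cluster_memory_tb(cluster), "TB")
```

Running remediation a second time produces no events, because every host is already compliant. This mirrors the "as needed" behavior described above. At a 1:1 ratio, the four 1TB hosts go from 4TB to 8TB of cluster memory.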

Discover more from VMware Cloud Foundation (VCF) Blog