Implementing Private AI

What does it mean to “implement Private AI” for one or more use cases on the VMware Cloud Foundation (VCF) platform?

This series of blogs provides examples of what it means to “implement Private AI”. These example use cases are in use internally at Broadcom today. They have already proven valuable to Broadcom’s own business, which should give you more confidence that similar use cases can be achieved with your own on-premises VCF installation. We discuss two use cases in part 1 and two more in part 2 of this series.

These use cases were chosen to improve the business by:

- increasing the efficiency of customer-facing employees using chatbots with company data,

- helping developers write better code with coding assistants.

The example use cases for VMware Private AI Foundation with NVIDIA that we discuss are:

1. Build a (back-office) chatbot to help customer-facing representatives and employees in general deal with sensitive company data in a contact center or back-office scenario.

2. Provide a coding assistant to software engineers to help develop their applications.

3. Use document summarization techniques to help employees understand existing company-private content or create new content.

4. Create an internal hosting portal for foundation models from the open-source community such that data scientists can easily choose different models to find the best fit for their purpose.

We examine the first two use cases in this blog and cover the others in subsequent articles.

Use Case 1: Building a Chatbot that Understands Company Private Data

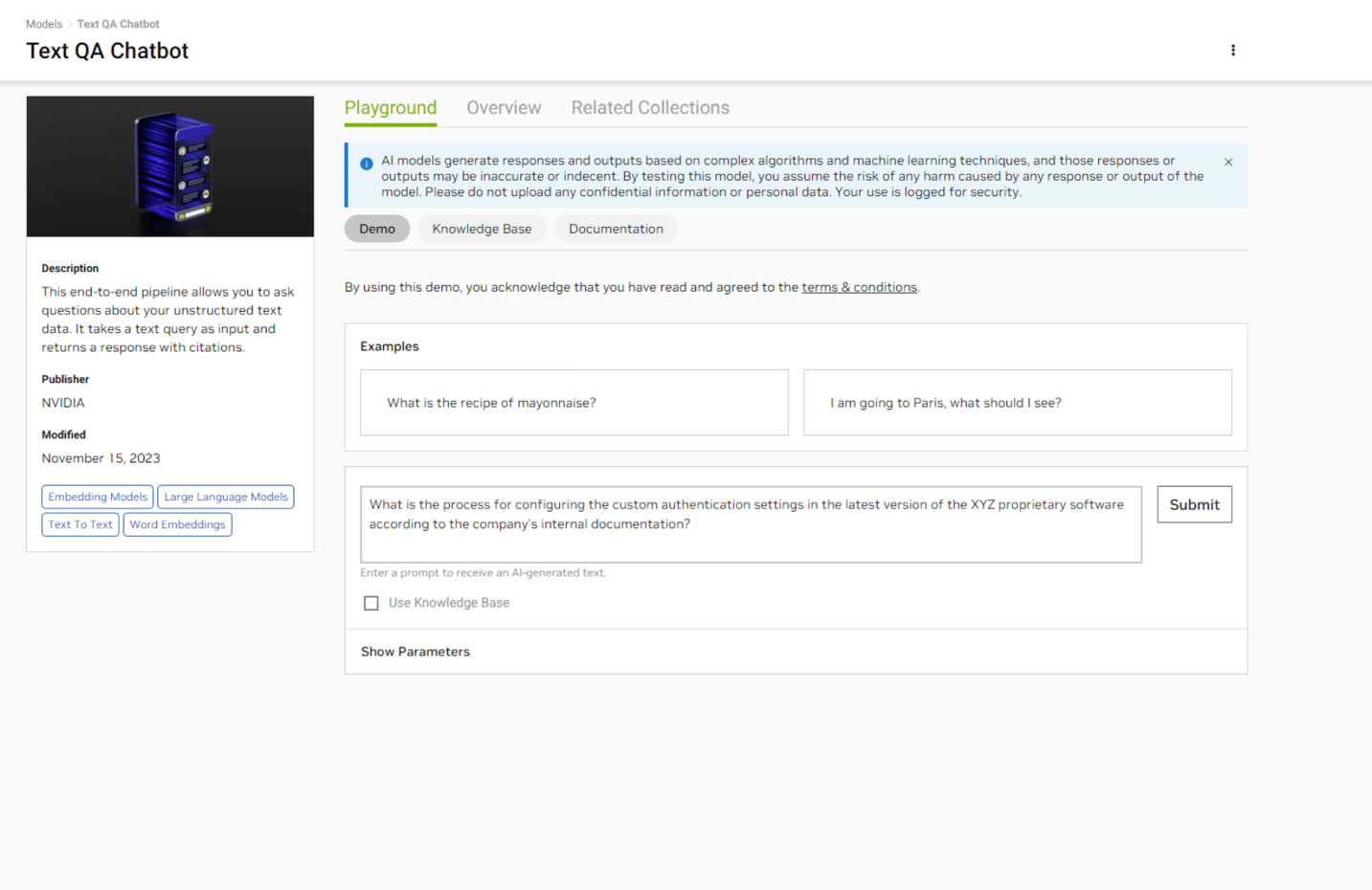

This application type is the most common starter application for people embarking on their Generative AI exploration. The core value that differentiates it from public cloud chatbots is the use of private data to answer questions about matters internal to the company. This chatbot is for internal purposes only, which reduces perceived risk and provides a learning opportunity for future externally facing applications. Figure 1 shows the user interface of a simple starter chatbot application from the NVIDIA AI Enterprise suite that comes with the product; many other example chatbots are available for those starting out in this area. You can also read a technical brief from NVIDIA on chatbots.

Figure 1: A text question-and-answer sample chatbot from the VMware Private AI Foundation with NVIDIA collection


The steps for trying out this starter sample chatbot as a learning exercise are given in the VMware Private AI Foundation with NVIDIA technical overview.

Contemporary AI-aware chatbot applications are designed to use a vector database that contains your private company data. This data is chunked, indexed and loaded into the vector database offline, separately from everyday use of the chatbot. When a user’s question arrives at the chatbot application, any data relevant to the question is first retrieved from the vector database, and then both the retrieved data and the original query are fed to a Large Language Model (LLM). The LLM processes and summarizes the retrieved data, together with the original prompt (question), into an easily digestible answer for the user. This design approach is referred to as Retrieval Augmented Generation (RAG). RAG has become an accepted way of structuring a Generative AI application to augment the LLM’s knowledge with your company’s private data, thus providing more accurate answers. Updating the private data then becomes an update to the database, which is far easier than re-training or fine-tuning the model.
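To make the RAG flow more concrete, here is a minimal, self-contained Python sketch of the chunk, index, retrieve and prompt-assembly steps. It is an illustration under stated assumptions, not the implementation used by any VMware or NVIDIA product: a bag-of-words term-count vector stands in for a real embedding model, an in-memory list stands in for the vector database, and the final LLM call is omitted. The names `VectorIndex`, `chunk`, `embed` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts. A real system would call an
    # embedding model here instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(text: str, size: int = 40) -> list[str]:
    # Chunking step: split a document into windows of `size` words.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class VectorIndex:
    """Hypothetical in-memory stand-in for the vector database."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add_document(self, text: str) -> None:
        # Offline loading: chunk, embed and store each piece.
        for piece in chunk(text):
            self.entries.append((piece, embed(piece)))

    def search(self, query: str, k: int = 3) -> list[str]:
        # Retrieval: return the k chunks most similar to the query.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    # Augmentation: combine retrieved chunks with the original question.
    # Sending this prompt to an LLM is outside the scope of the sketch.
    bullets = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{bullets}\n\nQuestion: {question}"


if __name__ == "__main__":
    index = VectorIndex()
    index.add_document("Release 9.1 adds GPU sharing for inference workloads.")
    index.add_document("The cafeteria is open from 8am to 3pm on weekdays.")
    question = "Which release adds GPU sharing?"
    print(build_prompt(question, index.search(question, k=1)))
```

Adding or changing private data in this sketch only means calling `add_document` again; the model itself is untouched, which is the property that makes RAG easier to maintain than re-training.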

An example use of the chatbot application would be as follows. A customer is in conversation with a company employee and asks about a feature they want to see in a future release of the company’s software product. The employee is not sure of the answer, so they consult the chatbot, interacting with it conversationally in natural language. The backend logic in the chatbot application retrieves the relevant data from the private data source, processes it with the LLM and presents it in summary form to the employee, who can then answer the customer’s question more accurately.

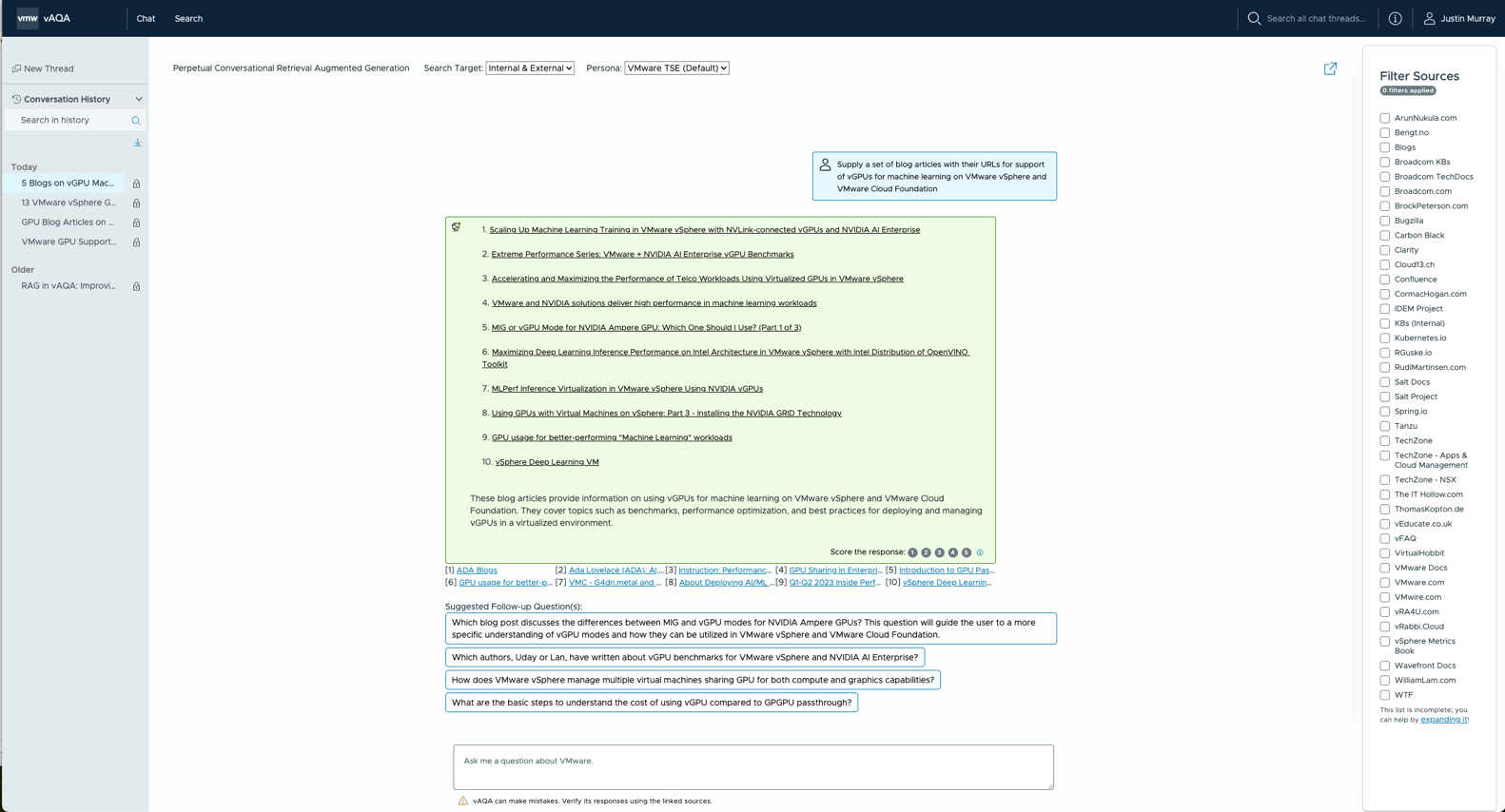

Within Broadcom, our data scientists implemented a production chatbot for internal use named vAQA, or “VMware’s Automated Question Answering Service”. It has powerful features for interactive chat or search over data collected both internally and externally, as shown in the Filter Sources panel in the right-side navigation. For example, a user may want to confine a search to external data sources only, preventing any internal data from being used, to guarantee confidentiality. Users can do that very easily with the filtering options.

Even the simplest use of vAQA shows the power of asking natural language questions of an informed system, as seen in Figure 2. As a test, we asked it for blog articles, with their URLs, that have information about virtual GPUs on VCF. It responded with a set of pertinent URLs and, importantly, it cites its sources. vAQA offers much more functionality than data retrieval and processing, but that is outside the scope of this article.

Figure 2: VMware’s Automated Question Answering Service (vAQA) responds to a question about vGPUs

This chatbot system uses embeddings stored in a database for question-related lookup and one or more LLMs for processing the results, and it relies on GPU drivers at the shared infrastructure level to support that processing.
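The source filtering and cited URLs described for vAQA can be illustrated with a small Python sketch. This is not vAQA’s code, whose internals are not described here; the `Chunk` record, its `origin` field and the word-overlap score (a simple stand-in for embedding similarity) are all hypothetical. The design point it shows is that the filter runs before ranking, so excluded sources never reach the LLM, and that each hit keeps its URL so the answer can cite it.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    url: str
    origin: str  # hypothetical metadata field: "internal" or "external"


def overlap(query: str, text: str) -> int:
    # Toy relevance score (shared lowercase words); a real service would
    # compare embeddings instead.
    return len(set(query.lower().split()) & set(text.lower().split()))


def search_with_sources(query: str, chunks: list[Chunk],
                        allowed_origins: set[str], k: int = 3) -> list[str]:
    # 1. Apply the user's source filter first, so excluded content
    #    (for example, internal documents) never reaches the LLM.
    candidates = [c for c in chunks if c.origin in allowed_origins]
    # 2. Rank the remaining chunks and keep only those with some relevance.
    ranked = sorted(candidates, key=lambda c: overlap(query, c.text), reverse=True)
    hits = [c for c in ranked[:k] if overlap(query, c.text) > 0]
    # 3. Return the URLs so the answer can cite its sources.
    return [c.url for c in hits]
```

With `allowed_origins={"external"}`, an internal chunk is dropped even when it is the best match, which mirrors the confidentiality use of the filter described above.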

How VMware Private AI Foundation with NVIDIA Enables Creation of a Chatbot for Private Data

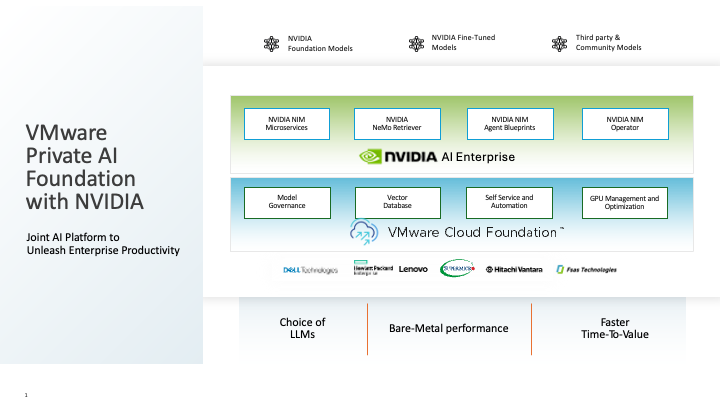

Figure 3 summarizes the various parts of the VMware Private AI Foundation with NVIDIA architecture. More detail is available in VMware Private AI Foundation with NVIDIA – a Technical Overview and on the NVIDIA AI Enterprise documentation site.

Figure 3: The VMware Private AI Foundation with NVIDIA architecture

To implement a chatbot application, we can use several components of the above architecture to design and deliver a working application, starting with the blue layer from VMware.

- The Model Governance facility would be used to test and evaluate pre-trained large language models, and to store those we consider safe and appropriate for business use in a library (referred to as a “model gallery”, which is based on Harbor). The process for this model evaluation is unique to each enterprise.

- The Vector Database functionality would be used by deploying the database in a user-friendly way using VCF Automation. The database would subsequently be loaded with cleansed and organized private business data.

- “Self-service automation” tooling based on VCF Automation would be used to provision sets of deep learning VMs for testing the model, and subsequently to provision Kubernetes clusters for deploying and scaling the application.

- The GPU Monitoring facilities within VCF Operations would be used to gauge the performance-related effects of the application on the GPU hardware and on the system as a whole.
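The model evaluation step is enterprise-specific, but its general shape, a gate that admits a model into the approved library only if it passes an internal test set, can be sketched in Python. Everything here is a hypothetical illustration: `ModelGallery` is an in-memory stand-in and not Harbor’s API, and the substring-match scoring and 0.9 threshold are arbitrary assumptions that a real evaluation process would replace with its own criteria.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelGallery:
    """Hypothetical in-memory stand-in for an approved-model library."""

    approved: dict[str, str] = field(default_factory=dict)  # name -> version

    def evaluate_and_store(
        self,
        name: str,
        version: str,
        generate: Callable[[str], str],
        test_cases: list[tuple[str, str]],
        threshold: float = 0.9,
    ) -> bool:
        # Score the candidate on an enterprise-defined test set: a case
        # passes if the expected text appears in the model's answer.
        if not test_cases:
            raise ValueError("an evaluation needs at least one test case")
        passed = sum(
            expected.lower() in generate(prompt).lower()
            for prompt, expected in test_cases
        )
        score = passed / len(test_cases)
        # Only models that meet the threshold are admitted to the gallery.
        if score >= threshold:
            self.approved[name] = version
            return True
        return False

    def is_deployable(self, name: str, version: str) -> bool:
        # Provisioning tooling would check the gallery before deploying.
        return self.approved.get(name) == version
```

Keeping the gate separate from deployment means the same approved list can be consulted both when data scientists test models in deep learning VMs and when applications are rolled out to Kubernetes.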

For best practices and technical advice from VMware authors on deploying your own RAG-based chatbot application, read the VMware RAG Starter Pack article along with the technical documents mentioned above.

Use Case 2: Providing a Coding Assistant to Help Engineers with Their Development Process

Providing a coding assistant to accelerate the software development processes is one of the highest impact use cases for any organization developing software. This includes inline code suggestions, auto-completion, refactoring, code reviews, and a variety of IDE integrations, among others.

VMware’s engineers and data scientists worked with a number of AI-driven coding assistant tools and, after much investigation, settled on two third-party vendors, Codeium and Tabnine, whose products are integrated with VMware Private AI Foundation with NVIDIA. We briefly describe the first of those two here.

The key idea is to help developers while they are coding, so that they can chat with an AI “adviser” without interrupting their flow. The adviser gives code suggestions directly within the editor, which can be accepted with a simple press of the Tab key. According to Codeium, over 44% of newly committed code in its customer base comes from the Codeium tooling. For more information on this code adviser, see this article.

One of the most interesting features of coding assistants is their ability to predict your next action, beyond just inserting the next code snippet. A coding assistant can take context from both before and after the current cursor position and propose a code insertion with that context in mind. Coding is just one part of the overall development process, of course, so the goal is to help with code review, testing, documentation, and refactoring as well. Developers also work in teams, so collaboration across team members can be augmented too, using multi-repository indexing, seat management, and other techniques.
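Using context from both sides of the cursor is commonly done with a fill-in-the-middle (FIM) prompt: the code before the cursor (prefix) and after it (suffix) are sent to the model, which generates the missing middle. The Python sketch below builds such a prompt. It is an illustration, not Codeium’s implementation: the sentinel strings follow the convention of some open code models such as StarCoder, but each FIM-trained model defines its own special tokens, and the `max_chars` budget is an arbitrary assumption.

```python
# Sentinel strings in the style used by some open code models (for
# example StarCoder); other models define different special tokens.
PREFIX_TOKEN = "<fim_prefix>"
SUFFIX_TOKEN = "<fim_suffix>"
MIDDLE_TOKEN = "<fim_middle>"


def build_fim_prompt(source: str, cursor: int, max_chars: int = 2000) -> str:
    """Build a fill-in-the-middle prompt from the code around `cursor`."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor is outside the source text")
    # Keep the code closest to the cursor on each side, within a budget.
    prefix = source[max(0, cursor - max_chars):cursor]
    suffix = source[cursor:cursor + max_chars]
    # The model generates the text that belongs between prefix and suffix.
    return f"{PREFIX_TOKEN}{prefix}{SUFFIX_TOKEN}{suffix}{MIDDLE_TOKEN}"


if __name__ == "__main__":
    code = "def add(a, b):\n    \n\nprint(add(1, 2))\n"
    cursor = code.index("    ") + 4  # cursor inside the empty function body
    print(build_fim_prompt(code, cursor))
```

Because the suffix shows that `add(1, 2)` is printed afterwards, a model prompted this way has the context to complete the body with a `return` statement rather than guessing from the prefix alone.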

How VMware Private AI Foundation with NVIDIA Helps in Deploying Coding Assistants

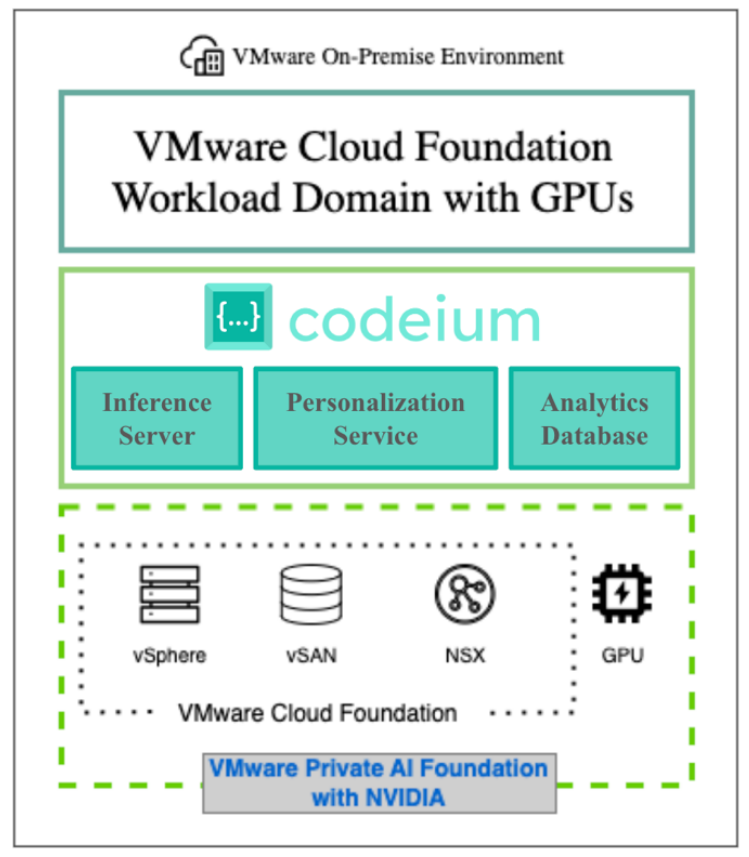

The third-party coding assistant from Codeium is deployed on-premises, either with Docker in a VM or on a Kubernetes cluster, such as one created by the vSphere Kubernetes Service (VKS). None of the user’s code, whether written by hand or generated by the tool, leaves the company, which protects the intellectual property embodied in it. The target Kubernetes cluster is created by VCF Automation and is made GPU-aware through a VMware Private AI Foundation with NVIDIA feature, the NVIDIA GPU Operator. This Operator deploys the correct vGPU drivers into pods running on that Kubernetes cluster to support the virtual GPU functionality. Once this is in place, the Codeium software is deployed onto the cluster using Helm charts. The individual parts of the Codeium infrastructure include an Inference Server, a Personalization Server and an Analytics Database, as shown in Figure 4.
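Once the GPU Operator is in place, a workload on the cluster obtains a GPU by requesting the `nvidia.com/gpu` resource, which the Operator’s device plugin advertises on GPU nodes. The Python sketch below builds such a Pod manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts. It is illustrative only: Codeium’s Helm charts define their own manifests, and the pod name and container image used here are hypothetical.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Return a Kubernetes Pod manifest (as a dict) that requests GPUs.

    The device plugin deployed by the NVIDIA GPU Operator advertises the
    `nvidia.com/gpu` resource, so the scheduler places this pod only on a
    node with enough free GPUs.
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # Extended resources such as GPUs are set in limits;
                # Kubernetes then uses the same value as the request.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; save the output as pod.json and apply it.
    manifest = gpu_pod_manifest("inference-test", "registry.example.com/inference:latest")
    print(json.dumps(manifest, indent=2))
```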

Figure 4: The Codeium Assistant Software running on the VMware Private AI Foundation with NVIDIA

You can get more information on using Codeium with VMware Private AI Foundation with NVIDIA in this solution brief: https://www.vmware.com/docs/codeium-vcf-solution-brief

Here are two simple examples of using Codeium. First, we asked it to generate a Python function from a text description.

Figure 5: The Codeium assistant writing a new function based on a natural language instruction

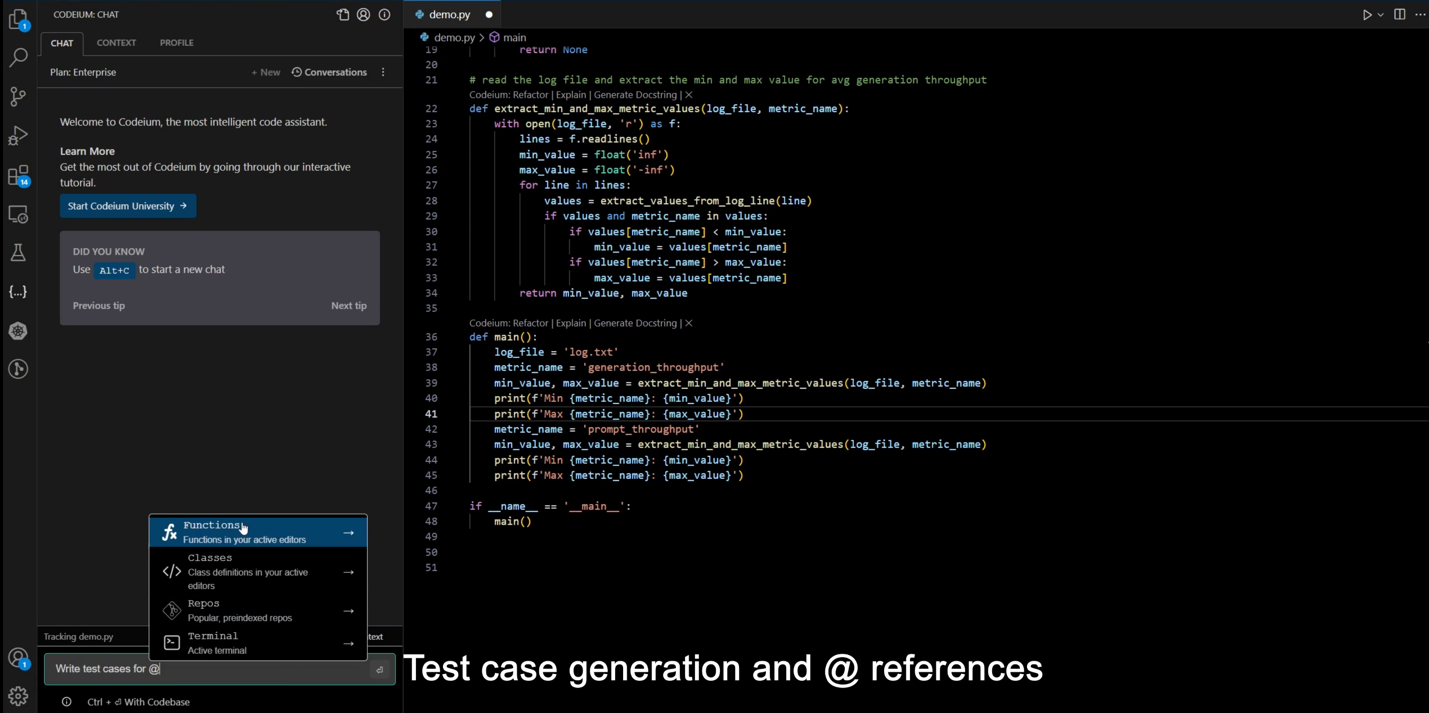

Second, we asked the coding assistant to write test cases for the function it had generated earlier.

Figure 6: The Codeium assistant creating test cases for functionality that it has implemented earlier
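As a hypothetical text illustration of the same two-step workflow, here is a function written from a natural language instruction, followed by unit tests for it. This is not the code shown in Figures 5 and 6; the instruction, the `is_palindrome` function and its tests are made up for illustration, using Python’s standard `unittest` module.

```python
import unittest


# Instruction given to the assistant (hypothetical example):
# "Write a function that checks whether a string is a palindrome,
#  ignoring case, spaces and punctuation."
def is_palindrome(text: str) -> bool:
    # Keep only letters and digits, lowercased, then compare with the reverse.
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]


# Follow-up request (hypothetical): "Write test cases for is_palindrome."
class TestIsPalindrome(unittest.TestCase):
    def test_simple_palindrome(self):
        self.assertTrue(is_palindrome("racecar"))

    def test_ignores_case_and_punctuation(self):
        self.assertTrue(is_palindrome("A man, a plan, a canal: Panama"))

    def test_not_palindrome(self):
        self.assertFalse(is_palindrome("VMware"))

    def test_empty_string(self):
        self.assertTrue(is_palindrome(""))
```

Saved as a module, the tests can be run with `python -m unittest`.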

Summary

In part 1 of this series, we looked at two example use cases of implementing Private AI on VCF: a back-office chatbot that improves customer experience in contact centers and a coding assistant that helps engineers be more efficient in their work. We will expand on these examples with two more use cases in part 2. These examples come from Broadcom’s own experience in deploying private AI. There will naturally be many more use cases, both for specific vertical industries and horizontally across industries, as the field matures. However, we have found that deploying AI privately, and early, can give you a business advantage. For further information, see the Private AI Ready Infrastructure for VMware Cloud Foundation Validated Solution and the VMware Private AI Foundation with NVIDIA Guide.

For financial services use cases, see the Private AI: Innovation in Financial Services Combined with Security and Compliance article.

More information on use cases is available in Why Private AI is becoming the preferred choice for enterprise AI deployment.

VCF makes deploying these two use cases seamless for enterprises, using advanced technologies and automation to deliver complex tasks quickly while keeping your data secure on-premises. You can get started with VCF today!

Discover more from VMware Cloud Foundation (VCF) Blog
