In today’s dynamic business landscape, Generative AI (Gen AI) applications have emerged as pivotal tools for organizations seeking to optimize operations, enhance decision-making processes, and gain competitive advantage.

AI technologies encompass many capabilities, including machine learning, deep learning, natural language processing, high-performance computing, computer vision, and robotics. These technologies are being applied across various industries, revolutionizing traditional business models and unlocking unprecedented opportunities.

One of the primary benefits of AI applications lies in their ability to analyze vast amounts of data swiftly and accurately. Through advanced algorithms, AI systems can identify patterns, trends, and insights that might go unnoticed by human analysts. This data-driven approach empowers organizations to make informed decisions, improve strategic planning, and anticipate market changes more precisely.

Embracing the challenges of AI technology

Organizations must proactively address risks related to data privacy, cost, performance, choice, and compliance to deploy AI successfully and to mitigate threats such as unauthorized access, breaches, and data leaks.

In addition, AI depends on a deep stack of foundation software. The number of packages to manage, the risk of introducing security vulnerabilities, and the tangled web of dependencies make maintaining a consistent, secure, and stable platform for building and running AI a complex, resource-intensive undertaking, one that many enterprises find nearly impossible and that slows projects down and hinders success.

Tackling Challenges Head-On

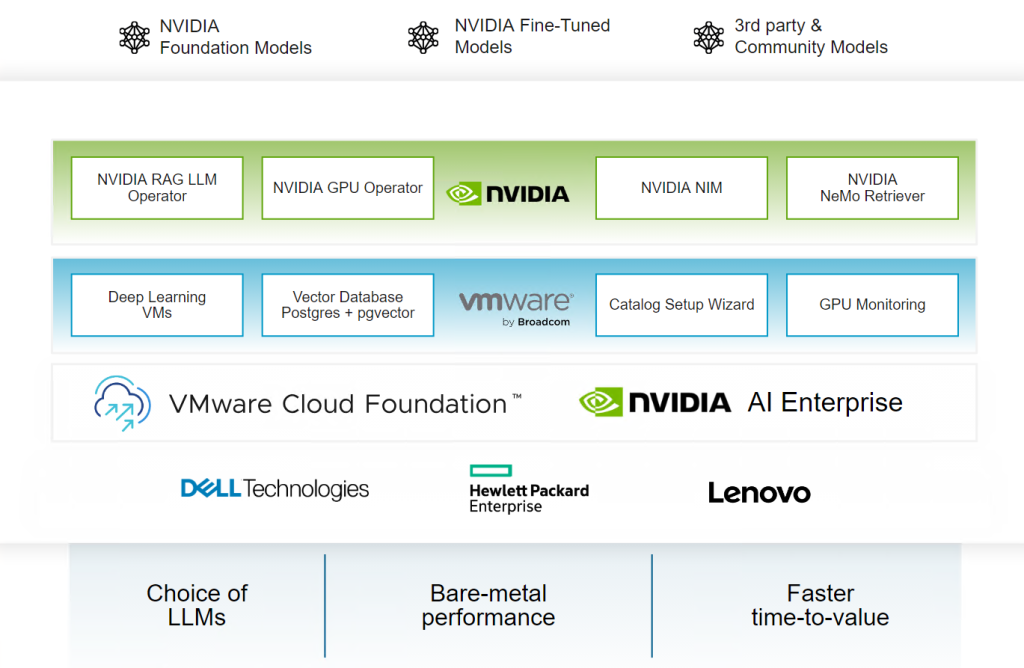

To address these challenges for our customers, and in collaboration with our partner NVIDIA, we are introducing the initial availability of a new capability called Private AI Automation Services, powered by VMware Aria Automation 8.16.2 and VMware Private AI Foundation with NVIDIA on VMware Cloud Foundation.

VMware Cloud Foundation provides a unified platform for managing all workloads, including VMs, containers, and AI technologies, through a self-service, automated IT environment.

VMware Private AI Foundation with NVIDIA provides a high-performance, secure, cloud-native AI software platform for provisioning AI workloads based on NVIDIA GPU Cloud (NGC) containers, which VMware by Broadcom has validated on vSphere ESXi hosts with NVIDIA GPUs. NGC provides containers, models, model scripts, and industry solutions for deep learning, machine learning, and high-performance computing (HPC), so data scientists, developers, and researchers can focus on building solutions and gathering insights faster.

This integration offers Private AI Automation Services, a collection of features that enable Cloud Admins to quickly design, curate, and deliver optimized AI infrastructure catalog objects through Aria Automation’s self-service Service Broker portal.

In this release, the new Catalog Setup Wizard helps Cloud Admins publish the following catalog items:

- AI Workstation – A GPU-enabled Deep Learning VM that can be configured with the desired vCPU, vGPU, Memory, and AI/ML NGC containers from NVIDIA.

- AI Kubernetes Cluster – A GPU-enabled Tanzu Kubernetes cluster automatically configured with the NVIDIA GPU Operator.

Note: The Catalog Setup Wizard is not enabled by default. Customers must contact VMware by Broadcom Professional Services to activate it for their organization.

Together, VMware Cloud Foundation and VMware Private AI Foundation with NVIDIA give developers, data scientists, and ML practitioners a comprehensive solution for AI/ML infrastructure services.

At the same time, IT administrators can ensure governance and control of resources through Consumption Policies such as Approvals, Leasing, Resource Quota, IaC Templates, and Role-based Access Control. These policies allow project members to efficiently utilize AI/ML infrastructure services while guaranteeing optimal and secure resource usage.
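In Aria Automation these policies are configured in the product itself, not in code. Purely to illustrate how approval, leasing, and quota rules might interact when a project member requests GPU resources, here is a minimal Python sketch. The ProjectPolicy and Request types, the evaluate function, and the threshold values are hypothetical and do not reflect Aria Automation's actual policy engine or API.

```python
from dataclasses import dataclass


@dataclass
class ProjectPolicy:
    """Hypothetical consumption policy for one project (illustrative only)."""
    max_gpus: int                # resource quota: total vGPUs the project may hold
    max_lease_days: int          # leasing: longest lease a single request may ask for
    approval_gpu_threshold: int  # approvals: requests above this need sign-off


@dataclass
class Request:
    """Hypothetical catalog request from a project member."""
    gpus: int
    lease_days: int


def evaluate(policy: ProjectPolicy, gpus_in_use: int, req: Request) -> str:
    """Return 'deny', 'needs_approval', or 'approve' for a catalog request."""
    if gpus_in_use + req.gpus > policy.max_gpus:
        return "deny"            # would exceed the project's resource quota
    if req.lease_days > policy.max_lease_days:
        return "deny"            # lease is longer than the policy allows
    if req.gpus > policy.approval_gpu_threshold:
        return "needs_approval"  # large GPU requests are routed to an approver
    return "approve"


policy = ProjectPolicy(max_gpus=8, max_lease_days=30, approval_gpu_threshold=2)
print(evaluate(policy, gpus_in_use=4, req=Request(gpus=1, lease_days=7)))  # approve
print(evaluate(policy, gpus_in_use=4, req=Request(gpus=4, lease_days=7)))  # needs_approval
```

Checking quota and lease length before the approval rule means an approver is never asked to sign off on a request that would be rejected anyway; that ordering is a design choice of this sketch.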

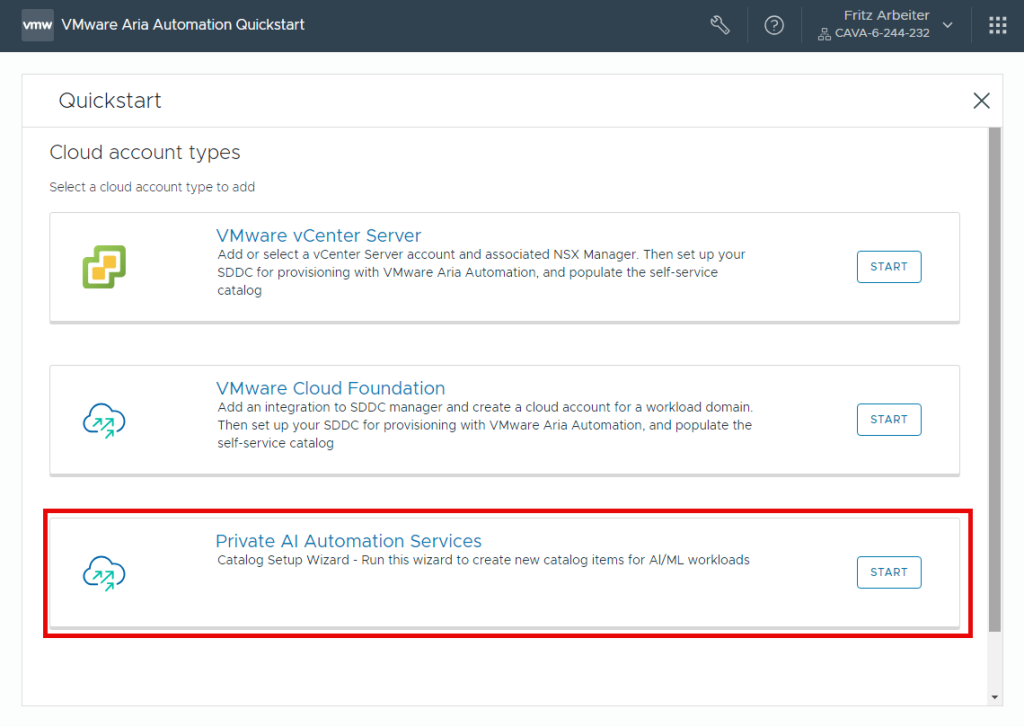

Private AI Automation Services Quickstart

Before starting the Catalog Setup Wizard, you need to make sure you have the following:

- VCF 5.1.1, which includes vCenter Server 8.0U2b.

- Single Sign-On (SSO) configured for the CCI Supervisor (see the documentation).

- VMware Aria Automation 8.16.2 or higher.

- A vCenter Server cloud account configured in Aria Automation.

- A GPU-enabled Supervisor Cluster configured through vSphere Workload Management.

- A vSphere content library subscribed to https://packages.vmware.com/dl-vm/lib.json.

- An NVIDIA NGC Enterprise org with a premium cloud service subscription.
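The version-numbered prerequisites lend themselves to a quick pre-flight check before launching the wizard. The following Python sketch compares a hand-maintained inventory against the minimum versions listed above. The inventory dictionary and the missing_prereqs helper are hypothetical, treating VCF 5.1.1 as a minimum rather than an exact release is our assumption, and vCenter Server 8.0U2b is left out because its version string is not purely numeric. The remaining prerequisites (SSO, cloud account, Supervisor, content library, NGC subscription) still have to be verified in the product UIs.

```python
from __future__ import annotations

# Minimum versions taken from the prerequisite list. Treating VCF 5.1.1 as a
# floor (rather than an exact match) is an assumption of this sketch.
REQUIRED = {
    "VMware Cloud Foundation": "5.1.1",
    "VMware Aria Automation": "8.16.2",
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version such as '8.16.2' into a comparable tuple (8, 16, 2)."""
    return tuple(int(part) for part in text.split("."))


def missing_prereqs(installed: dict[str, str]) -> list[str]:
    """List products that are absent or older than the required minimum."""
    problems = []
    for product, minimum in REQUIRED.items():
        found = installed.get(product)
        if found is None:
            problems.append(f"{product}: not found")
        elif parse_version(found) < parse_version(minimum):
            problems.append(f"{product}: {found} < {minimum}")
    return problems


# Hypothetical inventory, filled in by hand from your own environment.
inventory = {"VMware Cloud Foundation": "5.1.1", "VMware Aria Automation": "8.14.0"}
print(missing_prereqs(inventory))  # ['VMware Aria Automation: 8.14.0 < 8.16.2']
```

Comparing tuples of integers rather than raw strings avoids the classic mistake of treating "8.9" as newer than "8.16".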

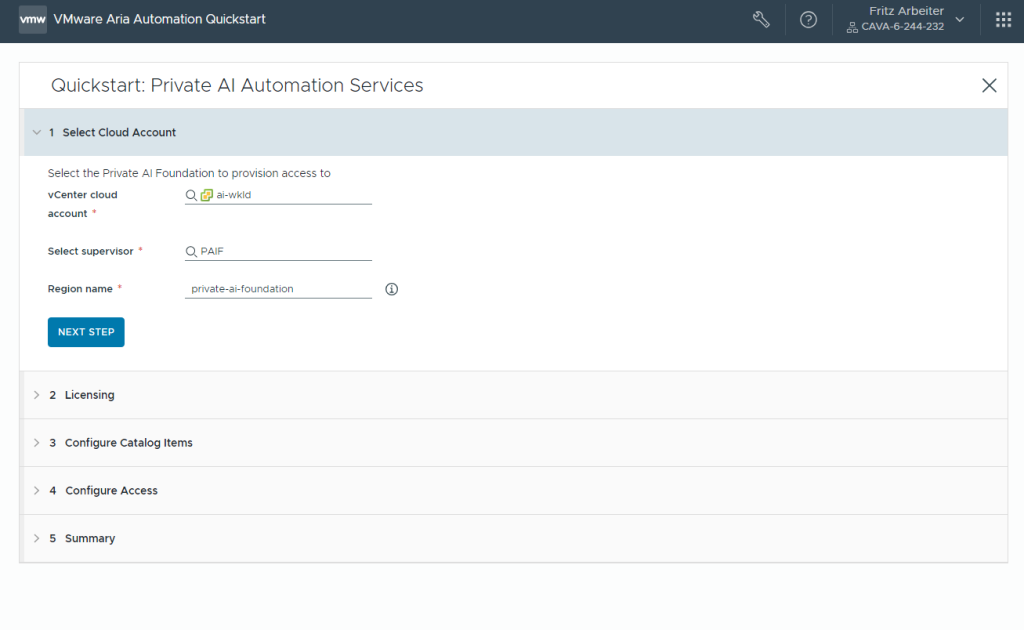

Step 1: Select Cloud Account

We start by selecting the vCenter Server cloud account and the GPU-enabled Supervisor Cluster, and by entering a Region name if a Region does not already exist.

An administrator creates a Region to group one or more Supervisors. Each enterprise determines which Regions make sense for its environment. VMware by Broadcom recommends grouping similarly configured Supervisors (for example, GPU-enabled Supervisors) in the same Region, so that a new Supervisor namespace can be placed on any of the Region’s Supervisors.
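To show why homogeneous Regions matter, the following Python sketch models a Region as a list of Supervisors and picks one for a new GPU namespace. The Supervisor type, the free_gpus field, and the "most free GPUs wins" heuristic are assumptions for illustration; they are not how VMware Cloud Foundation actually places namespaces.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Supervisor:
    """Hypothetical summary of one Supervisor in a Region (illustrative only)."""
    name: str
    gpu_enabled: bool
    free_gpus: int


def is_homogeneous(region: list[Supervisor]) -> bool:
    """True if every Supervisor in the Region is GPU-enabled, or none is."""
    return len({s.gpu_enabled for s in region}) <= 1


def place_namespace(region: list[Supervisor], gpus_needed: int) -> Optional[Supervisor]:
    """Pick a Supervisor for a new GPU namespace, or None if none has room."""
    candidates = [s for s in region if s.gpu_enabled and s.free_gpus >= gpus_needed]
    if not candidates:
        return None
    # Assumed heuristic: prefer the Supervisor with the most free GPUs.
    return max(candidates, key=lambda s: s.free_gpus)


region = [Supervisor("sup-a", True, 2), Supervisor("sup-b", True, 4)]
print(place_namespace(region, gpus_needed=3).name)  # sup-b
```

is_homogeneous flags a Region that mixes GPU-enabled and non-GPU Supervisors; in such a Region only some Supervisors are valid targets for a GPU namespace, which is the situation the recommendation above avoids.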

Step 2: Licensing

Next, provide the details of the NVIDIA license associated with your NVIDIA NGC Enterprise org and its premium cloud service subscription.

In this example, we use a Cloud License Service (CLS) instance hosted on the NVIDIA Licensing Portal and provide both the Client Configuration Token and the Licensing Portal API key. NVIDIA customers can instead use a Delegated License Service (DLS) instance hosted on-premises, at a location reachable from the private network inside their data center.
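The two licensing options need slightly different inputs. The Python sketch below validates a hypothetical set of wizard inputs before they are submitted. LicenseSettings and its field names are invented for illustration, and the assumption that a DLS setup needs only the client configuration token (no portal API key) is ours, not taken from the wizard.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LicenseSettings:
    """Hypothetical licensing inputs (field names are illustrative only)."""
    service: str                          # "CLS" (NVIDIA-hosted) or "DLS" (on-premises)
    client_config_token: str              # token generated by the license service
    portal_api_key: Optional[str] = None  # NVIDIA Licensing Portal API key


def validate(settings: LicenseSettings) -> List[str]:
    """Return a list of problems; an empty list means the inputs look complete."""
    errors = []
    if settings.service not in ("CLS", "DLS"):
        errors.append(f"unknown license service: {settings.service}")
    if not settings.client_config_token.strip():
        errors.append("client configuration token is required")
    # Per the walkthrough, CLS needs the portal API key as well as the token.
    if settings.service == "CLS" and not settings.portal_api_key:
        errors.append("CLS requires a Licensing Portal API key")
    return errors


print(validate(LicenseSettings("CLS", "token-contents")))
# ['CLS requires a Licensing Portal API key']
```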

Step 3: Configure Catalog Items

Here, we provide all the details required by the catalog items (AI Workstation and AI Kubernetes Cluster) that the workflow creates.

First, we select the VM image that VMware by Broadcom supplies through the vCenter content library subscription URL: https://packages.vmware.com/dl-vm/lib.json.

This image is Ubuntu 22.04 prepared with the NVIDIA vGPU driver, Docker Engine, and the NVIDIA Container Toolkit.

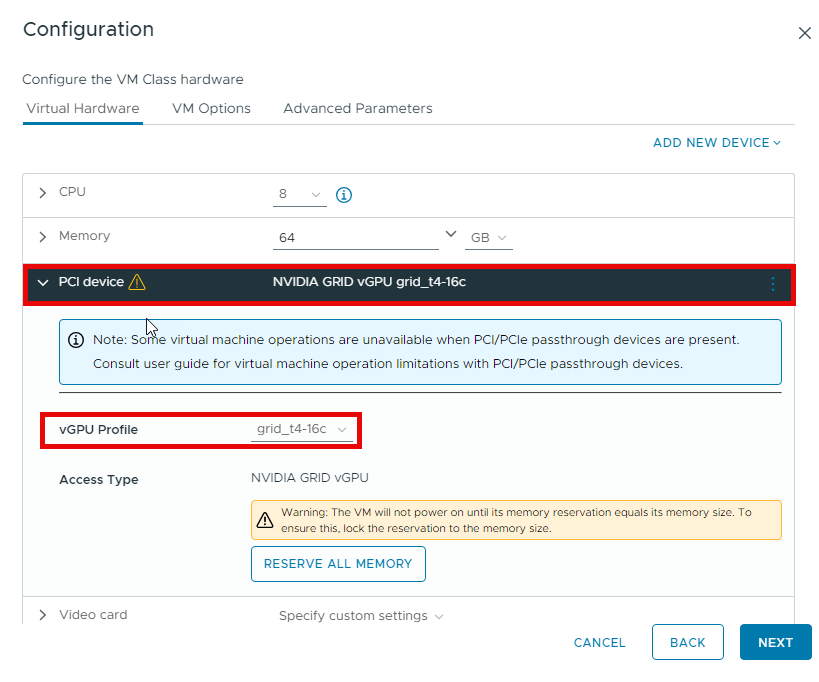

Next, we select at least one GPU-capable and one non-GPU-capable VM class to make available to your users.

A VM class is a template that defines the CPU, memory, and resource reservations for VMs. Administrators can use the default VM classes available out of the box or create custom VM classes for their needs, such as the GPU-capable class selected here (a100-40gb), which is configured to use an NVIDIA PCI device and vGPU profile.

The workflow makes these VM classes available through a Supervisor namespace class that it creates and assigns to a project; every AI Workstation or AI Kubernetes Cluster deployment then gets its own Supervisor namespace, provisioned from that class.

The AI Workstation and AI Kubernetes Cluster Worker nodes use the GPU-capable VM class, whereas the AI Kubernetes Cluster Control plane nodes use the non-GPU-capable VM class.
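The role-to-class mapping described above can be summarized in a small Python sketch. The VMClass type, the class_for_role helper, and the vCPU, memory, and vGPU profile values are hypothetical; only the a100-40gb class name comes from this walkthrough, and best-effort-medium stands in for whichever non-GPU class you select.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VMClass:
    """Hypothetical view of a VM class: CPU, memory, and an optional vGPU profile."""
    name: str
    vcpus: int
    memory_gib: int
    vgpu_profile: Optional[str] = None  # set only for GPU-capable classes

    @property
    def gpu_capable(self) -> bool:
        return self.vgpu_profile is not None


def class_for_role(role: str, gpu_class: VMClass, cpu_class: VMClass) -> VMClass:
    """Return the VM class used by a node role, following the split described above."""
    if not gpu_class.gpu_capable or cpu_class.gpu_capable:
        raise ValueError("need one GPU-capable and one non-GPU-capable VM class")
    if role in ("workstation", "worker"):
        return gpu_class   # AI Workstation VMs and Kubernetes worker nodes get GPUs
    if role == "control-plane":
        return cpu_class   # control plane nodes run without a GPU
    raise ValueError(f"unknown role: {role}")


# Illustrative sizes and vGPU profile name; use the values of your own classes.
a100 = VMClass("a100-40gb", vcpus=16, memory_gib=64, vgpu_profile="grid_a100-40c")
medium = VMClass("best-effort-medium", vcpus=2, memory_gib=8)

for role in ("workstation", "worker", "control-plane"):
    print(role, "->", class_for_role(role, a100, medium).name)
```

Keeping GPUs off the control plane reserves scarce vGPU capacity for the nodes that actually run AI/ML workloads.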

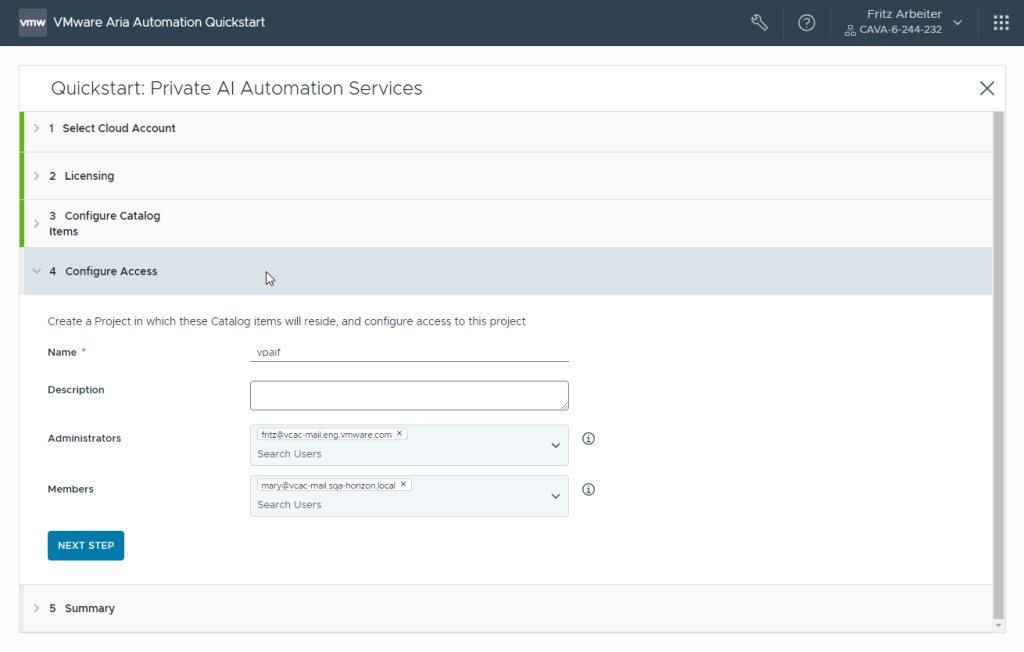

Step 4: Configure Access

Projects organize and govern what your users can do and determine which Cloud Zones or K8S Zones they can deploy cloud templates to in your cloud infrastructure.

Cloud administrators set up projects to which they can add users and Cloud Zones or K8S Zones. Anyone who creates and deploys cloud templates must be a member of at least one project.

This step allows us to create a project where these Catalog Items will reside and configure user access. The project will also have access to the Region and the Supervisor Namespace Class created by the workflow.
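The membership rule above can be modeled in a few lines of Python. This is a hypothetical sketch, not Aria Automation's data model: the Project type, the deployable_zones and can_deploy helpers, and the project, user, and zone names are all invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical project: its members and the zones it may deploy to."""
    name: str
    members: set[str] = field(default_factory=set)
    zones: set[str] = field(default_factory=set)  # Cloud Zones or K8S Zones


def deployable_zones(user: str, projects: list[Project]) -> set[str]:
    """Zones a user can target: the union of zones from every project they belong to."""
    zones: set[str] = set()
    for project in projects:
        if user in project.members:
            zones |= project.zones
    return zones


def can_deploy(user: str, zone: str, projects: list[Project]) -> bool:
    """A user can deploy only through a project they are a member of."""
    return zone in deployable_zones(user, projects)


projects = [Project("private-ai", members={"alice", "bob"}, zones={"gpu-region-k8s"})]
print(can_deploy("alice", "gpu-region-k8s", projects))  # True
print(can_deploy("carol", "gpu-region-k8s", projects))  # False: not in any project
```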

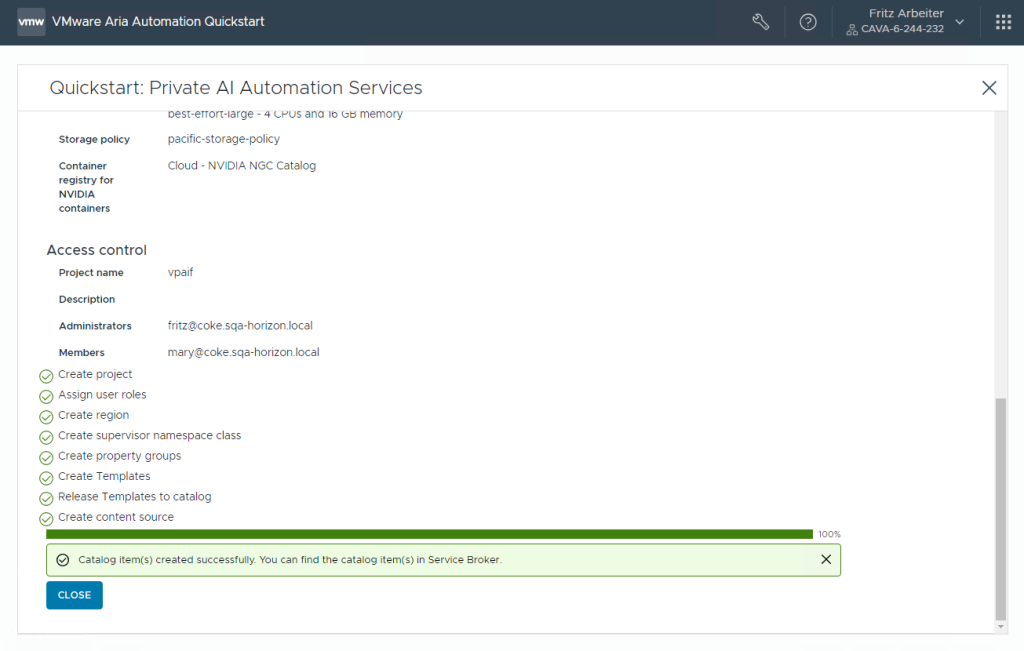

Step 5: Summary

Finally, we review the information provided and click Run QuickStart to finish the workflow.

Once the workflow is completed, we can see all the tasks the workflow executed:

- Create Project

- Assign User Roles

- Create Region

- Create Supervisor namespace class

- Create Property Groups

- Create Templates

- Release Templates to Catalog

- Create Content Source
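The wizard runs these tasks for you. To make their order explicit, here is a Python sketch that walks the same task list and records each result. The execute callback and the stop-on-first-failure behavior are assumptions for illustration; the wizard's actual error handling is not described here.

```python
from typing import Callable, List, Tuple

# Task names as listed by the completed QuickStart workflow.
STEPS = [
    "Create Project",
    "Assign User Roles",
    "Create Region",
    "Create Supervisor namespace class",
    "Create Property Groups",
    "Create Templates",
    "Release Templates to Catalog",
    "Create Content Source",
]


def run_quickstart(execute: Callable[[str], bool]) -> List[Tuple[str, str]]:
    """Run each step in order; after the first failure, mark the rest as skipped."""
    log = []
    failed = False
    for step in STEPS:
        if failed:
            log.append((step, "skipped"))
            continue
        ok = execute(step)  # hypothetical callback that performs one task
        log.append((step, "completed" if ok else "failed"))
        failed = not ok
    return log


# Hypothetical executor that pretends every task succeeds.
for step, status in run_quickstart(lambda step: True):
    print(f"{status:>9}  {step}")
```

Stopping at the first failure reflects the obvious dependencies in the list, for example templates cannot be released to the catalog before they exist.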

If we switch to Aria Automation Service Broker, we can see that our AI Workstation and AI Kubernetes Cluster catalog items have been added to our project and are available for all project users to request.

- The AI Workstation catalog item provisions a workstation VM with preconfigured GPU capabilities and lets users select customizations and software bundles to pre-install during deployment, such as:

- PyTorch: The PyTorch NGC Container is optimized for GPU acceleration and contains a validated set of libraries that enable and optimize GPU performance, plus software for accelerating ETL (DALI, RAPIDS), training (cuDNN, NCCL), and inference (TensorRT) workloads.

- TensorFlow: The TensorFlow NGC Container is optimized for GPU acceleration and contains a validated set of libraries that enable and optimize GPU performance. It may also include modifications to the TensorFlow source code that maximize performance and compatibility, as well as software for accelerating ETL (DALI, RAPIDS), training (cuDNN, NCCL), and inference (TensorRT) workloads.

- DCGM Exporter: DCGM-Exporter is a Prometheus exporter that monitors GPU health and collects GPU metrics. It uses DCGM through Go bindings to collect GPU telemetry and exposes the metrics to Prometheus on an HTTP endpoint (/metrics). DCGM-Exporter can run standalone or be deployed as part of the NVIDIA GPU Operator.

- Triton Inference Server: Triton Inference Server provides a cloud and edge inferencing solution optimized for both CPUs and GPUs. It supports HTTP/REST and gRPC protocols that allow remote clients to request inferencing for any model the server manages. For edge deployments, Triton is available as a shared library with a C API that allows its complete functionality to be embedded directly in an application.

- Generative AI Workflow – RAG: This reference solution shows how to get business value from generative AI by adapting an existing foundation LLM to your business use case with retrieval-augmented generation (RAG), which retrieves facts from an enterprise knowledge base containing your company’s business data.

- CUDA Samples: A collection of containers for running CUDA workloads on GPUs. It includes containerized CUDA samples such as vectorAdd (vector addition), nbody (gravitational n-body simulation), and others. These containers can be used to validate the GPU software configuration or to run example workloads.

- The AI Kubernetes Cluster catalog item provisions a VMware Tanzu Kubernetes cluster with customizable, GPU-capable worker nodes to run cloud-native AI/ML workloads. The cluster runs Kubernetes 1.26.5 with Ubuntu nodes.
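Because DCGM-Exporter exposes its metrics in the Prometheus text format, you can inspect them without a full Prometheus server. The minimal Python sketch below parses that format for a single metric. DCGM_FI_DEV_GPU_UTIL and DCGM_FI_DEV_FB_USED are metrics DCGM-Exporter publishes, but the sample text and values here are made up, and the regular expressions handle only simple, well-formed lines. In practice you would fetch the text from the exporter's /metrics endpoint, for example with urllib.request.urlopen.

```python
from __future__ import annotations

import re

# Sample line shape: name{label="value",...} number [timestamp]
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text: str, metric: str) -> list[tuple[dict[str, str], float]]:
    """Return (labels, value) pairs for every sample of `metric` in the text."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        if not m or m.group(1) != metric:
            continue
        labels = dict(LABEL_RE.findall(m.group(2) or ""))
        samples.append((labels, float(m.group(3))))
    return samples


# Made-up exporter output, for illustration only.
SAMPLE = """# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",modelName="NVIDIA A100-PCIE-40GB"} 87
DCGM_FI_DEV_GPU_UTIL{gpu="1",modelName="NVIDIA A100-PCIE-40GB"} 12
DCGM_FI_DEV_FB_USED{gpu="0"} 20480
"""

for labels, value in parse_metrics(SAMPLE, "DCGM_FI_DEV_GPU_UTIL"):
    print(f"GPU {labels['gpu']}: {value:.0f}% utilized")
```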

The Supervisor’s VM and TKG services within a Supervisor namespace set up the Private AI infrastructure through the Cloud Consumption Interface (CCI), which, also with this release, is now available on-premises for VMware Cloud Foundation customers.

Cloud Consumption Interface (CCI) powered by VMware Aria Automation enables enterprises to develop, deploy, and manage Modern Applications with increased agility, flexibility, and modern techniques on vSphere while maintaining control of their vSphere infrastructure.

Summary

VMware Cloud Foundation is the core infrastructure platform for VMware Private AI Foundation with NVIDIA, delivering a modern private cloud infrastructure that enables organizations to use artificial intelligence (AI) applications to stay ahead of the curve and drive sustainable growth in today’s rapidly evolving business landscape.

VMware Private AI Foundation with NVIDIA provides a high-performance, secure, cloud-native AI software platform for provisioning AI workloads based on NVIDIA GPU Cloud (NGC) containers for deep learning, machine learning, and high-performance computing (HPC). NGC provides containers, models, model scripts, and industry solutions so data scientists, developers, and researchers can focus on building solutions and gathering insights faster. Meanwhile, IT administrators can ensure resource governance and control through Consumption Policies and Role-based Access Control, allowing project members to use AI infrastructure services efficiently while keeping resource usage optimal and secure.

Ready to get started on your AI and ML journey? Visit us online at VMware Aria Automation for additional resources:

- Announcing the Initial Availability of VMware Private AI Foundation with NVIDIA

- Automation Services for VMware Private AI

- Learn more about VMware Private AI

Connect with us on Twitter at @VMwareVCF and on LinkedIn at VMware VCF.

Discover more from VMware Cloud Foundation (VCF) Blog
