VMware Private AI Foundation with NVIDIA provides a secure, self-service, high-performance platform for deploying AI-based workloads quickly. Key components, such as the Private AI Automation Services Quickstart Wizard in VCF Automation, help Cloud Administrators rapidly set up AI Workstations and AI Kubernetes Clusters, making it simpler for data scientists to deploy AI infrastructure. This integration improves the overall efficiency and governance of AI/ML infrastructure services.

This blog post highlights the new Private AI Automation Services enhancements introduced in VMware Cloud Foundation Automation 8.18.1 as part of the latest VMware Cloud Foundation 5.2.1 platform.

Configure Catalog Items

In this release, within the Quickstart -> Configure Catalog Items section, the Assembler Admin can select the organization's approved Kubernetes runtime version. This version is then configured in the generated AI Kubernetes VCF Automation Templates.

These templates are then version-controlled and released to the Service Broker as catalog items to deploy AI Kubernetes Clusters when requested by developers and data scientists.

Note that the list of available Kubernetes release versions depends on the vCenter and Supervisor versions in use in the environment that the Private AI Automation Services Quickstart Wizard is run against.

We have also added information that gives the Assembler Admin insight into the minimum GPU configuration required for the LLM in use (Llama-2-13b-chat), how the GPU-capable VM class should be configured in vCenter, and when GPU-capable VM classes with Unified Virtual Memory (UVM) support are required, as illustrated in the screenshot below.

We have also added a column showing whether Unified Virtual Memory (UVM) support is enabled for each of the discovered Supervisor GPU-capable VM classes.
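To give a rough sense of why an LLM such as Llama-2-13b-chat needs a substantial GPU, the sketch below makes a back-of-envelope estimate of the memory needed just to hold the model weights. It is a hypothetical illustration, not the wizard's sizing logic: the bytes-per-parameter table and the function name `weight_memory_gb` are assumptions for this example, and the estimate deliberately ignores the KV cache, activations, and runtime overhead, so real deployments need extra headroom.

```python
# Back-of-envelope estimate of GPU memory needed just to hold model weights.
# Assumption: weights only. KV cache, activations, and CUDA/runtime overhead
# are NOT included, so actual GPU requirements are higher than this figure.

BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,
    "bf16": 2,
    "int8": 1,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the approximate size of the model weights in decimal gigabytes."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    llama2_13b = 13e9  # nominal parameter count of Llama-2-13b-chat
    for dtype in ("fp16", "int8", "int4"):
        print(f"{dtype}: ~{weight_memory_gb(llama2_13b, dtype):.1f} GB of weights")
```

At 16-bit precision this works out to roughly 26 GB for the weights alone, which is why the GPU-capable VM class backing the workload has to provide enough GPU memory on top of that baseline.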

DSM Support in Quickstart

VMware Data Services Manager offers modern database and data services management for vSphere. This solution provides a data-as-a-service toolkit for on-demand provisioning and automated management of PostgreSQL and MySQL databases in a vSphere environment.

The highlight of this release is Data Services Manager (DSM) integration. The Assembler Admin can enable it for specific projects as needed, which creates three additional AI RAG catalog items with DSM support within Private AI Automation Services. Data scientists can request these items to provision AI RAG (retrieval-augmented generation) workloads backed by DSM databases, in particular PostgreSQL with the pgvector extension, which lets you store vector embeddings in PostgreSQL and search them for similar vectors, making the database suitable for search-based AI and ML applications.
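To make "search for similar vector embeddings" concrete, here is a pure-Python sketch of the ranking that pgvector performs inside PostgreSQL. It is a conceptual illustration only: pgvector computes distances in the database (its `<=>` operator returns cosine distance), whereas the functions `cosine_distance` and `top_k` and the toy three-dimensional document vectors below are hypothetical and exist just for this example.

```python
import math

# Conceptual illustration only: pgvector does this kind of ranking inside
# PostgreSQL (for example, via its `<=>` cosine-distance operator). This
# pure-Python version just shows what "nearest embeddings" means.


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Return 1 - cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k stored embeddings closest to the query."""
    ranked = sorted(docs, key=lambda doc_id: cosine_distance(query, docs[doc_id]))
    return ranked[:k]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real embedding models use far more dimensions.
    documents = {
        "gpu-sizing-guide": [0.9, 0.1, 0.0],
        "vm-class-howto": [0.7, 0.3, 0.1],
        "holiday-policy": [0.0, 0.2, 0.9],
    }
    question = [0.8, 0.2, 0.0]
    print(top_k(question, documents))
```

In a RAG workload, the closest documents returned by a query like this are what gets passed to the LLM as context; pgvector simply lets that lookup happen in the same PostgreSQL database that stores the embeddings.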

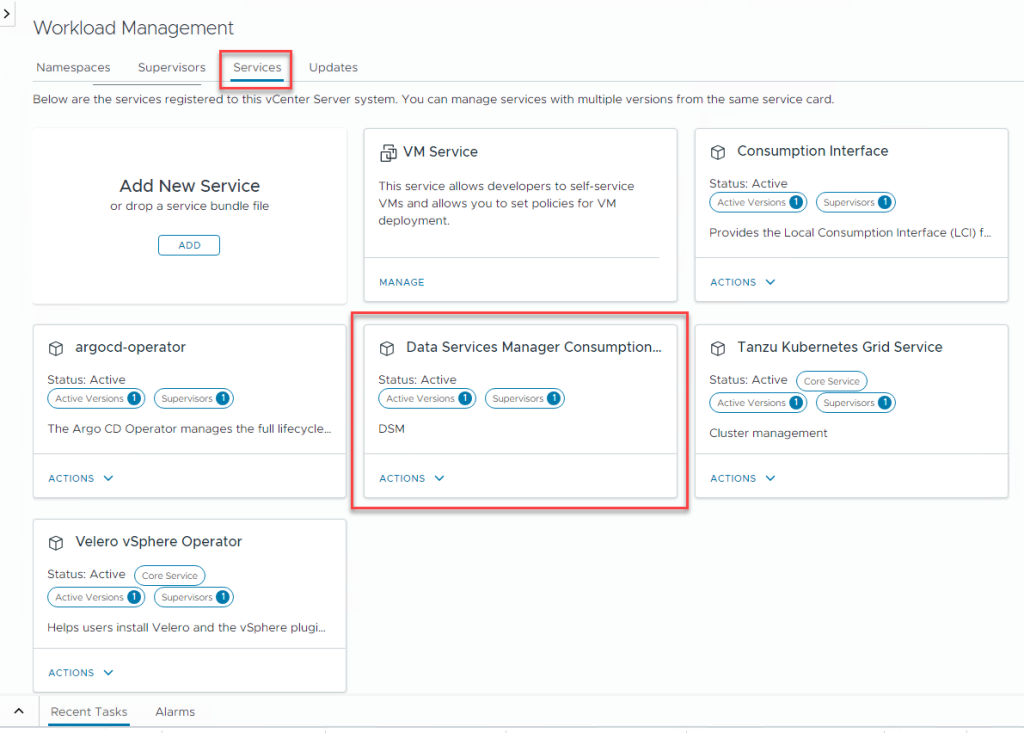

The Configure Data Services Manager step appears for the Cloud Admin in the Private AI Automation Services Quickstart only if the following requirements are met:

- The DSM Consumption Operator Supervisor Service is installed and activated on the selected Supervisor. Once configured, the operator can communicate with the DSM appliance to provision a database whenever the Cloud Consumption Interface backend service in VCF Automation requests one through the new AI RAG catalog items with DSM support.

- Data Services Manager (DSM) is deployed and configured with at least one infrastructure policy.
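The two prerequisites above combine as a simple AND condition. The sketch below models that check under stated assumptions: `SupervisorState`, `DsmState`, `show_configure_dsm_step`, and the policy name used in the example are hypothetical names invented for illustration, not VCF Automation or DSM APIs.

```python
from dataclasses import dataclass, field

# Hypothetical model of the two documented prerequisites. These class and
# field names are illustrative only; they are not VCF Automation or DSM APIs.


@dataclass
class SupervisorState:
    dsm_operator_installed: bool
    dsm_operator_active: bool


@dataclass
class DsmState:
    deployed: bool
    infrastructure_policies: list[str] = field(default_factory=list)


def show_configure_dsm_step(supervisor: SupervisorState, dsm: DsmState) -> bool:
    """Return True only when both documented requirements are satisfied."""
    # Requirement 1: DSM Consumption Operator installed AND activated.
    operator_ready = supervisor.dsm_operator_installed and supervisor.dsm_operator_active
    # Requirement 2: DSM deployed with at least one infrastructure policy.
    dsm_ready = dsm.deployed and len(dsm.infrastructure_policies) >= 1
    return operator_ready and dsm_ready


if __name__ == "__main__":
    sup = SupervisorState(dsm_operator_installed=True, dsm_operator_active=True)
    print(show_configure_dsm_step(sup, DsmState(deployed=True)))  # False: no policy yet
    print(show_configure_dsm_step(sup, DsmState(deployed=True, infrastructure_policies=["example-policy"])))  # True
```

If the step does not appear in the Quickstart, checking each of these conditions in turn is a reasonable way to narrow down which prerequisite is missing.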

DSM Support in Catalog

The three new AI RAG catalog items with DSM support, as illustrated in the screenshot below, are:

- DSM Database (Standalone PostgreSQL database component)

- AI RAG Workstation with DSM

- AI Kubernetes RAG Cluster with DSM

In the request forms for the AI RAG Workstation with DSM and AI Kubernetes RAG Cluster with DSM catalog items, data scientists can either provision a New Database for the deployment or choose an Existing Database. For an existing database, you supply a connection string that includes the database credentials and the FQDN/IP address of the existing, shared database instance that the new AI RAG deployment should connect to.
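The exact connection string format the request form expects is not described here, so the sketch below assumes a standard PostgreSQL URI of the form `postgresql://user:password@host:port/dbname` and shows how such a string breaks down into the credentials and FQDN/IP mentioned above. The function `describe_connection_string` and the host, user, and database names are hypothetical.

```python
from urllib.parse import urlsplit, unquote

# Minimal sketch, assuming the existing database is reached with a standard
# PostgreSQL URI: postgresql://user:password@host:port/dbname.
# The host, user, and database names in the example are hypothetical.


def describe_connection_string(conn_str: str) -> dict:
    """Split a PostgreSQL URI into the parts a RAG deployment needs to connect."""
    parts = urlsplit(conn_str)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unexpected scheme: {parts.scheme!r}")
    if not parts.hostname:
        raise ValueError("connection string must include a host (FQDN or IP)")
    return {
        # urlsplit does not percent-decode credentials, so decode the user here.
        "user": unquote(parts.username or ""),
        # Report only whether a password is present; never echo it back.
        "password_set": bool(parts.password),
        "host": parts.hostname,
        "port": parts.port or 5432,  # PostgreSQL's default port
        "database": parts.path.lstrip("/"),
    }


if __name__ == "__main__":
    example = "postgresql://rag_user:s3cret@pg-shared.example.com:5432/ragdb"
    print(describe_connection_string(example))
```

Because the connection string carries credentials, it is worth treating it as a secret wherever it is stored or logged, which is why the sketch reports only whether a password is set.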

DCGM Export & JupyterLab Authentication

Last but not least, based on customer feedback, we are introducing the option to expose DCGM metrics through a LoadBalancer for all four AI Workstation catalog items that data scientists can request and deploy.

DCGM-Exporter is a tool built on the Go APIs for NVIDIA DCGM (Data Center GPU Manager) that lets users gather GPU metrics, understand workload behavior, and monitor GPUs in clusters. It exposes GPU metrics at an HTTP endpoint (/metrics) for monitoring solutions such as Prometheus.
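Once the metrics are exposed through a LoadBalancer, any HTTP client can read them. The sketch below is a simplified reader for the Prometheus text format, assuming the exporter is reachable at an address you supply (dcgm-exporter commonly listens on port 9400) and that GPU utilization is published as `DCGM_FI_DEV_GPU_UTIL`. The helper names and the sample text are made up for illustration, and in practice a Prometheus server would normally do this scraping for you.

```python
from urllib.request import urlopen

# Minimal sketch: read samples of one metric from a DCGM-Exporter /metrics page.
# Assumptions: the exporter address is supplied by you (for example the
# LoadBalancer IP, commonly on port 9400), and the metric name is
# DCGM_FI_DEV_GPU_UTIL. Parsing is a simplified subset of the Prometheus format.


def fetch_metrics(base_url: str, timeout: float = 5.0) -> str:
    """Download the exporter's /metrics page as text."""
    with urlopen(f"{base_url.rstrip('/')}/metrics", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_metric(text: str, name: str) -> list[tuple[str, float]]:
    """Return (labels, value) pairs for every sample of `name` in the text."""
    samples = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        if line.startswith(name + "{"):
            end = line.rindex("}")
            labels, rest = line[len(name):end + 1], line[end + 1:]
        elif line.startswith(name + " "):
            labels, rest = "", line[len(name):]
        else:
            continue  # a different metric (including names that share a prefix)
        value = rest.split()[0]  # ignore an optional trailing timestamp
        samples.append((labels, float(value)))
    return samples


if __name__ == "__main__":
    # Made-up sample output; in a real deployment you might instead call
    # fetch_metrics("http://<loadbalancer-ip>:9400").
    sample = (
        "# TYPE DCGM_FI_DEV_GPU_UTIL gauge\n"
        'DCGM_FI_DEV_GPU_UTIL{gpu="0",modelName="NVIDIA A100"} 87\n'
        'DCGM_FI_DEV_GPU_UTIL{gpu="1",modelName="NVIDIA A100"} 12\n'
    )
    for labels, value in parse_metric(sample, "DCGM_FI_DEV_GPU_UTIL"):
        print(labels, value)
```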

We are also introducing the ability to enable JupyterLab authentication for the PyTorch and TensorFlow NGC software bundles when they are selected in the AI Workstation catalog item, improving security.

Both capabilities are available within the request form as simple check-box options, as illustrated in the screenshot below.

Summary

VMware Private AI Foundation with NVIDIA continues to improve with each release. It gives our customers a high-performance, secure, cloud-native AI platform for provisioning AI workloads based on NVIDIA GPU Cloud (NGC) containers for deep learning, machine learning, and high-performance computing (HPC). Running on VMware Cloud Foundation, which delivers modern private cloud infrastructure, it enables organizations to use generative AI applications to stay ahead of the curve and drive sustainable growth in today's rapidly evolving business landscape.

Ready to get started on your AI and ML journey? Visit us online at VMware Aria Automation for additional resources:

- Read the VMware Private AI Foundation with NVIDIA solution brief.

- Learn more about VMware Private AI.

Connect with us on Twitter at @VMwareVCF and on LinkedIn at VMware VCF

Discover more from VMware Cloud Foundation (VCF) Blog
