In a previous blog, we discussed the March 2024 release of VMware Aria Automation 8.16.2, highlighting the integration of Private AI Automation Services with NVIDIA’s GPU Cloud (NGC). The new features include the VMware Private AI Foundation with NVIDIA, which provides a secure, self-service, high-performance platform for deploying AI workloads. Key components like the Catalog Setup Wizard help Cloud Administrators quickly set up AI Workstations and AI Kubernetes Clusters, simplifying the deployment of AI infrastructure. This integration enhances the efficiency and governance of AI/ML infrastructure services.

This blog will highlight the new Private AI Automation Services enhancements introduced in VMware Aria Automation 8.18.0 within the latest VMware Cloud Foundation 5.2 platform.

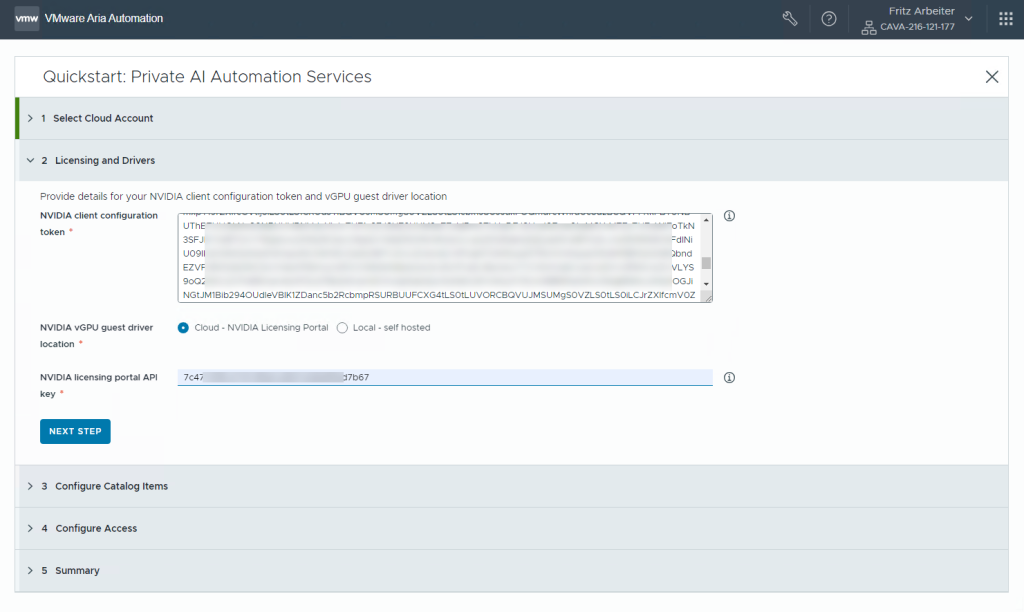

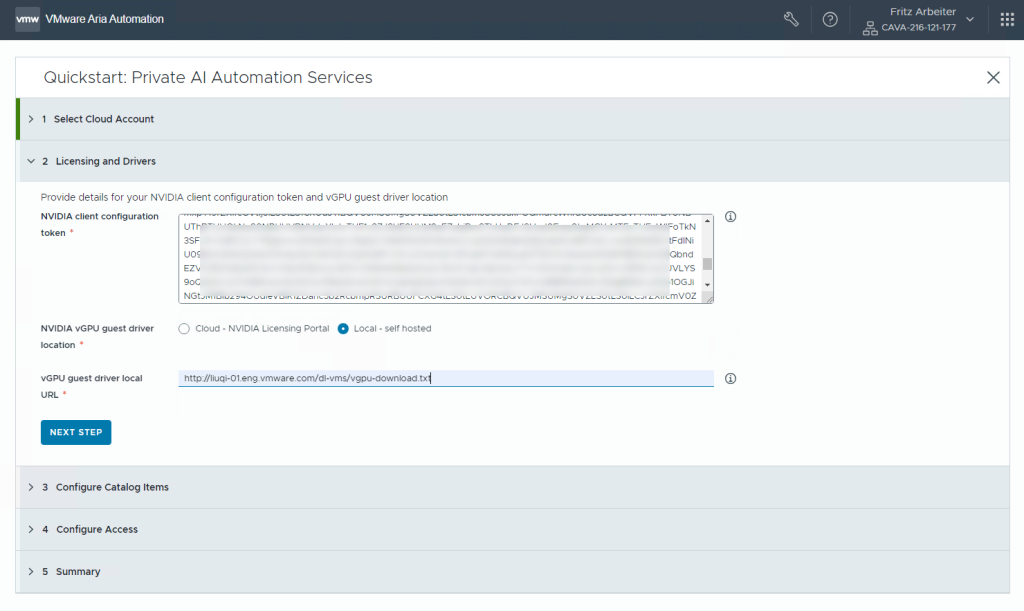

Licensing and Drivers

This section has been simplified. The Cloud Administrator now provides only two pieces of information:

- The NVIDIA client configuration token. The token is ultimately passed to the provisioned AI Workstation or the AI Kubernetes Cluster to enable the full capabilities of the vGPU driver.

- The NVIDIA vGPU driver location, where the Cloud Administrator can select between:

  - Cloud – NVIDIA Licensing Portal, where you must pass a licensing portal API key (Figure 1)

  - Local – Self-Hosted, where you must pass the vGPU guest driver local URL (Figure 2)
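
As a rough illustration of the two inputs described above, the sketch below models them as a small Python data class with a consistency check. It is not the Aria Automation API: the class name, field names, and the example URL are all hypothetical and exist only to show which value goes with which driver location.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse


@dataclass
class LicensingAndDrivers:
    """Hypothetical model of the Licensing and Drivers inputs (not a product API)."""

    client_config_token: str
    driver_location: str  # "cloud" or "local"
    portal_api_key: Optional[str] = None  # expected when driver_location == "cloud"
    driver_url: Optional[str] = None  # expected when driver_location == "local"

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the inputs are consistent."""
        problems = []
        if not self.client_config_token.strip():
            problems.append("client configuration token is required")
        if self.driver_location == "cloud":
            if not self.portal_api_key:
                problems.append("cloud location requires a licensing portal API key")
        elif self.driver_location == "local":
            parsed = urlparse(self.driver_url or "")
            if parsed.scheme not in ("http", "https") or not parsed.netloc:
                problems.append("local location requires an http(s) driver URL")
        else:
            problems.append("driver location must be 'cloud' or 'local'")
        return problems
```

For example, `LicensingAndDrivers("token", "local", driver_url="https://drivers.example.internal/vgpu/").validate()` returns an empty list, while choosing `"cloud"` without an API key reports the missing key.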

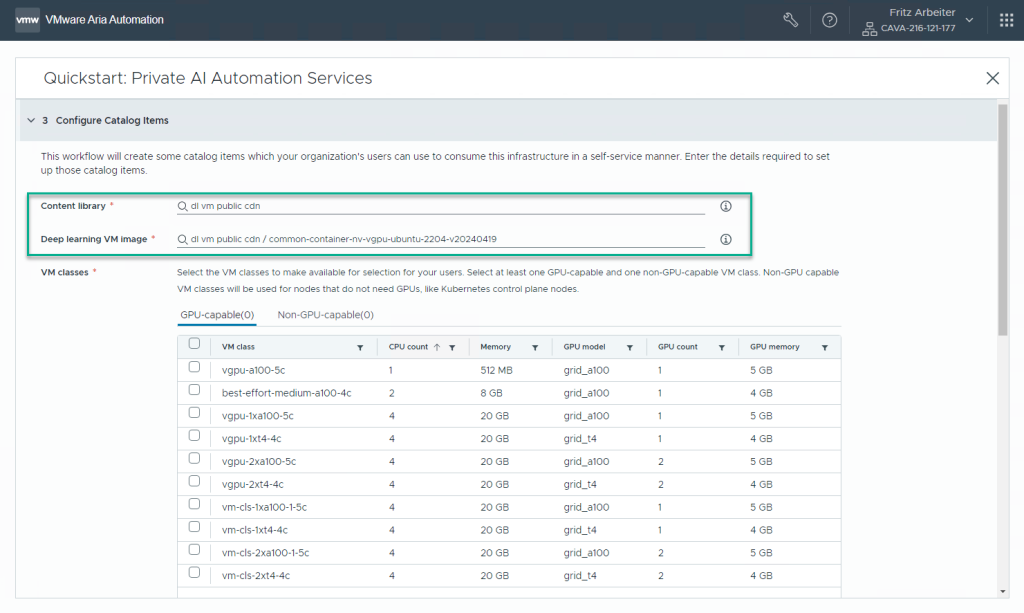

Configure Catalog Items

In this section of the quickstart workflow, you can now target a specific content library, which makes the Deep Learning Virtual Machine (DLVM) image quicker to locate in two ways:

- Results are limited to the content of the selected library.

- Any Kubernetes images, that is, Tanzu Kubernetes Releases (TKR), found in the targeted content library are automatically filtered out.
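
The filtering behavior described above can be pictured with a short sketch. This is only an illustration of the logic, not how the wizard is implemented: the `LibraryItem` type, its `is_kubernetes_release` flag, and the item names in the usage example are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LibraryItem:
    """Hypothetical content library entry."""

    library: str
    name: str
    is_kubernetes_release: bool  # assumed flag; True for TKR images


def candidate_dlvm_images(items, target_library):
    """Keep items from the targeted library only, dropping Kubernetes releases."""
    return [
        item
        for item in items
        if item.library == target_library and not item.is_kubernetes_release
    ]


items = [
    LibraryItem("ai-library", "dlvm-image", False),
    LibraryItem("ai-library", "tkr-image", True),
    LibraryItem("other-library", "some-template", False),
]
print([item.name for item in candidate_dlvm_images(items, "ai-library")])
```

With the sample data, only the `dlvm-image` entry remains: the TKR image is filtered out and the item from the other library is never listed.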

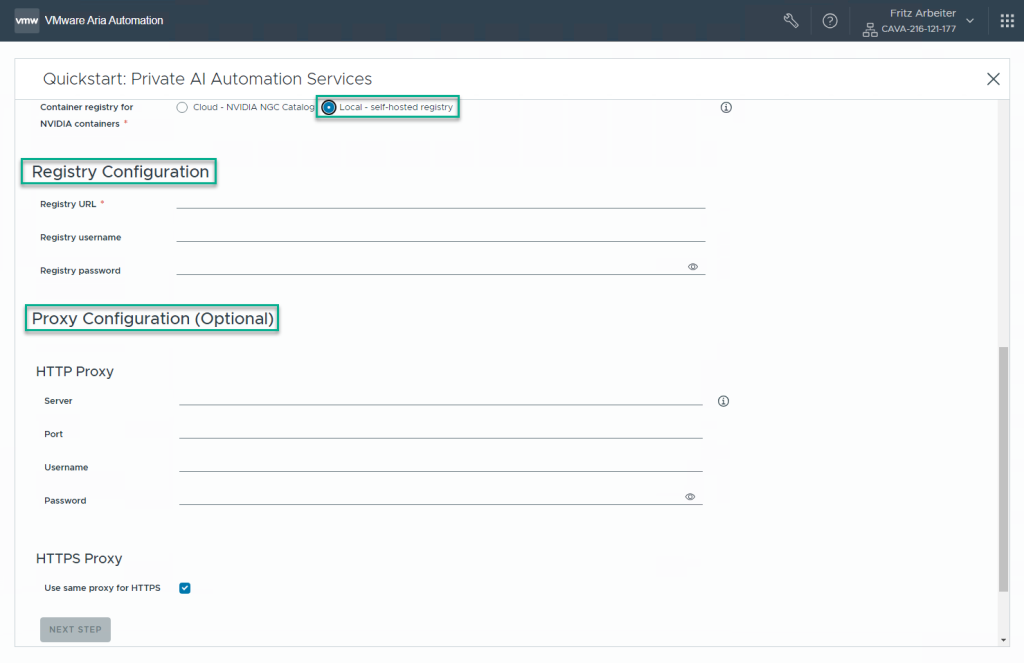

We have also introduced air-gapped environment support for non-RAG AI Workstation catalog items such as PyTorch, TensorFlow, CUDA Samples, and Triton Inferencing Server. Within the quickstart workflow, you can now configure a private registry that points to a self-hosted container registry holding the NVIDIA container images.

Lastly, we are introducing support for HTTP or HTTPS proxy server configuration, which helps customers without direct internet access download the vGPU driver from NVIDIA or pull the non-RAG AI Workstation containers mentioned earlier.

Please note that the RAG AI Workstation and AI Kubernetes Cluster catalog items do not yet support air-gapped environments and will need direct internet access to be deployed successfully.
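
One way to read the connectivity rules in this section is as a simple decision function. The sketch below is an interpretation of the paragraphs above, not product logic: the function name, parameters, and item labels are hypothetical, and it assumes that either a private registry or a proxy is enough for the non-RAG items.

```python
# Non-RAG AI Workstation items that the post lists as supporting air-gapped setups.
AIR_GAP_CAPABLE = frozenset(
    {"PyTorch", "TensorFlow", "CUDA Samples", "Triton Inferencing Server"}
)


def can_deploy(item, has_direct_internet, has_private_registry=False, has_proxy=False):
    """Return True if, under the stated assumptions, the item can be deployed."""
    if has_direct_internet:
        return True
    if item in AIR_GAP_CAPABLE:
        # Assumption: a private registry or a proxy is sufficient for these items.
        return has_private_registry or has_proxy
    # RAG AI Workstation and AI Kubernetes Cluster items need direct internet access.
    return False


print(can_deploy("PyTorch", has_direct_internet=False, has_private_registry=True))
print(can_deploy("AI RAG Workstation", has_direct_internet=False, has_proxy=True))
```

The first call prints `True` and the second prints `False`, mirroring the note that RAG-based items do not yet support air-gapped environments.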

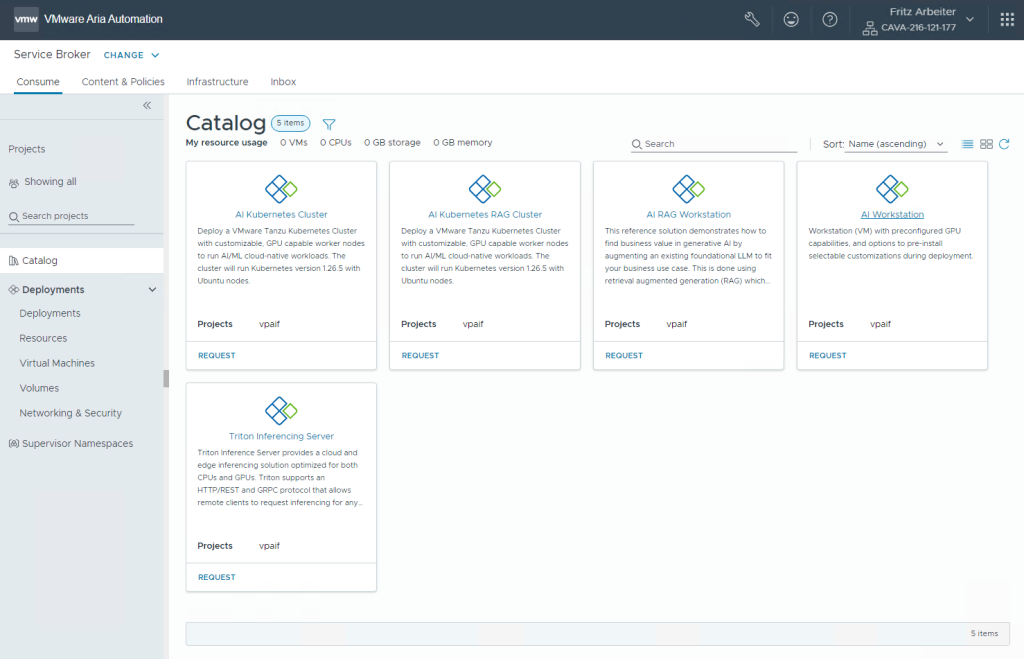

Catalog Items

To improve the usability and maintainability of our Private AI Automation Services catalog items, we have decided to:

- Split the AI Workstation from one into three distinct catalog items:

  - AI Workstation, which can optionally run PyTorch, TensorFlow, CUDA Samples, or none of them.

  - AI RAG Workstation.

  - Triton Inferencing Server.

- Allow all the AI Workstation catalog items to run additional custom cloud-init configurations if needed.

- Add an AI Kubernetes RAG Cluster catalog item to provision a Kubernetes cluster with preinstalled vGPU and RAG Operators, allowing customers to run RAG-based AI applications such as chatbots.

In total, VMware Aria Automation 8.18.0 produces five Private AI Automation Services catalog items, compared to only two previously:

- 3 x AI Workstation catalog items

- 2 x AI Kubernetes Cluster catalog items
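
To make the tally concrete, the sketch below lists the five catalog items named in this post and groups them by family. The dictionary and the family labels are illustrative only; the item names follow the text above, with "AI Kubernetes Cluster" assumed to be the name of the existing cluster item.

```python
from collections import Counter

# Catalog items in 8.18.0 as described in this post, mapped to an illustrative family label.
CATALOG_ITEMS_8_18 = {
    "AI Workstation": "workstation",
    "AI RAG Workstation": "workstation",
    "Triton Inferencing Server": "workstation",
    "AI Kubernetes Cluster": "kubernetes",
    "AI Kubernetes RAG Cluster": "kubernetes",
}


def count_by_family(items):
    """Count catalog items per family label."""
    return Counter(items.values())


counts = count_by_family(CATALOG_ITEMS_8_18)
print(counts["workstation"], counts["kubernetes"], sum(counts.values()))
```

Running it prints `3 2 5`, matching the three AI Workstation items, two AI Kubernetes Cluster items, and five items overall.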

Summary

VMware Cloud Foundation is the core infrastructure platform for VMware Private AI Foundation with NVIDIA, delivering modern private cloud infrastructure software that enables organizations to use Artificial Intelligence (AI) applications to stay ahead of the curve and drive sustainable growth in today’s rapidly evolving business landscape.

VMware Private AI Foundation with NVIDIA provides a high-performance, secure, cloud-native AI software platform for provisioning AI workloads based on NVIDIA GPU Cloud (NGC) containers for deep learning, machine learning, and high-performance computing (HPC). NGC provides containers, models, model scripts, and industry solutions so that data scientists, developers, and researchers can focus on building solutions and gathering insights faster. Meanwhile, IT administrators can ensure resource governance and control through Consumption Policies and Role-based Access Control, allowing project members to use AI infrastructure services efficiently while keeping resource usage optimal and secure.

Ready to get started on your AI and ML journey? Visit us online at VMware Aria Automation for additional resources:

- Announcing the Initial Availability of VMware Private AI Foundation with NVIDIA

- Automation Services for VMware Private AI

- VMware Private AI Foundation with NVIDIA Server Guidance

- Read the VMware Private AI Foundation with NVIDIA solution brief

- Learn more about VMware Private AI

Connect with us on Twitter at @VMwareVCF and on LinkedIn at VMware VCF.
